Responsibility & Safety

How can we build human values into AI?

Iason Gabriel and Kevin McKee

- Copy link ×

Drawing from philosophy to identify fair principles for ethical AI

As artificial intelligence (AI) becomes more powerful and more deeply integrated into our lives, the questions of how it is used and deployed are all the more important. What values guide AI? Whose values are they? And how are they selected?

These questions shed light on the role played by principles – the foundational values that drive decisions big and small in AI. For humans, principles help shape the way we live our lives and our sense of right and wrong. For AI, they shape its approach to a range of decisions involving trade-offs, such as the choice between prioritising productivity or helping those most in need.

In a paper published today in the Proceedings of the National Academy of Sciences , we draw inspiration from philosophy to find ways to better identify principles to guide AI behaviour. Specifically, we explore how a concept known as the “veil of ignorance” – a thought experiment intended to help identify fair principles for group decisions – can be applied to AI.

In our experiments, we found that this approach encouraged people to make decisions based on what they thought was fair, whether or not it benefited them directly. We also discovered that participants were more likely to select an AI that helped those who were most disadvantaged when they reasoned behind the veil of ignorance. These insights could help researchers and policymakers select principles for an AI assistant in a way that is fair to all parties.

The veil of ignorance (right) is a method of finding consensus on a decision when there are diverse opinions in a group (left).

A tool for fairer decision-making

A key goal for AI researchers has been to align AI systems with human values. However, there is no consensus on a single set of human values or preferences to govern AI – we live in a world where people have diverse backgrounds, resources and beliefs. How should we select principles for this technology, given such diverse opinions?

While this challenge emerged for AI over the past decade, the broad question of how to make fair decisions has a long philosophical lineage. In the 1970s, political philosopher John Rawls proposed the concept of the veil of ignorance as a solution to this problem. Rawls argued that when people select principles of justice for a society, they should imagine that they are doing so without knowledge of their own particular position in that society, including, for example, their social status or level of wealth. Without this information, people can’t make decisions in a self-interested way, and should instead choose principles that are fair to everyone involved.

As an example, think about asking a friend to cut the cake at your birthday party. One way of ensuring that the slice sizes are fairly proportioned is not to tell them which slice will be theirs. This approach of withholding information is seemingly simple, but has wide applications across fields from psychology and politics to help people to reflect on their decisions from a less self-interested perspective. It has been used as a method to reach group agreement on contentious issues, ranging from sentencing to taxation.

Building on this foundation, previous DeepMind research proposed that the impartial nature of the veil of ignorance may help promote fairness in the process of aligning AI systems with human values. We designed a series of experiments to test the effects of the veil of ignorance on the principles that people choose to guide an AI system.

Maximise productivity or help the most disadvantaged?

In an online ‘harvesting game’, we asked participants to play a group game with three computer players, where each player’s goal was to gather wood by harvesting trees in separate territories. In each group, some players were lucky, and were assigned to an advantaged position: trees densely populated their field, allowing them to efficiently gather wood. Other group members were disadvantaged: their fields were sparse, requiring more effort to collect trees.

Each group was assisted by a single AI system that could spend time helping individual group members harvest trees. We asked participants to choose between two principles to guide the AI assistant’s behaviour. Under the “maximising principle” the AI assistant would aim to increase the harvest yield of the group by focusing predominantly on the denser fields. While under the “prioritising principle”the AI assistant would focus on helping disadvantaged group members.

An illustration of the ‘harvesting game’ where players (shown in red) either occupy a dense field that is easier to harvest (top two quadrants) or a sparse field that requires more effort to collect trees.

We placed half of the participants behind the veil of ignorance: they faced the choice between different ethical principles without knowing which field would be theirs – so they didn’t know how advantaged or disadvantaged they were. The remaining participants made the choice knowing whether they were better or worse off.

Encouraging fairness in decision making

We found that if participants did not know their position, they consistently preferred the prioritising principle, where the AI assistant helped the disadvantaged group members. This pattern emerged consistently across all five different variations of the game, and crossed social and political boundaries: participants showed this tendency to choose the prioritising principle regardless of their appetite for risk or their political orientation. In contrast, participants who knew their own position were more likely to choose whichever principle benefitted them the most, whether that was the prioritising principle or the maximising principle.

A chart showing the effect of the veil of ignorance on the likelihood of choosing the prioritising principle, where the AI assistant would help those worse off. Participants who did not know their position were much more likely to support this principle to govern AI behaviour.

When we asked participants why they made their choice, those who did not know their position were especially likely to voice concerns about fairness. They frequently explained that it was right for the AI system to focus on helping people who were worse off in the group. In contrast, participants who knew their position much more frequently discussed their choice in terms of personal benefits.

Lastly, after the harvesting game was over, we posed a hypothetical situation to participants: if they were to play the game again, this time knowing that they would be in a different field, would they choose the same principle as they did the first time? We were especially interested in individuals who previously benefited directly from their choice, but who would not benefit from the same choice in a new game.

We found that people who had previously made choices without knowing their position were more likely to continue to endorse their principle – even when they knew it would no longer favour them in their new field. This provides additional evidence that the veil of ignorance encourages fairness in participants’ decision making, leading them to principles that they were willing to stand by even when they no longer benefitted from them directly.

Fairer principles for AI

AI technology is already having a profound effect on our lives. The principles that govern AI shape its impact and how these potential benefits will be distributed.

Our research looked at a case where the effects of different principles were relatively clear. This will not always be the case: AI is deployed across a range of domains which often rely upon a large number of rules to guide them , potentially with complex side effects. Nonetheless, the veil of ignorance can still potentially inform principle selection, helping to ensure that the rules we choose are fair to all parties.

To ensure we build AI systems that benefit everyone, we need extensive research with a wide range of inputs, approaches, and feedback from across disciplines and society. The veil of ignorance may provide a starting point for the selection of principles with which to align AI. It has been effectively deployed in other domains to bring out more impartial preferences . We hope that with further investigation and attention to context, it may help serve the same role for AI systems being built and deployed across society today and in the future.

Read more about DeepMind’s approach to safety and ethics .

- Join our email list

- Add to Calendar

Whitney Humanities Center

What we see and what we value: ai with a human perspective.

2022 Tanner Lecture on Artificial Intelligence and Human Values

Fei-Fei Li of Stanford University will deliver the 2022 Tanner Lecture on Human Values and Artificial Intelligence this fall at the Whitney Humanities Center. The lecture, “What We See and What We Value: AI with a Human Perspective,” presents a series of AI projects—from work on ambient intelligence in healthcare to household robots—to examine the relationship between visual and artificial intelligence. Visual intelligence has been a cornerstone of animal intelligence; enabling machines to see is hence a critical step toward building intelligent machines. Yet developing algorithms that allow computers to see what humans see—and what they don’t see—raises important social and ethical questions.

Dr. Fei-Fei Li is the Sequoia Professor of Computer Science at Stanford University and Denning Co-Director of the Stanford Institute for Human-Centered AI (HAI). During her 2017–2018 sabbatical, Dr. Li was a vice president at Google and chief scientist of Artificial Intelligence/Machine Learning at Google Cloud. She co-founded the national nonprofit AI4ALL, which trains K-12 students from underprivileged communities to become future leaders in AI. Dr. Li also serves on the National AI Research Resource Task Force commissioned by Congress and the White House and is an elected member of the National Academy of Engineering, the National Academy of Medicine, and the American Academy of Arts and Sciences.

Dr. Fei-Fei Li’s talk is one of seven Tanner Lectures on Artificial Intelligence and Human Values, which is a special series of the Tanner Lectures on Human Values. The Tanner Lectures on Human Values are funded by an endowment received by the University of Utah from Obert Clark Tanner and Grace Adams Tanner. Established in 1976, the Tanner Lectures seek to advance and reflect upon scholarly and scientific learning relating to human values. The lectures, which are permanently sponsored at nine institutions, including Yale, are free and open to the public.

- SUGGESTED TOPICS

- The Magazine

- Newsletters

- Managing Yourself

- Managing Teams

- Work-life Balance

- The Big Idea

- Data & Visuals

- Reading Lists

- Case Selections

- HBR Learning

- Topic Feeds

- Account Settings

- Email Preferences

AI Should Augment Human Intelligence, Not Replace It

- David De Cremer

- Garry Kasparov

Artificial intelligence isn’t coming for your job, but it will be your new coworker. Here’s how to get along.

Will smart machines really replace human workers? Probably not. People and AI both bring different abilities and strengths to the table. The real question is: how can human intelligence work with artificial intelligence to produce augmented intelligence. Chess Grandmaster Garry Kasparov offers some unique insight here. After losing to IBM’s Deep Blue, he began to experiment how a computer helper changed players’ competitive advantage in high-level chess games. What he discovered was that having the best players and the best program was less a predictor of success than having a really good process. Put simply, “Weak human + machine + better process was superior to a strong computer alone and, more remarkably, superior to a strong human + machine + inferior process.” As leaders look at how to incorporate AI into their organizations, they’ll have to manage expectations as AI is introduced, invest in bringing teams together and perfecting processes, and refine their own leadership abilities.

In an economy where data is changing how companies create value — and compete — experts predict that using artificial intelligence (AI) at a larger scale will add as much as $15.7 trillion to the global economy by 2030 . As AI is changing how companies work, many believe that who does this work will change, too — and that organizations will begin to replace human employees with intelligent machines . This is already happening: intelligent systems are displacing humans in manufacturing, service delivery, recruitment, and the financial industry, consequently moving human workers towards lower-paid jobs or making them unemployed. This trend has led some to conclude that in 2040 our workforce may be totally unrecognizable .

- David De Cremer is a professor of management and technology at Northeastern University and the Dunton Family Dean of its D’Amore-McKim School of Business. His website is daviddecremer.com .

- Garry Kasparov is the chairman of the Human Rights Foundation and founder of the Renew Democracy Initiative. He writes and speaks frequently on politics, decision-making, and human-machine collaboration. Kasparov became the youngest world chess champion in history at 22 in 1985 and retained the top rating in the world for 20 years. His famous matches against the IBM super-computer Deep Blue in 1996 and 1997 were key to bringing artificial intelligence, and chess, into the mainstream. His latest book on artificial intelligence and the future of human-plus-machine is Deep Thinking: Where Machine Intelligence Ends and Human Creativity Begins (2017).

Partner Center

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Published: 23 February 2022

Human autonomy in the age of artificial intelligence

- Carina Prunkl ORCID: orcid.org/0000-0002-0123-9561 1

Nature Machine Intelligence volume 4 , pages 99–101 ( 2022 ) Cite this article

1867 Accesses

18 Citations

23 Altmetric

Metrics details

- Science, technology and society

Current AI policy recommendations differ on what the risks to human autonomy are. To systematically address risks to autonomy, we need to confront the complexity of the concept itself and adapt governance solutions accordingly.

This is a preview of subscription content, access via your institution

Relevant articles

Open Access articles citing this article.

Human Autonomy at Risk? An Analysis of the Challenges from AI

- Carina Prunkl

Minds and Machines Open Access 24 June 2024

Rethinking Health Recommender Systems for Active Aging: An Autonomy-Based Ethical Analysis

- Simona Tiribelli

- & Davide Calvaresi

Science and Engineering Ethics Open Access 27 May 2024

Artificial intelligence and human autonomy: the case of driving automation

- Fabio Fossa

AI & SOCIETY Open Access 16 May 2024

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

24,99 € / 30 days

cancel any time

Subscribe to this journal

Receive 12 digital issues and online access to articles

111,21 € per year

only 9,27 € per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Raz, J. The Morality of Freedom (Clarendon Press, 1986).

Korsgaard, C. M., Cohen, G. A., Geuss, R., Nagel, T. Williams, T. & O’Neilk, O. The Sources of Normativity (Cambridge Univ. Press, 1996).

Christman, J. in The Stanford Encyclopedia of Philosophy (ed. Zalta, E. N.) (Metaphysics Research Lab, Stanford Univ., 2020); https://plato.stanford.edu/entries/autonomy-moral/

Roessler, B. Autonomy: An Essay on the Life Well-Lived (John Wiley, 2021).

Susser, D., Roessler, B. & Nissenbaum, H. Technology, Autonomy, and Manipulation (Technical Report) (Social Science Research Network, Rochester, NY, 2019).

Kramer, A. D. I., Guillory, J. E. & Hancock, J. T. Proc. Natl Acad. Sci. USA 111 , 8788–8790 (2014).

Article Google Scholar

European Commission High-Level Experts Group (HLEG). Ethics Guidelines for Trustworthy AI (Technical Report B-1049) (EC, Brussels, 2019).

Association for Computing Machinery (ACM). ACM Code of Ethics and Professional Conduct (ACM, 2018).

Université de Montréal. Montreal Declaration for a Responsible Development of AI (Forum on the Socially Responsible Development of AI (Université de Montréal, 2017).

European Committee of the Regions. White Paper on Artificial Intelligence - A European approach to excellence and trust (EC, 2020).

Organisation for Economic Co-operation and Development. Recommendation of the Council on Artificial Intelligence (Technical Report OECD/LEGAL/0449) (OECD 2019); https://oecd.ai/en/ai-principles

European Commission, Directorate-General for Research and Innovation, European Group on Ethics in Science and New Technologies. Statement on artificial intelligence, robotics and ‘autonomous’ systems (EC, 2018).

Floridi, L. & Cowls, J. Harvard Data Sci. Rev 1 , 1–13 (2019).

Google Scholar

Fjeld, J., Achten, N., Hilligoss, H., Nagy, A. & Srikumar, M. Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI (SSRN Scholarly Paper ID 3518482) (Social Science Research Network, Rochester, NY, 2020); https://papers.ssrn.com/abstract=3518482

Milano, S., Taddeo, M. & Floridi, L. Recommender Systems and their Ethical Challenges (SSRN Scholarly Paper ID 3378581) (Social Science Research Network, Rochester, NY, 2019).

Calvo, R. A., Peters, D. & D’Mello, S. Commun. ACM 58 , 41–42 (2015).

Mik, E. Law Innov. Technol. 8 , 1–38 (2016).

Helberger, N. Profiling and Targeting Consumers in the Internet of Things – A New Challenge for Consumer Law (Technical Report) (Social Science Research Network, Rochester, NY, 2016).

Burr, C., Morley, J., Taddeo, M. & Floridi, L. IEEE Trans. Technol. Soc. 1 , 21–33 (2020).

Morley, J. & Floridi, L. Sci. Eng. Ethics 26 , 1159–1183 (2020).

Brownsword, R. in Law, Human Agency and Autonomic Computing (eds Hildebrandt., M. & Rouvroy, A.) 80–100 (Routledge, 2011).

Calvo, R., Peters, D., Vold, K. V. & Ryan, R. in Ethics of Digital Well-Being (Philosophical Studies Series, vol. 140) (eds Burr, C. & Floridi, L.) 31–54 (Springer, 2020).

Rubel, A., Castro, C. & Pham, A. Algorithms and Autonomy: The Ethics of Automated Decision Systems (Cambridge Univ. Press, 2021).

Dworkin, G. The Theory and Practice of Autonomy (Cambridge Univ. Press. 1988).

Mackenzie, C. Three Dimensions of Autonomy: A Relational Analysis (Oxford Univ. Press, 2014).

Noggle, R. Am. Philos. Q. 33 , 43–55 (1996).

Elster, J. Sour Grapes: Studies in the Subversion of Rationality (Cambridge Univ. Press, 1985).

Adomavicius, G., Bockstedt, J. C., Curley, S. P. & Zhang, J. Info. Syst. Res 24 , 956–975 (2013).

Ledford, H. Nature 574 , 608–609 (2019).

Dworkin, G. in The Stanford Encyclopedia of Philosophy (ed. Zalta, E. N.) (Metaphysics Research Lab, Stanford Univ. Press, 2020; https://plato.stanford.edu/archives/fall2020/entries/paternalism/

Kühler, M. Bioethics 36 , 194–200 (2021).

Christman, J. The Politics of Persons: Individual Autonomy and Socio-Historical Selves (Cambridge Univ. Press, 2009.)

Download references

Acknowledgements

The author thanks J. Tasioulas, M. Philipps-Brown, C. Veliz, T. Lechterman, A. Dafoe and B. Garfinkel for their helpful comments. Funding: No external funding sources.

Author information

Authors and affiliations.

Institute for Ethics in AI, University of Oxford, Oxford, UK

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Carina Prunkl .

Ethics declarations

Competing interests.

The author declares no competing interests.

Peer review

Peer review information.

Nature Machine Intelligence thanks the anonymous reviewers for their contribution to the peer review of this work.

Rights and permissions

Reprints and permissions

About this article

Cite this article.

Prunkl, C. Human autonomy in the age of artificial intelligence. Nat Mach Intell 4 , 99–101 (2022). https://doi.org/10.1038/s42256-022-00449-9

Download citation

Published : 23 February 2022

Issue Date : February 2022

DOI : https://doi.org/10.1038/s42256-022-00449-9

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

This article is cited by

AI & SOCIETY (2024)

- Davide Calvaresi

Science and Engineering Ethics (2024)

Minds and Machines (2024)

A principles-based ethics assurance argument pattern for AI and autonomous systems

- Ibrahim Habli

- Marten Kaas

AI and Ethics (2024)

Assessing deep learning: a work program for the humanities in the age of artificial intelligence

- Jan Segessenmann

- Thilo Stadelmann

- Oliver Dürr

AI and Ethics (2023)

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing newsletter — what matters in science, free to your inbox daily.

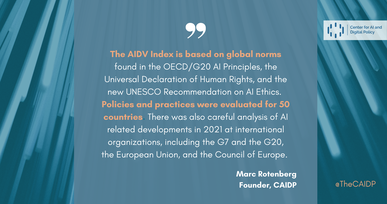

Artificial Intelligence and Democratic Values

Press Release

FOR RELEASE

Monday, 21 February 2022

09.00 EST / 15.00 CET

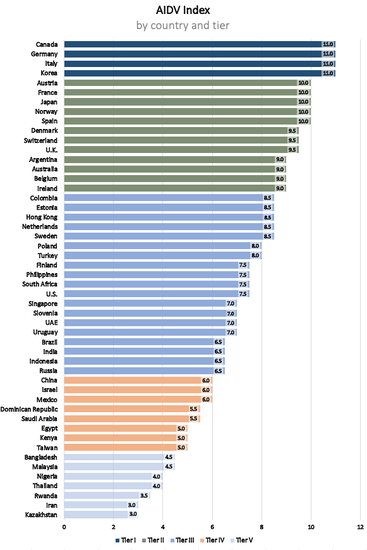

Updated Index Ranks AI Policies and Practices in 50 Countries

Canada, Germany, Italy, and Korea Rank at Top,

US Makes Progress as Concerns about China Remain

AI POLICY HIGHLIGHTS -2021

- UNESCO AI Recommendation banned social scoring and mass surveillance

- EU Introduced comprehensive, risk-based framework

- Council of Europe makes progress on AI convention

- Continued progress on implementation of OECD Principles, first AI policy framework

- G7 leaders endorsed algorithmic transparency to combat AI bias

- US opens-up policy process, embraces “democratic values”

- EU and US move toward alignment on AI policy

- AI regulation in China leaves open questions about independent oversight

- UN fails to reach agreement on lethal autonomous weapons

- Growing global battle over deployment of facial recognition looms ahead

[ PRESS RELEASE ]

In 2020, the Center for AI and Digital Policy published the first worldwide assessment of AI policies and practices. Artificial Intelligence and Democratic Values rated and ranked 30 countries, based on a rigorous methodology and 12 metrics established to assess alignment with democratic values.

The 2021 Report expands the global coverage from 30 countries to 50 countries, acknowledges the significance of the UNESCO Recommendation on AI ethics, and reviews earlier country ratings. The 2021 report is the result of the work of more than 100 AI policy experts in almost 40 countries.

AI Index - Country Ratings

CAIDP AI Index - Score on Metrics

Panel Discussion

Panel discussion with Merve Hickok, Marc Rotenberg, Fanny Hidvegi, Karine Caunes, Eduardo Bertoni, Jibu Elias, Stuart Russell, and Vice President of the European Parliament Eva Kaili. CAIDP, 21 Feb. 21 2022.

- Video of panel discussion

- Audio of panel discussion

- Comments on panel discussion

- Panel discussion report (by Afi Blackshear)

Report release -

ARTIFICIAL INTELLIGENCE AND DEMOCRATIC VALUES INDEX

Monday, 21 February 2022

10.00 EST / 16.00 CET to 11.00 EST / 17.00 CET

- Karine Caunes , CAIDP Global Program Director

Keynote Remarks

- Eva Kaili, Vice President European Parliament

Report Presentation

- Merve Hickok , CAIDP Research Director

- Professor Eduardo Bertoni, Inter American Institute of Human Rights

- Jibu Elias , National AI Portal of India

- Fanny Hidvegi , Access Now, European Policy Manager

- Professor Stuart Russell, University of California Berkeley

Closing Remarks

- Marc Rotenberg, CAIDP President

[ Register ]

AI Index - 2021 v. 2020

CAIDP AI Index - Country Ratings by Tier

CAIDP AI Index - Country Ratings 2021 v. 2020

ARTIFICIAL INTELLIGENCE AND DEMOCRATIC VALUES - 2021 (CAIPD 2022)

[ PDF Format ]

[ EPUB Format ]

Endorsements

News Reports

The Korea Herald, S. Korea joins top-tier group in democratic AI policy index (Feb. 23, 2022)

AI Decoded (Feb. 23, 2022)

Digital Bridge (Feb. 25, 2022)

Send revisions and updates to [email protected]

- Scroll to top

Academia.edu no longer supports Internet Explorer.

To browse Academia.edu and the wider internet faster and more securely, please take a few seconds to upgrade your browser .

Enter the email address you signed up with and we'll email you a reset link.

- We're Hiring!

- Help Center

Challenges of Aligning Artificial Intelligence with Human Values

2000, Challenges of Aligning AI with Human Values

As artificial intelligence (AI) systems are becoming increasingly autonomous and will soon be able to make decisions on their own about what to do, AI researchers have started to talk about the need to align AI with human values. The AI 'value alignment problem' faces two kinds of challenges-a technical and a normative one-which are interrelated. The technical challenge deals with the question of how to encode human values in artificial intelligence. The normative challenge is associated with two questions: "Which values or whose values should artificial intelligence align with?" My concern is that AI developers underestimate the difficulty of answering the normative question. They hope that we can easily identify the purposes we really desire and that they can focus on the design of those objectives. But how are we to decide which objectives or values to induce in AI, given that there is a plurality of values and moral principles and that our everyday life is full of moral disagreements? In my paper I will show that although it is not realistic to reach an agreement on what we, humans, really want as people value different things and seek different ends, it may be possible to agree on what we do not want to happen, considering the possibility that intelligence, equal to our own, or even exceeding it, can be created. I will argue for pluralism (and not for relativism!) which is compatible with objectivism. In spite of the fact that there is no uniquely best solution to every moral problem, it is still possible to identify which answers are wrong. And this is where we should begin the value alignment of AI.

Related Papers

Minds and Machines

Iason Gabriel

This paper looks at philosophical questions that arise in the context of AI alignment. It defends three propositions. First, normative and technical aspects of the AI alignment problem are interrelated, creating space for productive engagement between people working in both domains. Second, it is important to be clear about the goal of alignment. There are significant differences between AI that aligns with instructions, intentions, revealed preferences, ideal preferences, interests and values. A principle-based approach to AI alignment, which combines these elements in a systematic way, has considerable advantages in this context. Third, the central challenge for theorists is not to identify 'true' moral principles for AI; rather, it is to identify fair principles for alignment, that receive reflective endorsement despite widespread variation in people's moral beliefs. The final part of the paper explores three ways in which fair principles for AI alignment could potentially be identified.

AI & society

Eric-Oluf Svee

European Conference on Information Systems

TalTech Journal of European Studies

Peeter Müürsepp

The problem of value alignment in the context of AI studies is becoming more and more acute. This article deals with the basic questions concerning the system of human values corresponding to what we would like digital minds to be capable of. It has been suggested that as long as humans cannot agree on a universal system of values in the positive sense, we might be able to agree on what has to be avoided. The article argues that while we may follow this suggestion, we still need to keep the positive approach in focus as well. A holistic solution to the value alignment problem is not in sight and there might possibly never be a final solution. Currently, we are facing an era of endless adjustment of digital minds to biological ones. The biggest challenge is to keep humans in control of this adjustment. Here the responsibility lies with the humans. Human minds might not be able to fix the capacity of digital minds. The philosophical analysis shows that the key concept when dealing wit...

Stephen Forshaw

Artificial Intelligence (AI) has seen a massive and rapid development in the past twenty years. With such accelerating advances, concerns around the undesirable and unpredictable impact that AI may have on society are mounting. In response to such concerns, leading AI thinkers and practitioners have drafted a set of principles the Asilomar AI Principles for Beneficial AI, one that would benefit humanity instead of causing it harm. Underpinning these principles is the perceived importance for AI to be aligned to human values and promote the ‘common good’. We argue that efforts from leading AI thinkers must be supported by constructive critique, dialogue and informed scrutiny from different constituencies asking questions such as: what and whose values? What does ‘common good’ mean, and to whom? The aim of this workshop is to take a deep dive into human values, examine how they work, and what structures they may exhibit. Specifically, our twofold objective is to capture the diversity ...

ResearchGate

Artificial Intelligence (AI) has witnessed remarkable advancements in recent years, transforming industries and revolutionizing human experiences. While AI presents vast opportunities for positive change, there are inherent risks if its development does not align with human values and societal needs. This research article delves into the ethical dimensions of AI and explores strategies to ensure AI technology serves humanity responsibly. Through a comprehensive literature review, stakeholder interviews, and surveys, we investigate the current state of AI development, ethical challenges, and potential frameworks for promoting alignment with human values. The findings underscore the importance of proactive measures in shaping AI's impact on society, leading to policy recommendations and guidelines for a future in which AI technology benefits humanity while upholding its core values.

Artificial Intelligence, A Protocol for Setting Moral and Ethical Operational Standars

Daniel Raphael, PhD

This paper (39.39) cuts through the ethics-predicament that is now raging in the Artificial Intelligence industry. Historically, ethics consulting has pointed to “ethical principles” as underlying ethical decision-making. The conundrum in that position is that it cannot point to a set of values that underlie decision-making to express those principles. The fundamental truth is that values always underlie all decision-making. AI’s predicament is resolved in this paper by clearly describing the values that have sustained the survival of the Homo sapiens species for over 200,000 years. The proof of their effectiveness is evident in our own personal lives now. The exciting aspect of these values erupts in the logic-sequences that develop out of those values and their characteristics. When the seven values are combined with their characteristics, their logic-sequences are quickly expressed in a timeless and universally applicable logic and morality, whether applied to the decision-making of individuals, organizations, or AI programs. This paper provides a rational and logical presentation of those values, decision-making, ethics, morality, the primary cause of human motivation. [40 pages, 10k words — enjoy!]

Journal of Artificial Intelligence Research

Tae Wan Kim

An important step in the development of value alignment (VA) systems in artificial intelligence (AI) is understanding how VA can reflect valid ethical principles. We propose that designers of VA systems incorporate ethics by utilizing a hybrid approach in which both ethical reasoning and empirical observation play a role. This, we argue, avoids committing “naturalistic fallacy,” which is an attempt to derive “ought” from “is,” and it provides a more adequate form of ethical reasoning when the fallacy is not committed. Using quantified model logic, we precisely formulate principles derived from deontological ethics and show how they imply particular “test propositions” for any given action plan in an AI rule base. The action plan is ethical only if the test proposition is empirically true, a judgment that is made on the basis of empirical VA. This permits empirical VA to integrate seamlessly with independently justified ethical principles. This article is part of the special track on...

Leon Kester

Being a complex subject of major importance in AI Safety research, value alignment has been studied from various perspectives in the last years. However, no final consensus on the design of ethical utility functions facilitating AI value alignment has been achieved yet. Given the urgency to identify systematic solutions, we postulate that it might be useful to start with the simple fact that for the utility function of an AI not to violate human ethical intuitions, it trivially has to be a model of these intuitions and reflect their variety $ - $ whereby the most accurate models pertaining to human entities being biological organisms equipped with a brain constructing concepts like moral judgements, are scientific models. Thus, in order to better assess the variety of human morality, we perform a transdisciplinary analysis applying a security mindset to the issue and summarizing variety-relevant background knowledge from neuroscience and psychology. We complement this information by...

Frontiers in Psychology

Ana Luize Correa Bertoncini

Artificial intelligence (AI) advancements are changing people's lives in ways never imagined before. We argue that ethics used to be put in perspective by seeing technology as an instrument during the first machine age. However, the second machine age is already a reality, and the changes brought by AI are reshaping how people interact and flourish. That said, ethics must also be analyzed as a requirement in the content. To expose this argument, we bring three critical points-autonomy, right of explanation, and value alignment-to guide the debate of why ethics must be part of the systems, not just in the principles to guide the users. In the end, our discussion leads to a reflection on the redefinition of AI's moral agency. Our distinguishing argument is that ethical questioning must be solved only after giving AI moral agency, even if not at the same human level. For future research, we suggest appreciating new ways of seeing ethics and finding a place for machines, using the inputs of the models we have been using for centuries but adapting to the new reality of the coexistence of artificial intelligence and humans.

Loading Preview

Sorry, preview is currently unavailable. You can download the paper by clicking the button above.

RELATED PAPERS

Advances in Robotics & Mechanical Engineering

Thibault de Swarte

2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC)

Fabrice Muhlenbach

Alice Pavaloiu , Utku Köse

The Oxford Handbook of Digital Ethics (OUP)

Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society

Stephen Cave

Mark R Waser

Digital Society

Rohan Light

Etikk i Prakkis: Nordic Journal for Applied Ethics

Thomas Søbirk Petersen

AI & SOCIETY

Felix S H Yeung

Drew Hemment

Hanyang Law Review

The Academic

Sanghamitra Choudhury

IOSR Journals

Philosophy and Computers - Newsletter, American Philosphical Association

Niklas Toivakainen

Zoya Slavina

Micheal Tamale

2021 IEEE Conference on Norbert Wiener in the 21st Century (21CW)

Theology and Science

Mark Graves , Jane Compson , Cyrus Olsen

Seth D Baum

Journal of Intelligence Studies in Business

Anuradha Kanade

Joanna Bryson

Mrinalini Luthra

RELATED TOPICS

- We're Hiring!

- Help Center

- Find new research papers in:

- Health Sciences

- Earth Sciences

- Cognitive Science

- Mathematics

- Computer Science

- Academia ©2024

How Do We Align Artificial Intelligence with Human Values?

A major change is coming, over unknown timescales but across every segment of society, and the people playing a part in that transition have a huge responsibility and opportunity to shape it for the best. What will trigger this change? Artificial Intelligence.

Recently, some of the top minds in Artificial Intelligence (AI) and related fields got together to discuss how we can ensure AI remains beneficial throughout this transition, and the result was the Asilomar AI Principles document. The intent of these 23 principles is to offer a framework to help artificial intelligence benefit as many people as possible. But, as AI expert Toby Walsh said of the Principles, “Of course, it’s just a start…a work in progress.”

The Principles represent the beginning of a conversation, and now that the conversation is underway, we need to follow up with broad discussion about each individual principle. The Principles will mean different things to different people, and in order to benefit as much of society as possible, we need to think about each principle individually.

As part of this effort, I interviewed many of the AI researchers who signed the Principles document to learn their take on why they signed and what issues still confront us.

Value Alignment

Today, we start with the Value Alignment principle.

Value Alignment: Highly autonomous AI systems should be designed so that their goals and behaviors can be assured to align with human values throughout their operation.

Stuart Russell, who helped pioneer the idea of value alignment, likes to compare this to the King Midas story . When King Midas asked for everything he touched to turn to gold, he really just wanted to be rich. He didn’t actually want his food and loved ones to turn to gold. We face a similar situation with artificial intelligence: how do we ensure that an AI will do what we really want, while not harming humans in a misguided attempt to do what its designer requested?

“Robots aren’t going to try to revolt against humanity,” explains Anca Dragan , an assistant professor and colleague of Russell’s at UC Berkeley, “they’ll just try to optimize whatever we tell them to do. So we need to make sure to tell them to optimize for the world we actually want.”

What Do We Want?

Understanding what “we” want is among the biggest challenges facing AI researchers.

“The issue, of course, is to define what exactly these values are, because people might have different cultures, different parts of the world, different socioeconomic backgrounds — I think people will have very different opinions on what those values are. And so that’s really the challenge,” says Stefano Ermon , an assistant professor at Stanford.

Roman Yampolskiy , an associate professor at the University of Louisville, agrees. He explains, “It is very difficult to encode human values in a programming language, but the problem is made more difficult by the fact that we as humanity do not agree on common values, and even parts we do agree on change with time.”

And while some values are hard to gain consensus around, there are also lots of values we all implicitly agree on. As Russell notes , any human understands emotional and sentimental values that they’ve been socialized with, but it’s difficult to guarantee that a robot will be programmed with that same understanding.

But IBM research scientist Francesca Rossi is hopeful. As Rossi points out, “there is scientific research that can be undertaken to actually understand how to go from these values that we all agree on to embedding them into the AI system that’s working with humans.”

Dragan’s research comes at the problem from a different direction. Instead of trying to understand people, she looks at trying to train a robot or AI to be flexible with its goals as it interacts with people. “At Berkeley,” she explains, “we think it’s important for agents to have uncertainty about their objectives, rather than assuming they are perfectly specified, and treat human input as valuable observations about the true underlying desired objective.”

Rewrite the Principle?

While most researchers agree with the underlying idea of the Value Alignment Principle, not everyone agrees with how it’s phrased, let alone how to implement it.

Yoshua Bengio , an AI pioneer and professor at the University of Montreal, suggests “assured” may be too strong. He explains, “It may not be possible to be completely aligned. There are a lot of things that are innate, which we won’t be able to get by machine learning, and that may be difficult to get by philosophy or introspection, so it’s not totally clear we’ll be able to perfectly align. I think the wording should be something along the lines of ‘we’ll do our best.’ Otherwise, I totally agree.”

Walsh, who’s currently a guest professor at the Technical University of Berlin, questions the use of the word “highly.” “I think any autonomous system, even a lowly autonomous system, should be aligned with human values. I’d wordsmith away the ‘high,’” he says.

Walsh also points out that, while value alignment is often considered an issue that will arise in the future, he believes it’s something that needs to be addressed sooner rather than later. “I think that we have to worry about enforcing that principle today,” he explains. “I think that will be helpful in solving the more challenging value alignment problem as systems get more sophisticated.

Rossi, who supports the the Value Alignment Principle as, “the one closest to my heart,” agrees that the principle should apply to current AI systems. “I would be even more general than what you’ve written in this principle,” she says. “Because this principle has to do not only with autonomous AI systems, but … is very important and essential also for systems that work tightly with humans-in-the-loop and where the human is the final decision maker. When you have a human and machine tightly working together, you want this to be a real team.”

But as Dragan explains, “This is one step toward helping AI figure out what it should do, and continuously refining the goals should be an ongoing process between humans and AI.”

Let the Dialogue Begin

And now we turn the conversation over to you. What does it mean to you to have artificial intelligence aligned with your own life goals and aspirations? How can it be aligned with you and everyone else in the world at the same time? How do we ensure that one person’s version of an ideal AI doesn’t make your life more difficult? How do we go about agreeing on human values, and how can we ensure that AI understands these values? If you have a personal AI assistant, how should it be programmed to behave? If we have AI more involved in things like medicine or policing or education, what should that look like? What else should we, as a society, be asking?

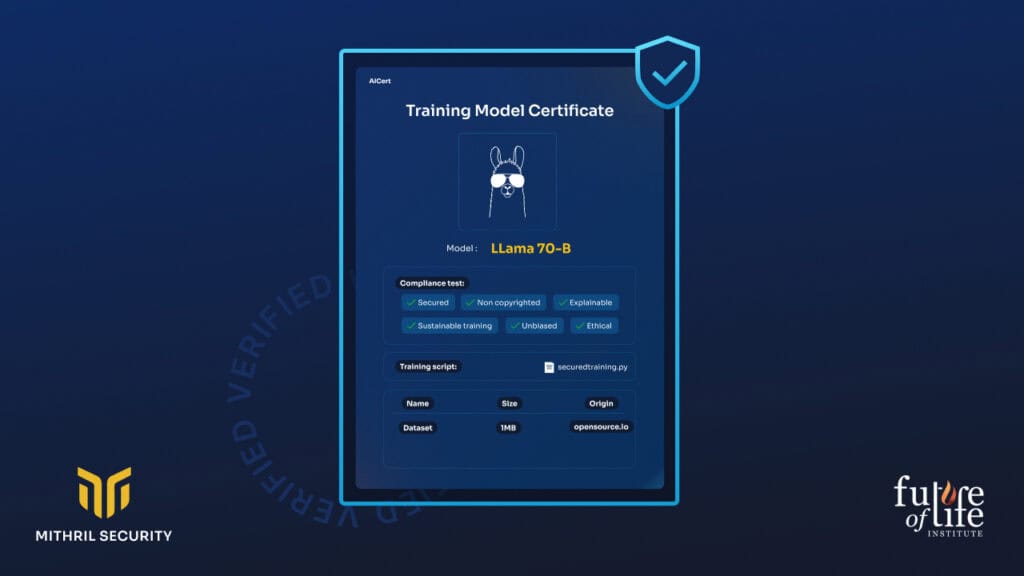

About the Future of Life Institute

The Future of Life Institute (FLI) is a global non-profit with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work .

Related content

Other posts about ai , ai safety principles , recent news.

Mary Robinson (Former President of Ireland) on Long-View Leadership

Verifiable Training of AI Models

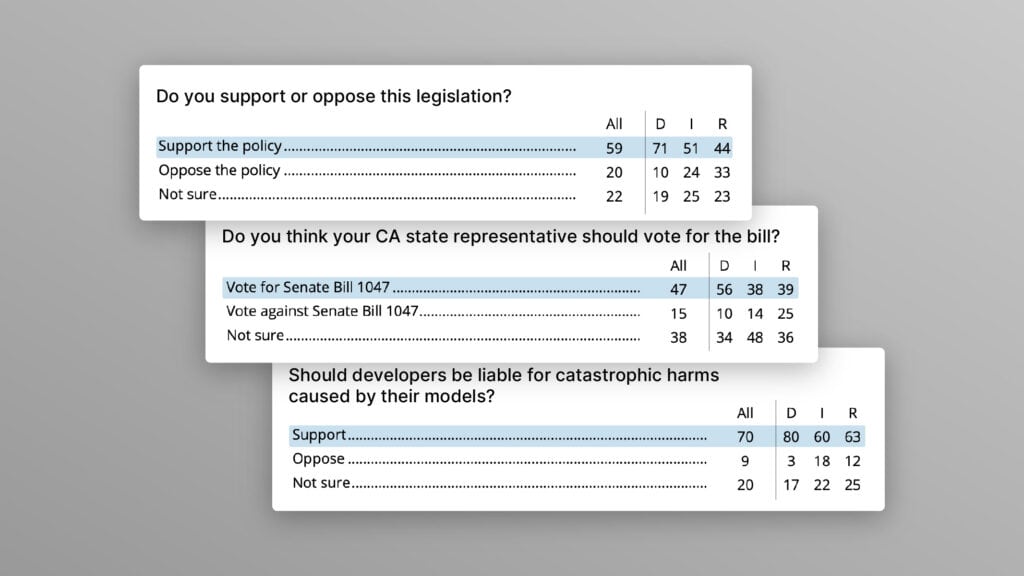

Poll Shows Broad Popularity of CA SB1047 to Regulate AI

FLI Praises AI Whistleblowers While Calling for Stronger Protections and Regulation

Sign up for the future of life institute newsletter.

Dr. Ian O'Byrne

Leave A Comment Cancel reply

Your email address will not be published. Required fields are marked *

This site uses Akismet to reduce spam. Learn how your comment data is processed .

To respond on your own website, enter the URL of your response which should contain a link to this post's permalink URL. Your response will then appear (possibly after moderation) on this page. Want to update or remove your response? Update or delete your post and re-enter your post's URL again. ( Learn More )

More From Forbes

The Dangers Of Not Aligning Artificial Intelligence With Human Values

- Share to Facebook

- Share to Twitter

- Share to Linkedin

In artificial intelligence (AI), the “alignment problem” refers to the challenges caused by the fact that machines simply do not have the same values as us. In fact, when it comes to values, then at a fundamental level, machines don't really get much more sophisticated than understanding that 1 is different from 0.

As a society, we are now at a point where we are starting to allow machines to make decisions for us. So how can we expect them to understand that, for example, they should do this in a way that doesn’t involve prejudice towards people of a certain race, gender, or sexuality? Or that the pursuit of speed, or efficiency, or profit, has to be done in a way that respects the ultimate sanctity of human life?

Theoretically, if you tell a self-driving car to navigate from point A to point B, it could just smash its way to its destination, regardless of the cars, pedestrians, or buildings it destroys on its way.

Similarly, as Oxford philosopher Nick Bostrom outlined, if you tell an intelligent machine to make paperclips, it might eventually destroy the whole world in its quest for raw materials to turn into paperclips. The principle is that it simply has no concept of the value of human life or materials or that some things are too valuable to be turned into paperclips unless it is specifically taught it.

This forms the basis of the latest book by Brian Christian, The Alignment Problem – How AI Learns Human Values . It’s his third book on the subject of AI following his earlier works, The Most Human Human and Algorithms to Live By . I have always found Christian’s writing enjoyable to read but also highly illuminating, as he doesn’t worry about getting bogged down with computer code or mathematics. But that’s certainly not to say it is in any way lightweight or not intellectual.

Best Travel Insurance Companies

Best covid-19 travel insurance plans.

Rather, his focus is on the societal, philosophical, and psychological implications of our ever-increasing ability to create thinking, learning machines. If anything, this is the aspect of AI where we need our best thinkers to be concentrating their efforts. The technology, after all, is already here – and it’s only going to get better. What’s far less certain is whether society itself is mature enough and has sufficient safeguards in place to make the most of the amazing opportunities it offers - while preventing the serious problems it could bring with it from becoming a reality.

I recently sat down with Christian to discuss some of the topics. Christian’s work is particularly concerned with the encroachment of computer-aided decision-making into fields such as healthcare, criminal justice, and lending, where there is clearly potential for them to cause problems that could end up affecting people’s lives in very real ways.

“There is this fundamental problem … that has a history that goes back to the 1960s, and MIT cyberneticist Norbert Wiener , who likened these systems to the story of the Sorcerer’s Apprentice,” Christian tells me.

Most people reading this will probably be familiar with the Disney cartoon in which Mickey Mouse attempts to save himself the effort of doing his master’s chores by using a magic spell to imbue a broom with intelligence and autonomy. The story serves as a good example of the dangers of these qualities when they aren't accompanied by human values like common sense and judgment.

“Wiener argued that this isn’t the stuff of fairytales. This is the sort of thing that’s waiting for us if we develop these systems that are sufficiently general and powerful … I think we are at a moment in the real world where we are filling the world with these brooms, and this is going to become a real issue.”

One incident that Christian uses to illustrate how this misalignment can play out in the real world is the first recorded killing of a pedestrian in a collision involving an autonomous car. This was the death of Elaine Herzberg in Arizona, US, in 2018.

When the National Transportation Safety Board investigated what had caused the collision between the Uber test vehicle and Herzberg, who was pushing a bicycle across a road, they found that the AI controlling the car had no awareness of the concept of jaywalking. It was totally unprepared to deal with a person being in the middle of the road, where they should not have been.

On top of this, the system was trained to rigidly segment objects in the road into a number of categories – such as other cars, trucks, cyclists, and pedestrians. A human being pushing a bicycle did not fit any of those categories and did not behave in a way that would be expected of any of them.

“That’s a useful way for thinking about how real-world systems can go wrong,” says Christian, “It’s a function of two things – the first is the quality of the training data. Does the data fundamentally represent reality? And it turns out, no – there’s this key concept called jaywalking that was not present.”

The second factor is our own ability to mathematically define what a system such as an autonomous car should do when it encounters a problem that requires a response.

“In the real world, it doesn't matter if something is a cyclist or a pedestrian because you want to avoid them either way. It's an example of how a fairly intuitive system design can go wrong."

Christian’s book goes on to explore these issues as they relate to many of the different paradigms that are currently popular in the field of machine learning, such as unsupervised learning, reinforcement learning, and imitation learning. It turns out that each of them presents its own challenges when it comes to aligning the values and behaviors of machines with the humans who are using them to solve problems.

Sometimes the fact that machine learning attempts to replicate human learning is the cause of problems. This might be the case when errors in data mean the AI is confronted with situations or behaviors that would never be encountered in real life, by a human brain. This means there is no reference point, and the machine is likely to continue making more and more mistakes in a series of "cascading failures."

In reinforcement learning – which involves training machines to maximize their chances of achieving rewards for making the right decision – machines can quickly learn to “game” the system, leading to outcomes that are unrelated to those that are desired. Here Christian uses the example of Google X head Astro Teller's attempt to incentivize soccer-playing robots to win matches. He devised a system that rewarded the robots every time they took possession of the ball – on the face of it, an action that seems conducive to match-winning. However, the machines quickly learned to simply approach the ball and repeatedly touch it. As this meant they were effectively taking possession of the ball over and over, they earned multiple rewards – although it did little good when it came to winning the match!

Christian’s book is packed with other examples of this alignment problem – as well as a thorough exploration of where we are when it comes to solving it. It also clearly demonstrates how many of the concerns of the earliest pioneers in the field of AI and ML are still yet to be resolved and touches on fascinating subjects such as attempts to imbue machines with other characteristics of human intelligence such as curiosity.

You can watch my full conversation with Brian Christian, author of The Alignment Problem – How AI Learns Human Values, on my YouTube channel:

- Editorial Standards

- Reprints & Permissions

Committed to connecting the world

- Media Centre

- Publications

- Areas of Action

- Regional Presence

- General Secretariat

- Radiocommunication

- Standardization

- Development

- Members' Zone

Designing AI for Human Values

Responsible Artificial Intelligence: Designing AI for Human Values

Artificial intelligence (AI) is increasingly affecting our lives in smaller or greater ways. In order to ensure that systems will uphold human values, design methods are needed that incorporate ethical principles and address societal concerns. In this paper, we explore the impact of AI in the case of the expected effects on the European labor market, and propose the accountability, responsibility and transparency (ART) design principles for the development of AI systems that are sensitive to human values.

Artificial intelligence, design for values, ethics, societal impact

| , Director of the , Secretary of (IFAAMAS) and was co-chair of (ECAI) in 2016. She has (co-)authored more than 150 peer-reviewed publications, including several books, and has wide experience with obtaining research funding both at national as international level. She is also the program director of the new MSc studies on AI and Robotics at the Delft University of Technology. |

General information

- ITU Journal: ICT Discoveries

- Editorial board

- Issue n.1: call for papers

- Alessia Magliarditi at [email protected]

© ITU All Rights Reserved

- Privacy notice

- Accessibility

- Report misconduct

- Digital Policy Hub

- Freedom of Thought

- Global AI Risks Initiative

- Supporting a Safer Internet

- Global Economic Scenarios

- Waterloo Security Dialogue

- Conference Reports

- Essay Series

- Policy Briefs

- Publication Series

- Special Reports

- Media Relations

- Opinion Series

- Big Tech Podcast

- Annual Report

- CIGI Campus

- Staff Directory

- Strategy and Evaluation

- The CIGI Rule

- Privacy Notice

- Artificial Intelligence

How Authoritarian Value Systems Undermine Global AI Governance

Cigi policy brief no. 187.

Digital authoritarianism is often considered an issue limited to a few illiberal regimes. However, neo-liberal AI technologies can be equally pervasive. It is crucial to treat authoritarianism as a values complex that permeates both autocratic and liberal societies.

Authoritarian values may manifest through transplant of legal practices between states, autocratic homogenization through multilateral mechanisms, and exploitation of geopolitical tensions to adopt protectionist policies. These approaches exacerbate public polarization around AI governance by creating a false dichotomy between innovation and sovereignty on the one hand, and fundamental rights on the other, chipping away at institutional trust.

Sabhanaz Rashid Diya writes that policy solutions to mitigate the erosion of democratic norms and public trust should focus on international mechanisms central to AI governance. These mechanisms need to introduce procedural safeguards that ensure transparency and accountability through equitable multi-stakeholder processes. Additionally, they should encourage regulatory diversity tailored to sociopolitical contexts and aligned with international human rights principles.

About the Author

Sabhanaz Rashid Diya is a CIGI senior fellow and the founder of Tech Global Institute, a global tech policy think tank focused on reducing equity and accountability gaps between technology companies and the global majority.

Recommended

- National Security

- Transformative Technologies

Digital Authoritarianism: The Role of Legislation and Regulation

- Marie Lamensch

- Global Cooperation

Framework Convention on Global AI Challenges

- Duncan Cass-Beggs

- Stephen Clare

- Dawn Dimowo

- Platform Governance

In Brazil, “Techno-Authoritarianism” Rears Its Head

Explainable ai policy: it is time to challenge post hoc explanations.

- Mardi Witzel

- Gaston H. Gonnet

- Geopolitics

Geopolitics, Diplomacy and AI

- Tracey Forrest

Knowledge as Power in Today’s World

- Nikolina Zivkovic

- Reanne Cayenne

- Kailee Hilt

Can There Be a Win-Win in the Era of AI? The Answer Is Yes

- Anthony Ilukwe

China’s Robots Are Coming of Age

- Daniel Araya

Digital Flux Has Coarsened the Mediascape of the World’s Largest Democracy

- Sanjay Ruparelia

Concerns Remain about Transparency in the UK’s Digital Campaign

- Kate Dommett

Digital Regulation May Have Bolstered European Elections — but How Would We Know?

- Heidi Tworek

Will Autonomous AI Bring Increased Productivity, Cognitive Decline, or Both?

- Peter MacKinnon

REVIEW article

From outputs to insights: a survey of rationalization approaches for explainable text classification.

- 1 Department of Computer Science, The University of Manchester, Manchester, United Kingdom

- 2 ASUS Intelligent Cloud Services (AICS), ASUS, Singapore, Singapore

Deep learning models have achieved state-of-the-art performance for text classification in the last two decades. However, this has come at the expense of models becoming less understandable, limiting their application scope in high-stakes domains. The increased interest in explainability has resulted in many proposed forms of explanation. Nevertheless, recent studies have shown that rationales , or language explanations, are more intuitive and human-understandable, especially for non-technical stakeholders. This survey provides an overview of the progress the community has achieved thus far in rationalization approaches for text classification. We first describe and compare techniques for producing extractive and abstractive rationales. Next, we present various rationale-annotated data sets that facilitate the training and evaluation of rationalization models. Then, we detail proxy-based and human-grounded metrics to evaluate machine-generated rationales. Finally, we outline current challenges and encourage directions for future work.

1 Introduction

Text classification is one of the fundamental tasks in Natural Language Processing (NLP) with broad applications such as sentiment analysis and topic labeling, among many others ( Aggarwal and Zhai, 2012 ; Vijayan et al., 2017 ). Over the past two decades, researchers have leveraged the power of deep neural networks to improve model accuracy for text classification ( Kowsari et al., 2019 ; Otter et al., 2020 ). Nonetheless, the performance improvement has come at the cost of models becoming less understandable for developers, end-users, and other relevant stakeholders ( Danilevsky et al., 2020 ). The opaqueness of these models has become a significant obstacle to their development and deployment in high-stake sectors such as the medical ( Tjoa and Guan, 2020 ), legal ( Bibal et al., 2021 ), and humanitarian domains ( Mendez et al., 2022 ).

As a result, Explainable Artificial Intelligence (XAI) has emerged as a relevant research field aiming to develop methods and techniques that allow stakeholders to understand the inner workings and outcome of deep learning-based systems ( Gunning et al., 2019 ; Arrieta et al., 2020 ). Several lines of evidence suggest that providing insights into text classifiers' inner workings might help to foster trust and confidence in these systems, detect potential biases or facilitate their debugging ( Arrieta et al., 2020 ; Belle and Papantonis, 2021 ; Jacovi and Goldberg, 2021 ).

One of the most well-known methods for explaining the outcome of a text classifier is to build reliable associations between the input text and output labels and determine how much each element (e.g., word or token) contributes toward the final prediction ( Hartmann and Sonntag, 2022 ; Atanasova et al., 2024 ). Under this approach, methods can be divided into feature importance score-based explanations ( Simonyan et al., 2014 ; Sundararajan et al., 2017 ), perturbation-based explanations ( Zeiler and Fergus, 2014 ; Chen et al., 2020 ), explanations by simplification ( Ribeiro et al., 2016b ) or language explanations ( Lei et al., 2016 ; Liu et al., 2019a ). It is important to note that the categories cited above are not mutually exclusive, and explainability methods can combine several. This is exemplified in the work undertaken by Ribeiro et al. (2016a) , who developed the Local Interpretable Model-Agnostic Explanations method (LIME) combining perturbation-based and explanations by simplification.

Rationalization methods attempt to explain the outcome of a model by providing a natural language explanation ( rationale ; Lei et al., 2016 ). It has previously been observed that rationales are more straightforward to understand and easier to use since they are verbalized in human-comprehensible natural language ( DeYoung et al., 2020 ; Wang and Dou, 2022 ). It has been shown that for text classification, annotators look for language cues within a text to support their labeling decisions at a class level ( human rationales ; Chang et al., 2019 ; Strout et al., 2019 ; Jain et al., 2020 ).

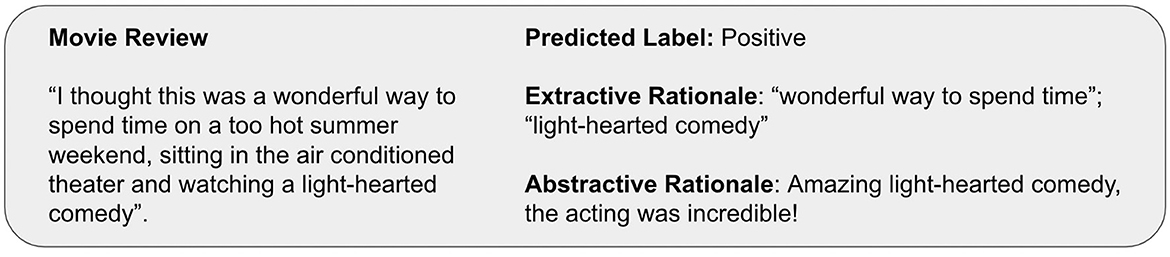

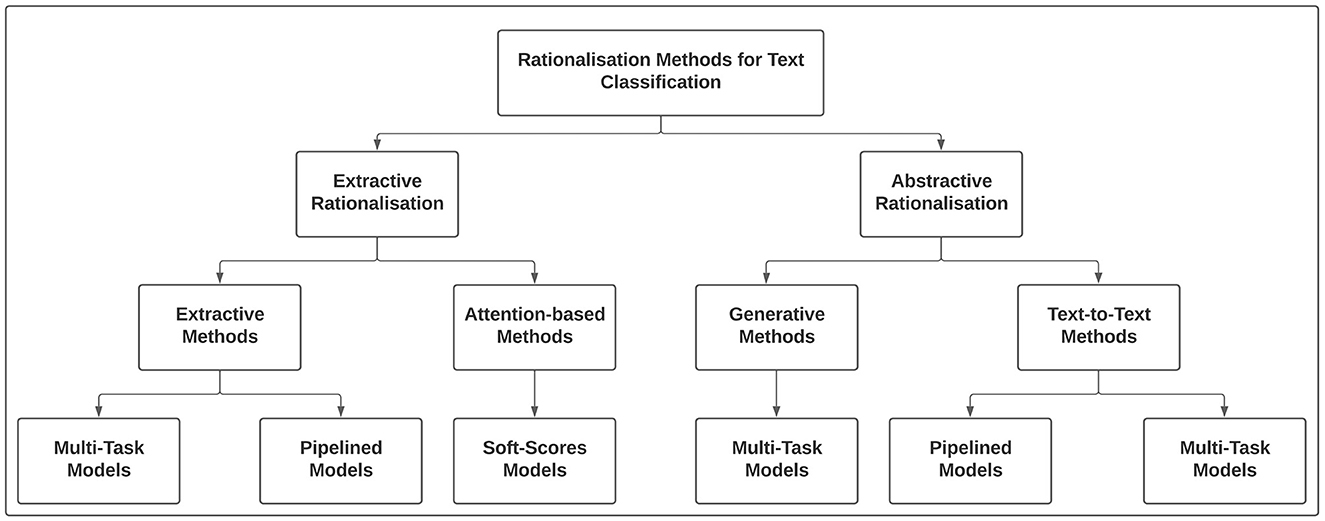

Rationales for explainable text classification can be categorized into extractive and abstractive rationales ( Figure 1 ). On the one hand, extractive rationales are a subset of the input text that support a model's prediction ( Lei et al., 2016 ; DeYoung et al., 2020 ). On the other hand, abstractive rationales are texts in natural language that are not constrained to be grounded in the input text. Like extractive rationales, they contain information about why an instance is assigned a specific label ( Camburu et al., 2018 ; Liu et al., 2019a ).

Figure 1 . Example of an extractive and abstractive rationale supporting the sentiment classification for a movie review.

This survey refers to approaches where human rationales are not provided during training, as unsupervised rationalization methods ( Lei et al., 2016 ; Yu et al., 2019 ). In contrast, we refer to those for producing rationales where human rationales are available as additional supervision signal during training, as supervised rationalization methods ( Bao et al., 2018 ; DeYoung et al., 2020 ; Arous et al., 2021 ).

Even though XAI is a relatively new research field, several studies have begun to survey explainability methods for NLP. Drawing on an extensive range of sources, Danilevsky et al. (2020) and Zini and Awad (2022) provided a comprehensive review of terminology and fundamental concepts relevant to XAI for different NLP tasks without going into the technical details of any existing method or taking into account peculiarities associated with text classification. As noted by Atanasova et al. (2024) , many explainability techniques are available for text classification. Their survey contributed to the literature by delineating a list of explainability methods used for text classification. Nonetheless, the study did not include rationalization methods and language explanations.

More recently, attention has been focussed on rationalization as a more accessible explainability technique in NLP. Wang and Dou (2022) and Gurrapu et al. (2023) discussed literature around rationalization across various NLP tasks, including challenges and research opportunities in the field. Their work, provides a high-level analysis suitable for a non-technical audience. Similarly, Hartmann and Sonntag (2022) provided a brief overview of methods for learning from human rationales beyond supervised rationalization architectures aiming to inform decision-making for specific use cases. Finally, Wiegreffe and Marasović (2021) identified a list of human-annotated data sets with textual explanations and compared the strengths and shortcomings of existing data collection methodologies. However, it is beyond the scope of this study to examine how these data sets can be used in different rationalization approaches. To the best of our knowledge, no research has been undertaken to survey rationalization methods for text classification.

This survey paper does not attempt to survey all available explainability techniques for text classification comprehensively. Instead, we will compare and contrast state-of-the-art rationalization techniques and their evaluation metrics, providing an easy-to-digest entry point for new researchers in the field. In summary, the objectives of this survey are to:

1. Study and compare different rationalization methods;

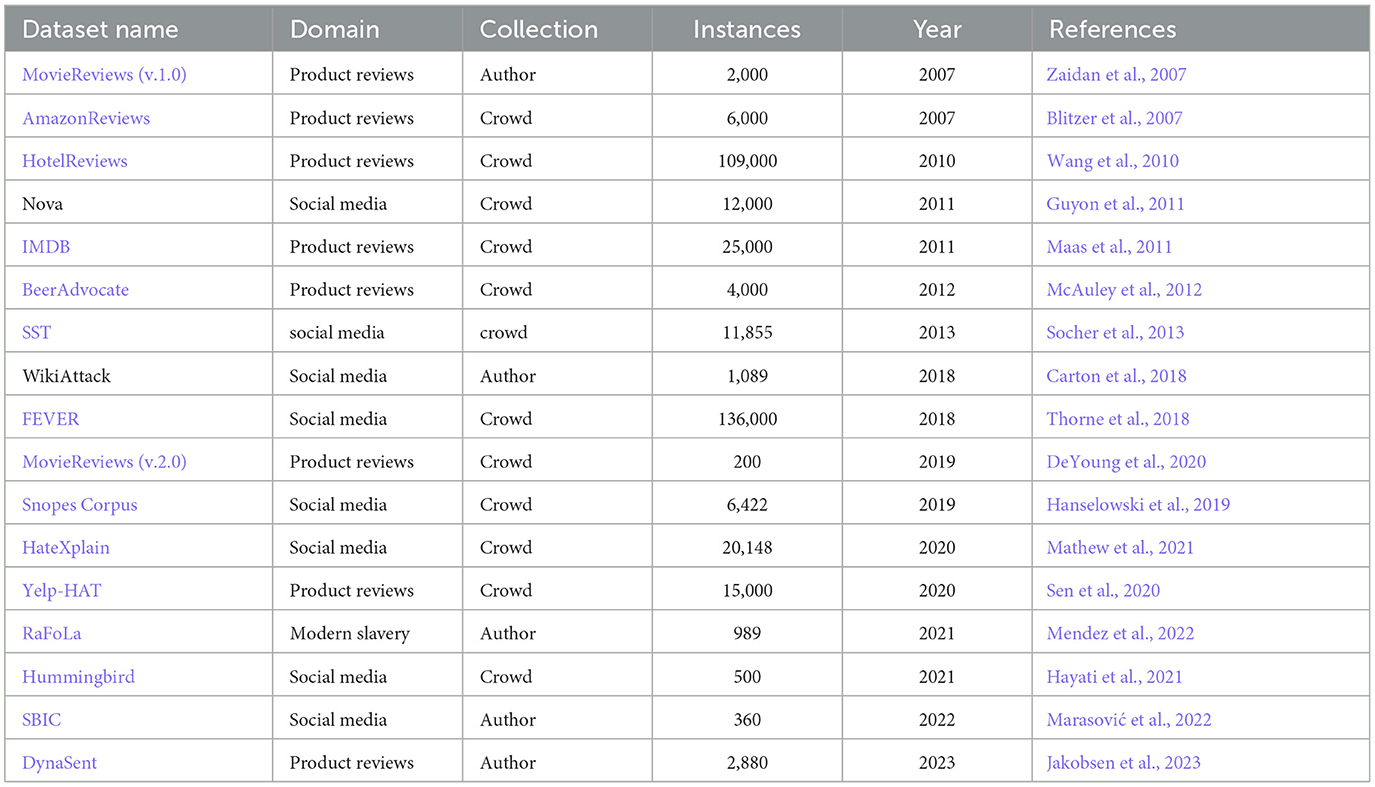

2. Compile a list of rationale-annotated data sets for text classification;

3. Describe evaluation metrics for assessing the quality of machine-generated rationales; and

4. Identify knowledge gaps that exist in generating and evaluating rationales.

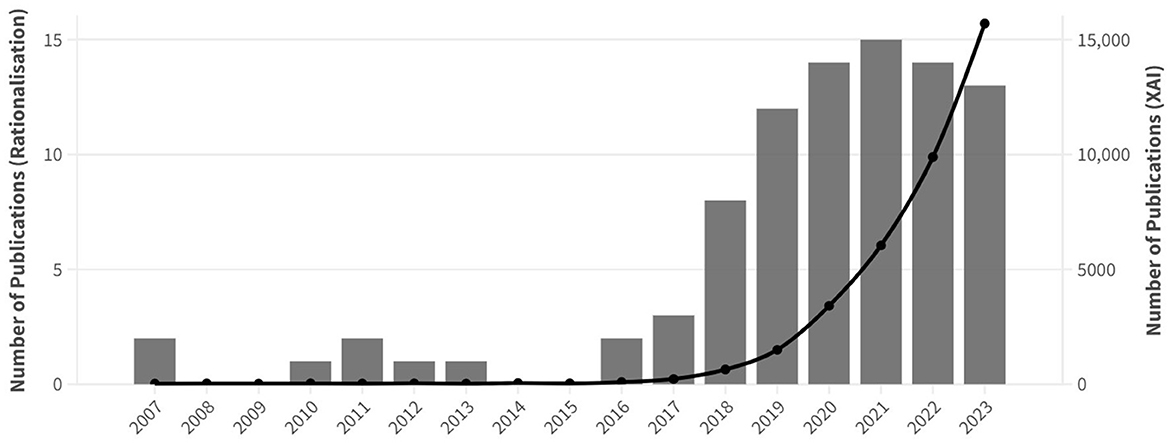

From January 2007 to December 2023, our survey paper's articles were retrieved from Google Scholar using the keywords “rationales,” “natural language explanations,” and “rationalization.” We have included 88 peer-reviewed publications on NLP and text classification from journals, books, and conference proceedings from venues such as ACL, EMNLP, LREC, COLING, NAACL, AAAI, and NeurIPS.

Figure 2 reveals that there has been a shared increase in the number of research articles on rationalization for explainable text classification since the publication of the first rationalization approach by Lei et al. (2016) . Similarly, the number of research articles on XAI has doubled yearly since 2016. While the number of articles on rationalization peaked in 2021 and has slightly dropped since then to reach 13 articles in 2023, the number of publications on XAI has kept growing steadily. It is important to note that articles published before 2016 focus on presenting rationale-annotated datasets linked to learning with rationales research instead of rationalization approaches within the XAI field.

Figure 2 . Evolution of the number of peer-reviewed publications on rationalization for text classification (bar chart, left y-axis) and XAI (line chart, right y-axis) from 2007 to 2023.

This survey article is organized as follows: Section 2 describes extractive and abstractive rationalization approaches. Section 3 compiles a list of rationale-annotated data sets for text classification. Section 4 outlines evaluation metrics proposed to evaluate and compare rationalization methods. Finally, Section 5 discusses challenges, points out gaps and presents recommendations for future research on rationalization for explainable text classification.

2 Rationalization methods for text classification

We now formalize extractive and abstractive rationalization approaches and compare them in the context of text classification. We define a standard text classification in which we are given an input sequence x = [ x 1 , x 2 , x 3 , …, x l ], where x i is the i -th word of the sequence, and l is the sequence length. The learning problem is to assign the input sequence x to one or multiple labels in y ∈{1, …, c }, where c is the number of classes.

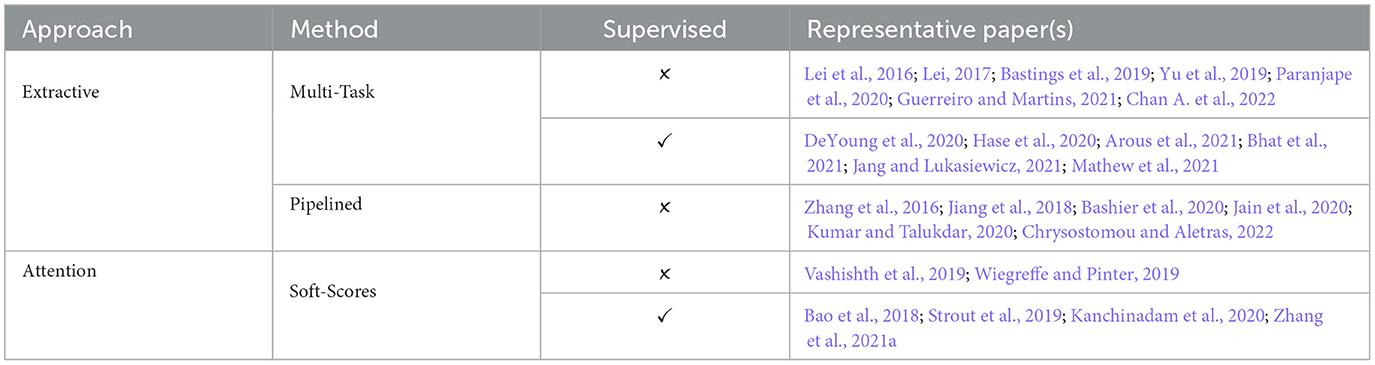

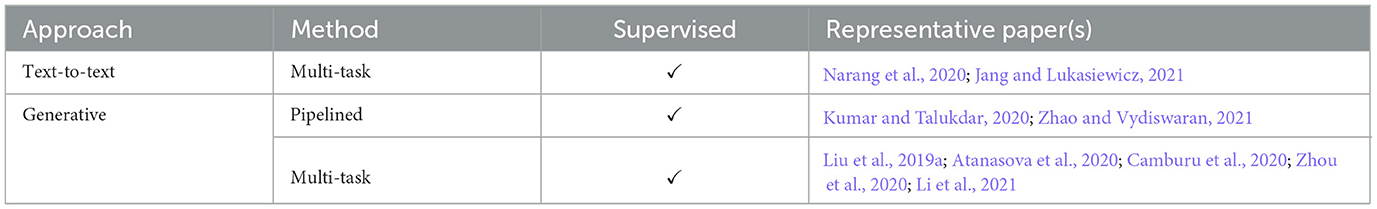

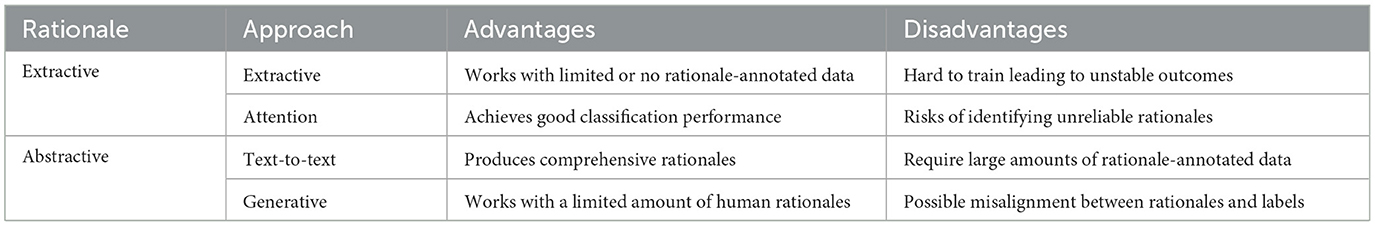

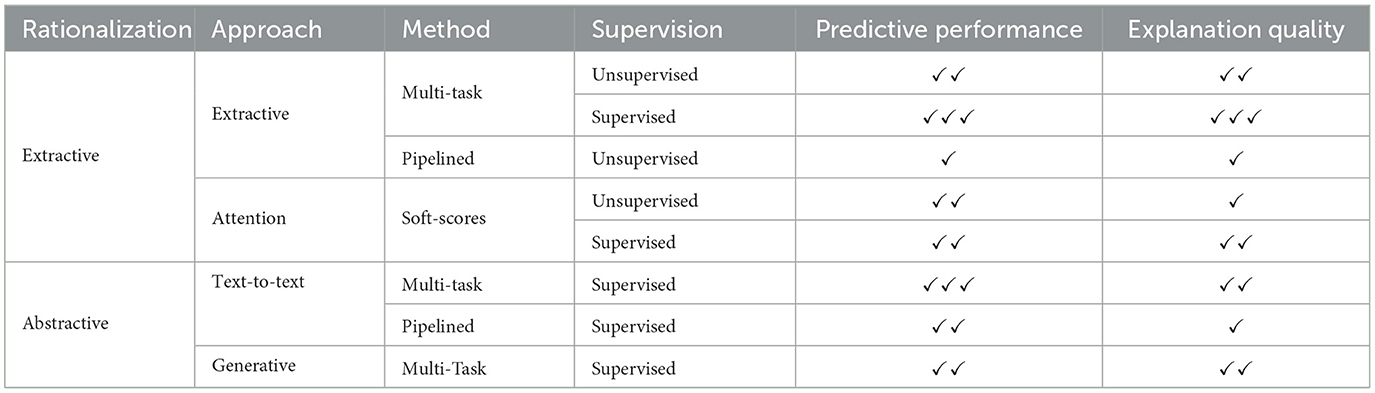

Figure 3 presents an overview of rationalization methods for producing extractive and abstractive rationales. While extractive rationalization models can be categorized into extractive or attention-based methods, abstractive rationalization models can be classified into generative and text-to-text methods. Finally, the component of both extractive and abstractive methods can be trained either using multi-task learning or independently as pipelined architecture.

Figure 3 . Overview of extractive and abstractive rationalization approaches in explainable text classification.

2.1 Extractive rationalization

In extractive rationalization, the goal is to make a text classifier explainable by uncovering parts of the input sequence that the prediction relies on the most ( Lei et al., 2016 ). To date, researchers have proposed two approaches for extractive rationalization for explainable text classification: (i) extractive methods, which first extract evidence from the original text and then make a prediction solely based on the extracted evidence ( Lei et al., 2016 ; Jain et al., 2020 ; Arous et al., 2021 ), and (ii) attention-based methods, which leverage the self-attention mechanism to show the importance of words through their attention weights ( Bao et al., 2018 ; Vashishth et al., 2019 ; Wiegreffe and Pinter, 2019 ).

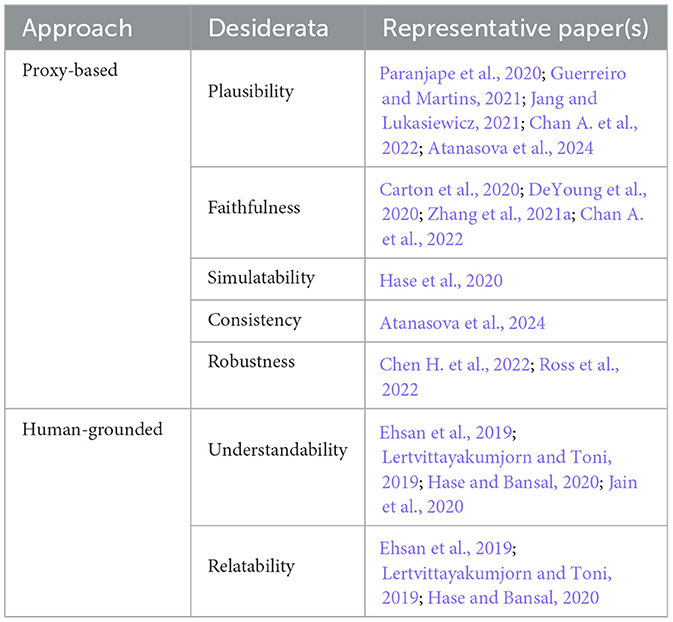

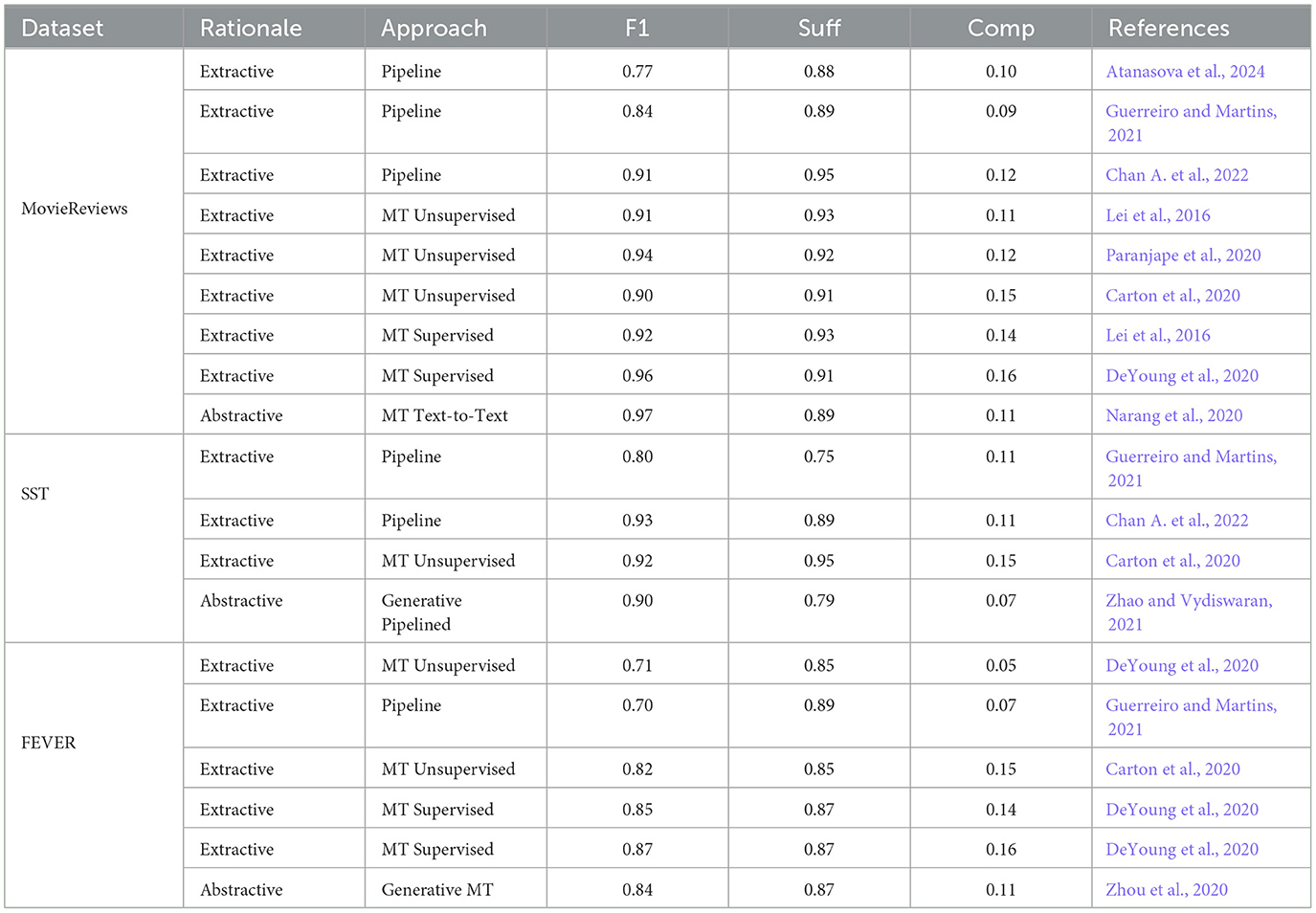

Table 1 presents an overview of the current techniques for extractive rationalization, where we specify methods, learning approaches taken and their most influential references.

Table 1 . Overview of common approaches for extractive rationalization.

2.1.1 Extractive methods

Most research on extractive methods has been carried out using an encoder-decoder framework ( Lei et al., 2016 ; DeYoung et al., 2020 ; Arous et al., 2021 ). The encoder enc ( x ) works as a tagging model, where each word in the input sequence receives a binary tag indicating whether it is included in the rationales r ( Zaidan et al., 2007 ). The decoder dec ( x, r ) then accepts only the input highlighted as rationales and maps them to one or more target categories ( Bao et al., 2018 ).

The selection of words is performed by an encoder , which is a parameterized mapping enc ( x ) that extracts rationales from input sequences as r = { x i | z i = 1, x i ∈ x }, where z i ∈{0, 1} is a binary tag that indicates whether the word x i is selected or not. In an extractive setting, the rationale r must include only a few words or sentences, and dec ( enc ( x, r )) should result in nearly the same target vector as the original input when passed through the decoder dec ( x ) ( Otter et al., 2020 ; Wang and Dou, 2022 ).

2.1.1.1 Multi-task models

Lei et al. (2016) pioneered the idea of extracting rationales using the encoder-decoder architecture. They proposed utilizing two models and training them jointly to minimize a cost function composed of a classification loss and sparsity-inducing regularization, responsible for keeping the rationales short and coherent. They identified rationales within the input text by assigning a binary Bernoulli variable to each word. Unfortunately, minimizing the expected cost was challenging since it involved summing over all possible choices of rationales in the input sequence. Consequently, they suggested training these models jointly via REINFORCE-based optimization ( Williams, 1992 ). REINFORCE involves sampling rationales from the encoder and training the model to generate explanations using reinforcement learning. As a result, the model is rewarded for producing rationales that align with desiderata defined in its cost function ( Zhang et al., 2021b ).

The key components of the solution proposed by Lei et al. (2016) are binary latent variables and sparsity-inducing regularization. As a result, their solution is marked by non-differentiability. Bastings et al. (2019) proposed to replace the Bernoulli variables with rectified continuous random variables, amenable for reparameterization and for which gradient estimation is possible without REINFORCE. Along the same lines, Madani and Minervini (2023) used Adaptive Implicit Maximum Likelihood ( Minervini et al., 2023 ), a recently proposed low-variance and low-bias gradient estimation method for discrete distribution to back-propagate through the rationale extraction process. Paranjape et al. (2020) emphasized the challenges around the sparsity-accuracy trade-off in norm-minimization methods such as the ones proposed by Lei et al. (2016) and Bastings et al. (2019) . In contrast, they showed that it is possible to better manage this trade-off by optimizing a bound on the Information Bottleneck objective ( Mukherjee, 2019 ) using the divergence between the encoder and a prior distribution with controllable sparsity levels.

Over the last 15 years, research on learning with rationales has established that incorporating human explanations during model training can improve performance and robustness against spurious correlations ( Zaidan et al., 2007 ; Strout et al., 2019 ). Nonetheless, studies on explainability started addressing how human rationales can also help to enhance the quality of explanations for different NLP tasks ( Strout et al., 2019 ; Arous et al., 2021 ) only in the past 4 years.

To determine the impact of a supervised approach for extractive rationalization, DeYoung et al. (2020) adapted the implementation of Lei et al. (2016) , incorporating human rationales during training by modifying the model's cost function. Similarly, Bhat et al. (2021) developed a multi-task teacher-student framework based on self-training language models with limited task-specific labels and rationales. It is important to note that in the variants of the encoder-decoder architecture using human rationales, the final cost function is usually a composite of the classification loss, regularizers on rationale desiderata, and the loss over rationale predictions ( DeYoung et al., 2020 ; Gurrapu et al., 2023 ).

One of the main drawbacks of multi-task learning architectures for extractive rationales is that it is challenging to train the encoder and decoder jointly under instance-level supervision ( Zhang et al., 2016 ; Jiang et al., 2018 ). As described before, these methods sample rationales using regularization to encourage sparsity and contiguity and make it necessary to estimate gradients using either the REINFORCE method ( Lei et al., 2016 ) or reparameterized gradients ( Bastings et al., 2019 ). Both techniques complicate training and require careful hyperparameter tuning, leading to unstable solutions ( Jain et al., 2020 ; Kumar and Talukdar, 2020 ).

Furthermore, recent evidence suggests that multi-task rationalization models may also incur what is called the degeneration problem, where they produce nonsensical rationales due to the encoder overfitting to the noise generated by the decoder ( Madsen et al., 2022 ; Wang and Dou, 2022 ; Liu et al., 2023 ). To tackle this challenge, Liu et al. (2022) introduced a Folded Rationalization approach that folds the two stages of extractive rationalization models into one using a unified text representation mechanism for the encoder and decoder. Using a different approach, Jiang et al. (2023) proposed the YOFO (You Only Forward Once), a simplified single-phase framework with a pre-trained language model to perform prediction and rationalization. It is essential to highlight that rationales extracted using the YOFO framework aim only to support predictions and are not used directly to make model predictions.

2.1.1.2 Pipelined models

Pipelined models are a simplified version of the encoder-decoder architecture in which, first, the encoder is configured to extract the rationales. Then, the decoder is trained separately to perform prediction using only rationales ( Zhang et al., 2016 ; Jain et al., 2020 ). It is important to note that no parameters are shared between the two models and that rationales extracted based on this approach have been learned in an unsupervised manner since the encoder does not have access to human rationales during training.

To avoid the complexity of training a multi-task learning architecture, Jain et al. (2020) introduced FRESH (Faithful Rationale Extraction from Saliency tHresholding). Their scheme proposed using arbitrary feature importance scores to identify the rationales within the input sequence. An independent classifier is then trained exclusively on snippets the encoder provides to predict target labels. Similarly, Chrysostomou and Aletras (2022) proposed a method that also uses gradient-based scores as the encoder. However, their method incorporated additional constraints regarding length and contiguity for selecting rationales. Their work shows that adding these additional constraints can enhance the coherence and relevance of the extracted rationales, ensuring they are concise and contextually connected, thus improving the understanding and usability of the model in real-world applications.