Types of Interviews in Research | Guide & Examples

Published on March 10, 2022 by Tegan George. Revised on June 22, 2023.

An interview is a qualitative research method that relies on asking questions in order to collect data. Interviews involve two or more people, one of whom is the interviewer asking the questions.

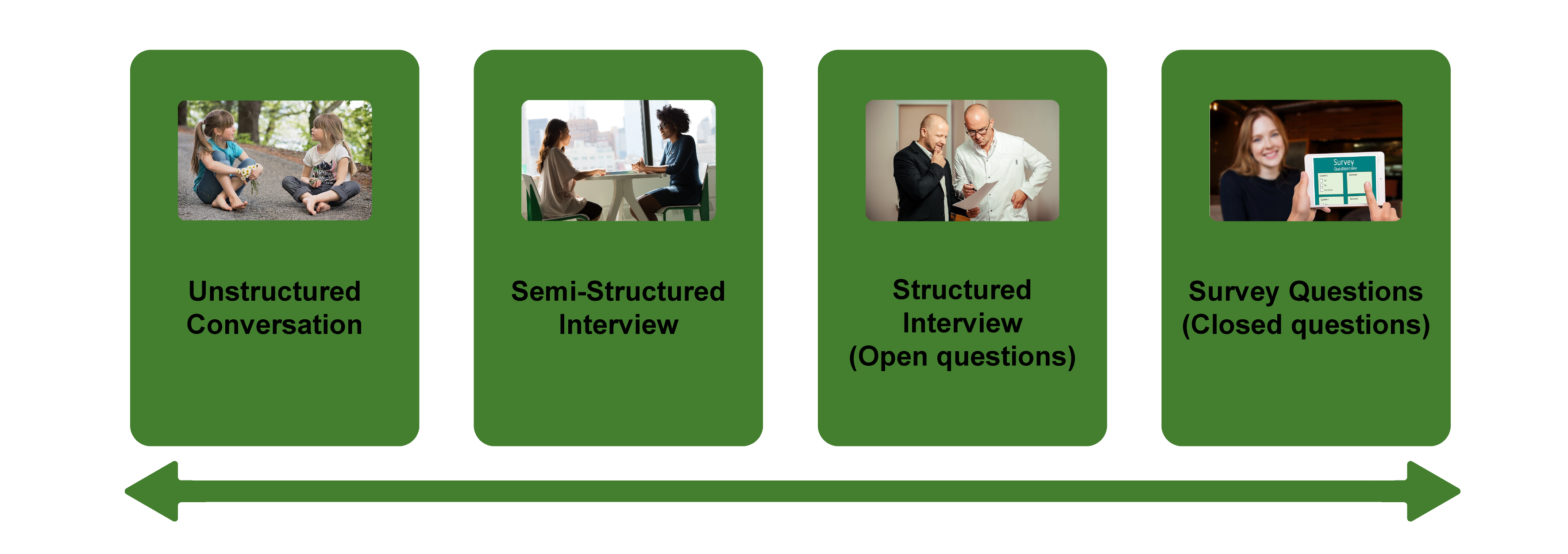

There are several types of interviews, often differentiated by their level of structure.

- Structured interviews have predetermined questions asked in a predetermined order.

- Unstructured interviews are more free-flowing.

- Semi-structured interviews fall in between.

Interviews are commonly used in market research, social science, and ethnographic research.

Table of contents

- What is a structured interview?
- What is a semi-structured interview?
- What is an unstructured interview?
- What is a focus group?
- Examples of interview questions
- Advantages and disadvantages of interviews
- Other interesting articles
- Frequently asked questions about types of interviews

Structured interviews have predetermined questions in a set order. They are often closed-ended, featuring dichotomous (yes/no) or multiple-choice questions. While open-ended structured interviews exist, they are much less common. The types of questions asked make structured interviews a predominantly quantitative tool.

Asking set questions in a set order can help you see patterns among responses, and it allows you to easily compare responses between participants while keeping other factors constant. This can mitigate research biases and lead to higher reliability and validity. However, structured interviews can be overly formal, as well as limited in scope and flexibility.

Structured interviews work best when:

- You feel very comfortable with your topic. This will help you formulate your questions most effectively.

- You have limited time or resources. Structured interviews are a bit more straightforward to analyze because of their closed-ended nature, and can be a doable undertaking for an individual.

- Your research question depends on holding environmental conditions between participants constant.

Semi-structured interviews are a blend of structured and unstructured interviews. While the interviewer has a general plan for what they want to ask, the questions do not have to follow a particular phrasing or order.

Semi-structured interviews are often open-ended, allowing for flexibility, but follow a predetermined thematic framework, giving a sense of order. For this reason, they are often considered “the best of both worlds.”

However, if the questions differ substantially between participants, it can be challenging to look for patterns, lessening the generalizability and validity of your results.

Semi-structured interviews work best when:

- You have prior interview experience. It’s easier than you think to accidentally ask a leading question when coming up with questions on the fly. Overall, spontaneous questions are much more difficult than they may seem.

- Your research question is exploratory in nature. The answers you receive can help guide your future research.

An unstructured interview is the most flexible type of interview. The questions and the order in which they are asked are not set. Instead, the interview can proceed more spontaneously, based on the participant’s previous answers.

Unstructured interviews are by definition open-ended. This flexibility can help you gather detailed information on your topic, while still allowing you to observe patterns between participants.

However, so much flexibility means that they can be very challenging to conduct properly. You must be very careful not to ask leading questions, as biased responses can lead to lower reliability or even invalidate your research.

Unstructured interviews work best when:

- You have a solid background in your research topic and have conducted interviews before.

- Your research question is exploratory in nature, and you are seeking descriptive data that will deepen and contextualize your initial hypotheses.

- Your research necessitates forming a deeper connection with your participants, encouraging them to feel comfortable revealing their true opinions and emotions.

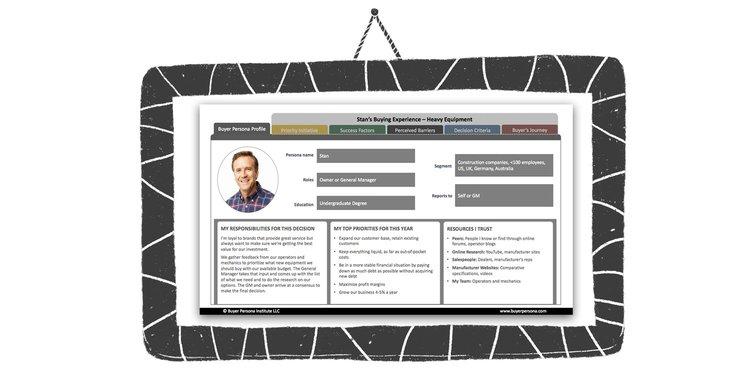

A focus group brings together a group of participants to answer questions on a topic of interest in a moderated setting. Focus groups are qualitative in nature and often study the group’s dynamic and body language in addition to their answers. Responses can guide future research on consumer products and services, human behavior, or controversial topics.

Focus groups can provide more nuanced and unfiltered feedback than individual interviews and are easier to organize than experiments or large surveys. However, their small size leads to low external validity, and as a researcher you may be tempted to “cherry-pick” responses that fit your hypotheses.

Focus groups work best when:

- Your research focuses on the dynamics of group discussion or real-time responses to your topic.

- Your questions are complex and rooted in feelings, opinions, and perceptions that cannot be answered with a “yes” or “no.”

- Your topic is exploratory in nature, and you are seeking information that will help you uncover new questions or future research ideas.

Depending on the type of interview you are conducting, your questions will differ in style, phrasing, and intention. Structured interview questions are set and precise, while the other types of interviews allow for more open-endedness and flexibility.

Here are some examples.

- Do you like dogs? Yes/No

- Do you associate dogs with feeling: happy; somewhat happy; neutral; somewhat unhappy; unhappy

- If yes, name one attribute of dogs that you like.

- If no, name one attribute of dogs that you don’t like.

- What feelings do dogs bring out in you?

- When you think more deeply about this, what experiences would you say your feelings are rooted in?

Interviews are a great research tool. They allow you to gather rich information and draw more detailed conclusions than other research methods, taking into consideration nonverbal cues, off-the-cuff reactions, and emotional responses.

However, they can also be time-consuming and deceptively challenging to conduct properly. Smaller sample sizes can cause their validity and reliability to suffer, and there is an inherent risk of interviewer effect arising from accidentally leading questions.

Here are some advantages and disadvantages of each type of interview that can help you decide if you’d like to utilize this research method.

| Type of interview | Advantages | Disadvantages |
|---|---|---|
| Structured interview | | |
| Semi-structured interview | | |
| Unstructured interview | | |
| Focus group | …, since there are multiple people present | |

The four most common types of interviews are:

- Structured interviews: The questions are predetermined in both topic and order.

- Semi-structured interviews: A few questions are predetermined, but other questions aren’t planned.

- Unstructured interviews: None of the questions are predetermined.

- Focus group interviews: The questions are presented to a group instead of one individual.

The interviewer effect is a type of bias that emerges when a characteristic of an interviewer (race, age, gender identity, etc.) influences the responses given by the interviewee.

There is a risk of an interviewer effect in all types of interviews, but it can be mitigated by writing high-quality interview questions.

Social desirability bias is the tendency for interview participants to give responses that will be viewed favorably by the interviewer or other participants. It occurs in all types of interviews and surveys, but is most common in semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias can be mitigated by ensuring participants feel at ease and comfortable sharing their views. Make sure to pay attention to your own body language and any physical or verbal cues, such as nodding or widening your eyes.

This type of bias can also occur in observations if the participants know they’re being observed. They might alter their behavior accordingly.

A focus group is a research method that brings together a small group of people to answer questions in a moderated setting. The group is chosen due to predefined demographic traits, and the questions are designed to shed light on a topic of interest. It is one of four types of interviews.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

George, T. (2023, June 22). Types of Interviews in Research | Guide & Examples. Scribbr. Retrieved June 9, 2024, from https://www.scribbr.com/methodology/interviews-research/

Chapter 11. Interviewing

Introduction

Interviewing people is at the heart of qualitative research. It is not merely a way to collect data but an intrinsically rewarding activity—an interaction between two people that holds the potential for greater understanding and interpersonal development. Unlike many of our daily interactions with others that are fairly shallow and mundane, sitting down with a person for an hour or two and really listening to what they have to say is a profound and deep enterprise, one that can provide not only “data” for you, the interviewer, but also self-understanding and a feeling of being heard for the interviewee. I always approach interviewing with a deep appreciation for the opportunity it gives me to understand how other people experience the world. That said, there is not one kind of interview but many, and some of these are shallower than others. This chapter will provide you with an overview of interview techniques but with a special focus on the in-depth semistructured interview guide approach, which is the approach most widely used in social science research.

An interview can be variously defined as “a conversation with a purpose” (Lune and Berg 2018) and an attempt to understand the world from the point of view of the person being interviewed: “to unfold the meaning of peoples’ experiences, to uncover their lived world prior to scientific explanations” (Kvale 2007). It is a form of active listening in which the interviewer steers the conversation to subjects and topics of interest to their research but also manages to leave enough space for those interviewed to say surprising things. Achieving that balance is a tricky thing, which is why most practitioners believe interviewing is both an art and a science. In my experience as a teacher, there are some students who are “natural” interviewers (often they are introverts), but anyone can learn to conduct interviews, and everyone, even those of us who have been doing this for years, can improve their interviewing skills. This might be a good time to highlight the fact that the interview is a joint product of interviewer and interviewee and that this product is only as good as the rapport established between the two participants. Active listening is the key to establishing this necessary rapport.

Patton (2002) makes the argument that we use interviews because there are certain things that are not observable. In particular, “we cannot observe feelings, thoughts, and intentions. We cannot observe behaviors that took place at some previous point in time. We cannot observe situations that preclude the presence of an observer. We cannot observe how people have organized the world and the meanings they attach to what goes on in the world. We have to ask people questions about those things” (341).

Types of Interviews

There are several distinct types of interviews. Imagine a continuum (figure 11.1). On one side are unstructured conversations—the kind you have with your friends. No one is in control of those conversations, and what you talk about is often random—whatever pops into your head. There is no secret, underlying purpose to your talking—if anything, the purpose is to talk to and engage with each other, and the words you use and the things you talk about are a little beside the point. An unstructured interview is a little like this informal conversation, except that one of the parties to the conversation (you, the researcher) does have an underlying purpose, and that is to understand the other person. You are not friends speaking for no purpose, but it might feel just as unstructured to the “interviewee” in this scenario. That is one side of the continuum. On the other side are fully structured and standardized survey-type questions asked face-to-face. Here it is very clear who is asking the questions and who is answering them. This doesn’t feel like a conversation at all! A lot of people new to interviewing have this (erroneously!) in mind when they think about interviews as data collection. Somewhere in the middle of these two extreme cases is the “semistructured” interview, in which the researcher uses an “interview guide” to gently move the conversation to certain topics and issues. This is the primary form of interviewing for qualitative social scientists and will be what I refer to as interviewing for the rest of this chapter, unless otherwise specified.

Informal (unstructured conversations). This is the most “open-ended” approach to interviewing. It is particularly useful in conjunction with observational methods (see chapters 13 and 14). There are no predetermined questions. Each interview will be different. Imagine you are researching the Oregon Country Fair, an annual event in Veneta, Oregon, that includes live music, artisan craft booths, face painting, and a lot of people walking through forest paths. It’s unlikely that you will be able to get a person to sit down with you and talk intensely about a set of questions for an hour and a half. But you might be able to sidle up to several people and engage with them about their experiences at the fair. You might have a general interest in what attracts people to these events, so you could start a conversation by asking strangers why they are here or why they come back every year. That’s it. Then you have a conversation that may lead you anywhere. Maybe one person tells a long story about how their parents brought them here when they were a kid. A second person talks about how this is better than Burning Man. A third person shares their favorite traveling band. And yet another enthuses about the public library in the woods. During your conversations, you also talk about a lot of other things—the weather, the utilikilts for sale, the fact that a favorite food booth has disappeared. It’s all good. You may not be able to record these conversations. Instead, you might jot down notes on the spot and then, when you have the time, write down as much as you can remember about the conversations in long fieldnotes. Later, you will have to sit down with these fieldnotes and try to make sense of all the information (see chapters 18 and 19).

Interview guide (semistructured interview). This is the primary type employed by social science qualitative researchers. The researcher creates an “interview guide” in advance, which she uses in every interview. In theory, every person interviewed is asked the same questions. In practice, every person interviewed is asked about mostly the same topics but not always the same questions, as the whole point of a “guide” is that it guides the direction of the conversation but does not command it. The guide is typically between five and ten questions or question areas, sometimes with suggested follow-ups or prompts. For example, one question might be “What was it like growing up in Eastern Oregon?” with prompts such as “Did you live in a rural area? What kind of high school did you attend?” to help the conversation develop. These interviews generally take place in a quiet place (not a busy walkway during a festival) and are recorded. The recordings are transcribed, and those transcriptions then become the “data” that is analyzed (see chapters 18 and 19). The conventional length of one of these types of interviews is between one hour and two hours, optimally ninety minutes. Less than one hour doesn’t allow for much development of questions and thoughts, and two hours (or more) is a lot of time to ask someone to sit still and answer questions. If you have a lot of ground to cover, and the person is willing, I highly recommend two separate interview sessions, with the second session being slightly shorter than the first (e.g., ninety minutes the first day, sixty minutes the second). There are lots of good reasons for this, but the most compelling one is that this allows you to listen to the first day’s recording and catch anything interesting you might have missed in the moment and so develop follow-up questions that can probe further. This also allows the person being interviewed to have some time to think about the issues raised in the interview and go a little deeper with their answers.

Standardized questionnaire with open responses (structured interview). This is the type of interview a lot of people have in mind when they hear “interview”: a researcher comes to your door with a clipboard and proceeds to ask you a series of questions. These questions are all the same whoever answers the door; they are “standardized.” Both the wording and the exact order are important, as people’s responses may vary depending on how and when a question is asked. These are qualitative only in that the questions allow for “open-ended responses”: people can say whatever they want rather than select from a predetermined menu of responses. For example, a survey I collaborated on included this open-ended response question: “How does class affect one’s career success in sociology?” Some of the answers were simply one word long (e.g., “debt”), and others were long statements with stories and personal anecdotes. It is possible to be surprised by the responses. Although it’s a stretch to call this kind of questioning a conversation, it does allow the person answering the question some degree of freedom in how they answer.

Survey questionnaire with closed responses (not an interview!). Standardized survey questions with specific answer options (i.e., closed responses) are not really interviews at all, and they do not generate qualitative data. For example, if we included five options for the question “How does class affect one’s career success in sociology?”—(1) debt, (2) social networks, (3) alienation, (4) family doesn’t understand, (5) type of grad program—we leave no room for surprises at all. Instead, we would most likely look at patterns around these responses, thinking quantitatively rather than qualitatively (e.g., using regression analysis techniques, we might find that working-class sociologists were twice as likely to bring up alienation). It can sometimes be confusing for new students because the very same survey can include both closed-ended and open-ended questions. The key is to think about how these will be analyzed and to what level surprises are possible. If your plan is to turn all responses into a number and make predictions about correlations and relationships, you are no longer conducting qualitative research. This is true even if you are conducting this survey face-to-face with a real live human. Closed-response questions are not conversations of any kind, purposeful or not.
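To make "thinking quantitatively" about closed responses concrete, here is a minimal Python sketch. The response data are invented purely for illustration and do not come from the survey mentioned above; the option labels reuse some of the five example options, and the `tally` helper is a hypothetical name. It shows only the simplest kind of pattern-finding (counting), not regression.

```python
from collections import Counter

# Invented closed responses: each respondent picked one predetermined option.
closed_responses = [
    "debt", "alienation", "debt",
    "social networks", "alienation", "debt",
]

def tally(responses):
    """Count how often each predetermined option was chosen."""
    return Counter(responses)

counts = tally(closed_responses)

# Share of respondents choosing each option (a number, not a story).
shares = {option: n / len(closed_responses) for option, n in counts.items()}

for option, n in counts.most_common():
    print(f"{option}: {n} ({shares[option]:.0%})")
```

Nothing in this tally can surprise you: an answer outside the predetermined options simply has no place to go, which is why this kind of analysis is not qualitative.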

In summary, the semistructured interview guide approach is the predominant form of interviewing for social science qualitative researchers because it allows a high degree of freedom of responses from those interviewed (thus allowing for novel discoveries) while still maintaining some connection to a research question area or topic of interest. The rest of the chapter assumes the employment of this form.

Creating an Interview Guide

Your interview guide is the instrument used to bridge your research question(s) and what the people you are interviewing want to tell you. Unlike a standardized questionnaire, the questions actually asked do not need to be exactly what you have written down in your guide. The guide is meant to create space for those you are interviewing to talk about the phenomenon of interest, but sometimes you are not even sure what that phenomenon is until you start asking questions. A priority in creating an interview guide is to ensure it offers space. One of the worst mistakes is to create questions that are so specific that the person answering them will not stray. Relatedly, questions that sound “academic” will shut down a lot of respondents. A good interview guide invites respondents to talk about what is important to them, not feel like they are performing or being evaluated by you.

Good interview questions should not sound like your “research question” at all. For example, let’s say your research question is “How do patriarchal assumptions influence men’s understanding of climate change and responses to climate change?” It would be worse than unhelpful to ask a respondent, “How do your assumptions about the role of men affect your understanding of climate change?” You need to unpack this into manageable nuggets that pull your respondent into the area of interest without leading him anywhere. You could start by asking him what he thinks about climate change in general. Or, even better, whether he has any concerns about heatwaves or increased tornadoes or polar icecaps melting. Once he starts talking about that, you can ask follow-up questions that bring in issues around gendered roles, perhaps asking if he is married (to a woman) and whether his wife shares his thoughts and, if not, how they negotiate that difference. The fact is, you won’t really know the right questions to ask until he starts talking.

There are several distinct types of questions that can be used in your interview guide, either as main questions or as follow-up probes. If you remember that the point is to leave space for the respondent, you will craft a much more effective interview guide! You will also want to think about the place of time in both the questions themselves (past, present, future orientations) and the sequencing of the questions.

Researcher Note

Suggestion: As you read the next three sections (types of questions, temporality, question sequence), have in mind a particular research question, and try to draft questions and sequence them in a way that opens space for a discussion that helps you answer your research question.

Types of Questions

Experience and behavior questions ask about what a respondent does regularly (their behavior) or has done (their experience). These are relatively easy questions for people to answer because they appear more “factual” and less subjective. This makes them good opening questions. For the study on climate change above, you might ask, “Have you ever experienced an unusual weather event? What happened?” Or “You said you work outside? What is a typical summer workday like for you? How do you protect yourself from the heat?”

Opinion and values questions, in contrast, ask questions that get inside the minds of those you are interviewing. “Do you think climate change is real? Who or what is responsible for it?” are two such questions. Note that you don’t have to literally ask, “What is your opinion of X?” but you can find a way to ask the specific question relevant to the conversation you are having. These questions are a bit trickier to ask because the answers you get may depend in part on how your respondent perceives you and whether they want to please you or not. We’ve talked a fair amount about being reflective. Here is another place where this comes into play. You need to be aware of the effect your presence might have on the answers you are receiving and adjust accordingly. If you are a woman who is perceived as liberal asking a man who identifies as conservative about climate change, there is a lot of subtext that can be going on in the interview. There is no one right way to resolve this, but you must at least be aware of it.

Feeling questions are questions that ask respondents to draw on their emotional responses. It’s pretty common for academic researchers to forget that we have bodies and emotions, but people’s understandings of the world often operate at this affective level, sometimes unconsciously or barely consciously. It is a good idea to include questions that leave space for respondents to remember, imagine, or relive emotional responses to particular phenomena. “What was it like when you heard your cousin’s house burned down in that wildfire?” doesn’t explicitly use any emotion words, but it allows your respondent to remember what was probably a pretty emotional day. And if they respond in an emotionally neutral way, that is pretty interesting data too. Note that asking someone “How do you feel about X” is not always going to evoke an emotional response, as they might simply turn around and respond with “I think that…” It is better to craft a question that actually pushes the respondent into the affective category. This might be a specific follow-up to an experience and behavior question—for example, “You just told me about your daily routine during the summer heat. Do you worry it is going to get worse?” or “Have you ever been afraid it will be too hot to get your work accomplished?”

Knowledge questions ask respondents what they actually know about something factual. We have to be careful when we ask these types of questions so that respondents do not feel like we are evaluating them (which would shut them down), but, for example, it is helpful to know when you are having a conversation about climate change that your respondent does in fact know that unusual weather events have increased and that these have been attributed to climate change! Asking these questions can set the stage for deeper questions and can ensure that the conversation makes the same kind of sense to both participants. For example, a conversation about political polarization can be put back on track once you realize that the respondent doesn’t really have a clear understanding that there are two parties in the US. Instead of asking a series of questions about Republicans and Democrats, you might shift your questions to talk more generally about political disagreements (e.g., “people against abortion”). And sometimes what you do want to know is the level of knowledge about a particular program or event (e.g., “Are you aware you can discharge your student loans through the Public Service Loan Forgiveness program?”).

Sensory questions call on all senses of the respondent to capture deeper responses. These are particularly helpful in sparking memory. “Think back to your childhood in Eastern Oregon. Describe the smells, the sounds…” Or you could use these questions to help a person access the full experience of a setting they customarily inhabit: “When you walk through the doors to your office building, what do you see? Hear? Smell?” As with feeling questions, these questions often supplement experience and behavior questions. They are another way of allowing your respondent to report fully and deeply rather than remain on the surface.

Creative questions employ illustrative examples, suggested scenarios, or simulations to get respondents to think more deeply about an issue, topic, or experience. There are many options here. In The Trouble with Passion, Erin Cech (2021) provides a scenario in which “Joe” is trying to decide whether to stay at his decent but boring computer job or follow his passion by opening a restaurant. She asks respondents, “What should Joe do?” Their answers illuminate the attraction of “passion” in job selection. In my own work, I have used a news story about an upwardly mobile young man who no longer has time to see his mother and sisters to probe respondents’ feelings about the costs of social mobility. Jessi Streib and Betsy Leondar-Wright have used single-page cartoon “scenes” to elicit evaluations of potential racial discrimination, sexual harassment, and classism. Barbara Sutton (2010) has employed lists of words (“strong,” “mother,” “victim”) on notecards she fans out and asks her female respondents to select and discuss.

Background/Demographic Questions

You most definitely will want to know more about the person you are interviewing in terms of conventional demographic information, such as age, race, gender identity, occupation, and educational attainment. These are not questions that normally open up inquiry. [1] For this reason, my practice has been to include a separate “demographic questionnaire” sheet that I ask each respondent to fill out at the conclusion of the interview. Only include those aspects that are relevant to your study. For example, if you are not exploring religion or religious affiliation, do not include questions about a person’s religion on the demographic sheet. See the example provided at the end of this chapter.

Temporality

Any type of question can have a past, present, or future orientation. For example, if you are asking a behavior question about workplace routine, you might ask the respondent to talk about past work, present work, and ideal (future) work. Similarly, if you want to understand how people cope with natural disasters, you might ask your respondent how they felt then during the wildfire and now in retrospect and whether and to what extent they have concerns for future wildfire disasters. It’s a relatively simple suggestion—don’t forget to ask about past, present, and future—but it can have a big impact on the quality of the responses you receive.

Question Sequence

Having a list of good questions or good question areas is not enough to make a good interview guide. You will want to pay attention to the order in which you ask your questions. Even though any one respondent can derail this order (perhaps by jumping to answer a question you haven’t yet asked), a good advance plan is always helpful. When thinking about sequence, remember that your goal is to get your respondent to open up to you and to say things that might surprise you. To establish rapport, it is best to start with nonthreatening questions. Asking about the present is often the safest place to begin, followed by the past (they have to know you a little bit to get there), and lastly, the future (talking about hopes and fears requires the most rapport). To allow for surprises, it is best to move from very general questions to more particular questions only later in the interview. This ensures that respondents have the freedom to bring up the topics that are relevant to them rather than feel like they are constrained to answer you narrowly. For example, refrain from asking about particular emotions until these have come up previously—don’t lead with them. Often, your more particular questions will emerge only during the course of the interview, tailored to what is emerging in conversation.
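If you keep your draft questions in a spreadsheet or script, the two sequencing rules above (general before particular; present, then past, then future) can be sketched as a simple sort. This is only one possible way to combine the rules: putting generality first and time orientation second is my assumption for the sketch, and the `Question` class, its fields, and the example questions are hypothetical.

```python
from dataclasses import dataclass

# Present is usually the safest start, then past, then future.
TIME_ORDER = {"present": 0, "past": 1, "future": 2}

@dataclass
class Question:
    text: str
    time: str         # "present", "past", or "future"
    specificity: int  # 0 = very general; larger = more particular

def sequence(questions):
    """Order general questions first; within a level, present -> past -> future."""
    return sorted(questions, key=lambda q: (q.specificity, TIME_ORDER[q.time]))

draft = [
    Question("Do you worry the summers will get hotter?", "future", 1),
    Question("What was your first job like?", "past", 0),
    Question("What is a typical workday like for you?", "present", 0),
]

ordered = [q.text for q in sequence(draft)]
for text in ordered:
    print(text)
```

Treat the sorted list as an advance plan only. As noted above, any respondent can derail the order, and your most particular questions will often emerge during the conversation itself.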

Once you have a set of questions, read through them aloud and imagine you are being asked the same questions. Does the set of questions have a natural flow? Would you be willing to answer the very first question to a total stranger? Does your sequence establish facts and experiences before moving on to opinions and values? Did you include prefatory statements, where necessary; transitions; and other announcements? These can be as simple as “Hey, we talked a lot about your experiences as a barista while in college.… Now I am turning to something completely different: how you managed friendships in college.” That is an abrupt transition, but it has been softened by your acknowledgment of that.

Probes and Flexibility

Once you have the interview guide, you will also want to leave room for probes and follow-up questions. As in the sample probe included here, you can write out the obvious probes and follow-up questions in advance. You might not need them, as your respondent might anticipate them and include full responses to the original question. Or you might need to tailor them to how your respondent answered the question. Some common probes and follow-up questions include asking for more details (When did that happen? Who else was there?), asking for elaboration (Could you say more about that?), asking for clarification (Does that mean what I think it means or something else? I understand what you mean, but someone else reading the transcript might not), and asking for contrast or comparison (How did this experience compare with last year’s event?). “Probing is a skill that comes from knowing what to look for in the interview, listening carefully to what is being said and what is not said, and being sensitive to the feedback needs of the person being interviewed” ( Patton 2002:374 ). It takes work! And energy. I and many other interviewers I know report feeling emotionally and even physically drained after conducting an interview. You are tasked with active listening and rearranging your interview guide as needed on the fly. If you only ask the questions written down in your interview guide with no deviations, you are doing it wrong. [2]

The Final Question

Every interview guide should include a very open-ended final question that allows for the respondent to say whatever it is they have been dying to tell you but you’ve forgotten to ask. About half the time they are tired too and will tell you they have nothing else to say. But incredibly, some of the most honest and complete responses take place here, at the end of a long interview. You have to realize that the person being interviewed is often discovering things about themselves as they talk to you and that this process of discovery can lead to new insights for them. Making space at the end is therefore crucial. Be sure you convey that you actually do want them to tell you more, so that the offer of “anything else?” is not read as an empty convention where the polite response is no. Here is where you can pull from that active listening and tailor the final question to the particular person. For example, “I’ve asked you a lot of questions about what it was like to live through that wildfire. I’m wondering if there is anything I’ve forgotten to ask, especially because I haven’t had that experience myself” is a much more inviting final question than “Great. Anything you want to add?” It’s also helpful to convey to the person that you have the time to listen to their full answer, even if the allotted time is nearly up. After all, there are no more questions to ask, so the respondent knows exactly how much time is left. Do them the courtesy of listening to them!

Conducting the Interview

Once you have your interview guide, you are on your way to conducting your first interview. I always practice my interview guide with a friend or family member. I do this even when the questions don’t make perfect sense for them, as it still helps me realize which questions make no sense, are poorly worded (too academic), or don’t follow sequentially. I also practice the routine I will use for interviewing, which goes something like this:

- Introduce myself and reintroduce the study

- Provide consent form and ask them to sign and retain/return copy

- Ask if they have any questions about the study before we begin

- Ask if I can begin recording

- Ask questions (from interview guide)

- Turn off the recording device

- Ask if they are willing to fill out my demographic questionnaire

- Collect questionnaire and, without looking at the answers, place in same folder as signed consent form

- Thank them and depart

A note on remote interviewing: Interviews have traditionally been conducted face-to-face in a private or quiet public setting. You don’t want a lot of background noise, as this will make transcriptions difficult. During the recent global pandemic, many interviewers, myself included, learned the benefits of interviewing remotely. Although face-to-face is still preferable for many reasons, Zoom interviewing is not a bad alternative, and it does allow more interviews across great distances. Zoom also includes automatic transcription, which significantly cuts down on the time it normally takes to convert our conversations into “data” to be analyzed. These automatic transcriptions are not perfect, however, and you will still need to listen to the recording and clarify and clean up the transcription. Nor do automatic transcriptions include notations of body language or change of tone, which you may want to include. When interviewing remotely, you will want to collect the consent form before you meet: ask them to read, sign, and return it as an email attachment. I think it is better to ask for the demographic questionnaire after the interview, but because some respondents may never return it then, it is probably best to ask for this at the same time as the consent form, in advance of the interview.

What should you bring to the interview? I would recommend bringing two copies of the consent form (one for you and one for the respondent), a demographic questionnaire, a manila folder in which to place the signed consent form and filled-out demographic questionnaire, a printed copy of your interview guide (I print with three-inch right margins so I can jot down notes on the page next to relevant questions), a pen, a recording device, and water.

After the interview, you will want to secure the signed consent form in a locked filing cabinet (if in print) or a password-protected folder on your computer. Using Excel or a similar program that allows tables/spreadsheets, create an identifying number for your interview that links to the consent form without using the name of your respondent. For example, let’s say that I conduct interviews with US politicians, and the first person I meet with is George W. Bush. I will assign the transcription the number “INT#001” and add it to the signed consent form. [3] The signed consent form goes into a locked filing cabinet, and I never use the name “George W. Bush” again. I take the information from the demographic sheet, open my Excel spreadsheet, and add the relevant information in separate columns for the row INT#001: White, male, Republican. When I interview Bill Clinton as my second interview, I include a second row: INT#002: White, male, Democrat. And so on. The only link to the actual name of the respondent and this information is the fact that the consent form (unavailable to anyone but me) has stamped on it the interview number.
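The numbering scheme described above can be sketched in a few lines of Python for readers who keep their tracking sheet as a CSV file rather than in Excel. This is only an illustration under stated assumptions: the function names (`make_interview_id`, `build_rows`, `to_csv`) and the column names are hypothetical, and the sample rows simply reuse the White/male/party values from the example in the text. Names of respondents never enter the data; the only link back to a person remains the number written on the locked-away consent form.

```python
import csv
import io


def make_interview_id(n, prefix="INT", width=3):
    """Return an identifier such as 'INT#001' for the n-th interview.

    The prefix distinguishes kinds of data (e.g. 'FG' for focus groups);
    the width fixes how many digits are used, so width=3 covers 1 to 999.
    """
    if not 1 <= n < 10 ** width:
        raise ValueError(f"interview number {n} does not fit in {width} digits")
    return f"{prefix}#{n:0{width}d}"


def build_rows(demographics):
    """Attach an interview ID to each set of demographic answers.

    `demographics` is a list of dicts in the order the interviews took
    place. It must contain no names, only the questionnaire answers.
    """
    rows = []
    for n, answers in enumerate(demographics, start=1):
        row = {"interview_id": make_interview_id(n)}
        row.update(answers)
        rows.append(row)
    return rows


def to_csv(rows):
    """Serialize the rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical column names; values taken from the example in the text.
rows = build_rows([
    {"race": "White", "gender": "male", "party": "Republican"},
    {"race": "White", "gender": "male", "party": "Democrat"},
])
print(to_csv(rows))
```

Whatever tool you use, the design choice is the same: the spreadsheet holds the ID and the demographic columns, and nothing in it can be traced to a name without access to the consent forms.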

Many students get very nervous before their first interview. Actually, many of us are always nervous before the interview! But do not worry: this is normal, and it does pass. Chances are, you will be pleasantly surprised at how comfortable it begins to feel. These “purposeful conversations” are often a delight for both participants. This is not to say that things never go wrong. I often have my students practice several “bad scenarios” (e.g., a respondent whom you cannot get to open up; a respondent who is too talkative and dominates the conversation, steering it away from the topics you are interested in; emotions that completely take over; or shocking disclosures you are ill-prepared to handle), but most of the time, things go quite well. Be prepared for the unexpected, but know that the reason interviews are so popular as a technique of data collection is that they are usually richly rewarding for both participants.

One thing that I stress to my methods students and remind myself about is that interviews are still conversations between people. If there’s something you might feel uncomfortable asking someone about in a “normal” conversation, you will likely also feel a bit of discomfort asking it in an interview. Maybe more importantly, your respondent may feel uncomfortable. Social research—especially about inequality—can be uncomfortable. And it’s easy to slip into an abstract, intellectualized, or removed perspective as an interviewer. This is one reason trying out interview questions is important. Another is that sometimes the question sounds good in your head but doesn’t work as well out loud in practice. I learned this the hard way when a respondent asked me how I would answer the question I had just posed, and I realized that not only did I not really know how I would answer it, but I also wasn’t quite as sure I knew what I was asking as I had thought.

—Elizabeth M. Lee, Associate Professor of Sociology at Saint Joseph’s University, author of Class and Campus Life , and co-author of Geographies of Campus Inequality

How Many Interviews?

Your research design has included a targeted number of interviews and a recruitment plan (see chapter 5). Follow your plan, but remember that “ saturation ” is your goal. You interview as many people as you can until you reach a point at which you are no longer surprised by what they tell you. This means not that no one after your first twenty interviews will have surprising, interesting stories to tell you but rather that the picture you are forming about the phenomenon of interest to you from a research perspective has come into focus, and none of the interviews are substantially refocusing that picture. That is when you should stop collecting interviews. Note that to know when you have reached this, you will need to read your transcripts as you go. More about this in chapters 18 and 19.
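Because judging saturation requires reading transcripts as you go, some researchers find it useful to keep a running tally of how many new themes each interview contributes. The sketch below is one crude way to do that bookkeeping, not the criterion described above: it assumes you have already assigned a set of codes (theme labels) to each transcript, and the function names, the sample codes, and the `run_length` threshold of three interviews are all hypothetical choices. A count of zero new codes is at best a prompt to ask whether the picture has come into focus; it does not replace that judgment.

```python
def new_codes_per_interview(coded_interviews):
    """Count how many previously unseen codes each interview contributes.

    `coded_interviews` is a list of sets of code labels, in the order
    the interviews were conducted.
    """
    seen = set()
    counts = []
    for codes in coded_interviews:
        fresh = set(codes) - seen
        counts.append(len(fresh))
        seen |= fresh
    return counts


def saturation_point(coded_interviews, run_length=3):
    """Return the 1-based number of the interview that completes a run of
    `run_length` consecutive interviews adding no new codes, or None if
    no such run has occurred yet."""
    run = 0
    counts = new_codes_per_interview(coded_interviews)
    for number, n_new in enumerate(counts, start=1):
        run = run + 1 if n_new == 0 else 0
        if run >= run_length:
            return number
    return None


# Hypothetical codes for five transcripts.
interviews = [
    {"debt", "mentoring"},
    {"debt", "culture shock"},
    {"mentoring"},
    {"debt"},
    {"culture shock", "debt"},
]
print(new_codes_per_interview(interviews))  # [2, 1, 0, 0, 0]
print(saturation_point(interviews))         # 5
```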

Your Final Product: The Ideal Interview Transcript

A good interview transcript will demonstrate a subtly controlled conversation by the skillful interviewer. In general, you want to see replies that are about one paragraph long, not short sentences and not running on for several pages. Although it is sometimes necessary to follow respondents down tangents, it is also often necessary to pull them back to the questions that form the basis of your research study. This is not really a free conversation, although it may feel like that to the person you are interviewing.

Final Tips from an Interview Master

Annette Lareau is arguably one of the masters of the trade. In Listening to People, she provides several guidelines for good interviews and then offers a detailed example of an interview gone wrong and how it could be addressed (please see the “Further Readings” at the end of this chapter). Here is an abbreviated version of her set of guidelines: (1) interview respondents who are experts on the subjects of most interest to you (as a corollary, don’t ask people about things they don’t know); (2) listen carefully and talk as little as possible; (3) keep in mind what you want to know and why you want to know it; (4) be a proactive interviewer (subtly guide the conversation); (5) assure respondents that there aren’t any right or wrong answers; (6) use the respondent’s own words to probe further (this both allows you to accurately identify what you heard and pushes the respondent to explain further); (7) reuse effective probes (don’t reinvent the wheel as you go; if repeating the words back works, do it again and again); (8) focus on learning the subjective meanings that events or experiences have for a respondent; (9) don’t be afraid to ask a question that draws on your own knowledge (unlike trial lawyers who are trained never to ask a question for which they don’t already know the answer, sometimes it’s worth it to ask risky questions based on your hypotheses or just plain hunches); (10) keep thinking while you are listening (so difficult…and important); (11) return to a theme raised by a respondent if you want further information; (12) be mindful of power inequalities (and never ever coerce a respondent to continue the interview if they want out); (13) take control with overly talkative respondents; (14) expect overly succinct responses, and develop strategies for probing further; (15) balance digging deep and moving on; (16) develop a plan to deflect questions (e.g., let them know you are happy to answer any questions at the end of the interview, but you don’t want to take time away from them now); and at the end, (17) check to see whether you have asked all your questions. You don’t always have to ask everyone the same set of questions, but if there is a big area you have forgotten to cover, now is the time to recover (Lareau 2021:93–103).

Sample: Demographic Questionnaire

ASA Taskforce on First-Generation and Working-Class Persons in Sociology – Class Effects on Career Success

Supplementary Demographic Questionnaire

Thank you for your participation in this interview project. We would like to collect a few pieces of key demographic information from you to supplement our analyses. Your answers to these questions will be kept confidential and stored by ID number. All of your responses here are entirely voluntary!

What best captures your race/ethnicity? (please check any/all that apply)

- White (Non Hispanic/Latina/o/x)

- Black or African American

- Hispanic, Latino/a/x, or Spanish

- Asian or Asian American

- American Indian or Alaska Native

- Middle Eastern or North African

- Native Hawaiian or Pacific Islander

- Other : (Please write in: ________________)

What is your current position?

- Grad Student

- Full Professor

Please check any and all of the following that apply to you:

- I identify as a working-class academic

- I was the first in my family to graduate from college

- I grew up poor

What best reflects your gender?

- Transgender female/Transgender woman

- Transgender male/Transgender man

- Gender queer/ Gender nonconforming

Anything else you would like us to know about you?

Example: Interview Guide

In this example, follow-up prompts are italicized. Note the sequence of questions. That second question often elicits an entire life history , answering several later questions in advance.

Introduction Script/Question

Thank you for participating in our survey of ASA members who identify as first-generation or working-class. As you may have heard, ASA has sponsored a taskforce on first-generation and working-class persons in sociology and we are interested in hearing from those who so identify. Your participation in this interview will help advance our knowledge in this area.

- The first thing we would like to ask you is why you have volunteered to be part of this study. What does it mean to you to be first-gen or working class? Why were you willing to be interviewed?

- How did you decide to become a sociologist?

- Can you tell me a little bit about where you grew up? ( prompts: what did your parent(s) do for a living? What kind of high school did you attend?)

- Has this identity been salient to your experience? (how? How much?)

- How welcoming was your grad program? Your first academic employer?

- Why did you decide to pursue sociology at the graduate level?

- Did you experience culture shock in college? In graduate school?

- Has your FGWC status shaped how you’ve thought about where you went to school? debt? etc?

- Were you mentored? How did this work (not work)? How might it?

- What did you consider when deciding where to go to grad school? Where to apply for your first position?

- What, to you, is a mark of career success? Have you achieved that success? What has helped or hindered your pursuit of success?

- Do you think sociology, as a field, cares about prestige?

- Let’s talk a little bit about intersectionality. How does being first-gen/working class work alongside other identities that are important to you?

- What do your friends and family think about your career? Have you had any difficulty relating to family members or past friends since becoming highly educated?

- Do you have any debt from college/grad school? Are you concerned about this? Could you explain more about how you paid for college/grad school? (here, include assistance from family, fellowships, scholarships, etc.)

- (You’ve mentioned issues or obstacles you had because of your background.) What could have helped? Or, who or what did? Can you think of fortuitous moments in your career?

- Do you have any regrets about the path you took?

- Is there anything else you would like to add? Anything that the Taskforce should take note of, that we did not ask you about here?

Further Readings

Britten, Nicky. 1995. “Qualitative Interviews in Medical Research.” BMJ: British Medical Journal 311(6999):251–253. A good basic overview of interviewing, particularly useful for students of public health and medical research generally.

Corbin, Juliet, and Janice M. Morse. 2003. “The Unstructured Interactive Interview: Issues of Reciprocity and Risks When Dealing with Sensitive Topics.” Qualitative Inquiry 9(3):335–354. Weighs the potential benefits and harms of conducting interviews on topics that may cause emotional distress. Argues that the researcher’s skills and code of ethics should ensure that the interviewing process provides more of a benefit to both participant and researcher than a harm to the former.

Gerson, Kathleen, and Sarah Damaske. 2020. The Science and Art of Interviewing . New York: Oxford University Press. A useful guidebook/textbook for both undergraduates and graduate students, written by sociologists.

Kvale, Steinar. 2007. Doing Interviews . London: SAGE. An easy-to-follow guide to conducting and analyzing interviews by psychologists.

Lamont, Michèle, and Ann Swidler. 2014. “Methodological Pluralism and the Possibilities and Limits of Interviewing.” Qualitative Sociology 37(2):153–171. Written as a response to various debates surrounding the relative value of interview-based studies and ethnographic studies defending the particular strengths of interviewing. This is a must-read article for anyone seriously engaging in qualitative research!

Pugh, Allison J. 2013. “What Good Are Interviews for Thinking about Culture? Demystifying Interpretive Analysis.” American Journal of Cultural Sociology 1(1):42–68. Another defense of interviewing written against those who champion ethnographic methods as superior, particularly in the area of studying culture. A classic.

Rapley, Timothy John. 2001. “The ‘Artfulness’ of Open-Ended Interviewing: Some considerations in analyzing interviews.” Qualitative Research 1(3):303–323. Argues for the importance of “local context” of data production (the relationship built between interviewer and interviewee, for example) in properly analyzing interview data.

Weiss, Robert S. 1995. Learning from Strangers: The Art and Method of Qualitative Interview Studies . New York: Simon and Schuster. A classic and well-regarded textbook on interviewing. Because Weiss has extensive experience conducting surveys, he contrasts the qualitative interview with the survey questionnaire well; particularly useful for those trained in the latter.

[1] I say “normally” because how people understand their various identities can itself be an expansive topic of inquiry. Here, I am merely talking about collecting otherwise unexamined demographic data, similar to how we ask people to check boxes on surveys.

[2] Again, this applies to “semistructured in-depth interviewing.” When conducting standardized questionnaires, you will want to ask each question exactly as written, without deviations!

[3] I always include “INT” in the number because I sometimes have other kinds of data with their own numbering: FG#001 would mean the first focus group, for example. I also always include three-digit spaces, as this allows for up to 999 interviews (or, more realistically, allows for me to interview up to one hundred persons without having to reset my numbering system).

A method of data collection in which the researcher asks the participant questions; the answers to these questions are often recorded and transcribed verbatim. There are many different kinds of interviews - see also semistructured interview , structured interview , and unstructured interview .

A document listing key questions and question areas for use during an interview. It is used most often for semi-structured interviews. A good interview guide may have no more than ten primary questions for two hours of interviewing, but these ten questions will be supplemented by probes and relevant follow-ups throughout the interview. Most IRBs require the inclusion of the interview guide in applications for review. See also interview and semi-structured interview .

A data-collection method that relies on casual, conversational, and informal interviewing. Despite its apparent conversational nature, the researcher usually has a set of particular questions or question areas in mind but allows the interview to unfold spontaneously. This is a common data-collection technique among ethnographers. Compare to the semi-structured or in-depth interview .

A form of interview that follows a standard guide of questions asked, although the order of the questions may change to match the particular needs of each individual interview subject, and probing “follow-up” questions are often added during the course of the interview. The semi-structured interview is the primary form of interviewing used by qualitative researchers in the social sciences. It is sometimes referred to as an “in-depth” interview. See also interview and interview guide .

The cluster of data-collection tools and techniques that involve observing interactions between people, the behaviors, and practices of individuals (sometimes in contrast to what they say about how they act and behave), and cultures in context. Observational methods are the key tools employed by ethnographers and Grounded Theory .

Follow-up questions used in a semi-structured interview to elicit further elaboration. Suggested prompts can be included in the interview guide to be used/deployed depending on how the initial question was answered or if the topic of the prompt does not emerge spontaneously.

A form of interview that follows a strict set of questions, asked in a particular order, for all interview subjects. The questions are also the kind that elicits short answers, and the data is more “informative” than probing. This is often used in mixed-methods studies, accompanying a survey instrument. Because there is no room for nuance or the exploration of meaning in structured interviews, qualitative researchers tend to employ semi-structured interviews instead. See also interview.

The point at which you can conclude data collection because every person you are interviewing, the interaction you are observing, or content you are analyzing merely confirms what you have already noted. Achieving saturation is often used as the justification for the final sample size.

An interview variant in which a person’s life story is elicited in a narrative form. Turning points and key themes are established by the researcher and used as data points for further analysis.

Introduction to Qualitative Research Methods Copyright © 2023 by Allison Hurst is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License , except where otherwise noted.


- Neurol Res Pract

How to use and assess qualitative research methods

Loraine Busetto

1 Department of Neurology, Heidelberg University Hospital, Im Neuenheimer Feld 400, 69120 Heidelberg, Germany

Wolfgang Wick

2 Clinical Cooperation Unit Neuro-Oncology, German Cancer Research Center, Heidelberg, Germany

Christoph Gumbinger

Associated data.

Not applicable.

This paper aims to provide an overview of the use and assessment of qualitative research methods in the health sciences. Qualitative research can be defined as the study of the nature of phenomena and is especially appropriate for answering questions of why something is (not) observed, assessing complex multi-component interventions, and focussing on intervention improvement. The most common methods of data collection are document study, (non-) participant observations, semi-structured interviews and focus groups. For data analysis, field-notes and audio-recordings are transcribed into protocols and transcripts, and coded using qualitative data management software. Criteria such as checklists, reflexivity, sampling strategies, piloting, co-coding, member-checking and stakeholder involvement can be used to enhance and assess the quality of the research conducted. Using qualitative in addition to quantitative designs will equip us with better tools to address a greater range of research problems, and to fill in blind spots in current neurological research and practice.

The aim of this paper is to provide an overview of qualitative research methods, including hands-on information on how they can be used, reported and assessed. This article is intended for beginning qualitative researchers in the health sciences as well as experienced quantitative researchers who wish to broaden their understanding of qualitative research.

What is qualitative research?

Qualitative research is defined as “the study of the nature of phenomena”, including “their quality, different manifestations, the context in which they appear or the perspectives from which they can be perceived”, but excluding “their range, frequency and place in an objectively determined chain of cause and effect” [ 1 ]. This formal definition can be complemented with a more pragmatic rule of thumb: qualitative research generally includes data in the form of words rather than numbers [ 2 ].

Why conduct qualitative research?

Because some research questions cannot be answered using (only) quantitative methods. For example, one Australian study addressed the issue of why patients from Aboriginal communities often present late or not at all to specialist services offered by tertiary care hospitals. Using qualitative interviews with patients and staff, it found one of the most significant access barriers to be transportation problems, including some towns and communities simply not having a bus service to the hospital [ 3 ]. A quantitative study could have measured the number of patients over time or even looked at possible explanatory factors – but only those previously known or suspected to be of relevance. To discover reasons for observed patterns, especially the invisible or surprising ones, qualitative designs are needed.

While qualitative research is common in other fields, it is still relatively underrepresented in health services research. The latter field is more traditionally rooted in the evidence-based-medicine paradigm, as seen in "research that involves testing the effectiveness of various strategies to achieve changes in clinical practice, preferably applying randomised controlled trial study designs (...)" [ 4 ]. This focus on quantitative research and specifically randomised controlled trials (RCT) is visible in the idea of a hierarchy of research evidence which assumes that some research designs are objectively better than others, and that choosing a "lesser" design is only acceptable when the better ones are not practically or ethically feasible [ 5 , 6 ]. Others, however, argue that an objective hierarchy does not exist, and that, instead, the research design and methods should be chosen to fit the specific research question at hand – "questions before methods" [ 2 , 7 – 9 ]. This means that even when an RCT is possible, some research problems require a different design that is better suited to addressing them. Arguing in JAMA, Berwick uses the example of rapid response teams in hospitals, which he describes as "a complex, multicomponent intervention – essentially a process of social change" susceptible to a range of different context factors including leadership or organisation history. According to him, "[in] such complex terrain, the RCT is an impoverished way to learn. Critics who use it as a truth standard in this context are incorrect" [ 8 ]. Instead of limiting oneself to RCTs, Berwick recommends embracing a wider range of methods, including qualitative ones, which for "these specific applications, (...) are not compromises in learning how to improve; they are superior" [ 8 ].

Research problems that can be approached particularly well using qualitative methods include assessing complex multi-component interventions or systems (of change), addressing questions beyond “what works”, towards “what works for whom when, how and why”, and focussing on intervention improvement rather than accreditation [ 7 , 9 – 12 ]. Using qualitative methods can also help shed light on the “softer” side of medical treatment. For example, while quantitative trials can measure the costs and benefits of neuro-oncological treatment in terms of survival rates or adverse effects, qualitative research can help provide a better understanding of patient or caregiver stress, visibility of illness or out-of-pocket expenses.

How to conduct qualitative research?

Given that qualitative research is characterised by flexibility, openness and responsivity to context, the steps of data collection and analysis are not as separate and consecutive as they tend to be in quantitative research [ 13 , 14 ]. As Fossey puts it: “sampling, data collection, analysis and interpretation are related to each other in a cyclical (iterative) manner, rather than following one after another in a stepwise approach” [ 15 ]. The researcher can make educated decisions with regard to the choice of methods, how they are implemented, and to which and how many units they are applied [ 13 ]. As shown in Fig. 1, this can involve several back-and-forth steps between data collection and analysis where new insights and experiences can lead to adaptation and expansion of the original plan. Some insights may also necessitate a revision of the research question and/or the research design as a whole. The process ends when saturation is achieved, i.e. when no relevant new information can be found (see also below: sampling and saturation). For reasons of transparency, it is essential for all decisions as well as the underlying reasoning to be well-documented.

Fig. 1 Iterative research process

While it is not always explicitly addressed, qualitative methods reflect a different underlying research paradigm than quantitative research (e.g. constructivism or interpretivism as opposed to positivism). The choice of methods can be based on the respective underlying substantive theory or theoretical framework used by the researcher [ 2 ].

Data collection

The methods of qualitative data collection most commonly used in health research are document study, observations, semi-structured interviews and focus groups [ 1 , 14 , 16 , 17 ].

Document study

Document study (also called document analysis) refers to the review by the researcher of written materials [ 14 ]. These can include personal and non-personal documents such as archives, annual reports, guidelines, policy documents, diaries or letters.

Observations

Observations are particularly useful to gain insights into a certain setting and actual behaviour – as opposed to reported behaviour or opinions [ 13 ]. Qualitative observations can be either participant or non-participant in nature. In participant observations, the observer is part of the observed setting, for example a nurse working in an intensive care unit [ 18 ]. In non-participant observations, the observer is “on the outside looking in”, i.e. present in but not part of the situation, trying not to influence the setting by their presence. Observations can be planned (e.g. for 3 h during the day or night shift) or ad hoc (e.g. as soon as a stroke patient arrives at the emergency room). During the observation, the observer takes notes on everything or certain pre-determined parts of what is happening around them, for example focusing on physician-patient interactions or communication between different professional groups. Written notes can be taken during or after the observations, depending on feasibility (which is usually lower during participant observations) and acceptability (e.g. when the observer is perceived to be judging the observed). Afterwards, these field notes are transcribed into observation protocols. If more than one observer was involved, field notes are taken independently, but notes can be consolidated into one protocol after discussions. Advantages of conducting observations include minimising the distance between the researcher and the researched, the potential discovery of topics that the researcher did not realise were relevant and gaining deeper insights into the real-world dimensions of the research problem at hand [ 18 ].

Semi-structured interviews

Hijmans & Kuyper describe qualitative interviews as “an exchange with an informal character, a conversation with a goal” [ 19 ]. Interviews are used to gain insights into a person’s subjective experiences, opinions and motivations – as opposed to facts or behaviours [ 13 ]. Interviews can be distinguished by the degree to which they are structured (i.e. a questionnaire), open (e.g. free conversation or autobiographical interviews) or semi-structured [ 2 , 13 ]. Semi-structured interviews are characterised by open-ended questions and the use of an interview guide (or topic guide/list) in which the broad areas of interest, sometimes including sub-questions, are defined [ 19 ]. The pre-defined topics in the interview guide can be derived from the literature, previous research or a preliminary method of data collection, e.g. document study or observations. The topic list is usually adapted and improved at the start of the data collection process as the interviewer learns more about the field [ 20 ]. Across interviews the focus on the different (blocks of) questions may differ and some questions may be skipped altogether (e.g. if the interviewee is not able or willing to answer the questions or for concerns about the total length of the interview) [ 20 ]. Qualitative interviews are usually not conducted in written format as it impedes the interactive component of the method [ 20 ]. In comparison to written surveys, qualitative interviews have the advantage of being interactive and allowing for unexpected topics to emerge and to be taken up by the researcher. This can also help overcome a provider- or researcher-centred bias often found in written surveys, which, by nature, can only measure what is already known or expected to be of relevance to the researcher. Interviews can be audio- or video-taped, but sometimes it is only feasible or acceptable for the interviewer to take written notes [ 14 , 16 , 20 ].

Focus groups

Focus groups are group interviews to explore participants’ expertise and experiences, including explorations of how and why people behave in certain ways [ 1 ]. Focus groups usually consist of 6–8 people and are led by an experienced moderator following a topic guide or “script” [ 21 ]. They can involve an observer who takes note of the non-verbal aspects of the situation, possibly using an observation guide [ 21 ]. Depending on researchers’ and participants’ preferences, the discussions can be audio- or video-taped and transcribed afterwards [ 21 ]. Focus groups are useful for bringing together homogeneous (to a lesser extent heterogeneous) groups of participants with relevant expertise and experience on a given topic on which they can share detailed information [ 21 ]. Focus groups are a relatively easy, fast and inexpensive method to gain access to information on interactions in a given group, i.e. “the sharing and comparing” among participants [ 21 ]. Disadvantages include less control over the process and a lesser extent to which each individual may participate. Moreover, focus group moderators need experience, as do those tasked with the analysis of the resulting data. Focus groups can be less appropriate for discussing sensitive topics that participants might be reluctant to disclose in a group setting [ 13 ]. Moreover, attention must be paid to the emergence of “groupthink” as well as possible power dynamics within the group, e.g. when patients are awed or intimidated by health professionals.

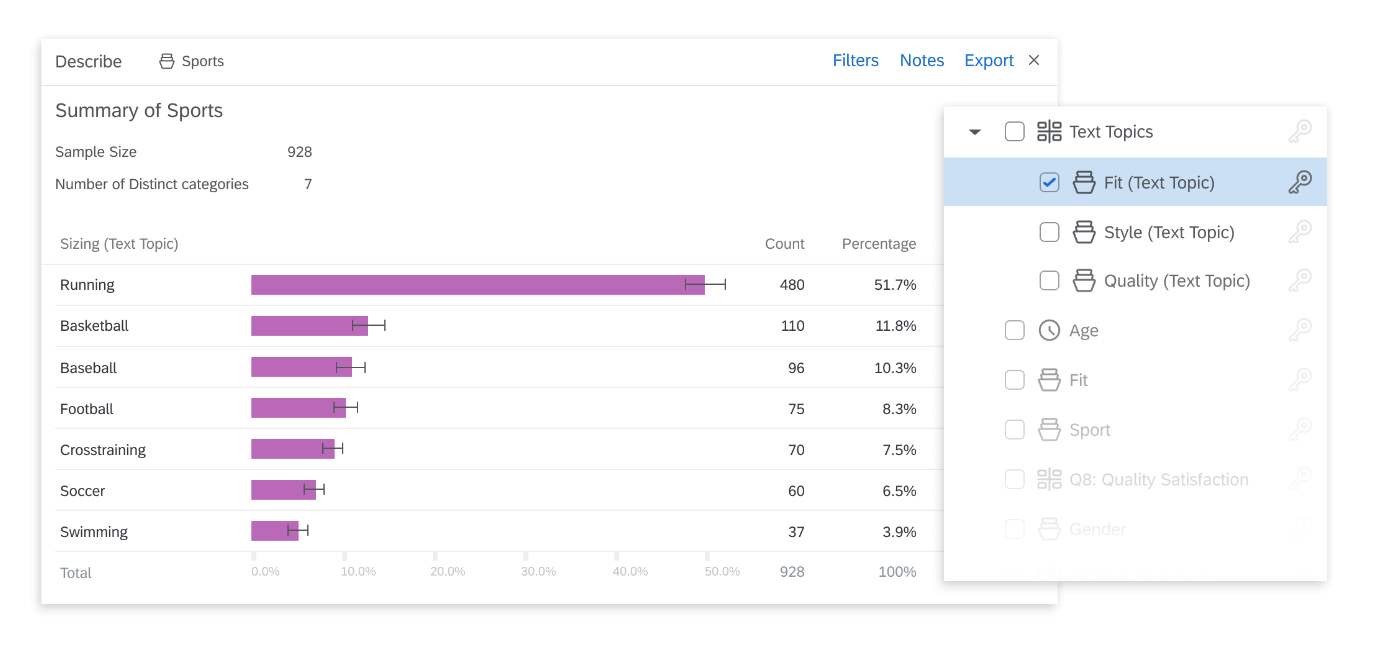

Choosing the “right” method

As explained above, the school of thought underlying qualitative research assumes no objective hierarchy of evidence and methods. This means that each choice of single or combined methods has to be based on the research question that needs to be answered and a critical assessment with regard to whether or to what extent the chosen method can accomplish this – i.e. the “fit” between question and method [ 14 ]. It is necessary for these decisions to be documented when they are being made, and to be critically discussed when reporting methods and results.

Let us assume that our research aim is to examine the (clinical) processes around acute endovascular treatment (EVT), from the patient’s arrival at the emergency room to recanalization, in order to identify possible causes for delay and/or other causes for sub-optimal treatment outcome. As a first step, we could conduct a document study of the relevant standard operating procedures (SOPs) for this phase of care – are they up-to-date and in line with current guidelines? Do they contain any mistakes, irregularities or uncertainties that could cause delays or other problems? Regardless of the answers to these questions, the results have to be interpreted based on what they are: a written outline of what care processes in this hospital should look like. If we want to know what they actually look like in practice, we can conduct observations of the processes described in the SOPs. These results can (and should) be analysed in themselves, but also in comparison to the results of the document analysis, especially as regards relevant discrepancies. Do the SOPs outline specific tests for which no equipment can be observed or tasks to be performed by specialized nurses who are not present during the observation? It might also be possible that the written SOP is outdated, but the actual care provided is in line with current best practice. In order to find out why these discrepancies exist, it can be useful to conduct interviews. Are the physicians simply not aware of the SOPs (because their existence is limited to the hospital’s intranet) or do they actively disagree with them or does the infrastructure make it impossible to provide the care as described? Another rationale for adding interviews is that some situations (or all of their possible variations for different patient groups or the day, night or weekend shift) cannot practically or ethically be observed.
In this case, it is possible to ask those involved to report on their actions – being aware that this is not the same as the actual observation. A senior physician’s or hospital manager’s description of certain situations might differ from a nurse’s or junior physician’s one, maybe because they intentionally misrepresent facts or maybe because different aspects of the process are visible or important to them. In some cases, it can also be relevant to consider to whom the interviewee is disclosing this information – someone they trust, someone they are otherwise not connected to, or someone they suspect or are aware of being in a potentially “dangerous” power relationship to them. Lastly, a focus group could be conducted with representatives of the relevant professional groups to explore how and why exactly they provide care around EVT. The discussion might reveal discrepancies (between SOPs and actual care or between different physicians) and motivations to the researchers as well as to the focus group members that they might not have been aware of themselves. For the focus group to deliver relevant information, attention has to be paid to its composition and conduct, for example, to make sure that all participants feel safe to disclose sensitive or potentially problematic information or that the discussion is not dominated by (senior) physicians only. The resulting combination of data collection methods is shown in Fig. 2 .

Possible combination of data collection methods

Attributions for icons: “Book” by Serhii Smirnov, “Interview” by Adrien Coquet, FR, “Magnifying Glass” by anggun, ID, “Business communication” by Vectors Market; all from the Noun Project

The combination of multiple data sources as described for this example can be referred to as “triangulation”, in which multiple measurements are carried out from different angles to achieve a more comprehensive understanding of the phenomenon under study [ 22 , 23 ].

Data analysis

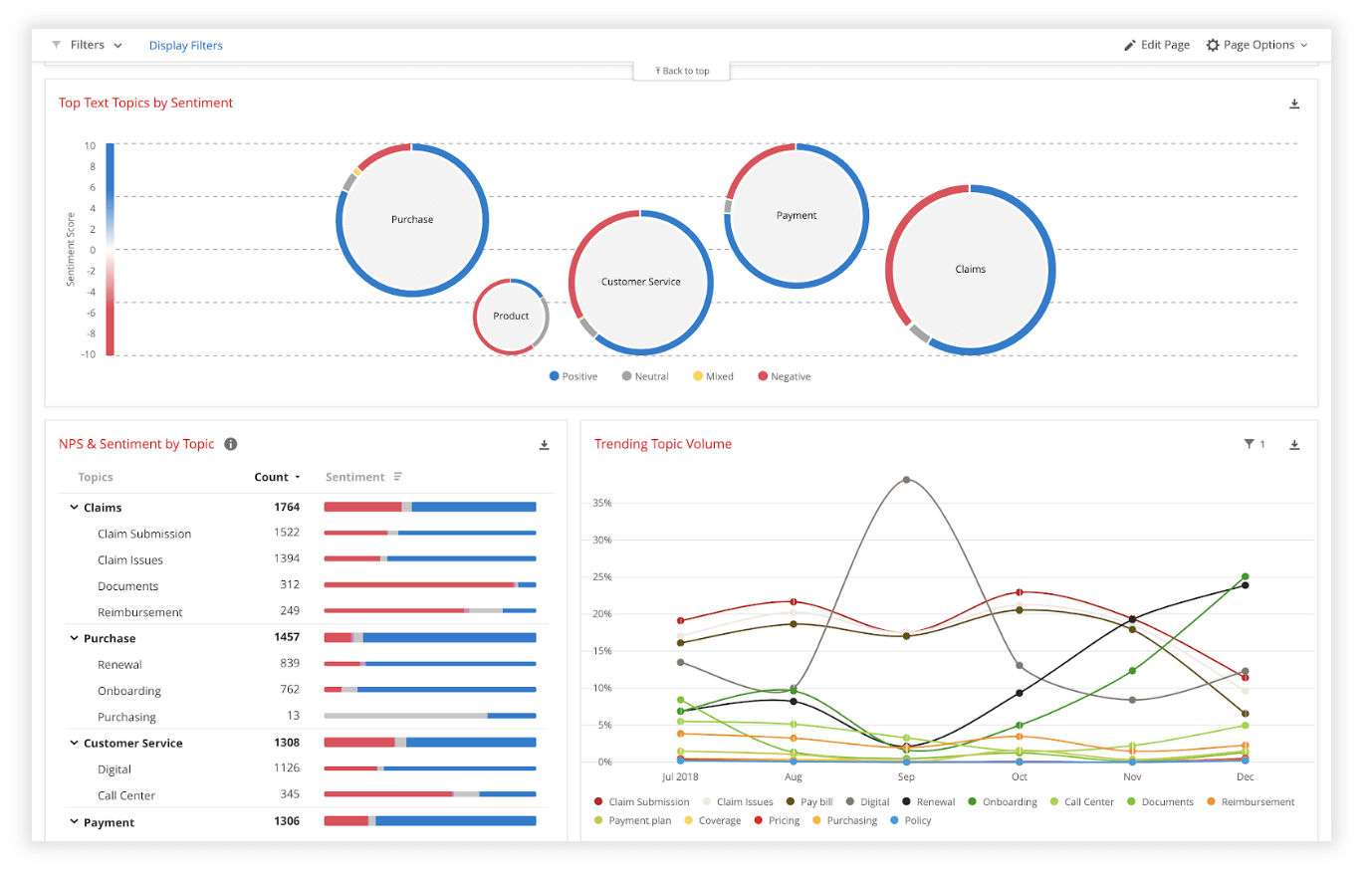

To analyse the data collected through observations, interviews and focus groups, these need to be transcribed into protocols and transcripts (see Fig. 3). Interviews and focus groups can be transcribed verbatim, with or without annotations for behaviour (e.g. laughing, crying, pausing) and with or without phonetic transcription of dialects and filler words, depending on what is expected or known to be relevant for the analysis. In the next step, the protocols and transcripts are coded, that is, marked (or tagged, labelled) with one or more short descriptors of the content of a sentence or paragraph [ 2 , 15 , 23 ]. Jansen describes coding as “connecting the raw data with ‘theoretical’ terms” [ 20 ]. In a more practical sense, coding makes raw data sortable. This makes it possible to extract and examine all segments describing, say, a tele-neurology consultation from multiple data sources (e.g. SOPs, emergency room observations, staff and patient interviews). In a process of synthesis and abstraction, the codes are then grouped, summarised and/or categorised [ 15 , 20 ]. The end product of the coding or analysis process is a descriptive theory of the behavioural pattern under investigation [ 20 ]. The coding process is performed using qualitative data management software, the most common ones being NVivo, MAXQDA and ATLAS.ti. It should be noted that these are data management tools which support the analysis performed by the researcher(s) [ 14 ].
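What it means for coding to make raw data sortable can be shown with a minimal sketch. It does not reproduce how any of the software packages named above work internally. The `Segment` structure, the code labels and the example texts are all invented for illustration, and the codes are assumed to have been assigned by the researcher beforehand.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    source: str       # where the segment comes from (hypothetical labels)
    text: str         # the sentence or paragraph that was coded
    codes: frozenset  # short descriptors assigned by the researcher


def build_index(segments):
    """Map each code to the list of segments tagged with it."""
    index = defaultdict(list)
    for segment in segments:
        for code in segment.codes:
            index[code].append(segment)
    return index


# Invented example data from three different data sources.
segments = [
    Segment("SOP", "Tele-neurology consult is requested on arrival.",
            frozenset({"tele-neurology", "timing"})),
    Segment("ER observation", "Video link to the neurologist failed twice.",
            frozenset({"tele-neurology", "infrastructure"})),
    Segment("staff interview", "We often wait for the CT scanner.",
            frozenset({"timing", "infrastructure"})),
]

index = build_index(segments)

# Retrieve every segment about tele-neurology, regardless of its source.
tele = index["tele-neurology"]
sources = sorted({segment.source for segment in tele})
for segment in tele:
    print(f"[{segment.source}] {segment.text}")
```

Grouping several codes under a broader category, as in the synthesis step described above, would amount to merging the corresponding lists in this index. Deciding which codes belong together remains an analytic task for the researcher.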

From data collection to data analysis

Attributions for icons: see Fig. 2, also “Speech to text” by Trevor Dsouza, “Field Notes” by Mike O’Brien, US, “Voice Record” by ProSymbols, US, “Inspection” by Made, AU, and “Cloud” by Graphic Tigers; all from the Noun Project

How to report qualitative research?

Protocols of qualitative research can be published separately and in advance of the study results. However, the aim is not the same as in RCT protocols, i.e. to pre-define and set in stone the research questions and primary or secondary endpoints. Rather, it is a way to describe the research methods in detail, which might not be possible in the results paper given journals’ word limits. Qualitative research papers are usually longer than their quantitative counterparts to allow for deep understanding and so-called “thick description”. In the methods section, the focus is on transparency of the methods used, including why, how and by whom they were implemented in the specific study setting, so as to enable a discussion of whether and how this may have influenced data collection, analysis and interpretation. The results section usually starts with a paragraph outlining the main findings, followed by more detailed descriptions of, for example, the commonalities, discrepancies or exceptions per category [ 20 ]. Here it is important to support main findings by relevant quotations, which may add information, context, emphasis or real-life examples [ 20 , 23 ]. It is subject to debate in the field whether it is relevant to state the exact number or percentage of respondents supporting a certain statement (e.g. “Five interviewees expressed negative feelings towards XYZ”) [ 21 ].

How to combine qualitative with quantitative research?