- December 31, 2022

Your Ultimate Guide for Acing IB Psychology Paper 3 (HL only)

Written By Rashi S.

Before beginning, feel free to check out how to tackle IB Psychology Paper 1 and Paper 2 if you have not read those guides yet.

Paper 3 (P3) is for HL students only and focuses on research methods in psychology. It consists of three questions, all of which must be answered (there is no choice of questions). A research scenario is presented, and students answer questions about its methods and conclusions.

Question (Q) 1 consists of 3 parts, all of which must be answered for a total of 3 marks each, 9 marks in total.

1. (a) Identify the research method used and outline two characteristics of the method.

I do not recommend using bullet points for this question; nevertheless, ensure that your answer is clear for the examiner. Generally, the maximum number of marks available for an IB question is the number of points that you need to make. In this case, you need to make three points because the question is worth three marks: one mark for identifying the correct research method and two marks for stating two correct characteristics of it. I also recommend adding one extra characteristic beyond the two required, to maximize the marks you earn (i.e. in case one of your points is not on the mark scheme or is ambiguous).

- The research method used is semi-structured interviews. One characteristic is that the interviewer pre-determines the topics or themes to explore. Moreover, the interview contains both open-ended and closed-ended questions; whilst the former allow respondents to elaborate, the latter invite brief and precise answers. Lastly, semi-structured interviews are informal and conversational, facilitating a rapport between the interviewer and the interviewee.

(b) Describe the sampling method used in the study.

The strategy for tackling this question is the same as the one used in 1(a). This question requires that you correctly identify the sampling method used in the study (1 mark) and state two correct characteristics of the sampling method (2 marks). I do not recommend using bullet points for this question either, and I again recommend adding one extra point beyond the two required.

- The sampling method used is purposive sampling. Participants are chosen because they possess characteristics salient to the research study, in this case, some experience with taking drugs. Participants are recruited through advertising in places where people who meet the selection criteria can be found, in this case, school magazines, for instance. Finally, purposive sampling may include snowball sampling.

(c) Suggest an alternative or additional research method giving one reason for your choice.

For this question, I recommend suggesting an additional research method instead of an alternative one. This is because an additional method is easier to justify than a replacement for the method used.

- An additional research method is a quantitative survey, in which objective questions are used to gain insights from respondents. Subsequently, interviews can be conducted with some randomly selected participants to gain deeper insight into their cultural identity. The survey would enable the researchers to design questions that elicit the required data. Moreover, the responses can be quantified to make comparisons, and a survey enables a larger sample size than the 19 participants in the study.

N.B. Connect your answer to the stimulus material presented when you can.

Q2 on the exam will be ONE of the following two prompts (no choice) for a total of 6 marks.

1. Describe the ethical considerations that were applied in the study and explain if further ethical considerations could be applied.

- You are required to write about six different ethical considerations (because question = 6 marks maximum)

- The question is divided into two parts: first, you need to write about three ethical considerations that were applied in the study, and second, you must explain three additional ethical considerations that could be applied. Although the question says “if further ethical considerations could be applied,” it is, in fact, not asking that. Instead, think of it as “how further ethical considerations could be applied.”

- Thus, this requires you to explain three additional ethical considerations which were not mentioned/taken in the stimulus material.

- Informed consent or parental consent (if the participant is a minor)

- Confidentiality

- Right to withdraw before the study, during the study, or after the study

- Any use of concealment or deception is justified

- Approval from an ethics review board

- Coercion (is there any pressure to participate?)

2. Describe the ethical considerations in reporting the results and explain additional ethical considerations that could be taken into account when applying the findings of the study.

- This question is different from the first one in that it specifically asks students to refer to ethical considerations that could be taken into account when applying the findings of the study and not general additional ethical considerations.

- Your answer can refer to any of the following:

- Anonymity/pseudonymization: removing names or replacing them with fake names, respectively, when publishing the results

- Right to withdraw

- Informed consent/parental consent: informing people how their data will be used

- Debriefing: informing people how the results will be reported/published

N.B. Connect your answer to the stimulus material presented, at least once.

Q3 on the exam will be ONE of the following three prompts (no choice) for a total of 9 marks.

However, before discussing the questions and how to answer them, it is important to note that the top markband for P3 Q3 states the following:

- 7 – 9: The question is understood and answered in a focused and effective manner with an accurate argument that addresses the requirements of the question. The response contains accurate references to approaches to research with regard to the question, describing their strengths and limitations. The response makes effective use of the stimulus material.

From my research, there seems to be ambiguity regarding how exactly to earn the 9 marks in Q3; it is not as clear-cut as it is for the previous questions. Therefore, drawing on a quality answer key from Pamoja online IB psychology, I outline the principal characteristics of answers to two of the prompts and direct you to a sample answer for the third one:

1. Discuss how a researcher could ensure that the results of the study are credible.

- Begin by defining “credibility”

- Moreover, you can discuss internal validity, member checking, peer debriefing, and triangulation. Provide a definition of each of these concepts before discussing it and relating it to the stimulus material.

2. Discuss how the researcher in the study could avoid bias.

- Start by defining “bias”

- Moreover, you can discuss sampling bias, researcher bias, participant bias, and triangulation. As above, provide a definition of each of these concepts before discussing it and relating it to the stimulus material.

3. Discuss the possibility of generalizing/transferring the findings of the study. The relevant term, generalizability or transferability, is used in exams depending on whether the question relates to a quantitative or qualitative study.

- When I was in the IB DP, Dixon’s IB psychology website and YouTube videos were of huge help; hence, I suggest utilizing this resource. Scroll to the bottom of this blog to see an example answer for this question.

Time yourself

P3 is one hour. Thus, I recommend using 10 mins for Q1, 20 mins for Q2, and 25 mins for Q3, leaving the last 5 mins to proofread.

AQA A-Level Psychology Past Papers With Answers

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

AQA A-Level Psychology (7182) and AS-Level Psychology (7181) past exam papers and marking schemes. The past papers are free to download for you to use as practice for your exams.

| AS: Paper 1 | A-Level: Paper 1 |
|---|---|
| 72 Marks | 96 Marks |
| 90 minutes | 120 minutes |
| 50% of AS Qualification | 33.3% of A-Level Qualification |

- Download Past Paper: A-Level (7182)

- Download Past Paper: AS (7181)

- Download Mark Scheme: A-Level (7182)

- Download Mark Scheme: AS (7181)

November 2021 (Labelled as June 2021)

November 2020 (Labelled as June 2020)

- Download Past Paper: A-Level (7182)

- Download Past Paper: AS (7181)

| AS: Paper 2 | A-Level: Paper 2 |
|---|---|
| 72 Marks | 96 Marks |
| 90 minutes | 120 minutes |
| 50% of AS Qualification | 33.3% of A-Level Qualification |
| Approaches, Psychopathology, Research Methods | Approaches, Biopsychology, Research Methods |

- Download Mark Scheme: A-Level (7182)

| A-Level: Paper 3 |
|---|
| 96 Marks |
| 120 minutes |
| 33.3% of A-Level Qualification |
| Students must answer one compulsory question and choose one topic per option. Compulsory: Issues and Debates in Psychology. Option 1: Relationships, Gender, Cognition and Development. Option 2: Schizophrenia, Eating Behaviour, Stress. Option 3: Aggression, Forensic Psychology, Addiction. |

- Download Past Paper

- Download Mark Scheme

Ch 2: Psychological Research Methods

Have you ever wondered whether the violence you see on television affects your behavior? Are you more likely to behave aggressively in real life after watching people behave violently in dramatic situations on the screen? Or, could seeing fictional violence actually get aggression out of your system, causing you to be more peaceful? How are children influenced by the media they are exposed to? A psychologist interested in the relationship between behavior and exposure to violent images might ask these very questions.

The topic of violence in the media today is contentious. Since ancient times, humans have been concerned about the effects of new technologies on our behaviors and thinking processes. The Greek philosopher Socrates, for example, worried that writing—a new technology at that time—would diminish people’s ability to remember because they could rely on written records rather than committing information to memory. In our world of quickly changing technologies, questions about the effects of media continue to emerge. Is it okay to talk on a cell phone while driving? Are headphones good to use in a car? What impact does text messaging have on reaction time while driving? These are types of questions that psychologist David Strayer asks in his lab.

Watch this short video to see how Strayer utilizes the scientific method to reach important conclusions regarding technology and driving safety.

How can we go about finding answers that are supported not by mere opinion, but by evidence that we can all agree on? The findings of psychological research can help us navigate issues like this.

Introduction to the Scientific Method

Learning Objectives

- Explain the steps of the scientific method

- Describe why the scientific method is important to psychology

- Summarize the processes of informed consent and debriefing

- Explain how research involving humans or animals is regulated

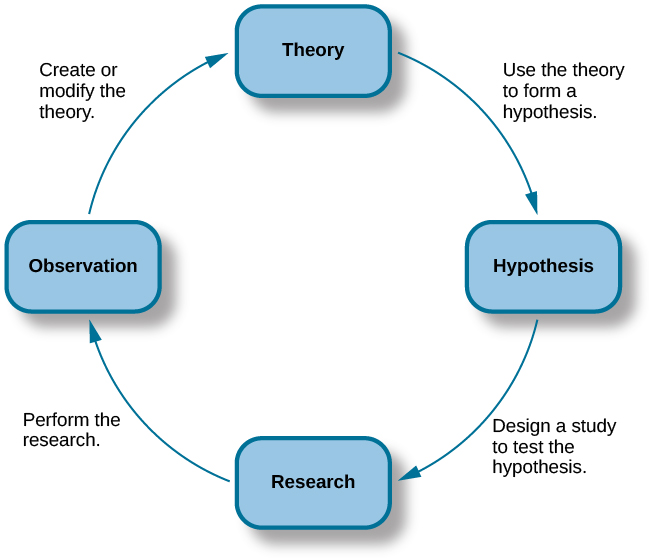

Scientists are engaged in explaining and understanding how the world around them works, and they are able to do so by coming up with theories that generate hypotheses that are testable and falsifiable. Theories that stand up to their tests are retained and refined, while those that do not are discarded or modified. In this way, research enables scientists to separate fact from simple opinion. Having good information generated from research aids in making wise decisions both in public policy and in our personal lives. In this section, you’ll see how psychologists use the scientific method to study and understand behavior.

The Scientific Process

The goal of all scientists is to better understand the world around them. Psychologists focus their attention on understanding behavior, as well as the cognitive (mental) and physiological (body) processes that underlie behavior. In contrast to other methods that people use to understand the behavior of others, such as intuition and personal experience, the hallmark of scientific research is that there is evidence to support a claim. Scientific knowledge is empirical: it is grounded in objective, tangible evidence that can be observed time and time again, regardless of who is observing.

While behavior is observable, the mind is not. If someone is crying, we can see the behavior. However, the reason for the behavior is more difficult to determine. Is the person crying due to being sad, in pain, or happy? Sometimes we can learn the reason for someone’s behavior by simply asking a question, like “Why are you crying?” However, there are situations in which an individual is either uncomfortable or unwilling to answer the question honestly, or is incapable of answering. For example, infants would not be able to explain why they are crying. In such circumstances, the psychologist must be creative in finding ways to better understand behavior. This module explores how scientific knowledge is generated, and how important that knowledge is in forming decisions in our personal lives and in the public domain.

Process of Scientific Research

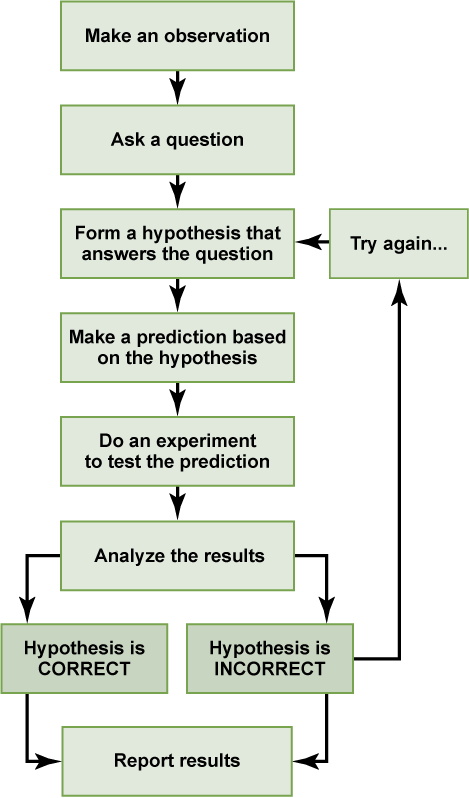

Scientific knowledge is advanced through a process known as the scientific method. Basically, ideas (in the form of theories and hypotheses) are tested against the real world (in the form of empirical observations), and those empirical observations lead to more ideas that are tested against the real world, and so on.

The basic steps in the scientific method are:

- Observe a natural phenomenon and define a question about it

- Make a hypothesis, or potential solution to the question

- Test the hypothesis

- If the hypothesis is true, find more evidence or find counter-evidence

- If the hypothesis is false, create a new hypothesis or try again

- Draw conclusions and repeat: the scientific method is never-ending, and no result is ever considered perfect

In order to ask an important question that may improve our understanding of the world, a researcher must first observe natural phenomena. By making observations, a researcher can define a useful question. After finding a question to answer, the researcher can then make a prediction (a hypothesis) about what he or she thinks the answer will be. This prediction is usually a statement about the relationship between two or more variables. After making a hypothesis, the researcher will then design an experiment to test his or her hypothesis and evaluate the data gathered. These data will either support or refute the hypothesis. Based on the conclusions drawn from the data, the researcher will then find more evidence to support the hypothesis, look for counter-evidence to further strengthen the hypothesis, revise the hypothesis and create a new experiment, or continue to incorporate the information gathered to answer the research question.

Basic Principles of the Scientific Method

Two key concepts in the scientific approach are theory and hypothesis. A theory is a well-developed set of ideas that propose an explanation for observed phenomena that can be used to make predictions about future observations. A hypothesis is a testable prediction that is arrived at logically from a theory. It is often worded as an if-then statement (e.g., if I study all night, I will get a passing grade on the test). The hypothesis is extremely important because it bridges the gap between the realm of ideas and the real world. As specific hypotheses are tested, theories are modified and refined to reflect and incorporate the result of these tests.

Other key components in following the scientific method include verifiability, predictability, falsifiability, and fairness. Verifiability means that an experiment must be replicable by another researcher. To achieve verifiability, researchers must make sure to document their methods and clearly explain how their experiment is structured and why it produces certain results.

Predictability in a scientific theory implies that the theory should enable us to make predictions about future events. The precision of these predictions is a measure of the strength of the theory.

Falsifiability refers to whether a hypothesis can be disproved. For a hypothesis to be falsifiable, it must be logically possible to make an observation or do a physical experiment that would show that there is no support for the hypothesis. Even when a hypothesis cannot be shown to be false, that does not necessarily mean it is not valid. Future testing may disprove the hypothesis. This does not mean that a hypothesis has to be shown to be false, just that it can be tested.

To determine whether a hypothesis is supported or not supported, psychological researchers must conduct hypothesis testing using statistics. Hypothesis testing is a statistical procedure that estimates how likely it is that results like those observed would occur by random chance alone. If hypothesis testing reveals that results were “statistically significant,” this means that there was support for the hypothesis and that the researchers can be reasonably confident that their result was not due to random chance. If the results are not statistically significant, this means that the researchers’ hypothesis was not supported.
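To make the idea of "not due to random chance" concrete, here is a minimal sketch of one simple kind of hypothesis test, a permutation test, written in Python. It is only an illustration under stated assumptions: the reaction-time numbers are invented, the function name `permutation_test` is hypothetical, and the 0.05 cutoff is a common convention rather than a rule taken from this chapter. Psychologists typically use other tests as well (such as t-tests), which are not shown here.

```python
import random


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in group means.

    The p-value is the share of random relabelings of the pooled scores
    whose mean difference is at least as large (in absolute value) as
    the difference that was actually observed.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(sum(group_a) / n_a - sum(group_b) / n_b)
    pooled = list(group_a) + list(group_b)

    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # pretend group labels were assigned by chance
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            extreme += 1
    return extreme / n_permutations


# Hypothetical braking reaction times in milliseconds (made-up numbers).
phone_group = [612, 655, 640, 701, 668, 690, 645, 677]
control_group = [540, 565, 580, 552, 571, 560, 590, 548]

p_value = permutation_test(phone_group, control_group)
ALPHA = 0.05  # conventional threshold, an assumption of this sketch
print(f"p = {p_value:.4f}; statistically significant: {p_value < ALPHA}")
```

A small p-value says that a difference this large would rarely arise if group membership made no difference, which is what researchers mean when they call a result statistically significant.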

Fairness implies that all data must be considered when evaluating a hypothesis. A researcher cannot pick and choose what data to keep and what to discard or focus specifically on data that support or do not support a particular hypothesis. All data must be accounted for, even if they invalidate the hypothesis.

Applying the Scientific Method

To see how this process works, let’s consider a specific theory and a hypothesis that might be generated from that theory. As you’ll learn in a later module, the James-Lange theory of emotion asserts that emotional experience relies on the physiological arousal associated with the emotional state. If you walked out of your home and discovered a very aggressive snake waiting on your doorstep, your heart would begin to race and your stomach churn. According to the James-Lange theory, these physiological changes would result in your feeling of fear. A hypothesis that could be derived from this theory might be that a person who is unaware of the physiological arousal that the sight of the snake elicits will not feel fear.

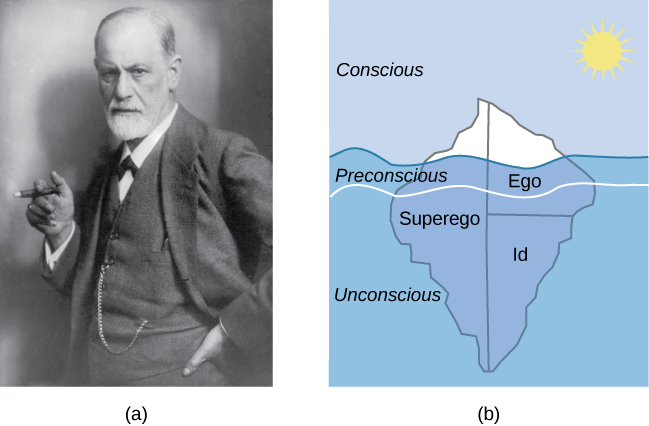

Remember that a good scientific hypothesis is falsifiable, or capable of being shown to be incorrect. Recall from the introductory module that Sigmund Freud had lots of interesting ideas to explain various human behaviors (Figure 5). However, a major criticism of Freud’s theories is that many of his ideas are not falsifiable; for example, it is impossible to imagine empirical observations that would disprove the existence of the id, the ego, and the superego—the three elements of personality described in Freud’s theories. Despite this, Freud’s theories are widely taught in introductory psychology texts because of their historical significance for personality psychology and psychotherapy, and these remain the root of all modern forms of therapy.

In contrast, the James-Lange theory does generate falsifiable hypotheses, such as the one described above. Some individuals who suffer significant injuries to their spinal columns are unable to feel the bodily changes that often accompany emotional experiences. Therefore, we could test the hypothesis by determining how emotional experiences differ between individuals who have the ability to detect these changes in their physiological arousal and those who do not. In fact, this research has been conducted and while the emotional experiences of people deprived of an awareness of their physiological arousal may be less intense, they still experience emotion (Chwalisz, Diener, & Gallagher, 1988).

Why the Scientific Method Is Important for Psychology

The use of the scientific method is one of the main features that separates modern psychology from earlier philosophical inquiries about the mind. Compared to chemistry, physics, and other “natural sciences,” psychology has long been considered one of the “social sciences” because of the subjective nature of the things it seeks to study. Many of the concepts that psychologists are interested in—such as aspects of the human mind, behavior, and emotions—are subjective and cannot be directly measured. Psychologists often rely instead on behavioral observations and self-reported data, which are considered by some to be illegitimate or lacking in methodological rigor. Applying the scientific method to psychology, therefore, helps to standardize the approach to understanding its very different types of information.

The scientific method allows psychological data to be replicated and confirmed in many instances, under different circumstances, and by a variety of researchers. Through replication of experiments, new generations of psychologists can reduce errors and broaden the applicability of theories. It also allows theories to be tested and validated instead of simply being conjectures that could never be verified or falsified. All of this allows psychologists to gain a stronger understanding of how the human mind works.

Scientific articles published in journals and psychology papers written in the style of the American Psychological Association (i.e., in “APA style”) are structured around the scientific method. These papers include an Introduction, which introduces the background information and outlines the hypotheses; a Methods section, which outlines the specifics of how the experiment was conducted to test the hypothesis; a Results section, which includes the statistics that tested the hypothesis and states whether it was supported or not supported; and a Discussion and Conclusion, which state the implications of finding support for, or no support for, the hypothesis. Writing articles and papers that adhere to the scientific method makes it easy for future researchers to repeat the study and attempt to replicate the results.

Ethics in Research

Today, scientists agree that good research is ethical in nature and is guided by a basic respect for human dignity and safety. However, as you will read in the Tuskegee Syphilis Study, this has not always been the case. Modern researchers must demonstrate that the research they perform is ethically sound. This section presents how ethical considerations affect the design and implementation of research conducted today.

Research Involving Human Participants

Any experiment involving the participation of human subjects is governed by extensive, strict guidelines designed to ensure that the experiment does not result in harm. Any research institution that receives federal support for research involving human participants must have access to an institutional review board (IRB). The IRB is a committee of individuals often made up of members of the institution’s administration, scientists, and community members (Figure 6). The purpose of the IRB is to review proposals for research that involves human participants. The IRB reviews these proposals with the principles mentioned above in mind, and generally, approval from the IRB is required in order for the experiment to proceed.

An institution’s IRB requires several components in any experiment it approves. For one, each participant must sign an informed consent form before they can participate in the experiment. An informed consent form provides a written description of what participants can expect during the experiment, including potential risks and implications of the research. It also lets participants know that their involvement is completely voluntary and can be discontinued without penalty at any time. Furthermore, the informed consent guarantees that any data collected in the experiment will remain completely confidential. In cases where research participants are under the age of 18, the parents or legal guardians are required to sign the informed consent form.

While the informed consent form should be as honest as possible in describing exactly what participants will be doing, sometimes deception is necessary to prevent participants’ knowledge of the exact research question from affecting the results of the study. Deception involves purposely misleading experiment participants in order to maintain the integrity of the experiment, but not to the point where the deception could be considered harmful. For example, if we are interested in how our opinion of someone is affected by their attire, we might use deception in describing the experiment to prevent that knowledge from affecting participants’ responses. In cases where deception is involved, participants must receive a full debriefing upon conclusion of the study—complete, honest information about the purpose of the experiment, how the data collected will be used, the reasons why deception was necessary, and information about how to obtain additional information about the study.

Dig Deeper: Ethics and the Tuskegee Syphilis Study

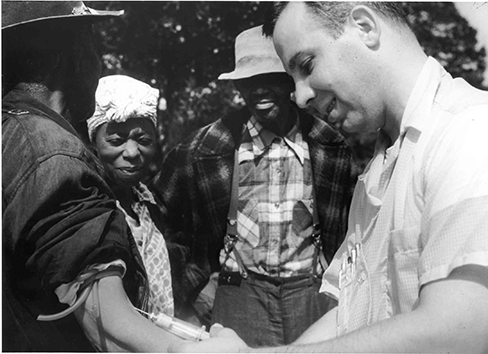

Unfortunately, the ethical guidelines that exist for research today were not always applied in the past. In 1932, poor, rural, black, male sharecroppers from Tuskegee, Alabama, were recruited to participate in an experiment conducted by the U.S. Public Health Service, with the aim of studying syphilis in black men (Figure 7). In exchange for free medical care, meals, and burial insurance, 600 men agreed to participate in the study. A little more than half of the men tested positive for syphilis, and they served as the experimental group (given that the researchers could not randomly assign participants to groups, this represents a quasi-experiment). The remaining syphilis-free individuals served as the control group. However, those individuals that tested positive for syphilis were never informed that they had the disease.

While there was no treatment for syphilis when the study began, by 1947 penicillin was recognized as an effective treatment for the disease. Despite this, no penicillin was administered to the participants in this study, and the participants were not allowed to seek treatment at any other facilities if they continued in the study. Over the course of 40 years, many of the participants unknowingly spread syphilis to their wives (and subsequently their children born from their wives) and eventually died because they never received treatment for the disease. This study was discontinued in 1972 when the experiment was discovered by the national press (Tuskegee University, n.d.). The resulting outrage over the experiment led directly to the National Research Act of 1974 and the strict ethical guidelines for research on humans described in this chapter. Why is this study unethical? How were the men who participated and their families harmed as a function of this research?

Learn more about the Tuskegee Syphilis Study on the CDC website.

Research Involving Animal Subjects

Animal researchers are not immune to ethical concerns. Indeed, the humane and ethical treatment of animal research subjects is a critical aspect of this type of research. Researchers must design their experiments to minimize any pain or distress experienced by animals serving as research subjects.

Whereas IRBs review research proposals that involve human participants, animal experimental proposals are reviewed by an Institutional Animal Care and Use Committee (IACUC). An IACUC consists of institutional administrators, scientists, veterinarians, and community members. This committee is charged with ensuring that all experimental proposals require the humane treatment of animal research subjects. It also conducts semi-annual inspections of all animal facilities to ensure that the research protocols are being followed. No animal research project can proceed without the committee’s approval.

Introduction to Approaches to Research

- Differentiate between descriptive, correlational, and experimental research

- Explain the strengths and weaknesses of case studies, naturalistic observation, and surveys

- Describe the strengths and weaknesses of archival research

- Compare longitudinal and cross-sectional approaches to research

- Explain what a correlation coefficient tells us about the relationship between variables

- Describe why correlation does not mean causation

- Describe the experimental process, including ways to control for bias

- Identify and differentiate between independent and dependent variables

Psychologists use descriptive, experimental, and correlational methods to conduct research. Descriptive, or qualitative, methods include the case study, naturalistic observation, surveys, archival research, longitudinal research, and cross-sectional research.

Experiments are conducted in order to determine cause-and-effect relationships. In ideal experimental design, the only difference between the experimental and control groups is whether participants are exposed to the experimental manipulation. Each group goes through all phases of the experiment, but each group will experience a different level of the independent variable: the experimental group is exposed to the experimental manipulation, and the control group is not exposed to the experimental manipulation. The researcher then measures the changes that are produced in the dependent variable in each group. Once data is collected from both groups, it is analyzed statistically to determine if there are meaningful differences between the groups.
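The logic of splitting participants into an experimental group and a control group can be sketched in a few lines of Python. This is a minimal illustration, assuming a simple shuffle-and-split procedure; the function name `randomly_assign` and the participant IDs are hypothetical and do not come from any particular study.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups.

    The groups are equal in size when the number of participants is
    even; with an odd number, the second group gets one extra person.
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


participants = [f"P{i:02d}" for i in range(1, 21)]  # hypothetical IDs
experimental, control = randomly_assign(participants, seed=42)

print("experimental group:", experimental)  # exposed to the manipulation
print("control group:     ", control)       # not exposed to it
```

Because chance alone decides who lands in which group, pre-existing differences between people tend to balance out across the two groups, so a later difference in the dependent variable can more plausibly be attributed to the manipulation.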

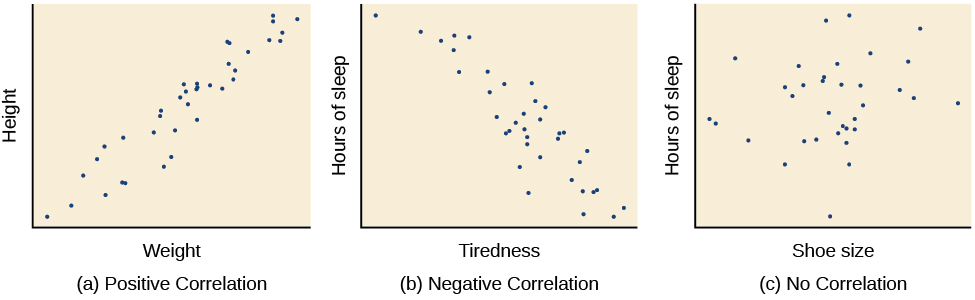

When scientists passively observe and measure phenomena, it is called correlational research. Here, psychologists do not intervene and change behavior, as they do in experiments. In correlational research, they identify patterns of relationships, but usually cannot infer what causes what. Importantly, a single correlation describes the relationship between exactly two variables at a time, no more and no less.

Watch It: More on Research

If you enjoy learning through lectures and want an interesting and comprehensive summary of this section, then click on the YouTube link to watch a lecture given by MIT Professor John Gabrieli. Start at the 30:45 mark and watch through the end to hear examples of actual psychological studies and how they were analyzed. Listen for references to independent and dependent variables, experimenter bias, and double-blind studies. In the lecture, you’ll learn about breaking social norms, “WEIRD” research, why expectations matter, how a warm cup of coffee might make you nicer, why you should change your answer on a multiple choice test, and why praise for intelligence won’t make you any smarter.


Descriptive Research

There are many research methods available to psychologists in their efforts to understand, describe, and explain behavior and the cognitive and biological processes that underlie it. Some methods rely on observational techniques. Other approaches involve interactions between the researcher and the individuals who are being studied, ranging from a series of simple questions, to extensive in-depth interviews, to well-controlled experiments.

The three main categories of psychological research are descriptive, correlational, and experimental research. Research studies that do not test specific relationships between variables are called descriptive, or qualitative, studies. These studies are used to describe general or specific behaviors and attributes that are observed and measured. In the early stages of research it might be difficult to form a hypothesis, especially when there is not any existing literature in the area. In these situations designing an experiment would be premature, as the question of interest is not yet clearly defined as a hypothesis. Often a researcher will begin with a non-experimental approach, such as a descriptive study, to gather more information about the topic before designing an experiment or correlational study to address a specific hypothesis. Descriptive research is distinct from correlational research, in which psychologists formally test whether a relationship exists between two or more variables. Experimental research goes a step beyond descriptive and correlational research and randomly assigns people to different conditions, using hypothesis testing to make inferences about how these conditions affect behavior. It aims to determine if one variable directly impacts and causes another. Correlational and experimental research both typically use hypothesis testing, whereas descriptive research does not.

Each of these research methods has unique strengths and weaknesses, and each method may only be appropriate for certain types of research questions. For example, studies that rely primarily on observation produce incredible amounts of information, but the ability to apply this information to the larger population is somewhat limited because of small sample sizes. Survey research, on the other hand, allows researchers to easily collect data from relatively large samples. While this allows for results to be generalized to the larger population more easily, the information that can be collected on any given survey is somewhat limited and subject to problems associated with any type of self-reported data. Some researchers conduct archival research by using existing records. While this can be a fairly inexpensive way to collect data that can provide insight into a number of research questions, researchers using this approach have no control on how or what kind of data was collected.

Correlational research can find a relationship between two variables, but the only way a researcher can claim that the relationship between the variables is cause and effect is to perform an experiment. In experimental research, which will be discussed later in the text, there is a tremendous amount of control over variables of interest. While this is a powerful approach, experiments are often conducted in very artificial settings. This calls into question the validity of experimental findings with regard to how they would apply in real-world settings. In addition, many of the questions that psychologists would like to answer cannot be pursued through experimental research because of ethical concerns.

The three main types of descriptive studies are naturalistic observation, case studies, and surveys.

Naturalistic Observation

If you want to understand how behavior occurs, one of the best ways to gain information is to simply observe the behavior in its natural context. However, people might change their behavior in unexpected ways if they know they are being observed. How do researchers obtain accurate information when people tend to hide their natural behavior? As an example, imagine that your professor asks everyone in your class to raise their hand if they always wash their hands after using the restroom. Chances are that almost everyone in the classroom will raise their hand, but do you think hand washing after every trip to the restroom is really that universal?

This is very similar to the phenomenon mentioned earlier in this module: many individuals do not feel comfortable answering a question honestly. But if we are committed to finding out the facts about hand washing, we have other options available to us.

Suppose we send a classmate into the restroom to actually watch whether everyone washes their hands after using the restroom. Will our observer blend into the restroom environment by wearing a white lab coat, sitting with a clipboard, and staring at the sinks? We want our researcher to be inconspicuous, perhaps standing at one of the sinks pretending to put in contact lenses while secretly recording the relevant information. This type of observational study is called naturalistic observation: observing behavior in its natural setting. To better understand peer exclusion, Suzanne Fanger collaborated with colleagues at the University of Texas to observe the behavior of preschool children on a playground. How did the observers remain inconspicuous over the duration of the study? They equipped a few of the children with wireless microphones (which the children quickly forgot about) and observed while taking notes from a distance. Also, the children in that particular preschool (a “laboratory preschool”) were accustomed to having observers on the playground (Fanger, Frankel, & Hazen, 2012).

It is critical that the observer be as unobtrusive and as inconspicuous as possible: when people know they are being watched, they are less likely to behave naturally. If you have any doubt about this, ask yourself how your driving behavior might differ in two situations: In the first situation, you are driving down a deserted highway during the middle of the day; in the second situation, you are being followed by a police car down the same deserted highway (Figure 9).

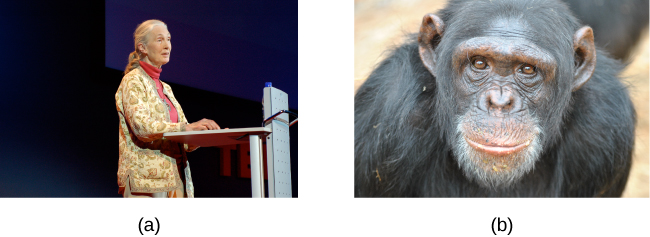

It should be pointed out that naturalistic observation is not limited to research involving humans. Indeed, some of the best-known examples of naturalistic observation involve researchers going into the field to observe various kinds of animals in their own environments. As with human studies, the researchers maintain their distance and avoid interfering with the animal subjects so as not to influence their natural behaviors. Scientists have used this technique to study social hierarchies and interactions among animals ranging from ground squirrels to gorillas. The information provided by these studies is invaluable in understanding how those animals organize socially and communicate with one another. The anthropologist Jane Goodall, for example, spent nearly five decades observing the behavior of chimpanzees in Africa (Figure 10). As an illustration of the types of concerns that a researcher might encounter in naturalistic observation, some scientists criticized Goodall for giving the chimps names instead of referring to them by numbers—using names was thought to undermine the emotional detachment required for the objectivity of the study (McKie, 2010).

The greatest benefit of naturalistic observation is the validity, or accuracy, of information collected unobtrusively in a natural setting. Having individuals behave as they normally would in a given situation means that we have a higher degree of ecological validity, or realism, than we might achieve with other research approaches. Therefore, our ability to generalize the findings of the research to real-world situations is enhanced. If done correctly, we need not worry about people or animals modifying their behavior simply because they are being observed. Sometimes, people may assume that reality programs give us a glimpse into authentic human behavior. However, the principle of inconspicuous observation is violated as reality stars are followed by camera crews and are interviewed on camera for personal confessionals. Given that environment, we must doubt how natural and realistic their behaviors are.

The major downside of naturalistic observation is that it is often difficult to set up and control. In our restroom study, what if you stood in the restroom all day prepared to record people’s hand washing behavior and no one came in? Or, what if you have been closely observing a troop of gorillas for weeks only to find that they migrated to a new place while you were sleeping in your tent? The benefit of realistic data comes at a cost. As a researcher you have no control of when (or if) you have behavior to observe. In addition, this type of observational research often requires significant investments of time, money, and a good dose of luck.

Sometimes studies involve structured observation. In these cases, people are observed while engaging in set, specific tasks. An excellent example of structured observation comes from the Strange Situation by Mary Ainsworth (you will read more about this in the module on lifespan development). The Strange Situation is a procedure used to evaluate attachment styles that exist between an infant and caregiver. In this scenario, caregivers bring their infants into a room filled with toys. The Strange Situation involves a number of phases, including a stranger coming into the room, the caregiver leaving the room, and the caregiver’s return to the room. The infant’s behavior is closely monitored at each phase, but it is the behavior of the infant upon being reunited with the caregiver that is most telling in terms of characterizing the infant’s attachment style with the caregiver.

Another potential problem in observational research is observer bias. Generally, people who act as observers are closely involved in the research project and may unconsciously skew their observations to fit their research goals or expectations. To protect against this type of bias, researchers should have clear criteria established for the types of behaviors recorded and how those behaviors should be classified. In addition, researchers often compare observations of the same event by multiple observers, in order to test inter-rater reliability: a measure of reliability that assesses the consistency of observations by different observers.
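As a rough illustration of how inter-rater reliability might be quantified, the sketch below computes simple percent agreement and Cohen's kappa (one common agreement statistic that corrects for chance) for two observers. The observer codes are invented for illustration, and the text above does not say which statistic any particular study used.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of observations that two raters coded identically."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of events.")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Agreement corrected for the agreement expected by chance alone."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    # Chance agreement: probability that both raters independently pick the same code.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used a single identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes from two observers watching the same ten playground events.
observer_1 = ["hit", "none", "none", "yell", "none", "hit", "none", "yell", "none", "none"]
observer_2 = ["hit", "none", "yell", "yell", "none", "hit", "none", "none", "none", "none"]

agreement = percent_agreement(observer_1, observer_2)
kappa = cohens_kappa(observer_1, observer_2)
print(f"Percent agreement: {agreement:.2f}")
print(f"Cohen's kappa: {kappa:.2f}")
```

Kappa comes out lower than raw agreement here because both observers code "none" most of the time, so some of their agreement would be expected by chance.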

Case Studies

In 2011, the New York Times published a feature story on Krista and Tatiana Hogan, Canadian twin girls. These particular twins are unique because Krista and Tatiana are conjoined twins, connected at the head. There is evidence that the two girls are connected in a part of the brain called the thalamus, which is a major sensory relay center. Most incoming sensory information is sent through the thalamus before reaching higher regions of the cerebral cortex for processing.

The implications of this potential connection mean that it might be possible for one twin to experience the sensations of the other twin. For instance, if Krista is watching a particularly funny television program, Tatiana might smile or laugh even if she is not watching the program. This particular possibility has piqued the interest of many neuroscientists who seek to understand how the brain uses sensory information.

These twins represent an enormous resource in the study of the brain, and since their condition is very rare, it is likely that as long as their family agrees, scientists will follow these girls very closely throughout their lives to gain as much information as possible (Dominus, 2011).

In observational research, scientists are conducting a clinical or case study when they focus on one person or just a few individuals. Indeed, some scientists spend their entire careers studying just 10–20 individuals. Why would they do this? Obviously, when they focus their attention on a very small number of people, they can gain a tremendous amount of insight into those cases. The richness of information that is collected in clinical or case studies is unmatched by any other single research method. This allows the researcher to have a very deep understanding of the individuals and the particular phenomenon being studied.

If clinical or case studies provide so much information, why are they not more frequent among researchers? As it turns out, the major benefit of this particular approach is also a weakness. As mentioned earlier, this approach is often used when studying individuals who are interesting to researchers because they have a rare characteristic. Therefore, the individuals who serve as the focus of case studies are not like most other people. If scientists ultimately want to explain all behavior, focusing attention on such a special group of people can make it difficult to generalize any observations to the larger population as a whole. Generalizing refers to the ability to apply the findings of a particular research project to larger segments of society. Again, case studies provide enormous amounts of information, but since the cases are so specific, the potential to apply what’s learned to the average person may be very limited.

Surveys

Often, psychologists develop surveys as a means of gathering data. Surveys are lists of questions to be answered by research participants, and can be delivered as paper-and-pencil questionnaires, administered electronically, or conducted verbally (Figure 11). Generally, the survey itself can be completed in a short time, and the ease of administering a survey makes it easy to collect data from a large number of people.

Surveys allow researchers to gather data from larger samples than may be afforded by other research methods. A sample is a subset of individuals selected from a population, which is the overall group of individuals that the researchers are interested in. Researchers study the sample and seek to generalize their findings to the population.

Surveys have both strengths and weaknesses in comparison to case studies. By using surveys, we can collect information from a larger sample of people. A larger sample is better able to reflect the actual diversity of the population, thus allowing better generalizability. Therefore, if our sample is sufficiently large and diverse, we can assume that the data we collect from the survey can be generalized to the larger population with more certainty than the information collected through a case study. However, given the greater number of people involved, we are not able to collect the same depth of information on each person that would be collected in a case study.
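The link between sample size and generalizability can be sketched with a small simulation. Everything here is an assumption made for illustration: the population is simulated, the variable (weekly drinks) is hypothetical, and samples are drawn at random, which real surveys do not always achieve.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

# Hypothetical population: a simulated score (e.g., drinks per week) for 100,000 people.
population = [random.gauss(5, 2) for _ in range(100_000)]
true_mean = statistics.fmean(population)


def typical_error(sample_size, repeats=200):
    """Average absolute gap between a random sample's mean and the population mean."""
    errors = []
    for _ in range(repeats):
        sample = random.sample(population, sample_size)
        errors.append(abs(statistics.fmean(sample) - true_mean))
    return statistics.fmean(errors)


small = typical_error(10)     # roughly the scale of a set of case studies
large = typical_error(1000)   # a modest survey

print(f"Typical error with n=10:   {small:.3f}")
print(f"Typical error with n=1000: {large:.3f}")
```

The larger sample's mean lands much closer to the population mean on average, which is the sense in which survey results generalize more readily. Note that a large sample only helps if it is drawn in a way that represents the population.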

Another potential weakness of surveys is something we touched on earlier in this chapter: people don’t always give accurate responses. They may lie, misremember, or answer questions in a way that they think makes them look good. For example, people may report drinking less alcohol than is actually the case.

Any number of research questions can be answered through the use of surveys. One real-world example is the research conducted by Jenkins, Ruppel, Kizer, Yehl, and Griffin (2012) about the backlash against the US Arab-American community following the terrorist attacks of September 11, 2001. Jenkins and colleagues wanted to determine to what extent these negative attitudes toward Arab-Americans still existed nearly a decade after the attacks occurred. In one study, 140 research participants filled out a survey with 10 questions, including questions asking directly about the participant’s overt prejudicial attitudes toward people of various ethnicities. The survey also asked indirect questions about how likely the participant would be to interact with a person of a given ethnicity in a variety of settings (such as, “How likely do you think it is that you would introduce yourself to a person of Arab-American descent?”). The results of the research suggested that participants were unwilling to report prejudicial attitudes toward any ethnic group. However, there were significant differences between their pattern of responses to questions about social interaction with Arab-Americans compared to other ethnic groups: they indicated less willingness for social interaction with Arab-Americans compared to the other ethnic groups. This suggested that the participants harbored subtle forms of prejudice against Arab-Americans, despite their assertions that this was not the case (Jenkins et al., 2012).

Archival Research

In comparing archival research to other research methods, there are several important distinctions. For one, the researcher employing archival research never directly interacts with research participants. Therefore, the investment of time and money to collect data is considerably less with archival research. Additionally, researchers have no control over what information was originally collected. Therefore, research questions have to be tailored so they can be answered within the structure of the existing data sets. There is also no guarantee of consistency between the records from one source to another, which might make comparing and contrasting different data sets problematic.

Longitudinal and Cross-Sectional Research

Sometimes we want to see how people change over time, as in studies of human development and lifespan. When we test the same group of individuals repeatedly over an extended period of time, we are conducting longitudinal research. Longitudinal research is a research design in which data-gathering is administered repeatedly over an extended period of time. For example, we may survey a group of individuals about their dietary habits at age 20, retest them a decade later at age 30, and then again at age 40.

Another approach is cross-sectional research. In cross-sectional research, a researcher compares multiple segments of the population at the same time. Using the dietary habits example above, the researcher might directly compare different groups of people by age. Instead of observing a group of people for 20 years to see how their dietary habits changed from decade to decade, the researcher would study a group of 20-year-old individuals and compare them to a group of 30-year-old individuals and a group of 40-year-old individuals. While cross-sectional research requires a shorter-term investment, it is also limited by differences between the generations (or cohorts) that have nothing to do with age per se, but rather reflect the social and cultural experiences that make different generations of individuals different from one another.

To illustrate this concept, consider the following survey findings. In recent years there has been significant growth in the popular support of same-sex marriage. Many studies on this topic break down survey participants into different age groups. In general, younger people are more supportive of same-sex marriage than are those who are older (Jones, 2013). Does this mean that as we age we become less open to the idea of same-sex marriage, or does this mean that older individuals have different perspectives because of the social climates in which they grew up? Longitudinal research is a powerful approach because the same individuals are involved in the research project over time, which means that the researchers need to be less concerned with differences among cohorts affecting the results of their study.

Often longitudinal studies are employed when researching various diseases in an effort to understand particular risk factors. Such studies often involve tens of thousands of individuals who are followed for several decades. Given the enormous number of people involved in these studies, researchers can feel confident that their findings can be generalized to the larger population. The Cancer Prevention Study-3 (CPS-3) is one of a series of longitudinal studies sponsored by the American Cancer Society aimed at determining predictive risk factors associated with cancer. When participants enter the study, they complete a survey about their lives and family histories, providing information on factors that might cause or prevent the development of cancer. Then every few years the participants receive additional surveys to complete. In the end, hundreds of thousands of participants will be tracked over 20 years to determine which of them develop cancer and which do not.

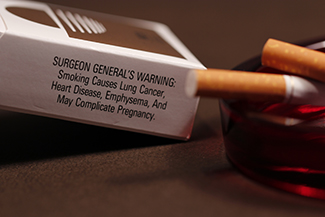

Clearly, this type of research is important and potentially very informative. For instance, earlier longitudinal studies sponsored by the American Cancer Society provided some of the first scientific demonstrations of the now well-established links between increased rates of cancer and smoking (American Cancer Society, n.d.) (Figure 13).

As with any research strategy, longitudinal research is not without limitations. For one, these studies require an incredible time investment by the researcher and research participants. Given that some longitudinal studies take years, if not decades, to complete, the results will not be known for a considerable period of time. In addition to the time demands, these studies also require a substantial financial investment. Many researchers are unable to commit the resources necessary to see a longitudinal project through to the end.

Research participants must also be willing to continue their participation for an extended period of time, and this can be problematic. People move, get married and take new names, get ill, and eventually die. Even without significant life changes, some people may simply choose to discontinue their participation in the project. As a result, attrition rates, or reductions in the number of research participants due to dropouts, are quite high in longitudinal studies and increase over the course of a project. For this reason, researchers using this approach typically recruit many participants fully expecting that a substantial number will drop out before the end. As the study progresses, they continually check whether the sample still represents the larger population, and make adjustments as necessary.
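The arithmetic behind over-recruiting can be sketched as follows. This is a simplified illustration that assumes a constant dropout rate per follow-up wave; the 10% rate, five waves, and target of 1,000 completers are hypothetical numbers, and real attrition typically varies from wave to wave.

```python
import math


def remaining_after(start, dropout_rate, waves):
    """Expected participants left after a number of follow-up waves."""
    return start * (1 - dropout_rate) ** waves


def recruits_needed(target_final, dropout_rate, waves):
    """Smallest starting sample expected to leave at least target_final participants."""
    return math.ceil(target_final / (1 - dropout_rate) ** waves)


# Hypothetical plan: 10% of remaining participants drop out at each of 5 waves.
left = remaining_after(1000, 0.10, 5)
needed = recruits_needed(1000, 0.10, 5)

print(f"Starting with 1000, about {left:.0f} remain after 5 waves.")
print(f"To finish with 1000, recruit at least {needed}.")
```

Under these assumptions a study that starts with 1,000 people ends with roughly 590, so a researcher who needs 1,000 completers would have to recruit about 1,700.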

Correlational Research

Did you know that as sales in ice cream increase, so does the overall rate of crime? Is it possible that indulging in your favorite flavor of ice cream could send you on a crime spree? Or, after committing crime do you think you might decide to treat yourself to a cone? There is no question that a relationship exists between ice cream and crime (e.g., Harper, 2013), but it would be pretty foolish to decide that one thing actually caused the other to occur.

It is much more likely that both ice cream sales and crime rates are related to the temperature outside. When the temperature is warm, there are lots of people out of their houses, interacting with each other, getting annoyed with one another, and sometimes committing crimes. Also, when it is warm outside, we are more likely to seek a cool treat like ice cream. How do we determine if there is indeed a relationship between two things? And when there is a relationship, how can we discern whether it is attributable to coincidence or causation?

Correlation Does Not Indicate Causation

Correlational research is useful because it allows us to discover the strength and direction of relationships that exist between two variables. However, correlation is limited because establishing the existence of a relationship tells us little about cause and effect. While variables are sometimes correlated because one does cause the other, it could also be that some other factor, a confounding variable, is actually causing the systematic movement in our variables of interest. In the ice cream/crime rate example mentioned earlier, temperature is a confounding variable that could account for the relationship between the two variables.
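The ice cream and crime example can be sketched as a simulation in which temperature drives both variables and neither affects the other. All numbers below are invented for illustration. The sketch uses the Pearson correlation coefficient and then removes the straight-line effect of temperature from each variable (a simple form of partial correlation) to show the apparent relationship disappearing.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def residuals(y, x):
    """What is left of y after removing its straight-line dependence on x."""
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    return [b - (mean_y + slope * (a - mean_x)) for a, b in zip(x, y)]


# Simulated days: temperature influences both variables; they do not influence each other.
days = 2000
temperature = [random.uniform(0, 35) for _ in range(days)]
ice_cream = [20 + 3 * t + random.gauss(0, 10) for t in temperature]
crime = [50 + 2 * t + random.gauss(0, 10) for t in temperature]

raw_r = pearson_r(ice_cream, crime)
partial_r = pearson_r(residuals(ice_cream, temperature), residuals(crime, temperature))

print(f"Correlation of ice cream sales and crime: {raw_r:.2f}")
print(f"Same correlation after accounting for temperature: {partial_r:.2f}")
```

The raw correlation is strong even though the simulation contains no causal link between ice cream and crime, and it falls to roughly zero once temperature is accounted for. In real data the confounding variable is often unknown or unmeasured, which is why this kind of adjustment cannot substitute for an experiment.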

Even when we cannot point to clear confounding variables, we should not assume that a correlation between two variables implies that one variable causes changes in another. This can be frustrating when a cause-and-effect relationship seems clear and intuitive. Think back to our discussion of the research done by the American Cancer Society and how their research projects were some of the first demonstrations of the link between smoking and cancer. It seems reasonable to assume that smoking causes cancer, but if we were limited to correlational research, we would be overstepping our bounds by making this assumption.

Unfortunately, people mistakenly make claims of causation as a function of correlations all the time. Such claims are especially common in advertisements and news stories. For example, recent research found that people who eat cereal on a regular basis achieve healthier weights than those who rarely eat cereal (Frantzen, Treviño, Echon, Garcia-Dominic, & DiMarco, 2013; Barton et al., 2005). Guess how the cereal companies report this finding. Does eating cereal really cause an individual to maintain a healthy weight, or are there other possible explanations, such as, someone at a healthy weight is more likely to regularly eat a healthy breakfast than someone who is obese or someone who avoids meals in an attempt to diet (Figure 15)? While correlational research is invaluable in identifying relationships among variables, a major limitation is the inability to establish causality. Psychologists want to make statements about cause and effect, but the only way to do that is to conduct an experiment to answer a research question. The next section describes how scientific experiments incorporate methods that eliminate, or control for, alternative explanations, which allow researchers to explore how changes in one variable cause changes in another variable.

Watch this clip from Freakonomics for an example of how correlation does not indicate causation.


Illusory Correlations

The temptation to make erroneous cause-and-effect statements based on correlational research is not the only way we tend to misinterpret data. We also tend to make the mistake of illusory correlations, especially with unsystematic observations. Illusory correlations, or false correlations, occur when people believe that relationships exist between two things when no such relationship exists. One well-known illusory correlation is the supposed effect that the moon’s phases have on human behavior. Many people passionately assert that human behavior is affected by the phase of the moon, and specifically, that people act strangely when the moon is full (Figure 16).

There is no denying that the moon exerts a powerful influence on our planet. The ebb and flow of the ocean’s tides are tightly tied to the gravitational forces of the moon. Many people believe, therefore, that it is logical that we are affected by the moon as well. After all, our bodies are largely made up of water. A meta-analysis of nearly 40 studies consistently demonstrated, however, that the relationship between the moon and our behavior does not exist (Rotton & Kelly, 1985). While we may pay more attention to odd behavior during the full phase of the moon, the rates of odd behavior remain constant throughout the lunar cycle.

Why are we so apt to believe in illusory correlations like this? Often we read or hear about them and simply accept the information as valid. Or, we have a hunch about how something works and then look for evidence to support that hunch, ignoring evidence that would tell us our hunch is false; this is known as confirmation bias. Other times, we find illusory correlations based on the information that comes most easily to mind, even if that information is severely limited. And while we may feel confident that we can use these relationships to better understand and predict the world around us, illusory correlations can have significant drawbacks. For example, research suggests that illusory correlations, in which certain behaviors are inaccurately attributed to certain groups, are involved in the formation of prejudicial attitudes that can ultimately lead to discriminatory behavior (Fiedler, 2004).

We all have a tendency to make illusory correlations from time to time. Try to think of an illusory correlation that is held by you, a family member, or a close friend. How do you think this illusory correlation came about, and what can be done in the future to combat it?

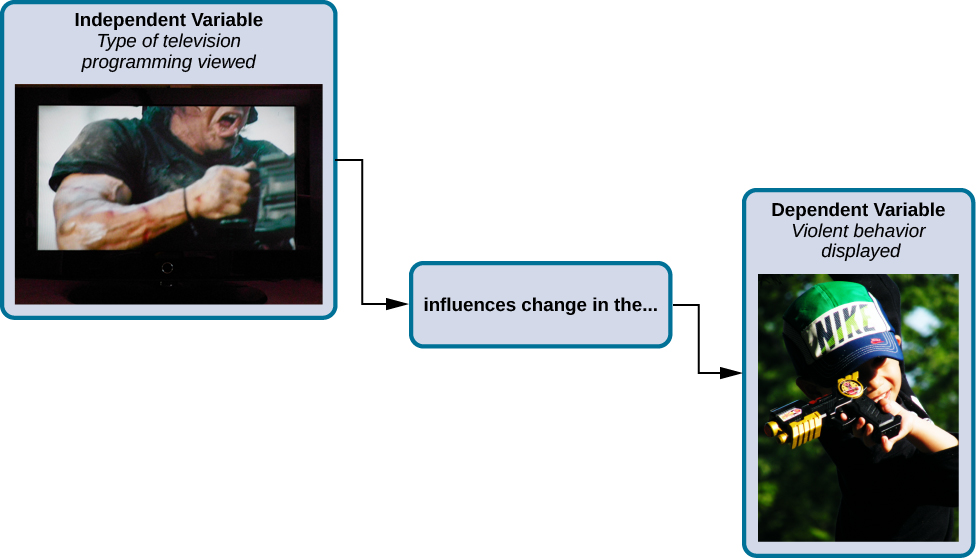

Experiments

Causality: Conducting Experiments and Using the Data

Experimental Hypothesis

In order to conduct an experiment, a researcher must have a specific hypothesis to be tested. As you’ve learned, hypotheses can be formulated either through direct observation of the real world or after careful review of previous research. For example, if you think that children should not be allowed to watch violent programming on television because doing so would cause them to behave more violently, then you have basically formulated a hypothesis—namely, that watching violent television programs causes children to behave more violently. How might you have arrived at this particular hypothesis? You may have younger relatives who watch cartoons featuring characters using martial arts to save the world from evildoers, with an impressive array of punching, kicking, and defensive postures. You notice that after watching these programs for a while, your young relatives mimic the fighting behavior of the characters portrayed in the cartoon (Figure 17).

These sorts of personal observations are what often lead us to formulate a specific hypothesis, but we cannot use limited personal observations and anecdotal evidence to rigorously test our hypothesis. Instead, to find out if real-world data supports our hypothesis, we have to conduct an experiment.

Designing an Experiment

The most basic experimental design involves two groups: the experimental group and the control group. The two groups are designed to be the same except for one difference: the experimental manipulation. The experimental group gets the experimental manipulation, that is, the treatment or variable being tested (in this case, violent TV images), and the control group does not. Since experimental manipulation is the only difference between the experimental and control groups, we can be sure that any differences between the two are due to experimental manipulation rather than chance.

In our example of how violent television programming might affect violent behavior in children, we have the experimental group view violent television programming for a specified time and then measure their violent behavior. It is important for the control group to be treated similarly to the experimental group, with the exception that the control group does not receive the experimental manipulation. Therefore, we have the control group watch nonviolent television programming for the same amount of time as the experimental group, and then measure their violent behavior in the same way.

We also need to precisely define, or operationalize, what is considered violent and nonviolent. An operational definition is a description of how we will measure our variables, and it is important in allowing others to understand exactly how and what a researcher measures in a particular experiment. In operationalizing violent behavior, we might choose to count only physical acts like kicking or punching as instances of this behavior, or we also may choose to include angry verbal exchanges. Whatever we determine, it is important that we operationalize violent behavior in such a way that anyone who hears about our study for the first time knows exactly what we mean by violence. This aids people’s ability to interpret our data as well as their capacity to repeat our experiment should they choose to do so.
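To see how much the choice of operational definition matters, here is a minimal sketch in Python. The behavior labels, the two definitions, and the playground log are all made up for illustration; they are assumptions, not part of any real coding scheme.

```python
from collections import Counter

# Hypothetical coding schemes: which observed acts count as "violent behavior".
# Both definitions are illustrative assumptions, not taken from a real study.
PHYSICAL_ONLY = {"kick", "punch", "push"}
PHYSICAL_AND_VERBAL = PHYSICAL_ONLY | {"angry_shout", "threat"}


def count_violent_acts(observed_acts, definition):
    """Count the observed acts that fall under the given operational definition."""
    counts = Counter(act for act in observed_acts if act in definition)
    return sum(counts.values()), dict(counts)


# Made-up observation log for one child during the playground hour.
log = ["share_toy", "kick", "angry_shout", "punch", "kick", "laugh", "threat"]

narrow_total, narrow_breakdown = count_violent_acts(log, PHYSICAL_ONLY)
broad_total, broad_breakdown = count_violent_acts(log, PHYSICAL_AND_VERBAL)

print("Physical acts only:", narrow_total, narrow_breakdown)
print("Physical and verbal acts:", broad_total, broad_breakdown)
```

The same log yields a different count of violent acts under each definition, which is why the definition has to be stated explicitly before anyone can interpret or repeat the study.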

Once we have operationalized what is considered violent television programming and what is considered violent behavior from our experiment participants, we need to establish how we will run our experiment. In this case, we might have participants watch a 30-minute television program (either violent or nonviolent, depending on their group membership) before sending them out to a playground for an hour where their behavior is observed and the number and type of violent acts is recorded.

Ideally, the people who observe and record the children’s behavior are unaware of who was assigned to the experimental or control group, in order to control for experimenter bias. Experimenter bias refers to the possibility that a researcher’s expectations might skew the results of the study. Remember, conducting an experiment requires a lot of planning, and the people involved in the research project have a vested interest in supporting their hypotheses. If the observers knew which child was in which group, it might influence how much attention they paid to each child’s behavior as well as how they interpreted that behavior. Keeping the observers blind to which child is in which group protects against those biases. A study in which only one party is kept unaware of group assignments is a single-blind study. Most commonly, it is the participants who are unaware of which group they are in (experimental or control), while the researcher who developed the experiment knows which participants are in each group.

In a double-blind study, both the researchers and the participants are blind to group assignments. Why would a researcher want to run a study where no one knows who is in which group? Because by doing so, we can control for both experimenter and participant expectations. If you are familiar with the phrase placebo effect, you already have some idea as to why this is an important consideration. The placebo effect occurs when people’s expectations or beliefs influence or determine their experience in a given situation. In other words, simply expecting something to happen can actually make it happen.

The placebo effect is commonly described in terms of testing the effectiveness of a new medication. Imagine that you work in a pharmaceutical company, and you think you have a new drug that is effective in treating depression. To demonstrate that your medication is effective, you run an experiment with two groups: The experimental group receives the medication, and the control group does not. But you don’t want participants to know whether they received the drug or not.

Why is that? Imagine that you are a participant in this study, and you have just taken a pill that you think will improve your mood. Because you expect the pill to have an effect, you might feel better simply because you took the pill and not because of any drug actually contained in the pill—this is the placebo effect.

To make sure that any effects on mood are due to the drug and not due to expectations, the control group receives a placebo (in this case a sugar pill). Now everyone gets a pill, and once again neither the researcher nor the experimental participants know who got the drug and who got the sugar pill. Any differences in mood between the experimental and control groups can now be attributed to the drug itself rather than to experimenter bias or participant expectations (Figure 18).

Independent and Dependent Variables

In a research experiment, we strive to study whether changes in one thing cause changes in another. To achieve this, we must pay attention to two important variables, or things that can be changed, in any experimental study: the independent variable and the dependent variable. An independent variable is manipulated or controlled by the experimenter. In a well-designed experimental study, the independent variable is the only important difference between the experimental and control groups. In our example of how violent television programs affect children’s display of violent behavior, the independent variable is the type of program—violent or nonviolent—viewed by participants in the study (Figure 19). A dependent variable is what the researcher measures to see how much effect the independent variable had. In our example, the dependent variable is the number of violent acts displayed by the experimental participants.

We expect that the dependent variable will change as a function of the independent variable. In other words, the dependent variable depends on the independent variable. A good way to think about the relationship between the independent and dependent variables is with this question: What effect does the independent variable have on the dependent variable? Returning to our example, what effect does watching a half hour of violent television programming or nonviolent television programming have on the number of incidents of physical aggression displayed on the playground?

Selecting and Assigning Experimental Participants

Now that our study is designed, we need to obtain a sample of individuals to include in our experiment. Our study involves human participants so we need to determine who to include. Participants are the subjects of psychological research, and as the name implies, individuals who are involved in psychological research actively participate in the process. Often, psychological research projects rely on college students to serve as participants. In fact, the vast majority of research in psychology subfields has historically involved students as research participants (Sears, 1986; Arnett, 2008). But are college students truly representative of the general population? College students tend to be younger, more educated, more liberal, and less diverse than the general population. Although using students as test subjects is an accepted practice, relying on such a limited pool of research participants can be problematic because it is difficult to generalize findings to the larger population.

Our hypothetical experiment involves children, and we must first generate a sample of child participants. Samples are used because populations are usually too large to reasonably involve every member in our particular experiment (Figure 20). If possible, we should use a random sample (there are other types of samples, but for the purposes of this section, we will focus on random samples). A random sample is a subset of a larger population in which every member of the population has an equal chance of being selected. Random samples are preferred because if the sample is large enough we can be reasonably sure that the participating individuals are representative of the larger population. This means that the percentages of characteristics in the sample—sex, ethnicity, socioeconomic level, and any other characteristics that might affect the results—are close to those percentages in the larger population.

In our example, let’s say we decide our population of interest is fourth graders. But all fourth graders is a very large population, so we need to be more specific; instead we might say our population of interest is all fourth graders in a particular city. We should include students from various income brackets, family situations, races, ethnicities, religions, and geographic areas of town. With this more manageable population, we can work with the local schools in selecting a random sample of around 200 fourth graders whom we want to participate in our experiment.

In summary, because we cannot test all of the fourth graders in a city, we want to find a group of about 200 that reflects the composition of that city. With a representative group, we can generalize our findings to the larger population without fear of our sample being biased in some way.
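Drawing a random sample of this kind can be sketched in a few lines of Python. The roster size of 3,000, the ID format, and the fixed seed are assumptions chosen only to make the example concrete and repeatable.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch gives the same result each run

# Hypothetical roster: 3,000 fourth graders in the city, identified only by an ID.
population = [f"student_{i:04d}" for i in range(3000)]

# Draw 200 students without replacement; every student on the roster
# has the same chance of ending up in the sample.
sample = rng.sample(population, k=200)

print(len(sample), "students selected")
print("First five:", sample[:5])
```

Because selection depends only on chance, characteristics such as income bracket or neighborhood should appear in the sample in roughly the same proportions as in the full roster, provided the sample is large enough.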

Now that we have a sample, the next step of the experimental process is to split the participants into experimental and control groups through random assignment. With random assignment, all participants have an equal chance of being assigned to either group. Statistical software can randomly assign each of the fourth graders in the sample to either the experimental or the control group.

Random assignment is critical for sound experimental design. With sufficiently large samples, random assignment makes it unlikely that there are systematic differences between the groups. So, for instance, it would be very unlikely that we would get one group composed entirely of males, a given ethnic identity, or a given religious ideology. This is important because if the groups were systematically different before the experiment began, we would not know the origin of any differences we find between the groups: Were the differences preexisting, or were they caused by manipulation of the independent variable? Random assignment allows us to assume that any differences observed between experimental and control groups result from the manipulation of the independent variable.
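Random assignment does not require specialized software; the idea can be sketched with Python's standard library. The function name `randomly_assign`, the participant IDs, and the seed below are hypothetical, and shuffling then splitting in half is just one simple way to give every participant the same chance of landing in either group.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle the participants and split them into two equal-sized groups."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the original list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"experimental": shuffled[:half], "control": shuffled[half:]}


# Hypothetical sample of 200 fourth graders, identified only by an ID.
participants = [f"child_{i:03d}" for i in range(200)]
groups = randomly_assign(participants, seed=7)

print(len(groups["experimental"]), "children watch the violent program")
print(len(groups["control"]), "children watch the nonviolent program")
```

Since the shuffle ignores everything about the children, any preexisting characteristic is expected to be spread across the two groups rather than concentrated in one.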

Issues to Consider

While experiments allow scientists to make cause-and-effect claims, they are not without problems. True experiments require the experimenter to manipulate an independent variable, and that can complicate many questions that psychologists might want to address. For instance, imagine that you want to know what effect sex (the independent variable) has on spatial memory (the dependent variable). Although you can certainly look for differences between males and females on a task that taps into spatial memory, you cannot directly control a person’s sex. We categorize this type of research approach as quasi-experimental and recognize that we cannot make cause-and-effect claims in these circumstances.

Experimenters are also limited by ethical constraints. For instance, you would not be able to conduct an experiment designed to determine if experiencing abuse as a child leads to lower levels of self-esteem among adults. To conduct such an experiment, you would need to randomly assign some experimental participants to a group that receives abuse, and that experiment would be unethical.

Introduction to Statistical Thinking

Psychologists use statistics to analyze data and to judge more precisely whether a result is statistically significant. Analyzing data using statistics enables researchers to find patterns, make claims, and share their results with others. In this section, you’ll learn about some of the tools that psychologists use in statistical analysis.

- Define reliability and validity

- Describe the importance of distributional thinking and the role of p-values in statistical inference

- Describe the role of random sampling and random assignment in drawing cause-and-effect conclusions

- Describe the basic structure of a psychological research article

Interpreting Experimental Findings

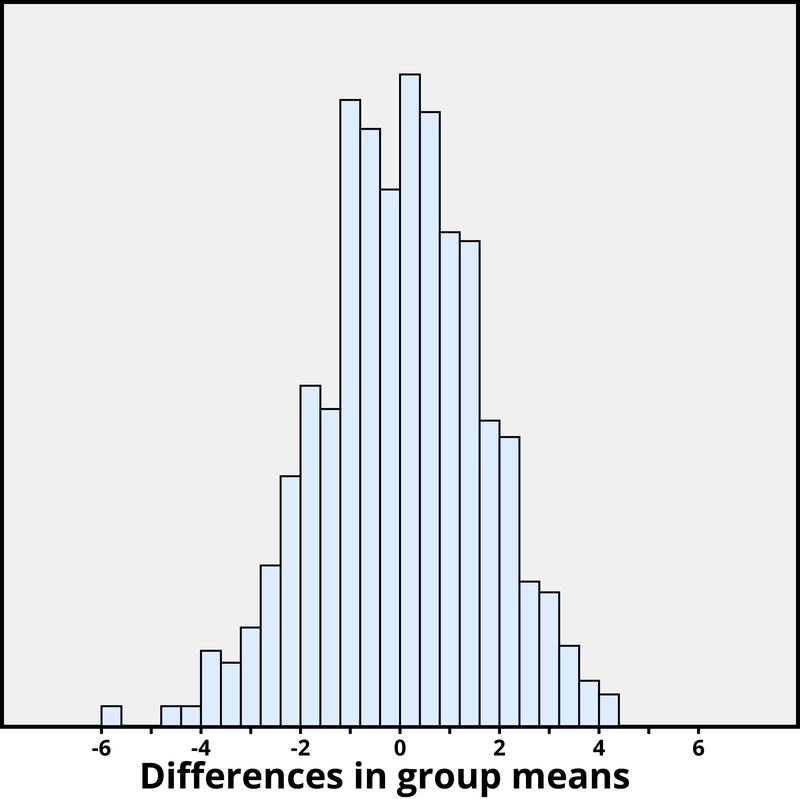

Once data is collected from both the experimental and the control groups, a statistical analysis is conducted to find out if there are meaningful differences between the two groups. A statistical analysis estimates how likely it is that a difference as large as the one found would occur by chance alone (and thus not be meaningful). In psychology, group differences are considered meaningful, or significant, if the odds that these differences occurred by chance alone are 5 percent or less. Stated another way, if the independent variable truly had no effect and we repeated this experiment 100 times, we would expect to see a difference this large in no more than about 5 of those repetitions.
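One way to make the idea of "chance alone" concrete is a permutation test, sketched below in Python. This is only one of several possible analyses, not necessarily the one a given study would use, and the counts of violent acts are invented for illustration. The sketch repeatedly reshuffles the group labels and asks how often a shuffled split produces a difference in means at least as large as the one actually observed.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=5000, seed=0):
    """Estimate how often chance alone yields a mean difference this large."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_large = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # pretend the group labels were handed out at random
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_large += 1
    return at_least_as_large / n_permutations


# Made-up counts of violent acts per child on the playground.
violent_tv = [5, 7, 6, 9, 8, 7, 10, 6]
nonviolent_tv = [2, 3, 1, 4, 2, 3, 2, 5]

p = permutation_p_value(violent_tv, nonviolent_tv)
print("Estimated p-value:", p)
print("Significant at the 5 percent level:", p <= 0.05)
```

With these invented numbers the two groups barely overlap, so very few reshuffled splits match the observed difference and the estimated p-value falls well below the 5 percent threshold.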

The greatest strength of experiments is the ability to assert that any significant differences in the findings are caused by the independent variable. This occurs because random selection, random assignment, and a design that limits the effects of both experimenter bias and participant expectancy should create groups that are similar in composition and treatment. Therefore, any difference between the groups is attributable to the independent variable, and now we can finally make a causal statement. If we find that watching a violent television program results in more violent behavior than watching a nonviolent program, we can safely say that watching violent television programs causes an increase in the display of violent behavior.

Reporting Research

When psychologists complete a research project, they generally want to share their findings with other scientists. The American Psychological Association (APA) publishes a manual detailing how to write a paper for submission to scientific journals. Unlike an article that might be published in a magazine like Psychology Today, which targets a general audience with an interest in psychology, scientific journals generally publish peer-reviewed journal articles aimed at an audience of professionals and scholars who are actively involved in research themselves.