Teaching @ Purdue

Outcomes and Objectives

Learning outcomes and objectives are the fundamental elements of most well-designed courses. Well-conceived outcomes and objectives serve as guideposts to help instructors work through the design of a course such that students receive the guidance and structure to achieve meaningful outcomes, as well as guide how those outcomes can be assessed accurately and appropriately.

The Basics of Learning Outcomes and Objectives

Defining terms.

While the terms “learning outcomes” and “learning objectives” are used with varied meanings across higher education, at Purdue we try to use them more precisely. By Learning Outcomes we mean a set of three to five goals that reflect what students will be able to achieve, or the skills or attitudes they will develop, during the class. We use Learning Objective to refer to the steps that lead into a particular outcome. By approaching teaching and learning goals in this way, we can help students understand the path toward successful completion of the class. Some people also use the term Learning Goals. While we do not use that term officially, it can be useful in discussions with students about what outcomes and objectives mean, particularly if you co-construct one or more outcomes with them.

Represent the result rather than the means

Outcomes define the end results of a student’s successful engagement in a class. It is important to remember that the ends are different from the means. An outcome is not the process with which students engage to reach that goal, but the end result of achieving that goal. In some cases, the end result will be learning a process, but integrating a process into one’s cognitive and skill repertoire is different from going through a process (e.g., the act of learning how to write a research paper is different from the process of writing a research paper).

A note about Foundational and Embedded learning outcomes

At Purdue, many courses are designated as fulfilling foundational and/or embedded learning outcomes. These outcomes are defined at the university level and assessed regularly. They help to define what it means for a student to complete an undergraduate degree from Purdue, and they set Purdue apart for its high standards of student achievement across a range of core topics. Each of the outcome types is approached and handled differently. Check with your department about whether and how your class is designated as fulfilling these outcomes, and email [email protected] if you would like assistance incorporating them into your class.

Writing Meaningful Outcomes

There are numerous strategies for writing effective learning outcomes, and they all have various advantages and disadvantages including more or less structure. One of the most common approaches is to think of outcomes as finishing the following sentence: “Upon successfully completing this course, students will be able to…” This framing emphasizes outcomes as the forward-looking result rather than the means. It also supports transparency by prompting a discussion about what success in the course looks like.

The basics: a verb and an object

If you are just beginning to write outcomes and objectives, try aiming for three components. The following are two similar models that may be useful for thinking through this in your class:

Approach 1:

- The verb generally refers to [actions associated with] the intended cognitive process.

- The object generally describes the knowledge students are expected to acquire or construct.

- A statement regarding the criterion for successful performance.

Approach 2 (from Tobin and Behling’s book Reach Everyone, Teach Everyone; see page 181 in Chapter 7 for examples):

- Desired behavior, with as much specificity as possible.

- Measurement that explains how you will gauge a student’s mastery.

- Level of proficiency a student should exhibit to have mastered the objective.

The implications of language

We begin with the verb because research into cognitive processes shows that the choice of verb has profound implications for the type and complexity of the thinking involved. There are countless lists of verbs, often associated with Benjamin Bloom, a highly influential educational theorist who defined learning around mastery and, in doing so, began to categorize cognitive, affective, and psychomotor processes in hierarchical order of difficulty. In the early 2000s, this work was revised and expanded by a large team of scholars, who also added a second dimension to the cognitive hierarchy. These verb lists can be misleading, as the same verb often appears under multiple cognitive tasks. We encourage you to use the descriptors in your outcome to identify what students will actually be able to do and to ensure that your choice of verb aligns with it.

When we ask ourselves questions about the implications of our verb choices, we are often forced to reckon with overused generic terms. The most common example is “understand.” For many, this is the first verb that comes to mind when thinking about what students should be able to do at the end of a course. Consider the popular YouTube series by Wired in which an expert explains a topic at five levels of complexity: to a child, a teen, an undergraduate, a graduate student, and a peer expert. At the end of these explanations all five listeners have developed or demonstrated an understanding of the concept, but their understanding is vastly different. One way of working out outcomes and objectives is to start with “understand” and then add a second verb that clarifies the level (what a student at this level will be able to do). Once that second verb is in place, it often becomes clearer and more precise to remove the generic “understand” altogether.

Be transparent: avoid secrets and highlight challenges

Valuing and caring are legitimate outcomes.

Instructors often use what might be termed “secret” learning outcomes or objectives, which are often affective rather than cognitive in nature. For example, in some classes an instructor may want students to appreciate the importance of the subject matter. Often, this involves teaching material that students perceive as tangential to their degree program, but instructors and departments believe is essential. Some common examples involve writing and communication skills, ethics, or legal knowledge in fields where practitioners make use of these competencies every day, but students are often more focused on what they perceive as more quantifiable skills. In the affective learning domain, you may consider outcomes focused on valuing or caring about something (see the alternate outcomes below).

Reveal bottlenecks

Another type of secret or hidden outcome or objective involves something instructors have identified as bottlenecks in their course or discipline. These bottlenecks often reflect ideas, concepts, or skills that may seem small, but when not mastered can pose long-lasting challenges for many students. Sometimes these may seem tangential, like the values described above; other times a bottleneck may be part of a process that students tend to skip (varying modes of checking for errors, for example); and sometimes it requires that a student take a different perspective when engaging with a source or problem. Students often hit these bottlenecks when they rely on learning methods that worked with low-complexity topics but cannot handle the complex elements of your course. Some topics are counterintuitive to how we experience the world, and to avoid bottlenecks, students need to overcome their preconceptions and experiences. By highlighting these bottlenecks as explicit outcomes or objectives, making them transparent, pointing to the challenges they pose, and highlighting why it is vital to overcome them, we support students’ long-term success as they move beyond our class as well.

Consider different types of outcomes and objectives

The vast majority of learning outcomes, and of the advice about writing them, focus on discrete cognitive skills that are measurable through simple means. For example, a common outcome may read something like: “Apply the first law of thermodynamics in a closed system.” These discrete and easily measurable skills are vital in many disciplines, but you may also think about learning outcomes that focus on other aspects of one’s life and development. L. Dee Fink, the author of the book Creating Significant Learning Experiences, describes six different outcome categories. The first three deal with these cognitive skills and the second three with affective, interpersonal, and intrapersonal development. By including this second set of goals in our course design and development, we introduce opportunities to support students’ ability to engage in more meaningful ways with each other and, by extension, their feelings of belonging, connection, and individuality in the class.

- Foundational knowledge: understanding and remembering information and ideas

- Application: skills, critical thinking, creative thinking, practical thinking, and managing projects (e.g., the thermodynamics example above)

- Integration: connecting information, ideas, perspectives, people, or realms of life

- Human dimension: learning about oneself and others

- Caring: developing new feelings, interests, and values

- Learning how to learn: becoming a better student, inquiring about a subject, becoming a self-directed learner

Try treating students as partners around outcomes

Co-construction.

While the broad shape of an outcome will almost always be carefully crafted ahead of time, one approach to help students feel connected to the class is to enlist them in co-constructing parts of an outcome. Most frequently, this co-construction revolves around what success will look like, and it is particularly useful when the outcome is one in which different students can succeed in different ways. For example, in a discussion-oriented class, one of the outcomes may focus on students developing their communication skills through class participation. But personality and other differences may mean that students have vastly different needs in terms of developing these skills. At a basic level, some students may have greater challenges with speaking up and sharing their thoughts in front of their peers and instructor. Other students may need to better develop their skills in listening to peers and responding productively. By approaching this outcome through co-construction, each student can set and be measured by appropriate goals that will pose a challenge to that student and help them develop important skills.

When outcomes are fixed, focus on communicating and responding to students

In most classes, outcomes and objectives are pre-determined and sometimes must adhere to standards beyond an instructor’s control, whether fitting university requirements or those of national accreditors. Especially in cases where outcomes are fixed, it is too easy to assume that students’ goals are also fixed. Even when classes are required as part of a sequence for a major, students often have widely varying goals for their lives and careers, and sometimes even thoughts regarding how this particular class may fit into achieving their goals. When we start the semester, we can ask students about their goals and what they hope to get out of a class and use existing outcomes and objectives to highlight connections and possibilities. Remember that, because students have not yet engaged with this material, they are much less prepared to make the connections. What may seem obvious to an expert instructor may seem opaque to a learner.

Ask students about the achievements related to outcomes

One common model for understanding student achievement involves asking students about their success specifically related to the course outcomes. This can be done to gauge their perception of success: “As a result of your work in this class, what gains did you make in [course outcome]?” or to gauge the effectiveness of specific teaching practices: “How much did the following aspects of the course help you in your learning? (Examples might include class and lab activities, assessments, particular learning methods, and resources).” Both of these questions come from the SALG (Student Assessment of Learning Gains) survey/tool (note: the website is rather dated). Studies demonstrate that, while students tend to overestimate their competence relative to instructors’ assessments, their input is broadly informative, and when these disparities emerge, they can prompt instructors to interrogate their teaching and assessment practices.

Share and reference outcomes and objectives early and often

Discuss outcomes and objectives in every class session.

One of the most common instructor complaints is that students do not pay attention to the outcomes and objectives of a class. This is often a case of mutual neglect. In addition to including class outcomes in your syllabus, highlight outcomes and their connections to objectives in each class session and in instructions for assignments. During class sessions, find opportunities to remind students of these connections. By creating a culture in which outcomes and objectives are integrated throughout the class, you help students follow their progression and understand how different class components and learning integrate with each other.

Build outcomes into the design of assignments

When sharing instructions or guidelines for an assessment, make sure to share and discuss how the assignment fits into the structure of learning outcomes and objectives for the class. See the Creating Inclusive Grading Structures page for more detail and structures.

Write outcomes that reflect your students’ experiences and abilities

Prepare for different academic experiences.

One challenge in planning a class is that it is easy to imagine an idealized student who will enroll in your class. They will have completed certain other classes, possibly had certain experiences, and may have certain goals. This ideal student assumption leads many instructors to complain that students were not properly prepared for their class. When writing outcomes, it is valuable to write them for the students who are actually present. In reality, students will take a variety of paths, and prerequisite classes may have been completed at other institutions or with a variety of instructors who may have emphasized different elements. Even in situations where every student took the exact same class with the exact same instructor the semester immediately prior, students’ strengths and weaknesses with particular topics and skills covered will vary. This does not mean you must re-teach prerequisite courses, but building in objectives that highlight particular elements of previous classes will help strengthen and clarify previous learning in addition to helping students identify existing gaps to fill.

Outcomes can reflect a multitude of expressive processes

Because outcomes, and particularly their language, are intimately intertwined with assessment processes, think carefully about how wording choices may limit students’ ability to express their learning. If the outcome specifies writing, is learning to write in the appropriate format and for the appropriate audience central, or is writing simply one common way for students to express the more central component of the outcome? What if “write” were turned into “express,” “share,” or “present,” all of which open up greater flexibility in the modality students use to convey their understanding of content or mastery of skills that are not specific to the written form?

Use the Learning Outcomes Worksheet to practice writing at least one outcome and identifying what category you would place it in. You will find a variety of actual examples from Purdue instructors on the second page of the worksheet.

After you have developed one or more outcomes, view the Creating Inclusive Grading Structures and/or Lecturing pages to consider ways of putting your new outcome(s) into practice in your class.

Hanstedt, P. (2018). Creating wicked students: Designing courses for a complex world. Stylus Publishing.

In this book, Hanstedt argues for creating courses to prepare students to deal with complex problems that do not have simple answers and often draw on a variety of different disciplinary skills and techniques. Chapter 2, in particular, focuses on writing goals (his term for outcomes), with numerous examples.

Fink, L. D. (2013). Creating significant learning experiences: An integrated approach to designing college courses. John Wiley & Sons.

As noted above, Fink's approach focuses on creating outcomes (also using the term goals) that fit six distinct categories. Like Hanstedt, Fink provides guidance and numerous examples of how to construct such goals.

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives. Longman.

This revision of Bloom's cognitive domain includes numerous resources and examples. It also adds a knowledge dimension, recognizing that any of the six cognitive process categories can be applied to four types of knowledge: factual, conceptual, procedural, and metacognitive.

Note: Purdue Libraries only has a print version of this book. You can find online resources developed by Iowa State University, including detailed information about the knowledge dimension.

Creating Learning Outcomes

A learning outcome is a concise description of what students will learn and how that learning will be assessed. Having clearly articulated learning outcomes can make designing a course, assessing student learning progress, and facilitating learning activities easier and more effective. Learning outcomes can also help students regulate their learning and develop effective study strategies.

Defining the terms

Educational research uses a number of terms for this concept, including learning goals, student learning objectives, session outcomes, and more.

In alignment with other Stanford resources, we will use learning outcomes as a general term for what students will learn and how that learning will be assessed. This includes both goals and objectives. We will use learning goals to describe general outcomes for an entire course or program. We will use learning objectives when discussing more focused outcomes for specific lessons or activities.

For example, a learning goal might be “By the end of the course, students will be able to develop coherent literary arguments.”

Whereas a learning objective might be, “By the end of Week 5, students will be able to write a coherent thesis statement supported by at least two pieces of evidence.”

Learning outcomes benefit instructors

Learning outcomes can help instructors in a number of ways by:

- Providing a framework and rationale for making course design decisions about the sequence of topics and instruction, content selection, and so on.

- Communicating to students what they must do to make progress in learning in your course.

- Clarifying your intentions to the teaching team, course guests, and other colleagues.

- Providing a framework for transparent and equitable assessment of student learning.

- Making outcomes concerning values and beliefs, such as dedication to discipline-specific values, more concrete and assessable.

- Making inclusion and belonging explicit and integral to the course design.

Learning outcomes benefit students

Clearly articulated learning outcomes can also help guide and support students in their own learning by:

- Clearly communicating the range of learning students will be expected to acquire and demonstrate.

- Helping learners concentrate on the areas that they need to develop to progress in the course.

- Helping learners monitor their own progress, reflect on the efficacy of their study strategies, and seek out support or better strategies. (See Promoting Student Metacognition for more on this topic.)

Choosing learning outcomes

When writing learning outcomes to represent the aims and practices of a course or even a discipline, consider:

- What is the big idea that you hope students will still retain from the course even years later?

- What are the most important concepts, ideas, methods, theories, approaches, and perspectives of your field that students should learn?

- What are the most important skills that students should develop and be able to apply in and after your course?

- What would students need to have mastered earlier in the course or program in order to make progress later or in subsequent courses?

- What skills and knowledge would students need if they were to pursue a career in this field or contribute to communities impacted by this field?

- What values, attitudes, and habits of mind and affect would students need if they are to pursue a career in this field or contribute to communities impacted by this field?

- How can the learning outcomes span a wide range of skills that serve students with differing levels of preparation?

- How can learning outcomes offer a range of assessment types to serve a diverse student population?

Use learning taxonomies to inform learning outcomes

Learning taxonomies describe how a learner’s understanding develops from simple to complex when learning different subjects or tasks. They are useful here for identifying any foundational skills or knowledge needed for more complex learning, and for matching observable behaviors to different types of learning.

Bloom’s Taxonomy

Bloom’s Taxonomy is a hierarchical model and includes three domains of learning: cognitive, psychomotor, and affective. In this model, learning occurs hierarchically, as each skill builds on previous skills towards increasingly sophisticated learning. For example, in the cognitive domain, learning begins with remembering, then understanding, applying, analyzing, evaluating, and lastly creating.

Taxonomy of Significant Learning

The Taxonomy of Significant Learning is a non-hierarchical and integral model of learning. It describes learning as a meaningful, holistic, and integral network. This model has six intersecting domains: knowledge, application, integration, human dimension, caring, and learning how to learn.

See our resource on Learning Taxonomies and Verbs for a summary of these two learning taxonomies.

How to write learning outcomes

Writing learning outcomes can be made easier by using the ABCD approach. This strategy identifies four key elements of an effective learning outcome:

- Audience

- Behavior

- Condition

- Degree

Consider the following example: Students (audience) will be able to label and describe (behavior), given a diagram of the eye at the end of this lesson (condition), all seven extraocular muscles and at least two of their actions (degree).

Audience

Define who will achieve the outcome. Outcomes commonly include phrases such as “After completing this course, students will be able to...” or “After completing this activity, workshop participants will be able to...”

Keeping your audience in mind as you develop your learning outcomes helps ensure that they are relevant and centered on what learners must achieve. Make sure the learning outcome is focused on the student’s behavior, not the instructor’s. If the outcome describes an instructional activity or topic, then it is too focused on the instructor’s intentions and not on the students’ learning.

Try to understand your audience so that you can better align your learning goals or objectives to meet their needs. While every group of students is different, certain generalizations about their prior knowledge, goals, motivation, and so on might be made based on course prerequisites, their year-level, or majors.

Behavior

Use action verbs to describe observable behavior that demonstrates mastery of the goal or objective. Depending on the skill, knowledge, or domain of the behavior, you might select a different action verb. Particularly for learning objectives, which are more specific, avoid verbs that are vague or difficult to assess, such as “understand”, “appreciate”, or “know”.

The behavior usually completes the audience phrase “students will be able to…” with a specific action verb that learners can interpret without ambiguity. We recommend beginning learning goals with a phrase that makes it clear that students are expected to actively contribute to progressing towards a learning goal. For example, “through active engagement and completion of course activities, students will be able to…”

Example action verbs

Consider the following examples of verbs from different learning domains of Bloom’s Taxonomy. Generally speaking, items listed at the top under each domain are more suitable for advanced students, and items listed at the bottom are more suitable for novice or beginning students. Using verbs and associated skills from all three domains, regardless of your discipline area, can benefit students by diversifying the learning experience.

For the cognitive domain:

- Create, investigate, design

- Evaluate, argue, support

- Analyze, compare, examine

- Solve, operate, demonstrate

- Describe, locate, translate

- Remember, define, duplicate, list

For the psychomotor domain:

- Invent, create, manage

- Articulate, construct, solve

- Complete, calibrate, control

- Build, perform, execute

- Copy, repeat, follow

For the affective domain:

- Internalize, propose, conclude

- Organize, systematize, integrate

- Justify, share, persuade

- Respond, contribute, cooperate

- Capture, pursue, consume

Often we develop broad goals first, then break them down into specific objectives. For example, if a goal is for learners to be able to compose an essay, break it down into several objectives, such as forming a clear thesis statement, coherently ordering points, following a salient argument, gathering and quoting evidence effectively, and so on.

Condition

State the conditions, if any, under which the behavior is to be performed. Consider the following conditions:

- Equipment or tools, such as using a laboratory device or a specified software application.

- Situation or environment, such as in a clinical setting, or during a performance.

- Materials or format, such as written text, a slide presentation, or using specified materials.

The level of specificity for conditions within an objective may vary and should be appropriate to the broader goals. If the conditions are implicit or understood as part of the classroom or assessment situation, it may not be necessary to state them.

When articulating the conditions in learning outcomes, ensure that they are sensorily and financially accessible to all students.

Degree

Degree states the standard or criterion for acceptable performance. The degree should be related to real-world expectations: what standard should the learner meet to be judged proficient? For example:

- With 90% accuracy

- Within 10 minutes

- Suitable for submission to an edited journal

- Obtain a valid solution

- In a 100-word paragraph

The specificity of the degree will vary. You might take into consideration professional standards, what a student would need to succeed in subsequent courses in a series, or what is required by you as the instructor to accurately assess learning when determining the degree. Where the degree is easy to measure (such as pass or fail) or accuracy is not required, it may be omitted.
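The four ABCD elements can be thought of as fields that combine into a single sentence. The following Python sketch is only an illustration of that idea: the `Outcome` class and its `sentence` method are hypothetical names, and the fixed phrase order (condition, audience, behavior, degree) is one assumed convention rather than a required one. Condition and degree are optional because, as noted above, either may be omitted.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Hypothetical container for the four ABCD elements."""
    audience: str        # who will achieve the outcome
    behavior: str        # observable action, starting with an action verb
    condition: str = ""  # optional: circumstances under which the behavior is performed
    degree: str = ""     # optional: standard for acceptable performance

    def sentence(self) -> str:
        """Join the elements into one sentence (assumed order: C, A, B, D)."""
        parts = []
        if self.condition:
            parts.append(f"{self.condition},")
        parts.append(f"{self.audience} will be able to {self.behavior}")
        if self.degree:
            parts.append(self.degree)
        text = " ".join(parts)
        # Capitalize only the first character and close the sentence.
        return text[0].upper() + text[1:] + "."


# Illustrative values only: the degree reuses one of the sample degrees above.
example = Outcome(
    audience="students",
    behavior="label the extraocular muscles",
    condition="given a diagram of the eye",
    degree="with 90% accuracy",
)
print(example.sentence())
```

Writing the elements out separately like this can make it easier to spot a missing piece, such as an outcome that has a behavior but no stated standard of performance.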

Characteristics of effective learning outcomes

The acronym SMART is useful for remembering the characteristics of an effective learning outcome.

- Specific: clear and distinct from others.

- Measurable: identifies observable student action.

- Attainable: suitably challenging for students in the course.

- Related: connected to other objectives and student interests.

- Time-bound: likely to be achieved and keep students on task within the given time frame.

Examples of effective learning outcomes

These examples generally follow the ABCD and SMART guidelines.

Arts and Humanities

Learning goals

Upon completion of this course, students will be able to apply critical terms and methodology in completing a written literary analysis of a selected literary work.

At the end of the course, students will be able to demonstrate oral competence with the French language in pronunciation, vocabulary, and language fluency in a 10 minute in-person interview with a member of the teaching team.

Learning objectives

After completing lessons 1 through 5, given images of specific works of art, students will be able to identify the artist, artistic period, and describe their historical, social, and philosophical contexts in a two-page written essay.

By the end of this course, students will be able to describe the steps in planning a research study, including identifying and formulating relevant theories, generating alternative solutions and strategies, and application to a hypothetical case in a written research proposal.

At the end of this lesson, given a diagram of the eye, students will be able to label all of the extraocular muscles and describe at least two of their actions.

Using chemical datasets gathered at the end of the first lab unit, students will be able to create plots and trend lines of that data in Excel and make quantitative predictions about future experiments.

- How to Write Learning Goals, Evaluation and Research, Student Affairs (2021).

- SMART Guidelines, Center for Teaching and Learning (2020).

- Learning Taxonomies and Verbs, Center for Teaching and Learning (2021).

Writing Student Learning Outcomes

Student learning outcomes state what students are expected to know or be able to do upon completion of a course or program. Course learning outcomes may contribute, or map to, program learning outcomes, and are required in group instruction course syllabi.

At both the course and program level, student learning outcomes should be clear, observable and measurable, and reflect what will be included in the course or program requirements (assignments, exams, projects, etc.). Typically there are 3-7 course learning outcomes and 3-7 program learning outcomes.

When submitting learning outcomes for course or program approvals, or assessment planning and reporting, please:

- Begin with a verb (exclude any introductory text and the phrase “Students will…”, as this is assumed)

- Limit the length of each learning outcome to 400 characters

- Exclude special characters (e.g., accents, umlauts, ampersands, etc.)

- Exclude special formatting (e.g., bullets, dashes, numbering, etc.)
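The submission rules above are mechanical enough to pre-check before pasting an outcome into a form. The sketch below is a rough illustration, not an official validator: `check_outcome` is a hypothetical helper, and its reading of "special characters" (ampersands plus any non-ASCII character) and "special formatting" (a leading bullet, dash, or list number) is our assumption. It cannot confirm that the first word is actually a verb; it only catches the “Students will…” opener.

```python
import re

MAX_LENGTH = 400  # character limit stated in the guidance above


def check_outcome(text: str) -> list[str]:
    """Return the problems found; an empty list means none were detected."""
    problems = []
    stripped = text.strip()
    if len(stripped) > MAX_LENGTH:
        problems.append("longer than 400 characters")
    # The phrase "Students will..." is assumed and should be left out.
    if re.match(r"(?i)students\s+will\b", stripped):
        problems.append("starts with 'Students will'")
    # Assumption: "special characters" = ampersands or any non-ASCII character.
    if "&" in stripped or not stripped.isascii():
        problems.append("contains special characters")
    # Assumption: "special formatting" = a leading bullet, dash, or list number.
    if re.match(r"(?:[-*\u2022\u2013\u2014]|\d+[.)])\s+", stripped):
        problems.append("starts with list formatting")
    return problems


print(check_outcome(
    "Apply important chemical concepts and principles "
    "to draw conclusions about chemical reactions"
))
print(check_outcome("- Analyze data & report findings"))
```

Note that under these assumptions a curly apostrophe or an accented letter is also flagged, which matches the instruction to remove accents but may be stricter than a given system requires.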

Steps for Writing Outcomes

The following are recommended steps for writing clear, observable and measurable student learning outcomes. In general, use student-focused language, begin with action verbs and ensure that the learning outcomes demonstrate actionable attributes.

1. Begin with an Action Verb

Begin with an action verb that denotes the level of learning expected. Terms such as know, understand, learn, and appreciate are generally not specific enough to be measurable. Levels of learning and associated verbs may include the following:

- Remembering and understanding: recall, identify, label, illustrate, summarize.

- Applying and analyzing: use, differentiate, organize, integrate, apply, solve, analyze.

- Evaluating and creating: monitor, test, judge, produce, revise, compose.

Consult Bloom’s Revised Taxonomy (below) for more details. For additional sample action verbs, consult this list from The Centre for Learning, Innovation & Simulation at The Michener Institute of Education at UHN.
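As an illustration of how the verb lists above can be applied, here is a small Python sketch that looks at the first word of an outcome and reports which level it falls under, or flags it as one of the vague terms. The function name `classify_outcome` and the labels "vague", "unlisted", and "empty" are assumptions made for this example; the verb lists are copied from the text and are only a sample, so an "unlisted" result does not mean the verb is a poor choice.

```python
# Verb lists copied from the levels above; VAGUE_VERBS are the terms
# the text describes as generally not specific enough to be measurable.
LEVELS = {
    "remembering and understanding": {
        "recall", "identify", "label", "illustrate", "summarize",
    },
    "applying and analyzing": {
        "use", "differentiate", "organize", "integrate",
        "apply", "solve", "analyze",
    },
    "evaluating and creating": {
        "monitor", "test", "judge", "produce", "revise", "compose",
    },
}
VAGUE_VERBS = {"know", "understand", "learn", "appreciate"}


def classify_outcome(outcome: str) -> str:
    """Classify an outcome by its first word, assumed to be the action verb."""
    words = outcome.split()
    if not words:
        return "empty"
    verb = words[0].strip(",.;:").lower()
    if verb in VAGUE_VERBS:
        return "vague"
    for level, verbs in LEVELS.items():
        if verb in verbs:
            return level
    return "unlisted"
```

For instance, an outcome beginning with "Apply" is reported under "applying and analyzing", while one beginning with "Understand" is reported as "vague" and would be a candidate for rewording.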

2. Follow with a Statement

- Identify and summarize the important features of major periods in the history of western culture

- Apply important chemical concepts and principles to draw conclusions about chemical reactions

- Demonstrate knowledge about the significance of current research in the field of psychology by writing a research paper

- Length – Should be no more than 400 characters.

*Note: Any special characters (e.g., accents, umlauts, ampersands, etc.) and formatting (e.g., bullets, dashes, numbering, etc.) will need to be removed when submitting learning outcomes through HelioCampus Assessment and Credentialing (formerly AEFIS) and other digital campus systems.

Revised Bloom’s Taxonomy of Learning: The “Cognitive” Domain

To the right: find a sampling of verbs that represent learning at each level. Find additional action verbs .

*Text adapted from: Bloom, B.S. (Ed.) 1956. Taxonomy of Educational Objectives: The classification of educational goals. Handbook 1, Cognitive Domain. New York.

Anderson, L.W. (Ed.), Krathwohl, D.R. (Ed.), Airasian, P.W., Cruikshank, K.A., Mayer, R.E., Pintrich, P.R., Raths, J., & Wittrock, M.C. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s Taxonomy of Educational Objectives (Complete edition). New York: Longman.

Examples of Learning Outcomes

Academic program learning outcomes.

The following examples of academic program student learning outcomes come from a variety of academic programs across campus, and are organized in four broad areas: 1) contextualization of knowledge; 2) praxis and technique; 3) critical thinking; and, 4) research and communication.

Student learning outcomes for each UW-Madison undergraduate and graduate academic program can be found in Guide . Click on the program of your choosing to find its designated learning outcomes.


Contextualization of Knowledge

Students will…

- identify, formulate and solve problems using appropriate information and approaches.

- demonstrate their understanding of major theories, approaches, concepts, and current and classical research findings in the area of concentration.

- apply knowledge of mathematics, chemistry, physics, and materials science and engineering principles to materials and materials systems.

- demonstrate an understanding of the basic biology of microorganisms.

Praxis and Technique

- utilize the techniques, skills and modern tools necessary for practice.

- demonstrate professional and ethical responsibility.

- appropriately apply laws, codes, regulations, architectural and interiors standards that protect the health and safety of the public.

Critical Thinking

- recognize, describe, predict, and analyze systems behavior.

- evaluate evidence to determine and implement best practice.

- examine technical literature, resolve ambiguity and develop conclusions.

- synthesize knowledge and use insight and creativity to better understand and improve systems.

Research and Communication

- retrieve, analyze, and interpret the professional and lay literature providing information to both professionals and the public.

- propose original research: outlining a plan, assembling the necessary protocol, and performing the original research.

- design and conduct experiments, and analyze and interpret data.

- write clear and concise technical reports and research articles.

- communicate effectively through written reports, oral presentations and discussion.

- guide, mentor and support peers to achieve excellence in practice of the discipline.

- work in multi-disciplinary teams and provide leadership on materials-related problems that arise in multi-disciplinary work.

Course Learning Outcomes

- identify, formulate and solve integrative chemistry problems. (Chemistry)

- build probability models to quantify risks of an insurance system, and use data and technology to make appropriate statistical inferences. (Actuarial Science)

- use basic vector, raster, 3D design, video and web technologies in the creation of works of art. (Art)

- apply differential calculus to model rates of change in time of physical and biological phenomena. (Math)

- identify characteristics of certain structures of the body and explain how structure governs function. (Human Anatomy lab)

- calculate the magnitude and direction of magnetic fields created by moving electric charges. (Physics)

Additional Resources

- Bloom’s Taxonomy

- The Six Facets of Understanding – Wiggins, G. & McTighe, J. (2005). Understanding by Design (2nd ed.). ASCD

- Taxonomy of Significant Learning – Fink, L.D. (2003). A Self-Directed Guide to Designing Courses for Significant Learning. Jossey-Bass

- College of Agricultural & Life Sciences Undergraduate Learning Outcomes

- College of Letters & Science Undergraduate Learning Outcomes

Guides best practices : Write learning outcomes and create an outline

Write learning outcomes.

We recommend writing a few learning outcomes to steer your guide. A learning outcome states what a participant will be able to do after instruction. A learning outcome should contain:

A BEHAVIOR What will they be able to do after the instruction? Use a verb that can be observed so that later on you can assess whether the guide was successful.

For example, a learning outcome for this guide is: After using this guide (condition), Stanford Libraries staff (audience) will write (behavior) one to three learning outcomes for each guide they create (degree).

Create an outline

Once you write a handful of learning outcomes (1 to 3), you can start to divide up the material into "chunks". These will become the pages on your guide.

DO create small, digestible chunks. The capacity of working memory is finite. Learning cannot take place if a user is overwhelmed by too much information presented at one time.


- Last Updated: Feb 1, 2024 3:38 PM

- URL: https://guides.library.stanford.edu/bestpractices


Writing and using learning outcomes: a practical guide

Related Papers

Chahira Nouira

Anthony F. Camilleri

Practice and Evidence of the Scholarship of Teaching and Learning in Higher Education

Simon Paul Atkinson

For universities to remain relevant and competitive in a global market of Higher Apprenticeships and work-based learning provision there will need to be a much clearer articulation of the benefits accrued by students in their ‘graduateness’. A review of 20 UK institutions, 80 undergraduate modules and some 435 individual intended learning outcomes (ILOs) being taken by students in 2014-15 reveals the lack of definition of ILOs in terms of skills development attractive to employers. This paper argues that employability should be more clearly articulated in the ILOs specified at module level and that the development of employability skills at an institutional level requires sustained attention to ensuring transparency in module designs to ensure student choice and measurable skills acquisition is possible.

Ivana Batarelo Kokić

Current research on the implementation of the entrepreneurship programs in higher education commonly focuses on the distinction between business and non-business programs. The special focus of this pilot study is Higher Education Institutions (HEI) offering non-business programs. The quantitative section of the larger study of strategic piloting, implemented by SEECEL in cooperation with national partners, focused on the higher education institution (HEI) as unit of change to test the impact of the entrepreneurial learning SEECEL ELKCA instrument on a student’s entrepreneurship-related learning outcomes. The pilot study recommendations are related to the validity of the developed questionnaire and further implementation of entrepreneurial learning in an HEI. The internal consistency of the entrepreneurship-related learning outcomes scale indicates the high validity of the questionnaire. It is possible to conclude that further efforts should be made to incorporate entrepreneurial competences in teacher training programs and also to develop further entrepreneurship modules designed by SEECEL in non-teacher training HEIs to improve entrepreneurial competence in relation to the higher levels of learning in the cognitive domain and the affective domain. Additional data analysis revealed non-teacher training and teacher training HEIs that experienced significant changes in several entrepreneurial competence domains. These institutions should serve as examples of good practice and mentors. The current societal circumstances and the requirements to develop entrepreneurial universities demand additional efforts from higher education institutions and universities in implementing entrepreneurship education in current university programs.

Theoretical considerations and practical approaches to foster employability in a dynamic industry: This paper outlines processes for developing higher quality training and qualification programmes, in particular in regard to internet-sector jobs. In addition, it includes development guidelines for identifying training requirements and writing learning outcomes. This document was prepared as part of the PIN (ProInternet) Thematic Network Project, Agreement no. 2009-2204/001-001, under the auspices of the Leonardo da Vinci Programme. It was written in conjunction with Work Package 3, Labelisation, Certification and Normalisation. The work-package leader was the DEKRA Akademie GmbH, located in Stuttgart, Germany; primary co-authors were the FOM Hochschule in Essen, Germany and the Hellenic Open University in Patras, Greece. It was published as FOM Arbeitspapier Nr. 34.

Bright Ziso

Leilani Escalada

CATALINA ULRICH HYGUM

This Cedefop reference publication maps and analyses the shift to learning outcomes in education and training policies and practices across Europe. Bringing evidence on the development of national policies from 33 countries, the study examines progress made in recent years (2009 onwards) and attempts to capture the character of political reform at national, institution and local levels. Ten case studies in nine countries produce new empirical evidence on the presence of learning outcomes approaches in the design and delivery of programmes and curricula for teacher education programmes. Based on extensive literature review, interviews conducted with various stakeholders in curriculum policy-making and practice, focus groups and on-site visits, findings show how learning outcomes approaches increasingly feature as catalysts for policy and practical reform, influencing education and training practice. This publication also reveals the diversity of uses of the learning outcomes approaches being applied and highlights the complexity of implementing learning-outcomes centred policies and developing appropriate strategies at both systemic and subsystemic levels.

Stephen Adam

This chapter explores the nature and functions of learning outcomes in the context of the Bologna educational reforms. Section 1 explains what they are and where they originate. Section 2 explores their practical application and multiple functions, and provides a schematic summary. Section 3 places them in the context of current pedagogical reform and highlights their relationship to curriculum development - teaching learning and assessment. Section 4 establishes their centrality to the Bologna Process and the successful completion of the European Higher Education Area. Finally, the concluding section 5 points to some important issues associated with their application in the immediate future.

The Curriculum for Spatial Citizenship Education serves as a guiding foundation for creating local curriculum approaches of Spatial Citizenship (SC) teacher education and training across the European Higher Educational Area (EHEA). Thus, it addresses all stakeholders related to that special field of teaching and learning at secondary school as well as higher education, and for in-service teacher training. This paper outlines the development process of the Spatial Citizenship Curriculum within the progress of the Comenius project Spatial Citizenship (SPACIT). Giving an example of implementation, this paper also introduces a framework of a blended-learning in-service teacher training course.


A Comparison of Student Learning Outcomes: Online Education vs. Traditional Classroom Instruction

Despite the prevalence of online learning today, it is often viewed as a less favorable option when compared to the traditional, in-person educational experience. Criticisms of online learning come from various sectors, such as employer groups, college faculty, and the general public, and generally include a perceived lack of quality and rigor. Additionally, some students report feelings of social isolation in online learning (Protopsaltis & Baum, 2019).

In my experience as an online student as well as an online educator, online learning has been just the opposite. I have been teaching in a fully online master’s degree program for the last three years and have found it to be a rich and rewarding experience for students and faculty alike. As an instructor, I have felt more connected to and engaged with my online students when compared to in-person students. I have also found that students are actively engaged with course content and demonstrate evidence of higher-order thinking through their work. Students report high levels of satisfaction with their experiences in online learning as well as the program overall as indicated in their Student Evaluations of Teaching (SET) at the end of every course. I believe that intelligent course design, in addition to my engagement in professional development related to teaching and learning online, has greatly influenced my experience.

In an article by Wiley Education Services, the authors identified the top six challenges facing US institutions of higher education:

- Declining student enrollment

- Financial difficulties

- Fewer high school graduates

- Decreased state funding

- Lower world rankings

- Declining international student enrollments

Of the strategies that institutions are exploring to remedy these issues, online learning is reported to be a key focus for many universities (“Top Challenges Facing US Higher Education”, n.d.).

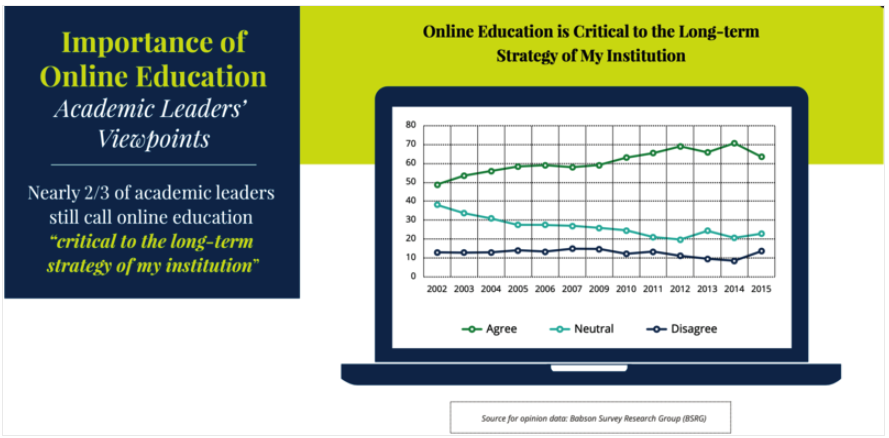

Babson Survey Research Group, 2016, [PDF file].

Some of the questions I would like to explore in further research include:

- What factors influence engagement and connection in distance education?

- Are the learning outcomes in online education any different than the outcomes achieved in a traditional classroom setting?

- How do course design and instructor training influence these factors?

- In what ways might educational technology tools enhance the overall experience for students and instructors alike?

In this literature review, I have chosen to focus on a comparison of student learning outcomes in online education versus the traditional classroom setting. My hope is that this research will unlock the answers to some of the additional questions posed above and provide additional direction for future research.

Online Learning Defined

According to Mayadas, Miller, and Sener (2015), online courses are defined by all course activity taking place online with no required in-person sessions or on-campus activity. It is important to note, however, that the Babson Survey Research Group, a prominent organization known for its surveys and research in online learning, defines an online course as one in which 80-100% of the course occurs online. While this distinction was made in an effort to provide consistency in surveys year over year, most institutions continue to define online learning as learning that occurs 100% online.

Blended or hybrid learning is defined by courses that mix face-to-face meetings, sessions, or activities with online work. The ratio of online to classroom activity is often indicated by the label the course is given. For example, a blended classroom course would likely include more time spent in the classroom, with the remaining work occurring outside of the classroom with the assistance of technology. On the other hand, a blended online course would contain a greater percentage of work done online, with some required in-person sessions or meetings (Mayadas, Miller, & Sener, 2015).

A classroom course (also referred to as a traditional course) refers to course activity that is anchored to a regular meeting time.

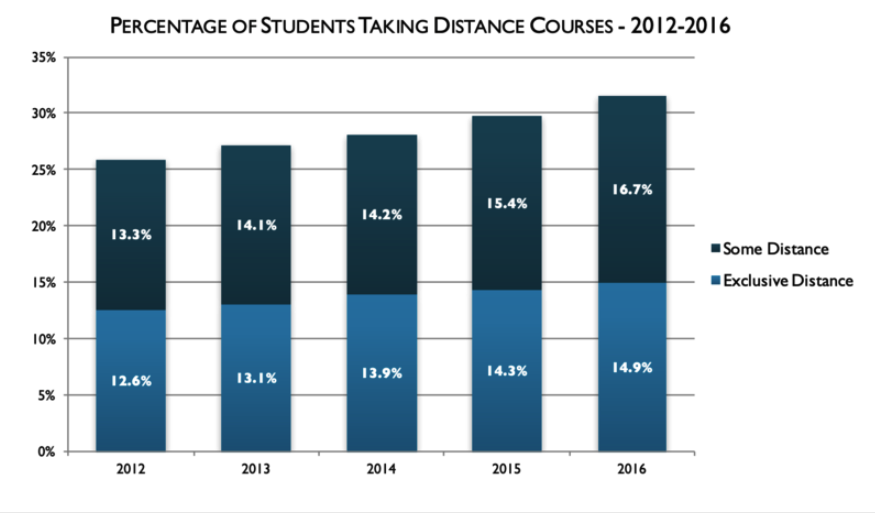

Enrollment Trends in Online Education

There has been an upward trend in the number of postsecondary students enrolled in online courses in the U.S. since 2002. A report by the Babson Survey Research Group showed that in 2016, more than six million students were enrolled in at least one online course. This number accounted for 31.6% of all college students (Seaman, Allen, & Seaman, 2018). Approximately one in three students are enrolled in online courses with no in-person component. Of these students, 47% take classes in a fully online program. The remaining 53% take some, but not all courses online (Protopsaltis & Baum, 2019).

(Seaman et al., 2016, p. 11)

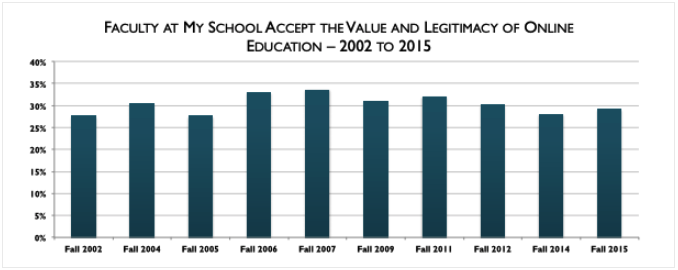

Perceptions of Online Education

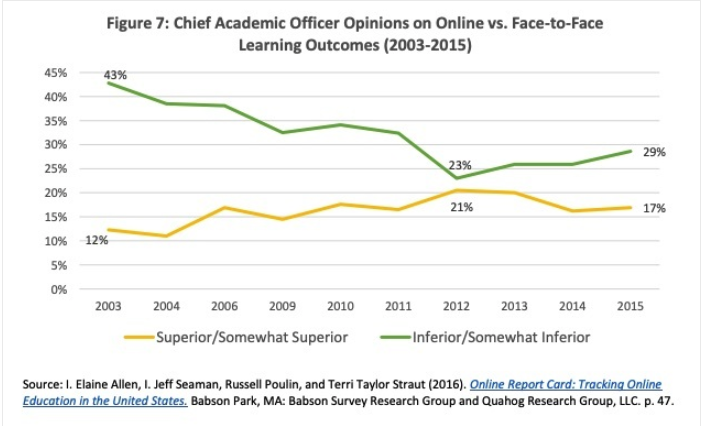

In a 2016 report by the Babson Survey Research Group, surveys of faculty between 2002-2015 showed approval ratings regarding the value and legitimacy of online education ranged from 28-34 percent. While numbers have increased and decreased over the thirteen-year time frame, faculty approval was at 29 percent in 2015, just 1 percent higher than the approval ratings noted in 2002 – indicating that perceptions have remained relatively unchanged over the years (Allen, Seaman, Poulin, & Straut, 2016).

(Allen, I.E., Seaman, J., Poulin, R., & Straut, T., 2016, p. 26)

In a separate survey of chief academic officers, perceptions of online learning appeared to align with those of faculty. In this survey, leaders were asked to rate their perceived quality of learning outcomes in online learning when compared to traditional in-person settings. While the percentage of leaders rating online learning as “inferior” or “somewhat inferior” to traditional face-to-face courses dropped from 43 percent to 23 percent between 2003 and 2012, the number rose again to 29 percent in 2015 (Allen, Seaman, Poulin, & Straut, 2016).

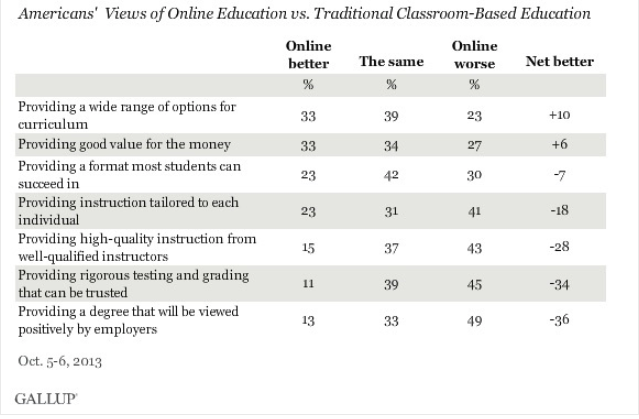

Faculty and academic leaders in higher education are not alone in perceiving online education as inferior to traditional classroom instruction. A 2013 Gallup poll assessing public perceptions showed that respondents rated online education as “worse” in five of the seven categories seen in the table below.

(Saad, L., Busteed, B., and Ogisi, M., 2013, October 15)

In general, Americans believed that online education provides both lower quality and less individualized instruction and less rigorous testing and grading when compared to the traditional classroom setting. In addition, respondents also thought that employers would perceive a degree from an online program less positively when compared to a degree obtained through traditional classroom instruction (Saad, Busteed, & Ogisi, 2013).

Student Perceptions of Online Learning

So what do students have to say about online learning? In Online College Students 2015: Comprehensive Data on Demands and Preferences, 1500 college students who were either enrolled or planning to enroll in a fully online undergraduate, graduate, or certificate program were surveyed. Seventy-eight percent of students believed the academic quality of their online learning experience to be better than or equal to their experiences with traditional classroom learning. Furthermore, 30 percent of online students polled said that they would likely not attend classes face to face if their program were not available online (Clinefelter & Aslanian, 2015). The following video describes some of the common reasons why students choose to attend college online.

How Online Learning Affects the Lives of Students ( Pearson North America, 2018, June 25)

In a 2015 study comparing student perceptions of online learning with face-to-face learning, researchers found that the majority of students surveyed expressed a preference for traditional face-to-face classes. A content analysis of the findings, however, brought attention to two key ideas: 1) student opinions of online learning may be based on “old typology of distance education” (Tichavsky et al., 2015, p. 6) as opposed to actual experience, and 2) a student’s inclination to choose one form over another is connected to issues of teaching presence and self-regulated learning (Tichavsky et al., 2015).

Student Learning Outcomes

Given the upward trend in student enrollment in online courses in postsecondary schools and the steady ratings of the low perceived value of online learning by stakeholder groups, it should be no surprise that there is a large body of literature comparing student learning outcomes in online classes to the traditional classroom environment.

While a majority of the studies reviewed found no significant difference in learning outcomes when comparing online to traditional courses (Cavanaugh & Jacquemin, 2015; Kemp & Grieve, 2014; Lyke & Frank, 2012; Nichols, Shaffer, & Shockey, 2003; Stack, 2015; Summers, Waigandt, & Whittaker, 2005), there were a few outliers. In a 2019 report, Protopsaltis and Baum confirmed that while learning is often found to be similar between the two mediums, students “with weak academic preparation and those from low-income and underrepresented backgrounds consistently underperform in fully-online environments” (Protopsaltis & Baum, 2019, n.p.). An important consideration, however, is that these findings are primarily based on students enrolled in online courses at the community college level, a demographic with a historically high rate of attrition compared to students attending four-year institutions (Ashby, Sadera, & McNary, 2011). Furthermore, students enrolled in online courses have been shown to have a 10-20 percent increase in attrition over their peers who are enrolled in traditional classroom instruction (Angelino, Williams, & Natvig, 2007). Therefore, attrition may be a key contributor to the lack of achievement seen in this subgroup of students enrolled in online education.

In contrast, there were a small number of studies that showed that online students tend to outperform those enrolled in traditional classroom instruction. One study, in particular, found a significant difference in test scores for students enrolled in an online, undergraduate business course. The confounding variable, in this case, was age. Researchers found a significant difference in performance in nontraditional age students over their traditional age counterparts. Authors concluded that older students may elect to take online classes for practical reasons related to outside work schedules, and this may, in turn, contribute to the learning that occurs overall (Slover & Mandernach, 2018).

In a meta-analysis and review of online learning spanning the years 1996 to 2008, authors from the US Department of Education found that students who took all or part of their classes online showed better learning outcomes than those students who took the same courses face-to-face. In these cases, it is important to note that there were many differences between the online and face-to-face versions, including the amount of time students spent engaged with course content. The authors concluded that the differences in learning outcomes may be attributed to learning design as opposed to the specific mode of delivery (Means, Toyama, Murphy, Bakia, & Jones, 2010).

Limitations and Opportunities

An examination of the research comparing student learning outcomes in online education with the traditional classroom setting brought many limitations to light, creating areas of opportunity for additional research. In many of the studies referenced, it is difficult to determine the pedagogical practices used in course design and delivery. Research shows the importance of student-student and student-teacher interaction in online learning, and the positive impact of these variables on student learning (Bernard, Borokhovski, Schmid, Tamim, & Abrami, 2014). Some researchers note that while many studies comparing online and traditional classroom learning exist, the methodologies and design issues make it challenging to explain the results conclusively (Mollenkopf, Vu, Crow, & Black, 2017). For example, online courses may be structured in a variety of ways (e.g., self-paced or instructor-led) and may be classified as synchronous or asynchronous (Moore, Dickson-Deane, & Galyan, 2011).

Another gap in the literature is the failure to use a common language across studies to define the learning environment. This issue is explored extensively in a 2011 study by Moore, Dickson-Deane, and Galyan. Here, the authors examine the differences between e-learning, online learning, and distance learning in the literature, and how the terminology is often used interchangeably despite the variances in characteristics that define each. The authors also discuss the variability in the terms “course” versus “program”. This variability in the literature presents a challenge when attempting to compare one study of online learning to another (Moore, Dickson-Deane, & Galyan, 2011).

Finally, much of the literature in higher education focuses on undergraduate-level classes within the United States. Little research is available on outcomes in graduate-level classes as well as general information on student learning outcomes and perceptions of online learning outside of the U.S.

As we look to the future, there are additional questions to explore in the area of online learning. Overall, this research led to questions related to learning design when comparing the two modalities in higher education. Further research is needed to investigate the instructional strategies used to enhance student learning, especially in students with weaker academic preparation or from underrepresented backgrounds. Given the integral role that online learning is expected to play in the future of higher education in the United States, it may be even more critical to move beyond comparisons of online versus face-to-face instruction and instead focus on sound pedagogical quality, with consideration for the mode of delivery, as a means of promoting positive learning outcomes.

Allen, I.E., Seaman, J., Poulin, R., & Straut, T. (2016). Online Report Card: Tracking Online Education in the United States [PDF file]. Babson Survey Research Group. http://onlinelearningsurvey.com/reports/onlinereportcard.pdf

Angelino, L. M., Williams, F. K., & Natvig, D. (2007). Strategies to engage online students and reduce attrition rates. The Journal of Educators Online , 4(2).

Ashby, J., Sadera, W.A., & McNary, S.W. (2011). Comparing student success between developmental math courses offered online, blended, and face-to-face. Journal of Interactive Online Learning , 10(3), 128-140.

Bernard, R.M., Borokhovski, E., Schmid, R.F., Tamim, R.M., & Abrami, P.C. (2014). A meta-analysis of blended learning and technology use in higher education: From the general to the applied. Journal of Computing in Higher Education , 26(1), 87-122.

Cavanaugh, J.K. & Jacquemin, S.J. (2015). A large sample comparison of grade based student learning outcomes in online vs. face-to-face courses. Journal of Asynchronous Learning Network, 19(2).

Clinefelter, D. L., & Aslanian, C. B. (2015). Online college students 2015: Comprehensive data on demands and preferences. https://www.learninghouse.com/wp-content/uploads/2017/09/OnlineCollegeStudents2015.pdf

Golubovskaya, E.A., Tikhonova, E.V., & Mekeko, N.M. (2019). Measuring learning outcome and students’ satisfaction in ELT (e-learning against conventional learning). Paper presented at the ACM International Conference Proceeding Series, 34-38. doi: 10.1145/3337682.3337704

Kemp, N. & Grieve, R. (2014). Face-to-face or face-to-screen? Undergraduates’ opinions and test performance in classroom vs. online learning. Frontiers in Psychology , 5. Doi: 10.3389/fpsyg.2014.01278

Lyke, J., & Frank, M. (2012). Comparison of student learning outcomes in online and traditional classroom environments in a psychology course. (Cover story). Journal of Instructional Psychology , 39(3/4), 245-250.

Mayadas, F., Miller, G. & Sener, J. (2015). Definitions of E-Learning Courses and Programs Version 2.0. Online Learning Consortium. https://onlinelearningconsortium.org/updated-e-learning-definitions-2/

Means, B., Toyama, Y., Murphy, R., Bakia, M., & Jones, K. (2010). Evaluation of evidence-based practices in online learning: A meta-analysis and review of online learning studies. US Department of Education. https://www2.ed.gov/rschstat/eval/tech/evidence-based-practices/finalreport.pdf

Mollenkopf, D., Vu, P., Crow, S, & Black, C. (2017). Does online learning deliver? A comparison of student teacher outcomes from candidates in face to face and online program pathways. Online Journal of Distance Learning Administration. 20(1).

Moore, J.L., Dickson-Deane, C., & Galyan, K. (2011). E-Learning, online learning, and distance learning environments: Are they the same? The Internet and Higher Education . 14(2), 129-135.

Nichols, J., Shaffer, B., & Shockey, K. (2003). Changing the face of instruction: Is online or in-class more effective? College & Research Libraries , 64(5), 378–388. https://doi-org.proxy2.library.illinois.edu/10.5860/crl.64.5.378

Parsons-Pollard, N., Lacks, T.R., & Grant, P.H. (2008). A comparative assessment of student learning outcomes in large online and traditional campus based introduction to criminal justice courses. Criminal Justice Studies , 2, 225-239.

Pearson North America. (2018, June 25). How Online Learning Affects the Lives of Students . YouTube. https://www.youtube.com/watch?v=mPDMagf_oAE

Protopsaltis, S., & Baum, S. (2019). Does online education live up to its promise? A look at the evidence and implications for federal policy [PDF file]. http://mason.gmu.edu/~sprotops/OnlineEd.pdf

Saad, L., Busteed, B., & Ogisi, M. (October 15, 2013). In U.S., Online Education Rated Best for Value and Options. https://news.gallup.com/poll/165425/online-education-rated-best-value-options.aspx

Stack, S. (2015). Learning Outcomes in an Online vs Traditional Course. International Journal for the Scholarship of Teaching and Learning , 9(1).

Seaman, J.E., Allen, I.E., & Seaman, J. (2018). Grade Increase: Tracking Distance Education in the United States [PDF file]. Babson Survey Research Group. http://onlinelearningsurvey.com/reports/gradeincrease.pdf

Slover, E. & Mandernach, J. (2018). Beyond Online versus Face-to-Face Comparisons: The Interaction of Student Age and Mode of Instruction on Academic Achievement. Journal of Educators Online, 15(1) . https://files.eric.ed.gov/fulltext/EJ1168945.pdf

Summers, J., Waigandt, A., & Whittaker, T. (2005). A Comparison of Student Achievement and Satisfaction in an Online Versus a Traditional Face-to-Face Statistics Class. Innovative Higher Education , 29(3), 233–250. https://doi-org.proxy2.library.illinois.edu/10.1007/s10755-005-1938-x

Tichavsky, L.P., Hunt, A., Driscoll, A., & Jicha, K. (2015). “It’s just nice having a real teacher”: Student perceptions of online versus face-to-face instruction. International Journal for the Scholarship of Teaching and Learning. 9(2).

Wiley Education Services. (n.d.). Top challenges facing U.S. higher education. https://edservices.wiley.com/top-higher-education-challenges/

July 17, 2020



Sample Learning Outcomes and Rubrics


A printable document of these SAMPLES can be found here.

The following are sample program learning outcomes and rubrics to provide some guidance in the development of assessment standards. These are merely examples and can be modified to fit the needs of your program. The outcomes and measurements MUST be relevant and meaningful to your program, providing information that will be useful in continuing quality improvement. Remember, when developing rubrics, consider the thresholds that will demonstrate that PLOs are being met.

Examples of Program Learning Outcomes

Some learning outcomes will require a rubric with parameters for achievement, some will be measured as a percentage achieved, and still others may be designed as milestones completed (with time or percentage as the unit measured). Ideally, your assessments will combine direct and indirect measures. The following are examples of assessment ideas that are fairly typical of graduate assessment. What works for you will vary with your program, but most programs should address the following assessment themes:

Demonstrate Subject Content Knowledge (Generally in written or oral form, portfolio, project completion, or other demonstration of content knowledge)

Demonstrate oral communication skills representative of their disciplinary field.

Demonstrate skills in oral and/or written communication sufficient to:

- publish in a peer-reviewed journal

- present work in their field

- prepare grant proposals.

Demonstrate, through service, the value of their discipline to the academy and community at large.

Demonstrate a mastery of skills and knowledge at a level required for college and university undergraduate teaching in their discipline and assessment of student learning.

Critical Thinking

Analyze and evaluate the literature relevant to their area of study.

Critically apply theories, methodologies, and knowledge to address fundamental questions in their primary area of study.

Demonstrate knowledge progression

Develop research objectives and hypotheses

Collect, summarize and interpret research data.

Pursue research of significance in the discipline or an interdisciplinary or creative project.

Applications

Apply research theories, methodologies, and disciplinary knowledge to address fundamental questions in their primary area of study.

Produce and defend an original, significant contribution to knowledge.

Develop a professional curriculum vitae with the skills required to secure a professional position appropriate to their degree.

Demonstrate Ethical Standards

Follow the principles of ethics in their field and in academia.

Interact productively with people from diverse backgrounds as both leaders/mentors and team members with integrity and professionalism.

Be able to conduct scholarly activities in an ethical manner.

Demonstrate familiarity with guiding principles and strategies in the ethical conduct of research and/or teaching.

Understand ethical issues and responsibilities, especially in matters related to professionalism and (if applicable) in matters related to the laboratory setting and to writing and publishing scientific papers.

Measurement Examples

The assessment of program-level learning outcomes should be formative, providing information on students as they work toward achieving required outcomes, and summative, determining satisfactory progress toward degree completion.

Response Threshold (short list of examples)

- At least 80% of students will be ranked at acceptable or exceptional in subject content knowledge, written communication, and oral communication skills. (Threshold based on rubric)

- At least 90% of students will pass their defense on their first attempt.

- 100% of students will successfully complete the ethics training and lab safety training.

- 90% of students will successfully complete foundation classes (those required by the department) with a grade of “B” or higher.

- By their second year, 80% of graduate students will have participated in a poster presentation.

- By their final year, 80% of students will have published in a peer-reviewed journal.

- Develop a sliding scale for students in different levels within the graduate program. 80% of students score at “mastery” level on department rubric.

- 80% of students will successfully complete courses specified in program of study by end of (period of time – will depend on nature of program, but time is a valuable measurement)

- 60% of Plan A grad students will submit final signed thesis by end of fifth semester.

Note: Rubrics must not be used to assess or evaluate individual students, and should not inform the decision regarding whether a student passes a defense or course. The data should be aggregated for all students in the program over a two-year period in order to assess the success of the program in meeting its program learning outcomes.
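As a rough illustration of how a response threshold might be checked against aggregated data, the following Python sketch pools rubric scores for one outcome and reports the share of scores at or above a chosen level. The score list, the function names, and the choice of a score of 3 as the "acceptable" cutoff are all assumptions made for this example; a program would substitute its own rubric levels and its own pooled two-year data.

```python
# Hypothetical rubric scores (1-5) for one learning outcome, pooled across
# all students in the program over a two-year period. Illustrative data only.
pooled_scores = [5, 4, 3, 3, 2, 5, 4, 3, 1, 4]


def share_meeting_level(scores, minimum_score):
    """Return the fraction of pooled scores at or above minimum_score."""
    if not scores:
        raise ValueError("no scores to aggregate")
    meeting = sum(1 for score in scores if score >= minimum_score)
    return meeting / len(scores)


def threshold_met(scores, minimum_score, target_share):
    """Return True when the pooled share reaches the program's target."""
    return share_meeting_level(scores, minimum_score) >= target_share


# Assumption: a score of 3 corresponds to "acceptable" on the rubric,
# and the program's threshold is "at least 80% acceptable or better".
share = share_meeting_level(pooled_scores, minimum_score=3)
print(f"Share at acceptable or above: {share:.0%}")
print("Threshold met:", threshold_met(pooled_scores, 3, 0.80))
```

Because the calculation runs on pooled scores only, it reports on the program as a whole and says nothing about any individual student, which is consistent with the note above.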

Use of Rubrics

Rubrics are a more precise means of establishing student performance. Depending on the assessment measures for your program learning outcomes, they can be invaluable in seeing trends in student achievement. The following rubrics are from various sources, and they are certainly not the limit of your options. A rubric has two basic parts:

1) The assessment outcome (what is being assessed).

2) Levels of achievement (for example poor, limited, acceptable, and exceptional). Four to five levels are sufficient. Levels can be descriptive (as above), numerical (1-5), or a combination of both.

Sample Rubrics (Developed by CLS):

Rubric for the Assessment of Subject Content Knowledge

|  | Score 1 | Score 2* | Score 3 | Score 4** | Score 5 |
| --- | --- | --- | --- | --- | --- |
|  | Little inquiry; limited knowledge shown |  | Explores topic with curiosity; adequate knowledge from variety of sources displayed |  | Knowledge base displays scope, thoroughness, and quality |
|  | Does not identify or summarize the problem/question accurately, if at all |  | The main question is identified and clearly stated |  | The main question and subsidiary, embedded or implicit aspects of a question are identified and clearly stated |
| Identifies & evaluates the quality of supporting data/evidence; detects connections and patterns | No supporting data or evidence is utilized; separates into few parts; detects few connections or patterns |  | Evidence is used but not carefully examined; source(s) of evidence are not questioned for accuracy, precision, relevance and completeness; facts and opinions are stated but not clearly distinguished from value judgments |  | Evidence is identified and carefully examined for accuracy, precision, relevance, and completeness; facts and opinions are stated and clearly distinguished; combines facts and ideas to create new knowledge that is comprehensive and significant |
| Identifies and evaluates conclusions, implications, & consequences; develops ideas | Combines few facts and ideas; needs more development; conclusions, implications, and consequences are not provided |  | Accurately identifies conclusions, implications, and consequences with a brief evaluative summary; uses perspectives and insights to explain relationships; states own position on the question |  | Accurately identifies conclusions, implications, and consequences with a brief evaluative summary; uses perspectives and insights to explain relationships; states own position on the question |

*Exhibits most characteristics of ‘1’ and some of ‘3’; **Exhibits most characteristics of ‘3’ and some of ‘5’

Rubric for the Assessment of Written Communication

| Level of Achievement | Score 1 | Score 2* | Score 3 | Score 4** | Score 5 |
| --- | --- | --- | --- | --- | --- |
| ideas, examples, reasons & evidence, point of view | Topic is poorly developed, support is only vague or general; ideas are trite; wording is unclear, simplistic; reflects lack of understanding of topic and audience; minimally accomplishes goals of the assignment |  | Topic is evident; some supporting detail; wording is generally clear; reflects understanding of topic and audience; generally accomplishes goals of the assignment |  | Thesis topic is clearly stated and well developed; details/wording is accurate, specific, appropriate for the topic & audience with no digressions; evidence of effective, clear thinking; completely accomplishes the goals of the assignment |
| focus, coherence, progression of ideas, thesis developed | Disorganized and unfocused; serious problems with coherence and progression of ideas; weak or non-existent thesis |  | Generally organized & focused, demonstrating coherence & progression of ideas; presents a thesis and suggests a plan of development that is mostly carried out |  | Clearly focused and organized around a central theme; thesis presented or implied with noticeable coherence; provides specific & accurate support |
| word choice & sentence variety | Displays frequent & fundamental errors in vocabulary; repetitive words and sentence types; sentences may be simplistic and disjointed |  | Competent use of language and sometimes varies sentence structure; generally focused |  | Choice of language & sentence structure is precise & purposeful, demonstrating a command of language and variety of sentence structures |
| grammar, punctuation, spelling, paragraphing, format; (as applicable) documentation | Errors interfere with writer’s ability to consistently communicate purpose; pervasive mechanical errors obscure meaning; inappropriate format; in-text and ending documentation are generally inconsistent and incomplete; cited information is not incorporated into the document |  | Occasional errors do not interfere with writer’s ability to communicate purpose; generally appropriate format; in-text and ending documentation are generally clear, consistent, and complete; cited information is somewhat incorporated into the document |  | Control of conventions contributes to the writer’s ability to communicate purpose; free of most mechanical errors; appropriate format; in-text and ending documentation are clear, consistent, and complete; cited information is incorporated effectively into the document |

Rubric for the Assessment of Oral Communication

|

| Level of Achievement | Score | ||||

|

| 1 | 2* | 3 | 4** | 5 |

|

| depth of content, relevant support, clear explanation | Provides irrelevant or no support: explanation of concepts is unclear or inaccurate |