A systematic literature review of data science, data analytics and machine learning applied to healthcare engineering systems

Management Decision

ISSN: 0025-1747

Article publication date: 7 December 2020

Issue publication date: 2 February 2022

Purpose

The objective of this paper is to assess and synthesize the published literature related to the application of data analytics, big data, data mining and machine learning to healthcare engineering systems.

Design/methodology/approach

A systematic literature review (SLR) was conducted to obtain the most relevant papers related to the research study from three different platforms: EBSCOhost, ProQuest and Scopus. The literature was assessed and synthesized, conducting analysis associated with the publications, authors and content.

Findings

From the SLR, 576 publications were identified and analyzed. The research area seems to show the characteristics of a growing field, with new research areas evolving and applications being explored. In addition, the main authors and collaboration groups publishing in this research area were identified through a social network analysis. This could help new and current authors identify researchers with common interests in the field.

Research limitations/implications

The use of the SLR methodology does not guarantee that all relevant publications related to the research are covered and analyzed. However, the authors' previous knowledge and the nature of the publications were used to select different platforms.

Originality/value

To the best of the authors' knowledge, this paper represents the most comprehensive literature-based study on the fields of data analytics, big data, data mining and machine learning applied to healthcare engineering systems.

Keywords

- Data analytics

- Machine learning

- Healthcare systems

- Systematic literature review

Salazar-Reyna, R., Gonzalez-Aleu, F., Granda-Gutierrez, E.M.A., Diaz-Ramirez, J., Garza-Reyes, J.A. and Kumar, A. (2022), "A systematic literature review of data science, data analytics and machine learning applied to healthcare engineering systems", Management Decision, Vol. 60 No. 2, pp. 300-319. https://doi.org/10.1108/MD-01-2020-0035

Emerald Publishing Limited

Copyright © 2020, Emerald Publishing Limited


How to Write a Literature Review | Guide, Examples, & Templates

Published on January 2, 2023 by Shona McCombes. Revised on September 11, 2023.

What is a literature review? A literature review is a survey of scholarly sources on a specific topic. It provides an overview of current knowledge, allowing you to identify relevant theories, methods, and gaps in the existing research that you can later apply to your paper, thesis, or dissertation topic.

There are five key steps to writing a literature review:

- Search for relevant literature

- Evaluate sources

- Identify themes, debates, and gaps

- Outline the structure

- Write your literature review

A good literature review doesn’t just summarize sources; it analyzes, synthesizes, and critically evaluates to give a clear picture of the state of knowledge on the subject.


Table of contents

- What is the purpose of a literature review
- Examples of literature reviews
- Step 1 – Search for relevant literature
- Step 2 – Evaluate and select sources
- Step 3 – Identify themes, debates, and gaps
- Step 4 – Outline your literature review’s structure
- Step 5 – Write your literature review
- Free lecture slides
- Other interesting articles
- Frequently asked questions

What is the purpose of a literature review

When you write a thesis, dissertation, or research paper, you will likely have to conduct a literature review to situate your research within existing knowledge. The literature review gives you a chance to:

- Demonstrate your familiarity with the topic and its scholarly context

- Develop a theoretical framework and methodology for your research

- Position your work in relation to other researchers and theorists

- Show how your research addresses a gap or contributes to a debate

- Evaluate the current state of research and demonstrate your knowledge of the scholarly debates around your topic.

Writing literature reviews is a particularly important skill if you want to apply for graduate school or pursue a career in research. We’ve written a step-by-step guide that you can follow below.


Examples of literature reviews

Writing literature reviews can be quite challenging! A good starting point could be to look at some examples, depending on what kind of literature review you’d like to write.

- Example literature review #1: “Why Do People Migrate? A Review of the Theoretical Literature” (Theoretical literature review about the development of economic migration theory from the 1950s to today.)

- Example literature review #2: “Literature review as a research methodology: An overview and guidelines” (Methodological literature review about interdisciplinary knowledge acquisition and production.)

- Example literature review #3: “The Use of Technology in English Language Learning: A Literature Review” (Thematic literature review about the effects of technology on language acquisition.)

- Example literature review #4: “Learners’ Listening Comprehension Difficulties in English Language Learning: A Literature Review” (Chronological literature review about how the concept of listening skills has changed over time.)


Step 1 – Search for relevant literature

Before you begin searching for literature, you need a clearly defined topic.

If you are writing the literature review section of a dissertation or research paper, you will search for literature related to your research problem and questions.

Make a list of keywords

Start by creating a list of keywords related to your research question. Include each of the key concepts or variables you’re interested in, and list any synonyms and related terms. You can add to this list as you discover new keywords in the process of your literature search.

- Social media, Facebook, Instagram, Twitter, Snapchat, TikTok

- Body image, self-perception, self-esteem, mental health

- Generation Z, teenagers, adolescents, youth

Search for relevant sources

Use your keywords to begin searching for sources. Some useful databases to search for journals and articles include:

- Your university’s library catalogue

- Google Scholar

- Project Muse (humanities and social sciences)

- Medline (life sciences and biomedicine)

- EconLit (economics)

- Inspec (physics, engineering and computer science)

You can also use boolean operators to help narrow down your search.
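As a rough illustration of how boolean operators combine keyword lists, the sketch below joins synonyms with OR inside each concept and joins the concepts with AND. The function name `build_boolean_query` is hypothetical, the keyword groups are a subset of the example keywords listed earlier, and the exact syntax (quoting, operator spelling, truncation symbols) varies between databases, so check the help pages of the database you are searching.

```python
def build_boolean_query(keyword_groups):
    """Combine keyword groups into one boolean search string.

    Terms within a group are alternatives (OR); every group must match (AND).
    This is an illustrative helper, not the syntax of any specific database.
    """
    clauses = []
    for group in keyword_groups:
        # Quote multi-word terms so they are treated as exact phrases.
        terms = [f'"{term}"' if " " in term else term for term in group]
        clauses.append("(" + " OR ".join(terms) + ")")
    return " AND ".join(clauses)


# One group per key concept, each holding synonyms and related terms.
keyword_groups = [
    ["social media", "Instagram", "Snapchat"],
    ["body image", "self-esteem"],
    ["Generation Z", "teenagers", "adolescents"],
]

query = build_boolean_query(keyword_groups)
print(query)
```

Adding a synonym to a group broadens the search, while adding a whole new group narrows it, which is a useful rule of thumb when a search returns too few or too many results.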

Make sure to read the abstract to find out whether an article is relevant to your question. When you find a useful book or article, you can check the bibliography to find other relevant sources.

Step 2 – Evaluate and select sources

You likely won’t be able to read absolutely everything that has been written on your topic, so it will be necessary to evaluate which sources are most relevant to your research question.

For each publication, ask yourself:

- What question or problem is the author addressing?

- What are the key concepts and how are they defined?

- What are the key theories, models, and methods?

- Does the research use established frameworks or take an innovative approach?

- What are the results and conclusions of the study?

- How does the publication relate to other literature in the field? Does it confirm, add to, or challenge established knowledge?

- What are the strengths and weaknesses of the research?

Make sure the sources you use are credible, and make sure you read any landmark studies and major theories in your field of research.


Take notes and cite your sources

As you read, you should also begin the writing process. Take notes that you can later incorporate into the text of your literature review.

It is important to keep track of your sources with citations to avoid plagiarism. It can be helpful to make an annotated bibliography, where you compile full citation information and write a paragraph of summary and analysis for each source. This helps you remember what you read and saves time later in the process.
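If you keep your notes digitally, one possible way to organize an annotated bibliography is sketched below. It is only an illustration: the `SourceNote` class, its field names, and the `format_annotated_bibliography` helper are hypothetical, and the two citations are placeholders rather than real publications.

```python
from dataclasses import dataclass, field


@dataclass
class SourceNote:
    """One annotated bibliography entry (hypothetical structure)."""
    citation: str  # full citation in your chosen style
    summary: str   # your paragraph of summary and analysis
    themes: list[str] = field(default_factory=list)  # optional tags for later grouping


def format_annotated_bibliography(notes):
    """Return the entries sorted alphabetically by citation, each with its annotation."""
    ordered = sorted(notes, key=lambda note: note.citation.lower())
    return "\n\n".join(f"{note.citation}\n    {note.summary}" for note in ordered)


# Placeholder entries; replace them with your own sources and notes.
notes = [
    SourceNote(
        citation="Author, B. (2021). Placeholder title B.",
        summary="Placeholder summary of the study's question, method, and findings.",
        themes=["body image"],
    ),
    SourceNote(
        citation="Author, A. (2019). Placeholder title A.",
        summary="Placeholder summary with a note on strengths and weaknesses.",
        themes=["social media"],
    ),
]

bibliography = format_annotated_bibliography(notes)
print(bibliography)
```

Tagging each entry with themes as you read makes it easier to group sources later, when you look for patterns across the literature.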


Step 3 – Identify themes, debates, and gaps

To begin organizing your literature review’s argument and structure, be sure you understand the connections and relationships between the sources you’ve read. Based on your reading and notes, you can look for:

- Trends and patterns (in theory, method or results): do certain approaches become more or less popular over time?

- Themes: what questions or concepts recur across the literature?

- Debates, conflicts and contradictions: where do sources disagree?

- Pivotal publications: are there any influential theories or studies that changed the direction of the field?

- Gaps: what is missing from the literature? Are there weaknesses that need to be addressed?

This step will help you work out the structure of your literature review and (if applicable) show how your own research will contribute to existing knowledge.

- Most research has focused on young women.

- There is an increasing interest in the visual aspects of social media.

- But there is still a lack of robust research on highly visual platforms like Instagram and Snapchat—this is a gap that you could address in your own research.

Step 4 – Outline your literature review’s structure

There are various approaches to organizing the body of a literature review. Depending on the length of your literature review, you can combine several of these strategies (for example, your overall structure might be thematic, but each theme is discussed chronologically).

Chronological

The simplest approach is to trace the development of the topic over time. However, if you choose this strategy, be careful to avoid simply listing and summarizing sources in order.

Try to analyze patterns, turning points and key debates that have shaped the direction of the field. Give your interpretation of how and why certain developments occurred.

Thematic

If you have found some recurring central themes, you can organize your literature review into subsections that address different aspects of the topic.

For example, if you are reviewing literature about inequalities in migrant health outcomes, key themes might include healthcare policy, language barriers, cultural attitudes, legal status, and economic access.

Methodological

If you draw your sources from different disciplines or fields that use a variety of research methods, you might want to compare the results and conclusions that emerge from different approaches. For example:

- Look at what results have emerged in qualitative versus quantitative research

- Discuss how the topic has been approached by empirical versus theoretical scholarship

- Divide the literature into sociological, historical, and cultural sources

Theoretical

A literature review is often the foundation for a theoretical framework. You can use it to discuss various theories, models, and definitions of key concepts.

You might argue for the relevance of a specific theoretical approach, or combine various theoretical concepts to create a framework for your research.

Step 5 – Write your literature review

Like any other academic text, your literature review should have an introduction, a main body, and a conclusion. What you include in each depends on the objective of your literature review.

The introduction should clearly establish the focus and purpose of the literature review.

Depending on the length of your literature review, you might want to divide the body into subsections. You can use a subheading for each theme, time period, or methodological approach.

As you write, you can follow these tips:

- Summarize and synthesize: give an overview of the main points of each source and combine them into a coherent whole

- Analyze and interpret: don’t just paraphrase other researchers — add your own interpretations where possible, discussing the significance of findings in relation to the literature as a whole

- Critically evaluate: mention the strengths and weaknesses of your sources

- Write in well-structured paragraphs: use transition words and topic sentences to draw connections, comparisons and contrasts

In the conclusion, you should summarize the key findings you have taken from the literature and emphasize their significance.

When you’ve finished writing and revising your literature review, don’t forget to proofread thoroughly before submitting.

Free lecture slides

This article has been adapted into lecture slides that you can use to teach your students about writing a literature review.

Scribbr slides are free to use, customize, and distribute for educational purposes.


Other interesting articles

If you want to know more about the research process, methodology, research bias, or statistics, make sure to check out some of our other articles with explanations and examples.

- Sampling methods

- Simple random sampling

- Stratified sampling

- Cluster sampling

- Likert scales

- Reproducibility

Statistics

- Null hypothesis

- Statistical power

- Probability distribution

- Effect size

- Poisson distribution

Research bias

- Optimism bias

- Cognitive bias

- Implicit bias

- Hawthorne effect

- Anchoring bias

- Explicit bias

Frequently asked questions

A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a thesis, dissertation, or research paper, in order to situate your work in relation to existing knowledge.

There are several reasons to conduct a literature review at the beginning of a research project:

- To familiarize yourself with the current state of knowledge on your topic

- To ensure that you’re not just repeating what others have already done

- To identify gaps in knowledge and unresolved problems that your research can address

- To develop your theoretical framework and methodology

- To provide an overview of the key findings and debates on the topic

Writing the literature review shows your reader how your work relates to existing research and what new insights it will contribute.

The literature review usually comes near the beginning of your thesis or dissertation. After the introduction, it grounds your research in a scholarly field and leads directly to your theoretical framework or methodology.

A literature review is a survey of credible sources on a topic, often used in dissertations, theses, and research papers. Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts, with an introduction, a main body, and a conclusion.

An annotated bibliography is a list of source references that has a short description (called an annotation) for each of the sources. It is often assigned as part of the research process for a paper.


McCombes, S. (2023, September 11). How to Write a Literature Review | Guide, Examples, & Templates. Scribbr. Retrieved July 16, 2024, from https://www.scribbr.com/dissertation/literature-review/


Data science pedagogical tools and practices: A systematic literature review

- Published: 24 August 2023

- Volume 29, pages 8179–8201 (2024)


- Bahar Memarian (ORCID: orcid.org/0000-0003-0671-3127)

- Tenzin Doleck


The development of data science curricula has gained attention in academia and industry. Yet, less is known about the pedagogical practices and tools employed in data science education. Through a systematic literature review, we summarize prior pedagogical practices and tools used in data science initiatives at the higher education level. Following the Technological Pedagogical Content Knowledge (TPACK) framework, we aim to characterize the technological and pedagogical knowledge quality of reviewed studies, as we find the content presented to be diverse and incomparable. TPACK is a universally established method for teaching that takes information and communication technology into account. Yet it is seldom used for the analysis of data science pedagogy. To make this framework more structured, we list the tools employed in each reviewed study to summarize technological knowledge quality. We further examine whether each study follows the needs of the Cognitive Apprenticeship theory to summarize the pedagogical knowledge quality in each reviewed study. Of the 23 reviewed studies, 14 met the needs of Cognitive Apprenticeship theory: they include hands-on experiences, promote students’ active learning, have students seek guidance from the instructor as a coach, introduce students to the real-world industry demands of data and data scientists, and provide meaningful learning resources and feedback across various stages of their data science initiatives. While each study presents at least one tool to teach data science, we found the assessment of the technological knowledge of data science initiatives to be difficult. This is because the studies fall short of explaining how students come to learn the operation of tools and become proficient in using them throughout a course or program. Our review aims to highlight implications for practices and tools used in data science pedagogy for future research.


Data availability

Data sharing does not apply to this article as no datasets were generated or analyzed during the current study.


Song, I. Y., & Zhu, Y. J. (2016). Big data and data science: what should we teach? Expert Systems, 33 (4 PG-364–373), 364–373. https://doi.org/10.1111/exsy.12130

Suthar, K., Mitchell, T., Hartwig, A. C., Wang, J., Mao, S., Parson, L., Zeng, P., Liu, B., & He, P. (2021). Real data and application-based interactive modules for data science education in engineering. ASEE Annu. Conf. Expos. Conf. Proc. , PG -. https://www.scopus.com/inward/record.uri?eid=2-s2.0-85124546523&partnerID=40&md5=ed00569a6049c4f397399743b6de40efNS -

Tang, R., & Sae-Lim, W. (2016). Data science programs in US higher education: An exploratory content analysis of program description, curriculum structure, and course focus. Education for Information, 23 (3), 269–290.

Vance, E. A. (2021). Using team-based learning to teach data science. Journal of Statistics and Data Science Education, 29 (3 PG-277–296), 277–296. https://doi.org/10.1080/26939169.2021.1971587

Watson, D. M. (2001). Pedagogy before technology: Re-thinking the relationship between ICT and teaching. Education and Information Technologies, 6 , 251–266.

West, J. (2018). Teaching data science: an objective approach to curriculum validation. Computer Science Education, 28 (2 PG-136–157), 136–157. https://doi.org/10.1080/08993408.2018.1486120

Yavuz, F. G., & Ward, M. D. (2020). Fostering undergraduate data science. American Statistician, 74 (1 PG-8–16), 8–16. https://doi.org/10.1080/00031305.2017.1407360

Download references

Acknowledgements

This study was funded by Canada Research Chair Program and Canada Foundation for Innovation

Author information

Authors and affiliations.

Faculty of Education, Simon Fraser University, Vancouver, British Columbia, Canada

Bahar Memarian & Tenzin Doleck


Corresponding author

Correspondence to Bahar Memarian .

Ethics declarations

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper. The authors declare the following financial interests/personal relationships which may be considered as potential competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Memarian, B., Doleck, T. Data science pedagogical tools and practices: A systematic literature review. Educ Inf Technol 29 , 8179–8201 (2024). https://doi.org/10.1007/s10639-023-12102-y


Received : 19 April 2023

Accepted : 01 August 2023

Published : 24 August 2023

Issue Date : May 2024

DOI : https://doi.org/10.1007/s10639-023-12102-y


- Data analytics

- Artificial intelligence

- Higher education


Data science ethical considerations: a systematic literature review and proposed project framework


- Fu F (2022) Exploring the Emotional Factors in College English Classroom Teaching Based on Computer-Aided Model Advances in Multimedia 10.1155/2022/4383254 2022 Online publication date: 1-Jan-2022 https://dl.acm.org/doi/10.1155/2022/4383254

- Bandy J (2021) Problematic Machine Behavior Proceedings of the ACM on Human-Computer Interaction 10.1145/3449148 5 :CSCW1 (1-34) Online publication date: 22-Apr-2021 https://dl.acm.org/doi/10.1145/3449148

- Georgiadis G Poels G (2021) Enterprise architecture management as a solution for addressing general data protection regulation requirements in a big data context: a systematic mapping study Information Systems and e-Business Management 10.1007/s10257-020-00500-5 19 :1 (313-362) Online publication date: 1-Mar-2021 https://dl.acm.org/doi/10.1007/s10257-020-00500-5


Index Terms

Applied computing

Life and medical sciences

Security and privacy

Human and societal aspects of security and privacy

Social and professional topics

Computing / technology policy

Privacy policies

Professional topics

Computing profession

Codes of ethics


Information

Published in.

Kluwer Academic Publishers

United States

Publication History

Author tags.

- Data science

- Code of conduct

- Research-article

Contributors


Current approaches for executing big data science projects—a systematic literature review

There is an increasing number of big data science projects aiming to create value for organizations by improving decision making, streamlining costs or enhancing business processes. However, many of these projects fail to deliver the expected value. It has been observed that a key reason many data science projects don’t succeed lies not in technical issues but in the process aspect of the project. The lack of established and mature methodologies for executing data science projects has been frequently noted as a reason for these project failures. To help move the field forward, this study presents a systematic review of research focused on the adoption of big data science process frameworks. The goal of the review was to identify (1) the key themes, with respect to current research on how teams execute data science projects, (2) the most common approaches regarding how data science projects are organized, managed and coordinated, (3) the activities involved in a data science project’s life cycle, and (4) the implications for future research in this field. In short, the review identified 68 primary studies thematically classified in six categories. Two of the themes (workflow and agility) accounted for approximately 80% of the identified studies. The findings regarding workflow approaches consist mainly of adaptations to CRISP-DM (vs. entirely new proposed methodologies). With respect to agile approaches, most of the studies only explored the conceptual benefits of using an agile approach in a data science project (vs. actually evaluating an agile framework being used in a data science context). Hence, one finding from this research is that future research should explore how to best achieve the theorized benefits of agility. Another finding is the need to explore how to efficiently combine workflow and agile frameworks within a data science context to achieve a more comprehensive approach for project execution.

Introduction

There is an increasing use of big data science across a range of organizations. This means that there is a growing number of big data science projects conducted by organizations. These projects aim to create value by improving decision making, streamlining costs or enhancing business processes.

However, many of these projects fail to deliver the expected value ( Martinez, Viles & Olaizola, 2021 ). For example, VentureBeats (2019) noted that 87% of data science projects never make it into production, and a NewVantage survey ( NewVantage Partners, 2019 ) reported that for 77% of businesses, the adoption of big data and artificial intelligence (AI) initiatives is a big challenge. A systematic review of the grey and scientific literature found 21 cases of failed big data projects reported over the last decade ( Reggio & Astesiano, 2020 ). This is due, at least in part, to the fact that data science teams generally suffer from immature processes, often relying on trial-and-error and ad hoc processes ( Bhardwaj et al., 2015 ; Gao, Koronios & Selle, 2015 ; Saltz & Shamshurin, 2015 ). In short, big data science projects often do not leverage well-defined process methodologies ( Martinez, Viles & Olaizola, 2021 ; Saltz & Hotz, 2020 ). To further emphasize this point, in a survey of data scientists from both industry and not-for-profit organizations, 82% of the respondents did not follow an explicit process methodology for developing data science projects, and equally important, 85% of the respondents stated that using an improved and more consistent process would produce more effective data science projects ( Saltz et al., 2018 ).

While a literature review in 2016 did not identify any research focused on improving data science team processes ( Saltz & Shamshurin, 2016 ), more recently there has been an increase in studies specifically focused on how to organize and manage big data science projects in a more efficient manner (e.g., Martinez, Viles & Olaizola, 2021 ; Saltz & Hotz, 2020 ).

With this in mind, this paper presents a systematic review of research focused on the adoption of big data science process frameworks. The purpose is to present an overview of research works and findings, as well as implications for research and practice. This is necessary to identify (1) the key themes, with respect to current research on how teams execute data science projects, (2) the most common approaches regarding how data science projects are organized, managed and coordinated, (3) the activities involved in a data science project’s life cycle, and (4) the implications for future research in this field.

The rest of the paper is organized as follows: the “Background and Related Work” section provides information on big data process frameworks and the key challenges with respect to teams executing big data science projects. In the “Survey Methodology” section, the adopted research methodology is discussed, while the “Results” section presents the findings of the study. The insights from this SLR, as well as implications for future research and limitations of the study, are highlighted in the “Discussion” section. The “Conclusions” section concludes the paper.

Background and Related Work

It has been frequently noted that project management (PM) is a key challenge for successfully executing data science projects. In other words, a key reason many data science projects fail is not technical in nature, but rather, the process aspect of the project ( Ponsard et al., 2017 ). Furthermore, Espinosa & Armour (2016) argue that task coordination is a major challenge for data projects. Likewise, Chen, Kazman & Haziyev (2016) conclude that coordination among business analysts, data scientists, system designers, development and operations is a major obstacle that compromises big data science initiatives. Angée et al. (2018) summarized the challenge by noting that it is important to use an appropriate process methodology, but which, if any, process is the most appropriate is not easy to know.

The importance of using a well-defined process framework

This data science process challenge, in terms of knowing what process framework to use for data science projects, is important because it has been observed that big data science projects are non-trivial and require well-defined processes ( Angée et al., 2018 ). Furthermore, using a process model or methodology results in higher quality outcomes and avoids numerous problems, which decreases the risk of failure in data analytics projects ( Mariscal, Marbán & Fernández, 2010 ). Example problems that occur when a team does not use a process model include the team being slow to share information, delivering the wrong result, and in general, working inefficiently ( Gao, Koronios & Selle, 2015 ; Chen et al., 2017 ).

The most common framework: CRISP-DM

The CRoss-Industry Standard Process for Data Mining (CRISP-DM) ( Chapman et al., 2000 ) and Knowledge Discovery in Databases (KDD) ( Fayyad, Piatetsky-Shapiro & Smyth, 1996 ), both of which were created in the 1990s, are considered ‘canonical’ methodologies for most of the data mining and data science processes and methodologies ( Martinez-Plumed et al., 2019 ; Mariscal, Marbán & Fernández, 2010 ). The evolution of those methodologies can be traced forward to more recent methodologies such as the Refined Data Mining Process ( Mariscal, Marbán & Fernández, 2010 ), IBM’s Foundational Methodology for Data Science ( Rollins, 2015 ) and Microsoft’s Team Data Science Process ( Microsoft, 2020 ).

However, recent surveys show that when data science teams do use a process, CRISP-DM has consistently been the most commonly used framework and the de facto standard for analytics, data mining and data science projects ( Martinez-Plumed et al., 2019 ; Saltz & Hotz, 2020 ). In fact, according to many opinion polls, CRISP-DM is the only process framework that is typically known by data science teams ( Saltz, n.d. ), with roughly half the respondents reporting that they use some version of CRISP-DM.

CRISP-DM defines six phases:

- Business understanding: includes identification of business objectives and data mining goals.

- Data understanding: involves data collection, exploration and validation.

- Data preparation: involves data cleaning, transformation and integration.

- Modelling: includes selecting a modelling technique and creating and assessing models.

- Evaluation: evaluates the results against business objectives.

- Deployment: includes planning for deployment, monitoring and maintenance.

CRISP-DM allows some high-level iteration between the steps ( Gao, Koronios & Selle, 2015 ). Typically, when a project uses CRISP-DM, the project moves from one phase (such as data understanding) to the next phase (e.g., data preparation). However, as the team deems appropriate, the team can go back to a previous phase. In a sense, one can think of CRISP-DM as a waterfall model for data mining ( Gao, Koronios & Selle, 2015 ).
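The phase ordering and the option to loop back can be sketched in a few lines of Python. This is only an illustration: the names `Phase` and `allowed_moves` are hypothetical, and the rule that a team may return to any earlier phase (rather than only certain ones) is a simplifying assumption, since the framework itself gives little guidance on when to loop back.

```python
from enum import IntEnum


class Phase(IntEnum):
    """The six CRISP-DM phases, numbered in their nominal order."""
    BUSINESS_UNDERSTANDING = 1
    DATA_UNDERSTANDING = 2
    DATA_PREPARATION = 3
    MODELLING = 4
    EVALUATION = 5
    DEPLOYMENT = 6


def allowed_moves(current: Phase) -> list:
    """Return the phases reachable from `current`.

    Assumption for this sketch: a team may step forward to the next phase
    or go back to any earlier phase.
    """
    earlier = [p for p in Phase if p < current]
    following = [Phase(current + 1)] if current < Phase.DEPLOYMENT else []
    return earlier + following


if __name__ == "__main__":
    for phase in Phase:
        names = ", ".join(p.name for p in allowed_moves(phase))
        print(f"{phase.name}: {names}")
```

Under these assumptions, a team in data preparation can move on to modelling or return to either of the two understanding phases, which mirrors the waterfall-with-feedback reading described above.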

While CRISP-DM is popular, and CRISP-DM’s phase-based approach is helpful to describe what the team should do, there are some limitations with the framework. For example, the framework provides little guidance on how to know when to loop back to a previous phase, iterate on the current phase, or move to the next phase. In addition, CRISP-DM does not contemplate the need for operational support after deployment.

The stated need for more research

Given that many data science teams do not use a well-defined process and that others use CRISP-DM with known challenges, it is not surprising that there has been a consistent call for more research with respect to data science team process. For example, in Cao’s discussion of data science challenges and future directions ( Cao & Fayyad, 2017 ), it was noted that one of the key challenges in analyzing data includes developing methodologies for data science teams. Gupte (2018) similarly noted that the best approach to execute data science projects must be studied. However, even with this noted challenge on data science process, there is a well-accepted view that not enough has been written about the solutions to tackle these problems ( Martinez, Viles & Olaizola, 2021 ).

Is there still a need for more research?

This lack of research on data science process frameworks was certainly true 6 years ago, when the need for concise, thorough and validated information regarding the ways data science projects are organized, managed and coordinated was noted ( Saltz, 2015 ). This need was further clarified when, in a literature review of big data science process research, no papers were found that focused on improving a data science team’s process or overall project management ( Ransbotham, David & Prentice, 2015 ). This was also consistent with the view that most big data science research has focused on the technical capabilities required for data science and has overlooked the topic of managing data science projects ( Saltz & Shamshurin, 2016 ).

RQ1: Has research in this domain increased recently?

RQ2: What are the most common approaches regarding how data science projects are organized, managed and coordinated?

RQ3: What are the phases or activities in a data science project life cycle?

Survey Methodology

While there are many approaches to a literature review, one approach, which is followed in this research, is to combine quantitative and qualitative analysis to provide deeper insights ( Joseph et al., 2007 ). Furthermore, the systematic literature review conducted in this study leveraged the guidelines for performing SLRs suggested by Kitchenham & Charters (2007) and the data were collected in a similar manner as described in Saltz & Dewar (2019) . Hence, the SLR process consisted of three phases: planning, conducting and reporting the review. The subsections below present the outcomes of the first two phases, while the results of the review are reported in the next section.

Planning the review

In general, systematic reviews address the need to summarize and present the existing information about some phenomenon in a thorough and unbiased manner ( Kitchenham & Charters, 2007 ). As previously noted, the need for concise, thorough and validated information regarding the ways data science projects are organized, managed and coordinated is justified by the lack of established and mature methodologies for executing data science projects. This has led to our previously defined research questions, which are the drivers for how we structured our research.

The study search space comprises the following five online sources: ACM Digital Library, IEEE Xplore, Scopus, ScienceDirect and Google Scholar. In addition to online sources, the search space might be enriched with reference lists from relevant primary studies and review articles ( Kitchenham & Charters, 2007 ). Specifically, the papers that cite the study providing justification for the present research ( Saltz, 2015 ) and the previous SLR on the subject ( Saltz & Shamshurin, 2016 ) are added to the study search space.

Data science related terms: (“data science” OR “big data” OR “machine learning”).

Project execution related terms: (“process methodology” OR “team process” OR “team coordination” OR “project management”).

To determine whether a paper should be included in our analysis, the following selection criteria are defined.

Inclusion criteria:

- Papers that fully or partly include a description of the organization, management or coordination of big data science projects.

- Papers that suggest specific approaches for executing big data science projects.

- Papers that were published after 2015.

Exclusion criteria:

- Papers that are not written in English.

- Papers that did not focus on data science team process, but rather focused on using data analytics to improve overall project management processes.

- Papers that had no form of peer review (e.g., blogs).

- Papers with an irrelevant document type, such as posters, conference summaries, etc.

Our exclusion of papers that discussed the use of analytics for overall project management considerations was driven by our desire to focus this research on understanding the specific attributes of data science projects, and how different frameworks were, or were not, applicable in the context of a data science project. This does not imply that data science has no role in helping to improve overall project management approaches. In fact, data science can and should add to the field of general project management, but we view this analysis as beyond the scope of our research.

Step 1: Title and abstract screen. Initially, after the relevant papers from the search space are identified according to the study search strategy, the selection criteria will be applied considering only the title and the abstracts of the papers. This step is to be executed by the two authors over different sets of identified papers.

Step 2: Full text screen. The full text of the candidate papers will then be reviewed by the two authors independently to identify the final set of primary studies to be included for further data analysis.

The approach for data extraction and synthesis followed in our study is based on the content analysis suggested in Elo & Kyngäs (2008) and Hsieh & Shannon (2005) . After exploring the key concepts used within each of the primary studies, general research themes are to be identified, and further analysis of the data with respect to the study research questions is to be performed in both a qualitative and a quantitative manner.

Conducting the review

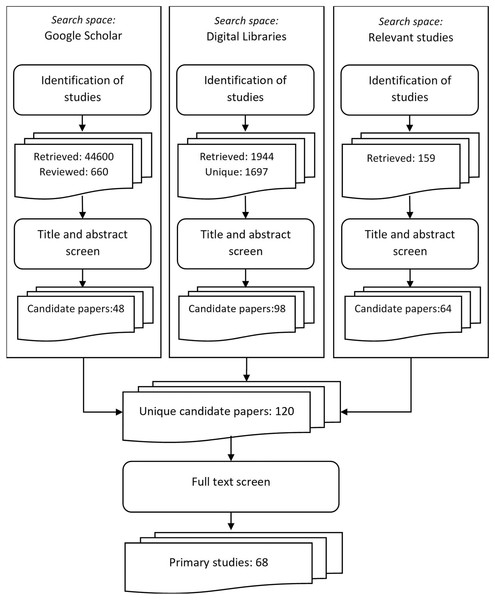

The SLR procedure was performed at the beginning of May, 2021. Because of the differences in running the searches over the online sources included in our search space, the identification of research and the first step of the selection procedure for Google Scholar were executed independently from the other digital libraries.

Search 1, the “data science” search: “data science” AND (“process methodology” OR “team process” OR “team coordination” OR “project management”).

Search 2, the “machine learning” search: “machine learning” AND (“process methodology” OR “team process” OR “team coordination” OR “project management”).

Search 3, the “big data” search: “big data” AND (“process methodology” OR “team process” OR “team coordination” OR “project management”).
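As an illustration, the three search strings above can be generated from the two term lists. The following is a minimal Python sketch; the helper names `or_group` and `build_query` are hypothetical, straight quotes are used in place of the typographic quotes shown in the text, and the exact syntax accepted by each search engine may differ.

```python
# Project execution related terms, as listed in the planning phase.
PROJECT_TERMS = [
    "process methodology",
    "team process",
    "team coordination",
    "project management",
]

# One search is run per data science related term.
DATA_TERMS = ["data science", "machine learning", "big data"]


def or_group(terms):
    """Join quoted terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(data_term, project_terms=PROJECT_TERMS):
    """Combine a single data term with the project-execution OR group."""
    return f'"{data_term}" AND {or_group(project_terms)}'


queries = [build_query(term) for term in DATA_TERMS]

if __name__ == "__main__":
    for query in queries:
        print(query)
```

Keeping the term lists separate from the query builder makes it easy to drop or add a term and regenerate every search string consistently.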

Since the number of papers returned after executing the searches was very large, via a snowball sampling approach, only the first 220 papers in each result set were included for further analysis. The first step of the selection procedure was executed for the unique papers in each of the sets and 48 papers were selected as candidates for primary studies. Table 1 shows the exact number of papers returned after running the searches and the first step of the selection procedure for Google Scholar.

| Search strings | Retrieved papers | Candidate papers |
|---|---|---|
| “data science” search string | 9,200 (first 220 used) | 37 |
| “machine learning” search string | 17,800 (first 220 used) | 1 |
| “big data” search string | 17,600 (first 220 used) | 10 |

Executing the initial search strings over the digital libraries resulted in a vast number of papers (e.g., over 1,500 papers for IEEE Xplore full text). Motivated by the results of the executed searches in Google Scholar, an optimization of the search terms was introduced. Since the ratio of candidate to retrieved papers for the “machine learning” Google Scholar search string was very low and only one paper was selected after the first step of the selection procedure, we removed the term “machine learning” from the initial “Data science related terms” search phrase. The final search string that was used for identification of studies from the digital libraries was: (“data science” OR “big data”) AND (“process methodology” OR “team process” OR “team coordination” OR “project management”).

- ACM Digital Library: full text search.

- IEEE Xplore: metadata-based and full text searches.

- Scopus: metadata-based search.

- ScienceDirect: metadata-based search.

When executing the searches, appropriate filters helping to meet the inclusion and exclusion criteria were applied for each of the sources where available. We used Mendeley as a reference management tool to help us organize the retrieved papers and to automate the removal of duplicates. A total of 1,944 papers were returned by the searches, of which 1,697 were unique. After executing the title and abstract screen, 98 papers were selected as candidates for primary studies. The exact numbers of retrieved and candidate papers are presented in Table 2 . The numbers shown in the table include papers duplicated across the digital libraries.

| Digital library search | Retrieved papers | Candidate papers |
|---|---|---|
| Scopus: Metadata | 327 | 52 |
| ACM: Full text | 330 | 18 |
| IEEE: All metadata | 197 | 24 |
| IEEE: Full Text | 1,066 | 36 |
| Science Direct: Metadata | 24 | 5 |
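The totals quoted in the text can be cross-checked against the table rows. The short Python sketch below does this arithmetic; the variable names are illustrative, and the figures of 1,697 unique papers and 98 candidates are taken from the paragraph above rather than derived from the table.

```python
# Retrieved and candidate counts per digital library search (Table 2).
retrieved = {
    "Scopus: Metadata": 327,
    "ACM: Full text": 330,
    "IEEE: All metadata": 197,
    "IEEE: Full Text": 1066,
    "Science Direct: Metadata": 24,
}
candidates = {
    "Scopus: Metadata": 52,
    "ACM: Full text": 18,
    "IEEE: All metadata": 24,
    "IEEE: Full Text": 36,
    "Science Direct: Metadata": 5,
}

UNIQUE_PAPERS = 1697      # reported after duplicate removal
UNIQUE_CANDIDATES = 98    # reported after the title and abstract screen

total_retrieved = sum(retrieved.values())
duplicates_removed = total_retrieved - UNIQUE_PAPERS
candidate_rows_total = sum(candidates.values())
duplicate_candidates = candidate_rows_total - UNIQUE_CANDIDATES

if __name__ == "__main__":
    print(f"Total retrieved: {total_retrieved}")
    print(f"Duplicates removed: {duplicates_removed}")
    print(f"Candidate rows total: {candidate_rows_total}")
    print(f"Candidates counted in more than one row: {duplicate_candidates}")
```

The row total for retrieved papers matches the reported 1,944, while the candidate column sums to more than 98 because, as noted above, the table counts papers that appear in more than one library.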

The relevant studies search space comprised the papers that cite the two studies which provide the justification and relevant background for our research, namely Saltz (2015) and Saltz & Shamshurin (2016) . A total of 159 papers were found to cite the two papers. After filtering the papers by screening the titles and abstracts, 64 of those papers were selected as candidate primary studies.

A consolidated list of all the candidate papers which were selected in the previous step of the selection procedure was created. The list included 120 unique papers. After performing the next step of the selection procedure (full text review), 68 papers were selected. These papers comprised the list of primary studies that were further analyzed to provide the answers to our research questions. The steps of the SLR procedure that led to the identification of the primary studies for our study are presented in Fig. 1 .

Figure 1: Steps of the SLR procedure for identification of primary studies.
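The selection funnel described above can be summarized numerically. The following Python sketch simply restates the reported counts (48 Google Scholar candidates, 98 digital library candidates, 64 candidates from citing papers, 120 unique candidates and 68 primary studies); the variable names are illustrative, and the derived overlap and acceptance figures are computed here, not reported by the authors.

```python
# Candidate papers after the title and abstract screen, per search space.
candidates_by_source = {
    "Google Scholar": 48,
    "Digital libraries": 98,
    "Papers citing the two reference studies": 64,
}

UNIQUE_CANDIDATES = 120   # consolidated list after removing duplicates
PRIMARY_STUDIES = 68      # selected after the full text screen

total_candidates = sum(candidates_by_source.values())
overlap_across_sources = total_candidates - UNIQUE_CANDIDATES
full_text_acceptance = PRIMARY_STUDIES / UNIQUE_CANDIDATES

if __name__ == "__main__":
    print(f"Candidates before consolidation: {total_candidates}")
    print(f"Removed as duplicates across sources: {overlap_across_sources}")
    print(f"Share kept after full text review: {full_text_acceptance:.0%}")
```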

Following the guidelines by Cruzes & Dybå (2011) , thematic analysis and synthesis was applied during data extraction and synthesis. We used the integrated approach ( Cruzes & Dybå, 2011 ), which employs both inductive and deductive code development, for retrieving the research themes related to the execution of data science projects as well as for defining the categories of workflow approaches and the themes for agile adoption presented in the following section.

Results

This section presents the findings of the SLR with regard to the three research questions defined in the planning phase.

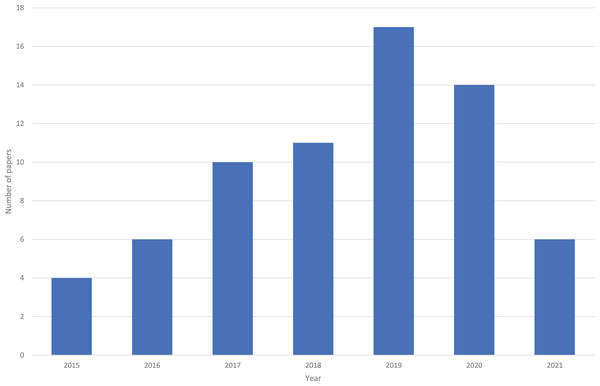

Research activity in this domain (RQ1)

As shown in Fig. 2 , there has been an increase in the number of articles published over time. Note that the review was done in May 2021, so 2021 was on pace to have more papers than any other year (i.e., over the full year, 2021 was on pace to have 18+ papers). Furthermore, it is likely that the reduction in 2020 was due to COVID.

Figure 2: Number of papers per year.

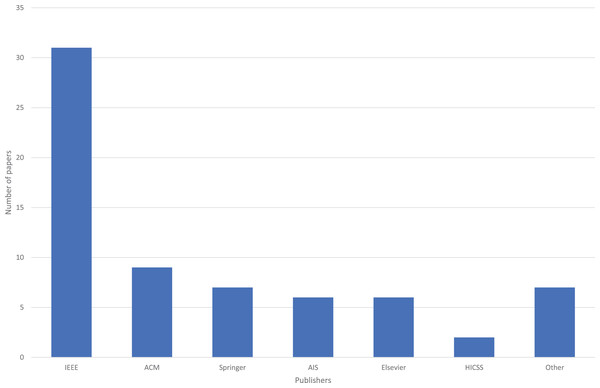

We also explored publishing outlets. Specifically, Fig. 3 shows the number of papers for each publisher. IEEE was the most frequent publisher, with 31 (46%) papers, due in part to a yearly IEEE workshop on this domain, that started in 2015. The next highest publisher was ACM, with nine papers (13%).

Figure 3: Number of papers for each publisher.

Approaches for executing data science projects (RQ2)

Table 3 provides an overview of the six themes identified, with respect to the approaches for defining and using a data science process framework. The table also shows the relevant primary studies. While the six themes that we identified in our SLR are all relevant to project execution, there was a wide range in the number of papers published for the different themes. The ratio of publications across the different themes provides a high-level view of current research efforts regarding the execution of data science projects.

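| Theme | Primary studies | Total number |
|---|---|---|
| Workflows | See | 27 |
| Agility | See | 26 |
| Process adoption | ( Saltz, 2017 , 2018 ; Saltz & Hotz, 2021 ; Soukaina et al., 2019 ; Saltz & Shamshurin, 2017 ; Shamshurin & Saltz, 2019a ) | 6 |
| General PM | ( Saltz & Shamshurin, 2015 ; Mao et al., 2019 ; Ramesh & Ramakrishna, 2018 ; Mullarkey et al., 2019 ) | 4 |
| Tools | ( ; ; ; ; ) | 5 |
| Reviews | ( ; ; ; ; ; ; ) | 7 |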

| Category | Reference workflows | Primary studies |
|---|---|---|
| New | N/A | ( ; ) |
| | CRISP-DM | ( ; ; ) |
| | KDD, CRISP-DM | ( ) |
| Standard | CRISP-DM | ( ; ; ) |
| Specialization | CRISP-DM | ( ; ) |
| | KDD | ( ) |
| Extension | CRISP-DM | ( ; ; ; ) |
| | KDD | ( ) |
| | Other | ( ; ; ) |
| Enrichment | CRISP-DM | ( ; ; ; ; ; ) |
| | Other | ( ) |

| Theme | Primary studies | Type | Total number |
|---|---|---|---|
| Conceptual Benefits of Agility | ( ; ; ; ; ; ; ; ; ; ; ; ; ; ; ) | Conceptual | 15 (58%) |
| Challenges in Scrum | ( ; ; ; ; ) | Case Study | 5 (19%) |
| Scrum is used | ( ; ) | Case Study | 2 (7%) |
| Conceptual Benefits of Scrum | ( ; ) | Conceptual | 2 (7%) |
| Conceptual Benefits of Lean | ( ) | Conceptual | 1 (4%) |
| Challenges in Kanban | ( ) | Case Study | 1 (4%) |

Below we provide a description for each of the themes, with an expanded focus on the two most popular themes (workflows and agility).

Workflows papers explored how data science projects were organized with respect to the phases, steps, activities and tasks of the execution process (e.g., CRISP-DM’s project phases). There were 27 papers in this theme, which is about 40% of the total number of primary studies. Workflow approaches are discussed in our second research question and a detailed overview of the relevant studies will be provided in the following section.

Agility papers described the adoption of agile approaches and considered specific aspects of project execution, such as the need for iterations or how teams should coordinate and collaborate. The high number of papers categorized in the Agility theme (26 out of 68) might be due to the successful adoption of agile methodologies in various software development projects. The theme will be covered in the next section since agile adoption is also relevant to our second research question. Seven papers explored both the workflows and agility themes.

Process adoption papers discussed the key factors as well as the challenges for a data science team to adopt a new process. Specifically, these papers considered questions such as acceptance factors (Saltz, 2017, 2018; Saltz & Hotz, 2021), project success factors (Soukaina et al., 2019), the application of software engineering practices in the data science context (Saltz & Shamshurin, 2017), and whether deep learning would impact a data science team’s process adoption (Shamshurin & Saltz, 2019a).

General PM papers discussed general project management challenges. These papers did not focus on characteristics unique to data science, but rather on general management challenges such as the team’s process maturity (Saltz & Shamshurin, 2015), the need for collaboration (Mao et al., 2019), the organizational needs and challenges when executing projects (Ramesh & Ramakrishna, 2018) and the training of human resources (Mullarkey et al., 2019).

Tools-focused papers described new tools that could improve the data science team’s productivity. Five papers explored how different tools, both custom and commercial, could be used to support various aspects of the execution of data science projects. The tools explored focused on communication and collaboration (Marin, 2019; Wang et al., 2019), Continuous Integration/Continuous Development (Chen et al., 2020), the maintainability of a data science project (Saltz et al., 2020) and the coordination of the data science team (Crowston et al., 2021).

Reviews were papers that reported on an SLR for a specific topic related to data science project execution, or papers that reported on an industry survey. An SLR aiming to identify the benefits and challenges of applying CRISP-DM in research studies is presented in Schröer, Kruse & Gómez (2021). How different data mining methodologies are adapted in practice is investigated in Plotnikova, Dumas & Milani (2020). That literature review covered 207 peer-reviewed and ‘grey’ publications and identified four adaptation patterns and two recurrent purposes for adaptation. Another SLR focused on experience reports and explored the adoption of agile software development methods in data science projects (Krasteva & Ilieva, 2020). An extensive critical review of 19 data science methodologies is presented in Martinez, Viles & Olaizola (2021). The paper also proposed principles of an integral methodology for data science, which should include three foundation stones: project, team, and data & information management. Professionals with different roles across multiple organizations were surveyed in Saltz et al. (2018) about the methodology they used in their data science projects and whether an improved project management process would benefit their results. The two papers that formed the core of our search space of related papers (Saltz, 2015; Saltz & Shamshurin, 2016) were also included in the Reviews thematic category.
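The theme counts in Table 3 can be cross-checked against the 68 primary studies with a few lines of arithmetic. The sketch below is only an illustration, not part of the review's method: the variable names are hypothetical, and it assumes that the seven papers covering both the workflows and agility themes are the only papers counted under more than one theme.

```python
# Theme counts as reported in Table 3.
theme_counts = {
    "Workflows": 27,
    "Agility": 26,
    "Process adoption": 6,
    "General PM": 4,
    "Tools": 5,
    "Reviews": 7,
}

TOTAL_PRIMARY_STUDIES = 68       # "26 out of 68" in the Agility description
BOTH_WORKFLOWS_AND_AGILITY = 7   # papers that explored both themes

# Assumption: only those seven papers are counted under two themes.
total_assignments = sum(theme_counts.values())
unique_papers = total_assignments - BOTH_WORKFLOWS_AND_AGILITY


def share(count, total=TOTAL_PRIMARY_STUDIES):
    """Return a theme's share of all primary studies as a whole percentage."""
    return round(100 * count / total)


theme_shares = {theme: share(n) for theme, n in theme_counts.items()}

if __name__ == "__main__":
    print(f"theme assignments: {total_assignments}, unique papers: {unique_papers}")
    for theme, pct in theme_shares.items():
        print(f"{theme}: {theme_counts[theme]} papers ({pct}%)")
```

Under that assumption the six theme counts add up to 75 assignments, which reduces to the 68 primary studies once the seven overlapping papers are counted once, and the Workflows share comes out at about 40%, matching the text.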

Workflow approaches

- Specialization: adjustments to standard workflows, made to better suit a particular big data technology or a specific domain.
- Extension: addition of new steps, tasks or activities to extend standard workflow phases.
- Enrichment: extension of the scope of a standard workflow to provide more comprehensive coverage of the project execution activities.

An overview of workflow categories and respective primary studies is presented in Table 4 . Multiple studies of the same workflow are shown in brackets. Most of the workflows use a standard framework as a reference point for specification of both new and adapted workflows. As seen in Table 4 , CRISP-DM provides the basis for the majority of the workflow papers. Below we explore each of these categories in more depth.

New workflows

While the workflow proposed in Grady (2016) makes use of CRISP-DM activities, a new workflow with four phases, five stages and more than 15 activities was designed to accommodate big data technologies and data science activities. Providing a more focused technology perspective, Amershi et al. (2019) propose a nine-stage workflow for integrating machine learning into application and platform development. Uniting the advantages of experimentation and iterative working with a greater understanding of the user requirements, a novel approach for data projects is proposed in Ahmed, Dannhauser & Philip (2019). The suggested workflow consists of three stages and seven steps and integrates the principles of the Lean Start-up method and design thinking with CRISP-DM activities. The workflows in Dutta & Bose (2015) and Shah, Gochtovtt & Baldini (2019) were designed and used in companies, and integrate a strategic perspective with planning, management and implementation.

Standard workflows

Three of the primary studies reported on using CRISP-DM in student projects and compared and contrasted the adoption of different methodologies (e.g., CRISP-DM, Scrum and Kanban) for executing data science projects.

Workflow specializations

The specialization category is the smallest of the three adaptation sub-categories. Two of the workflows in this category were based on CRISP-DM and were specialized for sequence analysis (Kalgotra & Sharda, 2016) or anomaly detection (Schwenzfeier & Gruhn, 2018). In addition, a revised KDD procedure model for time-series data was proposed in Vernickel et al. (2019).

Workflow extensions

An extension to CRISP-DM for knowledge discovery on social networks was specified as a seven-stage workflow that can be applied in different domains intersecting with social network platforms (Asamoah & Sharda, 2019). While this workflow extended CRISP-DM for big data, the workflows in Ponsard, Touzani & Majchrowski (2017) and Qadadeh & Abdallah (2020) added workflow steps focused on the identification of data value and business objectives. An extension to KDD for public healthcare was proposed in Silva, Saraee & Saraee (2019). The suggested workflow involves user-friendly techniques and tools to help healthcare professionals use data science in their daily work. Based on an SLR of recent developments in KD process models, Baijens & Helms (2019) propose relevant adjustments to the steps and tasks of the Refined Data Mining Process (Mariscal, Marbán & Fernández, 2010). IBM’s Analytics Solutions Unified Method for Data Mining/predictive analytics (ASUM-DM) is extended in Angée et al. (2018) for a specific use case in the banking sector, with a focus on big data analytics, prototyping and evaluation. A software engineering lifecycle process for big data projects is proposed in Lin & Huang (2017) as an extension to the ISO/IEC standard 15288:2008.

Workflow enrichments

Several papers extended CRISP-DM in different dimensions. The studies in Kolyshkina & Simoff (2019) and Fahse, Huber & van Giffen (2021) addressed two important aspects of ML solutions: interpretability and bias, respectively. They suggested new activities and methods, integrated into CRISP-DM steps, for satisfying the desired level of interpretability and for preventing and mitigating bias. A novel approach for creating custom workflows from a flexible and comprehensive Data Science Trajectory map of activities was suggested in Martinez-Plumed et al. (2019). The approach is designed to address the diversity of data science projects and their exploratory nature. The workflow presented in Kordon (2020) proposes improvements to CRISP-DM in several areas: maintenance and support, knowledge acquisition and project management. Scheduling, roles and tools are integrated with CRISP-DM in the methodology presented in Costa & Aparicio (2020). Checkpoints and synchronization are used in the Analytics Governance Framework proposed in Yamada & Peran (2017) to facilitate communication and coordination between the client and the data science team. Collaboration is the primary focus in Zhang, Muller & Wang (2020), in which a basic workflow is extended with collaborative practices, roles and tools.

Agile approaches

As shown in Table 5 , there were 26 papers that focused on the need for agility within data science projects. Only 31% of the papers actually reported on teams using an agile approach. The rest of the papers, 69% (18 of the 26 papers), were conceptual in nature. These conceptual papers explained why it makes sense that a framework should be helpful for a data science project but provided no examples that the framework actually helps a data science team.
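The conceptual versus case-study split quoted above follows directly from the rows of Table 5. As a minimal sketch (the names `table5`, `by_type` and `percent_by_type` are hypothetical, and whole-number rounding is an assumption about how the percentages were reported), the tally can be reproduced as follows:

```python
from collections import Counter

# Rows of Table 5: (theme, paper type, number of papers).
table5 = [
    ("Conceptual Benefits of Agility", "Conceptual", 15),
    ("Challenges in Scrum", "Case Study", 5),
    ("Scrum is used", "Case Study", 2),
    ("Conceptual Benefits of Scrum", "Conceptual", 2),
    ("Conceptual Benefits of Lean", "Conceptual", 1),
    ("Challenges in Kanban", "Case Study", 1),
]

# Sum the paper counts for each paper type.
by_type = Counter()
for _theme, paper_type, count in table5:
    by_type[paper_type] += count

total = sum(by_type.values())

# Share of each paper type, rounded to a whole percentage.
percent_by_type = {t: round(100 * n / total) for t, n in by_type.items()}

if __name__ == "__main__":
    for paper_type, n in by_type.items():
        print(f"{paper_type}: {n} of {total} ({percent_by_type[paper_type]}%)")
```

The tally gives 18 conceptual papers and 8 case studies out of 26, which corresponds to the 69% and 31% reported in the text.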

Specifically, the majority of the papers (15 of 26) explored the potential benefits of agility for data science projects. These papers were labeled general agility papers since they did not explicitly support any specific agile approach, but rather noted the benefits teams should get by adopting an agile framework. The expected benefits of agility typically focused on the need for multiple iterations to support the exploratory nature of data science projects, especially since the outcomes are uncertain. Iterating would allow teams to adjust their future plans based on the results of their current iteration.

Two papers discussed the potential benefits of Scrum. However, five papers reported on the difficulty teams encountered when they actually tried to use Scrum. Often, issues arose due to the challenge of accurately estimating how long a task would take to complete. This issue of task estimation impacted the team’s ability to determine which work items could fit into a sprint. Two other papers reported on the use of Scrum within a data science team, but neither of those papers described in depth how the team used Scrum, nor whether there were any benefits or issues due to their use of Scrum.

Finally, one paper discussed the conceptual benefits of using a lean approach, and a different paper reported on the challenge of using Kanban (which can be thought of as supporting both agility and lean principles). That paper explored the need for the process master role, similar to the Scrum Master role in Scrum.

Combined approaches

The seven papers that covered both the workflow and agility themes presented a more comprehensive methodology for project execution. Several proposed new frameworks (Grady, Payne & Parker, 2017; Ponsard, Touzani & Majchrowski, 2017; Ponsard et al., 2017; Ahmed, Dannhauser & Philip, 2019). All of the newly proposed frameworks defined a new workflow (typically based on CRISP-DM), and also suggested that the project work in iterations and focus on creating a minimum viable product (MVP). However, there was no consensus on whether the iterations should be time-boxed or capability based. Furthermore, there was no consensus on how to integrate the data science life cycle into each iteration. In fact, two papers did not explicitly address this question (Ponsard, Touzani & Majchrowski, 2017; Ponsard et al., 2017), and another article implied that something should be done for each phase in each sprint (Grady, Payne & Parker, 2017). Yet another article suggested that some iterations might focus on a specific phase while other iterations might focus on more than one phase (Ahmed, Dannhauser & Philip, 2019).

Three articles analyzed existing frameworks, including both workflow and agile frameworks (Saltz, Shamshurin & Crowston, 2017; Saltz, Heckman & Shamshurin, 2017; Shah, Gochtovtt & Baldini, 2019). In these articles, there was no explicit discussion of how to integrate workflow frameworks with agile frameworks.

Data science project life cycle activities (RQ3)

Table 6 shows a synthesized overview of the life cycle phases mentioned in the workflow papers presented above. The table also shows the number (and percentage) of papers that mention a specific data science life cycle phase. Note that the most common phases are the CRISP-DM phases.

| Theme | Total number | CRISP-DM phase |
|---|---|---|
| Readiness assessment | 1 (4%) | |
| Project organization | 5 (18%) | |
| Business understanding | 19 (68%) | ✓ |