
Hungarian Algorithm for Assignment Problem | Set 1 (Introduction)


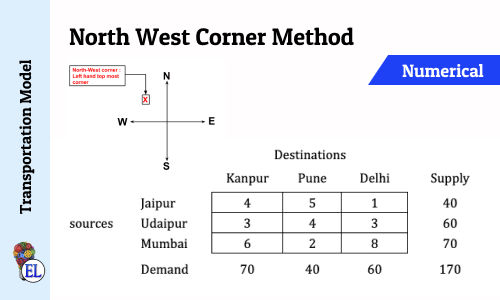


- Step 1: For each row of the matrix, find the smallest element and subtract it from every element in that row.

- Step 2: Do the same (as step 1) for all columns.

- Step 3: Cover all zeros in the matrix using the minimum number of horizontal and vertical lines.

- Step 4: Test for optimality. If the minimum number of covering lines is n, an optimal assignment is possible and we are finished. If fewer than n lines are needed, we have not yet found the optimal assignment and must proceed to step 5.

- Step 5: Determine the smallest entry not covered by any line. Subtract this entry from each uncovered row, and then add it to each covered column. Return to step 3.
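The reduction in steps 1 and 2 and the optimality test in steps 3 and 4 can be sketched in a few lines of Python. This is only an illustrative sketch: the function names are made up here, and the line count relies on König's theorem (the minimum number of covering lines equals the size of a maximum matching that uses only zero entries) rather than on drawing lines by hand. The cost matrix is the one from the party example that appears later in this document.

```python
def reduce_matrix(cost):
    """Steps 1-2: subtract each row's minimum, then each column's minimum."""
    rows = [[x - min(row) for x in row] for row in cost]
    col_min = [min(col) for col in zip(*rows)]
    return [[x - col_min[j] for j, x in enumerate(row)] for row in rows]


def min_lines_to_cover_zeros(matrix):
    """Steps 3-4: count the minimum number of covering lines.

    By Konig's theorem this equals the size of a maximum matching between
    rows and columns that uses only zero entries, found here with simple
    augmenting paths.
    """
    n = len(matrix)
    match_col = [-1] * n  # match_col[j] = row currently matched to column j

    def try_row(i, seen):
        for j in range(n):
            if matrix[i][j] == 0 and not seen[j]:
                seen[j] = True
                if match_col[j] == -1 or try_row(match_col[j], seen):
                    match_col[j] = i
                    return True
        return False

    return sum(try_row(i, [False] * n) for i in range(n))


# Cost matrix from the party example later in this document.
cost = [[108, 125, 150],
        [150, 135, 175],
        [122, 148, 250]]
reduced = reduce_matrix(cost)
print(reduced)                            # [[0, 17, 2], [15, 0, 0], [0, 26, 88]]
print(min_lines_to_cover_zeros(reduced))  # 2, fewer than n = 3, so step 5 is needed
```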

An example that doesn’t lead to the optimal value in the first attempt: in the simple example, the first check for optimality already gave us a solution. What if the number of covering lines is less than n? In that case, step 5 creates additional zeros and the check is repeated.

Time complexity: O(n^3), where n is the number of workers and jobs. An efficient implementation of the Hungarian algorithm performs n augmentations, and each augmentation can be carried out in O(n^2) time.

Space complexity: O(n^2), where n is the number of workers and jobs. This is because the algorithm uses a 2D cost matrix of size n x n to store the costs of assigning each worker to a job, and additional arrays of size n to store the labels, matches, and auxiliary information needed for the algorithm.
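To make the O(n^3) time and O(n^2) space bounds concrete, here is a compact sketch of a widely used potential-based formulation of the Hungarian algorithm for the minimum cost problem. It is not the line-covering procedure described in the steps above, and it is not taken from the follow-up post; the names `u`, `v`, `p`, `way`, and `hungarian` are illustrative choices. The arrays of size n + 1 correspond to the labels, matches, and auxiliary information mentioned in the space analysis.

```python
def hungarian(cost):
    """Minimum cost assignment for a square matrix in O(n^3) time.

    Returns (total_cost, assignment) where assignment[i] is the column
    given to row i. Internally uses 1-based indices with 0 as a sentinel.
    """
    n = len(cost)
    INF = float("inf")
    u = [0] * (n + 1)    # row potentials (labels)
    v = [0] * (n + 1)    # column potentials (labels)
    p = [0] * (n + 1)    # p[j] = row matched to column j (0 = unmatched)
    way = [0] * (n + 1)  # way[j] = previous column on the alternating path

    for i in range(1, n + 1):          # n augmentations, each O(n^2)
        p[0] = i
        j0 = 0
        minv = [INF] * (n + 1)         # smallest reduced cost seen per column
        used = [False] * (n + 1)
        while True:
            used[j0] = True
            i0 = p[j0]
            delta, j1 = INF, 0
            for j in range(1, n + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(n + 1):     # shift potentials by delta
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:             # reached an unmatched column
                break
        while j0:                      # flip the matching along the path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1

    assignment = [0] * n
    for j in range(1, n + 1):
        assignment[p[j] - 1] = j - 1
    total = sum(cost[i][assignment[i]] for i in range(n))
    return total, assignment


# Cost matrix from the party example later in this document.
print(hungarian([[108, 125, 150], [150, 135, 175], [122, 148, 250]]))
# (407, [2, 1, 0])
```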

In the next post, we will discuss an implementation of the above algorithm. The implementation requires more steps, because the program has to find the minimum number of lines that cover all zeros.

References:

- http://www.math.harvard.edu/archive/20_spring_05/handouts/assignment_overheads.pdf

- https://www.youtube.com/watch?v=dQDZNHwuuOY


Hungarian Matching Algorithm

Introduction

The Hungarian matching algorithm is a combinatorial optimization algorithm that solves the assignment problem, which can be formulated as a linear program, in polynomial time. The assignment problem is interesting, but the Hungarian algorithm is difficult to understand.

In this blog post, I would like to talk about what the assignment problem is and give some intuition behind the Hungarian algorithm.

Minimum Cost Assignment Problem

Problem Definition

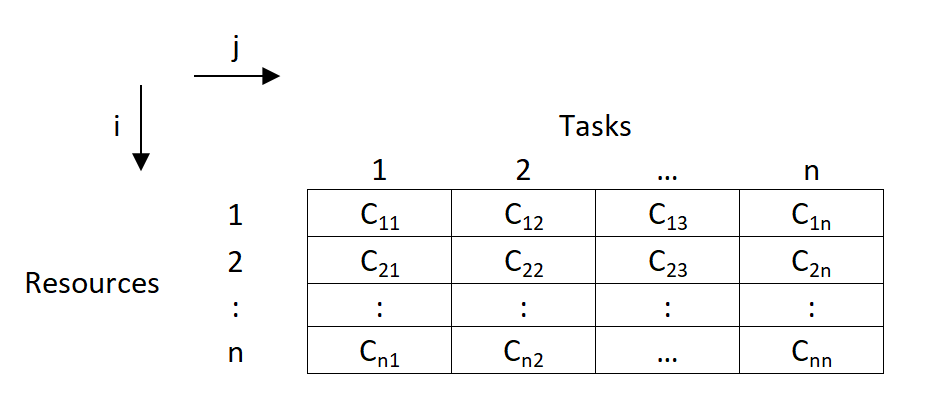

In the matrix formulation, we are given a nonnegative $n \times n$ cost matrix, where the element in the $i$-th row and $j$-th column represents the cost of assigning the $j$-th job to the $i$-th worker. We have to find an assignment of the jobs to the workers, such that each job is assigned to one worker, each worker is assigned one job, and the total cost of assignment is minimum.

Finding a brute-force solution for this problem takes $O(n!)$ time because the number of valid assignments is $n!$. We really need a better algorithm, preferably one that takes polynomial time, to solve this problem.
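For reference, a brute-force solver is only a few lines of Python. The sketch below enumerates all $n!$ permutations with `itertools.permutations`; the function name `brute_force_assignment` and the small cost matrix are hypothetical and only serve as an illustration.

```python
from itertools import permutations


def brute_force_assignment(cost):
    """Try every one of the n! assignments and keep the cheapest one."""
    n = len(cost)
    best_cost, best_perm = None, None
    for perm in permutations(range(n)):  # perm[i] = job given to worker i
        total = sum(cost[i][perm[i]] for i in range(n))
        if best_cost is None or total < best_cost:
            best_cost, best_perm = total, perm
    return best_cost, list(best_perm)


# Hypothetical 3 x 3 cost matrix, chosen only for illustration.
cost = [[4, 1, 3],
        [2, 0, 5],
        [3, 2, 2]]
print(brute_force_assignment(cost))  # (5, [1, 0, 2])
```

This is practical only for very small $n$: at $n = 12$ there are already about 479 million permutations to check.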

Maximum Cost Assignment VS Minimum Cost Assignment

A maximum cost assignment problem can be converted to a minimum cost assignment problem, and vice versa. Suppose the cost matrix is $c$. Solving the maximum cost assignment problem for cost matrix $c$ is equivalent to solving the minimum cost assignment problem for cost matrix $-c$. If a non-negative matrix is required, adding a large enough constant to every entry of $-c$ does not change which assignment is optimal. So we will only discuss the minimum cost assignment problem in this article.
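The equivalence can be checked numerically on a small case. The sketch below uses exhaustive search (not the Hungarian algorithm) on a hypothetical matrix; `best_assignment` is an illustrative helper name.

```python
from itertools import permutations


def best_assignment(cost, pick=min):
    """Exhaustively pick the assignment with the min (or max) total cost."""
    n = len(cost)
    return pick(permutations(range(n)),
                key=lambda perm: sum(cost[i][perm[i]] for i in range(n)))


# Hypothetical cost matrix, chosen only for illustration.
c = [[4, 1, 3],
     [2, 0, 5],
     [3, 2, 2]]
neg_c = [[-x for x in row] for row in c]

max_on_c = best_assignment(c, pick=max)          # maximum cost assignment on c
min_on_neg_c = best_assignment(neg_c, pick=min)  # minimum cost assignment on -c
print(max_on_c, min_on_neg_c)                    # (0, 2, 1) (0, 2, 1)
```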

Non-Square Cost Matrix

In practice, it is common to have a cost matrix that is not square. We can make the cost matrix square by adding dummy rows or columns filled with $0$, and then apply the Hungarian algorithm to solve the optimal cost assignment problem. Any worker or job matched to a dummy entry is simply left unassigned.
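A minimal sketch of the padding idea follows. The helper name `pad_to_square` is hypothetical, the $2 \times 3$ matrix is an arbitrary illustration (the first two rows of the party example used later in this document), and the padded problem is solved by exhaustive search to keep the example short.

```python
from itertools import permutations


def pad_to_square(cost, fill=0):
    """Add dummy rows or columns filled with `fill` until the matrix is square."""
    rows, cols = len(cost), len(cost[0])
    n = max(rows, cols)
    return [[cost[i][j] if i < rows and j < cols else fill for j in range(n)]
            for i in range(n)]


# Two workers, three jobs (illustrative data).
cost = [[108, 125, 150],
        [150, 135, 175]]
square = pad_to_square(cost)  # adds one dummy worker whose costs are all 0
n = len(square)

# Solve the padded problem exhaustively, then drop the dummy rows and columns.
best = min(permutations(range(n)),
           key=lambda perm: sum(square[i][perm[i]] for i in range(n)))
real_pairs = [(i, best[i]) for i in range(len(cost)) if best[i] < len(cost[0])]
total = sum(cost[i][j] for i, j in real_pairs)
print(real_pairs, total)  # [(0, 0), (1, 1)] 243
```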

Algorithm

Brilliant has a very good summary of the Hungarian algorithm for an adjacency cost matrix. Let’s walk through it.

- Step 1: Subtract the smallest entry in each row from all the entries in the row. This will make the smallest entry in the row now equal to 0.

- Step 2: Subtract the smallest entry in each column from all the entries in the column. This will make the smallest entry in the column now equal to 0.

- Step 3: Draw lines through the rows and columns that have the 0 entries such that the fewest lines possible are drawn.

- Step 4: If there are $n$ lines drawn, an optimal assignment of zeros is possible and the algorithm is finished. If the number of lines is less than $n$, then the optimal number of zeros is not yet reached. Go to the next step.

- Step 5: Find the smallest entry not covered by any line. Subtract this entry from each row that isn’t crossed out, and then add it to each column that is crossed out. Then, go back to Step 3.

Note that we could not tell the algorithm time complexity from the description above. The time complexity is actually $O(n^3)$ where $n$ is the side length of the square cost adjacency matrix.

Example

The Brilliant article on the Hungarian algorithm for an adjacency cost matrix also comes with a good example. We slightly modified the example by making the cost matrix non-square.

To avoid duplicating the solution on Brilliant, instead of solving it manually, we use the existing SciPy linear sum assignment optimizer to solve it and verify the result with a brute-force solver.
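The modified matrix itself is not reproduced here, so the sketch below assumes one possible non-square variant: the Brilliant cost table with its last column dropped. It compares `scipy.optimize.linear_sum_assignment`, which accepts rectangular matrices, against a brute-force solver. The SciPy import is guarded so that the brute-force part still runs when SciPy is not installed; `brute_force_min_cost` is an illustrative name.

```python
from itertools import permutations

try:
    import numpy as np
    from scipy.optimize import linear_sum_assignment
except ImportError:  # SciPy is optional for this sketch
    linear_sum_assignment = None


def brute_force_min_cost(cost):
    """Minimum total cost over all ways to match every row or every column."""
    rows, cols = len(cost), len(cost[0])
    if rows > cols:  # transpose so that rows <= cols; the optimum is unchanged
        cost = [list(col) for col in zip(*cost)]
        rows, cols = cols, rows
    return min(sum(cost[i][perm[i]] for i in range(rows))
               for perm in permutations(range(cols), rows))


# Assumed non-square variant: three companies, two services.
cost = [[108, 125],
        [150, 135],
        [122, 148]]

brute_cost = brute_force_min_cost(cost)
print("brute force:", brute_cost)

scipy_cost = None
if linear_sum_assignment is not None:
    c = np.array(cost)
    row_ind, col_ind = linear_sum_assignment(c)  # minimizes by default
    scipy_cost = int(c[row_ind, col_ind].sum())
    print("scipy:", scipy_cost, list(zip(row_ind.tolist(), col_ind.tolist())))
```

With this assumed matrix both solvers should agree on a minimum cost of 243.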

Key Idea

If a number is added to or subtracted from all of the entries of any one row or column of a cost matrix $c$ (all the values in $c$ are non-negative), then an optimal assignment for the resulting cost matrix is also an optimal assignment for the original cost matrix.

Here is the way to understand this. Every valid assignment selects exactly one entry from each row and exactly one entry from each column. So if a number $a$ is added to every entry of a selected row, the total cost of every valid assignment increases by exactly $a$, and if $a$ is subtracted, every total cost decreases by exactly $a$. Let $z^{\ast}$ be the total cost of an optimal assignment and $z^{\prime}$ the total cost of any other assignment, so that $z^{\ast} \leq z^{\prime}$ if the optimum is a minimum and $z^{\ast} \geq z^{\prime}$ if the optimum is a maximum. After the change, the two costs become $z^{\ast} \pm a$ and $z^{\prime} \pm a$, so the inequality between them is unchanged. This means that after a number is added to or subtracted from the selected row, the optimal assignment is still optimal. The same argument applies to adding a number to or subtracting a number from a selected column.
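The argument can be checked by listing the cost of every assignment before and after a row shift. The sketch below uses the $3 \times 3$ cost table from the Brilliant example; the helper name `all_costs` and the choice of row and shift are illustrative.

```python
from itertools import permutations


def all_costs(cost):
    """Map every assignment (as a permutation) to its total cost."""
    n = len(cost)
    return {perm: sum(cost[i][perm[i]] for i in range(n))
            for perm in permutations(range(n))}


cost = [[108, 125, 150],
        [150, 135, 175],
        [122, 148, 250]]

a, r = 108, 0                             # subtract a from every entry of row r
shifted = [row[:] for row in cost]
shifted[r] = [x - a for x in shifted[r]]

before, after = all_costs(cost), all_costs(shifted)
shifts = {before[perm] - after[perm] for perm in before}
best_before = min(before, key=before.get)
best_after = min(after, key=after.get)

print(shifts)                   # {108}: every assignment's cost moved by exactly a
print(best_before, best_after)  # (2, 1, 0) (2, 1, 0)
```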

Intuitions

Given an $m \times m$ cost matrix $c$ and an $m \times m$ assignment matrix $x$, we define $c_{ij}$ as the cost of assigning worker $i$ to task $j$, and $x_{ij} = 1$ if worker $i$ is assigned to task $j$, otherwise $x_{ij} = 0$. So the minimum cost assignment problem can be formulated mathematically as a linear programming problem as follows.

$$ \DeclareMathOperator*{\argmin}{argmin} \begin{align} &\argmin_{x} \sum_{i=1}^{m} \sum_{j=1}^{m} c_{ij} x_{ij} \\ \end{align} $$

subject to $x$ being a valid assignment

$$ \begin{align} &\sum_{i=1}^{m} x_{ij} = 1 \quad \forall j \in [1, m] \\ &\sum_{j=1}^{m} x_{ij} = 1 \quad \forall i \in [1, m] \\ &x_{ij} \in \{0, 1\} \quad \forall i \in [1, m], j \in [1, m] \\ \end{align} $$

Note that the maximum cost assignment problem can be formulated as the following minimization problem as well.

$$ \DeclareMathOperator*{\argmin}{argmin} \begin{align} &\argmin_{x} \sum_{i=1}^{m} \sum_{j=1}^{m} -c_{ij} x_{ij} \\ \end{align} $$

We further define

$$ \begin{align} z &\equiv \sum_{i=1}^{m} \sum_{j=1}^{m} c_{ij} x_{ij} \\ \end{align} $$

Suppose we somehow have a series of magic values, $u = \{u_1, u_2, \cdots, u_m\}$ and $v = \{v_1, v_2, \cdots, v_m\}$, such that

$$ \begin{align} c_{ij} - u_i - v_j \geq 0 \quad \forall i \in [1, m], j \in [1, m] \end{align} $$

$$ \begin{align} c_{ij} - u_i - v_j = 0 \quad \forall (i, j) \in \text{a valid assignment $x^0$ such that } x_{ij}^0 = 1 \end{align} $$

We would like to plug the values $u = \{u_1, u_2, \cdots, u_m\}$ and $v = \{v_1, v_2, \cdots, v_m\}$ into $z$. Note that the behavior of subtracting values from rows and columns in the Hungarian algorithm is encoded in this step.

$$ \begin{align} z &= \sum_{i=1}^{m} \sum_{j=1}^{m} c_{ij} x_{ij} \\ &= \sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j + u_i + v_j) x_{ij} \\ &= \sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij} + \sum_{i=1}^{m} \sum_{j=1}^{m} u_i x_{ij} + \sum_{i=1}^{m} \sum_{j=1}^{m} v_j x_{ij} \\ &= \sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij} + \sum_{i=1}^{m} u_i \sum_{j=1}^{m} x_{ij} + \sum_{j=1}^{m} v_j \sum_{i=1}^{m} x_{ij} \\ &= \sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij} + \sum_{i=1}^{m} u_i \times 1 + \sum_{j=1}^{m} v_j \times 1 \\ &= \sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij} + \sum_{i=1}^{m} u_i + \sum_{j=1}^{m} v_j \\ \end{align} $$

We see that different valid assignment will change the value for $\sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij}$, but the value for $\sum_{i=1}^{m} u_i + \sum_{j=1}^{m} v_j$ remains unaffected. So minimizing $z$ is equivalent to minimizing $\sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij}$.

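Because $c_{ij} - u_i - v_j \geq 0$ and $x_{ij} \geq 0$ for all $i$ and $j$, we have $\sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij} \geq 0$ for every valid assignment $x$.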

If $x = x^0$, $\sum_{i=1}^{m} \sum_{j=1}^{m} (c_{ij} - u_i - v_j) x_{ij} = 0$, which is the minimum value it can achieve.

Therefore, we have found the optimal assignment $x = x^0$, and the minimum cost is $\sum_{i=1}^{m} u_i + \sum_{j=1}^{m} v_j$.
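As a numeric illustration, the two conditions on the magic values can be checked directly on the $3 \times 3$ cost table from the Brilliant example. The particular values of `u` and `v` below are an assumption of this sketch: they were obtained by hand by accumulating the row and column operations of the Hungarian algorithm on that table, and they are not given in the original text.

```python
# Cost table from the Brilliant example (rows: companies, columns: services).
c = [[108, 125, 150],
     [150, 135, 175],
     [122, 148, 250]]

# Assumed dual values, accumulated by hand from the row/column operations.
u = [110, 135, 124]
v = [-2, 0, 40]

# Candidate assignment x0: x0[i] is the column assigned to row i.
x0 = [2, 1, 0]

m = len(c)
reduced = [[c[i][j] - u[i] - v[j] for j in range(m)] for i in range(m)]

feasible = all(value >= 0 for row in reduced for value in row)  # c_ij - u_i - v_j >= 0
tight = all(reduced[i][x0[i]] == 0 for i in range(m))           # = 0 on the assignment
primal = sum(c[i][x0[i]] for i in range(m))                     # cost of x0
dual = sum(u) + sum(v)                                          # sum of magic values

print(reduced)                        # [[0, 15, 0], [17, 0, 0], [0, 24, 86]]
print(feasible, tight, primal, dual)  # True True 407 407
```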

This partially explains why the Hungarian algorithm works. However, it does not explain why the magic values exist and how they are determined. The rationale behind this is related to the duality of a linear programming optimization problem. In our case, $x$ is the primal variable and $u$ and $v$ are the dual variables. More details can be found in Katta G. Murty’s book “Network Programming”, Chapter 3.1, which looks very complicated though. Frankly, I really need to find some time to go through this chapter.

Final Remarks

I rarely applaud deep learning algorithms. Conventional algorithms such as the Hungarian matching algorithm are truly amazing.

References

- Hungarian Maximum Matching Algorithm

- Intuition behind the Hungarian Algorithm

- The Hungarian Method for the Assignment Problem

https://leimao.github.io/blog/Hungarian-Matching-Algorithm/



Hungarian Maximum Matching Algorithm


The Hungarian matching algorithm, also called the Kuhn-Munkres algorithm, is an \(O\big(|V|^3\big)\) algorithm that can be used to find maximum-weight matchings in bipartite graphs, a task that is sometimes called the assignment problem. A bipartite graph can easily be represented by an adjacency matrix, where the weights of edges are the entries. Thinking about the graph in terms of an adjacency matrix is useful for the Hungarian algorithm.

A matching corresponds to a choice of 1s in the adjacency matrix, with at most one 1 in each row and in each column.

The Hungarian algorithm solves the following problem:

In a complete bipartite graph \(G\), find the maximum-weight matching. (Recall that a maximum-weight matching is also a perfect matching.)

This can also be adapted to find the minimum-weight matching.

Say you are having a party and you want a musician to perform, a chef to prepare food, and a cleaning service to help clean up after the party. There are three companies that provide each of these three services, but one company can only provide one service at a time (i.e. Company B cannot provide both the cleaners and the chef). You are deciding which company you should purchase each service from in order to minimize the cost of the party. You realize that this is an example of the assignment problem, and set out to make a graph out of the following information:

| Company | Cost for Musician | Cost for Chef | Cost for Cleaners |
| --- | --- | --- | --- |
| Company A | \$108 | \$125 | \$150 |
| Company B | \$150 | \$135 | \$175 |
| Company C | \$122 | \$148 | \$250 |

Can you model this table as a graph? What are the nodes? What are the edges?

Answer: The nodes are the companies and the services. The edges are weighted by the price.

What are some ways to solve the problem above? Since the table above can be thought of as a \(3 \times 3\) matrix, one could certainly solve this problem using brute force, checking every combination and seeing what yields the lowest price. However, there are \(n!\) combinations to check, and for large \(n\), this method becomes very inefficient very quickly.

The Hungarian Algorithm Using an Adjacency Matrix

With the cost matrix from the example above in mind, the Hungarian algorithm operates on this key idea: if a number is added to or subtracted from all of the entries of any one row or column of a cost matrix, then an optimal assignment for the resulting cost matrix is also an optimal assignment for the original cost matrix.

The Hungarian Method [1]

- Step 1: Subtract the smallest entry in each row from all the entries in the row. This will make the smallest entry in the row now equal to 0.

- Step 2: Subtract the smallest entry in each column from all the entries in the column. This will make the smallest entry in the column now equal to 0.

- Step 3: Draw lines through the rows and columns that have the 0 entries such that the fewest lines possible are drawn.

- Step 4: If there are \(n\) lines drawn, an optimal assignment of zeros is possible and the algorithm is finished. If the number of lines is less than \(n\), then the optimal number of zeros is not yet reached. Go to the next step.

- Step 5: Find the smallest entry not covered by any line. Subtract this entry from each row that isn’t crossed out, and then add it to each column that is crossed out. Then, go back to Step 3.

Solve for the optimal solution for the example in the introduction using the Hungarian algorithm described above.

Here is the initial adjacency matrix, with companies A, B, and C as rows and musician, chef, and cleaners as columns:

\[\begin{bmatrix} 108 & 125 & 150 \\ 150 & 135 & 175 \\ 122 & 148 & 250 \end{bmatrix}\]

Subtract the smallest value in each row from every value in the row:

\[\begin{bmatrix} 0 & 17 & 42 \\ 15 & 0 & 40 \\ 0 & 26 & 128 \end{bmatrix}\]

Now, subtract the smallest value in each column from every value in the column:

\[\begin{bmatrix} 0 & 17 & 2 \\ 15 & 0 & 0 \\ 0 & 26 & 88 \end{bmatrix}\]

Draw lines through the rows and columns that have the 0 entries such that the fewest possible lines are drawn. One line through the first column and one line through the second row cover every zero.

There are 2 lines drawn, and 2 is less than 3, so there is not yet the optimal number of zeros. Find the smallest entry not covered by any line. Subtract this entry from each row that isn’t crossed out, and then add it to each column that is crossed out. Then, go back to Step 3.

2 is the smallest entry. First, subtract it from the uncovered rows (the first and the third):

\[\begin{bmatrix} -2 & 15 & 0 \\ 15 & 0 & 0 \\ -2 & 24 & 86 \end{bmatrix}\]

Now add it to the covered column (the first):

\[\begin{bmatrix} 0 & 15 & 0 \\ 17 & 0 & 0 \\ 0 & 24 & 86 \end{bmatrix}\]

Now go back to Step 3, drawing lines through the rows and columns that have 0 entries. No two lines cover all the zeros, so 3 lines are needed (which is \(n\)), and we are done. The assignment will be where the 0's are in the matrix such that only one 0 per row and column is part of the assignment: Company A with cleaners, Company B with chef, and Company C with musician.

Replace the original values: the chosen entries are 150, 135, and 122. The Hungarian algorithm tells us that it is cheapest to go with the musician from company C, the chef from company B, and the cleaners from company A.

We can verify this by brute force:

- 108 + 135 + 250 = 493

- 108 + 148 + 175 = 431

- 150 + 125 + 250 = 525

- 150 + 148 + 150 = 448

- 122 + 125 + 175 = 422

- 122 + 135 + 150 = 407

We can see that 407 is the lowest price and matches the assignment the Hungarian algorithm determined. \(_\square\)

The Hungarian Algorithm Using a Graph

The Hungarian algorithm can also be executed by manipulating the weights of the bipartite graph in order to find a maximum-weight (or minimum-weight) matching. This is done by finding a feasible labeling of the graph whose tight edges contain a perfect matching, where a perfect matching is a matching in which every vertex is incident to exactly one edge of the matching.

How do we know that this creates a maximum-weight matching?

Claim: if \(l\) is a feasible labeling and \(M\) is a perfect matching that uses only edges \(e\) with \(l(e_x) + l(e_y) = w(e)\), then \(M\) is a maximum-weight matching. To see this, let \(M’\) be any perfect matching in \(G\). Each edge \(e\) of \(M’\) connects two vertices \(e_x\) and \(e_y\), and every vertex \(v\) is covered exactly once. With this, we have the following inequality: \[w(M’) = \sum_{e \in M’} w(e) \leq \sum_{e \in M’} \big(l(e_x) + l(e_y)\big) = \sum_{v \in V} l(v),\] where \(l(x)\) is the numeric label of node \(x\). This means that \(\sum_{v \in V} l(v)\) is an upper bound on the weight of any perfect matching. For the matching \(M\), every edge satisfies the labeling condition with equality, so \[w(M) = \sum_{e \in M} w(e) = \sum_{v \in V} l(v).\] So \(w(M’) \leq w(M)\) and \(M\) is optimal. \(_\square\)

Start the algorithm by assigning any weight to each individual node in order to form a feasible labeling of the graph \(G\). This labeling will be improved upon by finding augmenting paths for the assignment until the optimal one is found.

A feasible labeling is a labeling such that

\(l(x) + l(y) \geq w(x,y)\ \forall x \in X, y \in Y\), where \(X\) is the set of nodes on one side of the bipartite graph, \(Y\) is the other set of nodes, \(l(x)\) is the label of \(x\), etc., and \(w(x,y)\) is the weight of the edge between \(x\) and \(y\).

A simple feasible labeling is to label each node with the largest weight among the edges incident to that node. This is certain to be a feasible labeling when the weights are nonnegative: if \(A\) is a node connected to \(B\), the label of \(A\) alone is already at least \(w(A,B)\), so the label of \(A\) plus the label of \(B\) is greater than or equal to \(w(A,B)\).

A feasible labeling of nodes, where labels are in red [2].
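A feasible labeling is easy to build and check in code. The sketch below uses the common variant in which every node in \(X\) gets its largest incident weight and every node in \(Y\) gets 0. The weight matrix and the function names are hypothetical; they are not the soccer data from this article.

```python
def initial_labeling(w):
    """l(x) = largest weight of an edge at x, l(y) = 0 for every y."""
    label_x = [max(row) for row in w]
    label_y = [0] * len(w[0])
    return label_x, label_y


def is_feasible(w, label_x, label_y):
    """Check l(x) + l(y) >= w(x, y) for every pair (x, y)."""
    return all(label_x[x] + label_y[y] >= w[x][y]
               for x in range(len(w)) for y in range(len(w[0])))


def equality_edges(w, label_x, label_y):
    """Edges on which the labeling condition holds with equality."""
    return [(x, y) for x in range(len(w)) for y in range(len(w[0]))
            if label_x[x] + label_y[y] == w[x][y]]


# Hypothetical weights: w[x][y] is the weight of the edge between x and y.
w = [[8, 6, 0],
     [7, 5, 3],
     [8, 4, 2]]
label_x, label_y = initial_labeling(w)
print(label_x, label_y)                     # [8, 7, 8] [0, 0, 0]
print(is_feasible(w, label_x, label_y))     # True
print(equality_edges(w, label_x, label_y))  # [(0, 0), (1, 0), (2, 0)]
```

In this made-up instance all three tight edges end at the same node of \(Y\), so the initial matching cannot be perfect and the labeling has to be improved.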

Imagine there are four soccer players and each can play a few positions in the field. The team manager has quantified their skill level playing each position to make assignments easier.

How can players be assigned to positions in order to maximize the amount of skill points they provide?

The algorithm starts by labeling all nodes on one side of the graph with the maximum weight. This can be done by finding the maximum-weighted edge and labeling the adjacent node with it. Additionally, match the graph with those edges. If a node has two maximum edges, don’t connect them.

Although Eva is the best suited to play defense, she can't play defense and mid at the same time!

If the matching is perfect, the algorithm is done as there is a perfect matching of maximum weights. Otherwise, there will be two nodes that are not connected to any other node, like Tom and Defense. If this is the case, begin iterating.

Improve the labeling by finding the non-zero label vertex without a match, and try to find the best assignment for it. Formally, the Hungarian matching algorithm can be executed as defined below:

The Hungarian Algorithm for Graphs [3]

Given: the labeling \(l\), an equality graph \(G_l = (V, E_l)\) (the subgraph containing exactly the edges with \(l(x) + l(y) = w(x,y)\)), an initial matching \(M\) in \(G_l\), and an unmatched vertex \(u \in V\) and \(u \notin M\).

Augmenting the matching

A path is augmenting for \(M\) in \(G_l\) if it alternates between edges in the matching and edges not in the matching, and the first and last vertices are free vertices, or unmatched, in \(M\). We will keep track of a candidate augmenting path starting at the vertex \(u\). If the algorithm finds an unmatched vertex \(v\), add on to the existing augmenting path \(p\) by adding the \(u\) to \(v\) segment. Flip the matching along the path: remove from \(M\) the path edges that are in \(M\), and add the path edges that are not in \(M\) \((\)in other words, the path edges in \(E_l - M).\)

Improving the labeling

Let \(S \subseteq X\) and \(T \subseteq Y,\) where \(S\) and \(T\) are the vertices reached so far by the candidate alternating paths from \(u\). Let \(N_l(S)\) be the set of neighbors of the nodes in \(S\) along edges in \(E_l\), that is, \(N_l(S) = \{v \mid \exists u \in S: (u,v) \in E_l\}\). If \(N_l(S) = T\), then we cannot increase the size of the alternating path (and therefore can't further augment), so we need to improve the labeling. Let \(\delta_l\) be the minimum of \(l(u) + l(v) - w(u,v)\) over all of the \(u \in S\) and \(v \notin T\). Improve the labeling \(l\) to \(l'\):

- If \(r \in S,\) then \(l'(r) = l(r) - \delta_l.\)

- If \(r \in T,\) then \(l'(r) = l(r) + \delta_l.\)

- If \(r \notin S\) and \(r \notin T,\) then \(l'(r) = l(r).\)

\(l'\) is a valid labeling. Every edge of \(E_l\) between \(S\) and \(T\) stays in \(E_{l'}\), and at least one new edge from \(S\) to a vertex outside \(T\) enters \(E_{l'}.\)

Putting it all together: The Hungarian Algorithm

- Start with some matching \(M\) and a valid labeling \(l\), where \(l\) is defined as \(l(y) = 0\) for all \(y \in Y\) and \(l(x) = \text{max}_{y \in Y}\big(w(x, y)\big)\) for all \(x \in X\).

- Do these steps until a perfect matching is found \((\)when \(M\) is perfect\():\) (a) Look for an augmenting path in \(M.\) (b) If an augmenting path does not exist, improve the labeling and then go back to step (a).
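The labeling-improvement step can be sketched as a small Python function. The weights, labels, and the sets \(S\) and \(T\) below are hypothetical values chosen for illustration, and `improve_labeling` is an illustrative name rather than code from the cited notes.

```python
def improve_labeling(w, label_x, label_y, S, T):
    """Lower the labels in S and raise the labels in T by delta.

    delta is the minimum of l(x) + l(y) - w(x, y) over x in S and y not in T.
    """
    delta = min(label_x[x] + label_y[y] - w[x][y]
                for x in S for y in range(len(label_y)) if y not in T)
    new_x = [l - delta if x in S else l for x, l in enumerate(label_x)]
    new_y = [l + delta if y in T else l for y, l in enumerate(label_y)]
    return delta, new_x, new_y


# Hypothetical instance: the only tight edges are (0, 0), (1, 0), (2, 0).
w = [[8, 6, 0],
     [7, 5, 3],
     [8, 4, 2]]
label_x, label_y = [8, 7, 8], [0, 0, 0]

# Suppose x0 is matched to y0 and the search starts at the free vertex x1:
# it reaches y0 and then x0, so S = {0, 1} and T = {0} = N_l(S).
S, T = {0, 1}, {0}
delta, new_x, new_y = improve_labeling(w, label_x, label_y, S, T)
print(delta, new_x, new_y)  # 2 [6, 5, 8] [2, 0, 0]
```

After this update the edges (0, 1) and (1, 1) become tight, so the search from \(S\) can continue, while the edge (2, 0), which lies outside \(S\), is no longer tight.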

Each step either increases the size of the matching \(M\) or adds to \(E_l\) an edge that lets the alternating search from \(S\) continue. This means that the process will eventually terminate, since there are only finitely many vertices and edges in the graph \(G\). [4]

When the process terminates, \(M\) will be a perfect matching. By the Kuhn-Munkres theorem , this means that the matching is a maximum-weight matching.

The algorithm defined above can be implemented in the soccer scenario. First, the conflicting node is identified, implying that there is an alternating tree that must be reconfigured.

There is an alternating path between defense, Eva, mid, and Tom.

To find the most appropriate node, find the minimum \(\delta_l\), as defined in the labeling-improvement step above, where \(l_u\) is the label for player \(u,\) \(l_v\) is the label for position \(v,\) and \(w_{u, v}\) is the weight on that edge.

The \(\delta_l\) of each unmatched node is computed, and the minimum is found to be a value of 2, for Tom playing mid \((8 + 0 - 6 = 2).\)

The labels are then augmented and the new edges are graphed in the example. Notice that defense and mid went down by 2 points, whereas Eva’s skillset got back two points. However, this is expected as Eva can't play in both positions at once.

Augmenting path leads to relabeling of nodes, which gives rise to the maximum-weighted path.

These new edges complete the perfect matching of the graph, which implies that a maximum-weight matching has been found and the algorithm can terminate.

The complexity of the algorithm will be analyzed using the graph-based technique as a reference, yet the result is the same as for the matrix-based one.

Algorithm analysis [3]

- Each successful augmentation adds one edge to the matching, and this happens \(O\big(|V|\big)\) times.

- It takes \(O\big(|V|\big)\) time to find the right vertex for the augmenting (if there is one at all), and it is \(O\big(|V|\big)\) time to flip the matching.

- Improving the labeling takes \(O\big(|V|\big)\) time to find \(\delta_l\) and to update the labeling accordingly. We might have to improve the labeling up to \(O\big(|V|\big)\) times if there is no augmenting path. This makes for a total of \(O\big(|V|^2\big)\) time per augmentation.

- In all, there are \(O\big(|V|\big)\) iterations each taking \(O\big(|V|^2\big)\) work, leading to a total running time of \(O\big(|V|^3\big)\).


- [1] Bruff, D. The Assignment Problem and the Hungarian Method. Retrieved June 26, 2016, from http://www.math.harvard.edu/archive/20_spring_05/handouts/assignment_overheads.pdf

- [2] Golin, M. Bipartite Matching & the Hungarian Method. Retrieved June 26, 2016, from http://www.cse.ust.hk/~golin/COMP572/Notes/Matching.pdf

- [3] Grinman, A. The Hungarian Algorithm for Weighted Bipartite Graphs. Retrieved June 26, 2016, from http://math.mit.edu/~rpeng/18434/hungarianAlgorithm.pdf

- [4] Golin, M. Bipartite Matching & the Hungarian Method. Retrieved June 26, 2016, from http://www.cse.ust.hk/~golin/COMP572/Notes/Matching.pdf


The Hungarian algorithm

The Hungarian algorithm consists of the four steps below. The first two steps are executed once, while Steps 3 and 4 are repeated until an optimal assignment is found. The input of the algorithm is an n by n square matrix with only nonnegative elements.

Step 1: Subtract row minima

For each row, find the lowest element and subtract it from each element in that row.

Step 2: Subtract column minima

Similarly, for each column, find the lowest element and subtract it from each element in that column.

Step 3: Cover all zeros with a minimum number of lines

Cover all zeros in the resulting matrix using a minimum number of horizontal and vertical lines. If n lines are required, an optimal assignment exists among the zeros. The algorithm stops.

If fewer than n lines are required, continue with Step 4.

Step 4: Create additional zeros

Find the smallest element (call it k ) that is not covered by a line in Step 3. Subtract k from all uncovered elements, and add k to all elements that are covered twice.
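Step 4 can be sketched as follows. The function name and the representation of the cover as two sets of indices are illustrative assumptions; the matrix and the cover come from the party example worked through earlier in this document. Note that subtracting k from uncovered elements and adding it to doubly covered ones gives the same result as subtracting k from every uncovered row and then adding k to every covered column.

```python
def create_additional_zeros(matrix, covered_rows, covered_cols):
    """Subtract k from uncovered entries and add k to doubly covered entries."""
    n = len(matrix)
    k = min(matrix[i][j]
            for i in range(n) if i not in covered_rows
            for j in range(n) if j not in covered_cols)
    result = []
    for i, row in enumerate(matrix):
        new_row = []
        for j, value in enumerate(row):
            if i not in covered_rows and j not in covered_cols:
                value -= k  # not covered by any line
            elif i in covered_rows and j in covered_cols:
                value += k  # covered twice (a row line and a column line)
            new_row.append(value)
        result.append(new_row)
    return k, result


# Reduced matrix of the party example, covered by row 1 and column 0.
reduced = [[0, 17, 2],
           [15, 0, 0],
           [0, 26, 88]]
k, adjusted = create_additional_zeros(reduced, covered_rows={1}, covered_cols={0})
print(k)         # 2
print(adjusted)  # [[0, 15, 0], [17, 0, 0], [0, 24, 86]]
```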


HungarianAlgorithm.com © 2013-2024

Hungarian Method

The Hungarian method is a combinatorial optimization technique that solves the assignment problem in polynomial time and anticipated later primal-dual methods. In 1955, Harold Kuhn used the term “Hungarian method” to honour two Hungarian mathematicians, Dénes Kőnig and Jenő Egerváry. Let’s go through the steps of the Hungarian method with the help of a solved example.

Hungarian Method to Solve Assignment Problems

The Hungarian method is a simple way to solve assignment problems. Let us first discuss the assignment problems before moving on to learning the Hungarian method.

What is an Assignment Problem?

An assignment problem is a special type of transportation problem. The goal is to allocate a number of resources to an equal number of activities, one resource per activity, so that the overall cost of allocation is minimised or the total profit is maximised.

The problem arises because available resources such as workers and machines have varying degrees of efficiency for executing different activities, and hence the cost, profit, or loss of conducting such activities varies.

Assume we have ‘n’ jobs to do on ‘m’ machines (i.e., one job to one machine). Our goal is to assign jobs to machines for the least amount of money possible (or maximum profit), based on the notion that each machine can accomplish each task, but at variable levels of efficiency.

Hungarian Method Steps

Check to see if the number of rows and columns are equal; if they are, the assignment problem is considered to be balanced. Then go to step 1. If it is not balanced, it should be balanced before the algorithm is applied.

Step 1 – In the given cost matrix, subtract the least cost element of each row from all the entries in that row. Make sure that each row has at least one zero.

Step 2 – In the resultant cost matrix produced in step 1, subtract the least cost element in each column from all the components in that column, ensuring that each column contains at least one zero.

Step 3 – Assign zeros

- Examine the rows one by one until you find a row with exactly one unmarked zero. Encircle this zero to make an assignment. Cross out all other zeros in the column of this encircled zero, because they cannot be used in any future assignment. Continue in this manner until you’ve gone through all of the rows.

- Examine the columns one by one until you find one with exactly one unmarked zero. Encircle this zero to make an assignment, and cross out any other zero in its row. Continue until you’ve gone through all of the columns.

Step 4 – Perform the Optimal Test

- The present assignment is optimal if each row and column has exactly one encircled zero.

- The present assignment is not optimal if at least one row or column is missing an assignment (i.e., if at least one row or column is missing one encircled zero). In that case, continue to step 5.

Step 5 – Draw the least number of straight lines to cover all of the zeros as follows:

(a) Mark the rows that do not have an assignment.

(b) Mark the columns that have zeros in marked rows (if they haven’t already been marked).

(c) Mark the rows that have assignments in marked columns (if they haven’t previously been marked).

(d) Repeat (b) and (c) until no further marking is needed.

(e) Draw lines through all unmarked rows and all marked columns. If the number of these lines equals the order of the matrix, then the solution is optimal; otherwise, it is not.
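The marking procedure of step 5 can be sketched in Python. The sketch follows the standard form of the rule, in which the lines are drawn through the unmarked rows and the marked columns, and it assumes that the assignment from step 3 is as large as possible. The function name, the `assigned_col` representation, and the test matrix (the reduced party example from earlier in this document) are illustrative choices.

```python
def cover_lines(matrix, assigned_col):
    """Return (rows, cols) of the lines that cover all zeros.

    assigned_col[i] is the column of the encircled zero in row i,
    or None if row i has no assignment.
    """
    n = len(matrix)
    marked_rows = {i for i in range(n) if assigned_col[i] is None}  # (a)
    marked_cols = set()
    changed = True
    while changed:                                                  # (d)
        changed = False
        for i in list(marked_rows):                                 # (b)
            for j in range(n):
                if matrix[i][j] == 0 and j not in marked_cols:
                    marked_cols.add(j)
                    changed = True
        for i in range(n):                                          # (c)
            if assigned_col[i] in marked_cols and i not in marked_rows:
                marked_rows.add(i)
                changed = True
    line_rows = sorted(set(range(n)) - marked_rows)  # lines through unmarked rows
    line_cols = sorted(marked_cols)                  # lines through marked columns
    return line_rows, line_cols


reduced = [[0, 17, 2],
           [15, 0, 0],
           [0, 26, 88]]
# Row 0 is assigned column 0, row 1 is assigned column 1, row 2 is unassigned.
print(cover_lines(reduced, [0, 1, None]))  # ([1], [0])
```

Here two lines (row 1 and column 0) cover all zeros, which is fewer than the order 3 of the matrix, so the current solution is not optimal.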

Step 6 – Find the lowest cost factor that is not covered by the straight lines. Subtract this least-cost component from all the uncovered elements and add it to all the elements that are at the intersection of these straight lines, but leave the rest of the elements alone.

Step 7 – Repeat steps 3 – 6 until an optimal assignment is found.

Hungarian Method Example

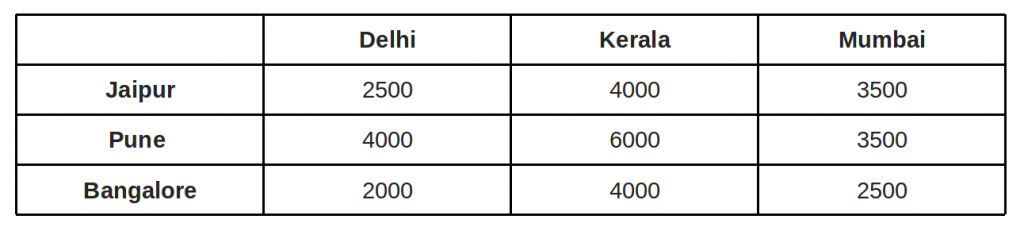

Use the Hungarian method to solve the given assignment problem stated in the table. The entries in the matrix represent each man’s processing time in hours.

\(\begin{array}{l}\begin{bmatrix} & I & II & III & IV & V \\1 & 20 & 15 & 18 & 20 & 25 \\2 & 18 & 20 & 12 & 14 & 15 \\3 & 21 & 23 & 25 & 27 & 25 \\4 & 17 & 18 & 21 & 23 & 20 \\5 & 18 & 18 & 16 & 19 & 20 \\\end{bmatrix}\end{array} \)

With 5 jobs and 5 men, the stated problem is balanced.

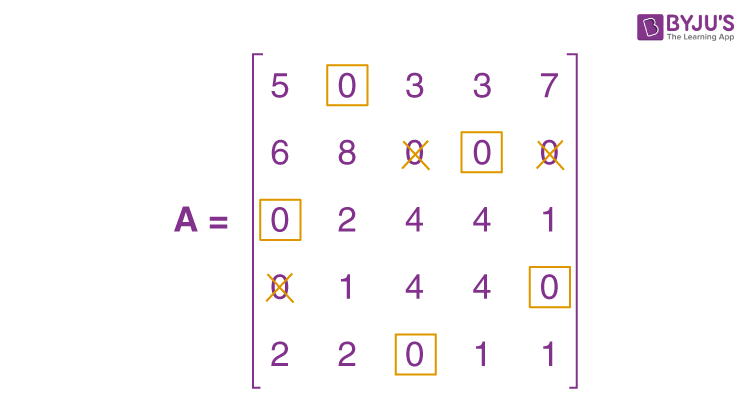

\(\begin{array}{l}A = \begin{bmatrix}20 & 15 & 18 & 20 & 25 \\18 & 20 & 12 & 14 & 15 \\21 & 23 & 25 & 27 & 25 \\17 & 18 & 21 & 23 & 20 \\18 & 18 & 16 & 19 & 20 \\\end{bmatrix}\end{array} \)

Subtract the lowest cost element in each row from all of the elements in the given cost matrix’s row. Make sure that each row has at least one zero.

\(\begin{array}{l}A = \begin{bmatrix}5 & 0 & 3 & 5 & 10 \\6 & 8 & 0 & 2 & 3 \\0 & 2 & 4 & 6 & 4 \\0 & 1 & 4 & 6 & 3 \\2 & 2 & 0 & 3 & 4 \\\end{bmatrix}\end{array} \)

Subtract the least cost element in each column from all of the elements in that column of the row-reduced matrix. Check to see if each column has at least one zero.

\(\begin{array}{l}A = \begin{bmatrix}5 & 0 & 3 & 3 & 7 \\6 & 8 & 0 & 0 & 0 \\0 & 2 & 4 & 4 & 1 \\0 & 1 & 4 & 4 & 0 \\2 & 2 & 0 & 1 & 1 \\\end{bmatrix}\end{array} \)

When the zeros are assigned, we get the following:

The present assignment is optimal because each row and each column contains exactly one encircled zero.

The optimal assignment is 1 → II, 2 → IV, 3 → I, 4 → V, and 5 → III.

Hence, z = 15 + 14 + 21 + 20 + 16 = 86 hours is the optimal time.
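The reductions and the final answer of this example can be checked with a short Python sketch. The helper names (`reduce_rows`, `reduce_cols`, `brute_force`) are illustrative, and the brute-force search over all 5! permutations is a verification device assumed here, not the Hungarian method itself.

```python
from itertools import permutations

# Cost matrix from the example (rows: men 1-5, columns: jobs I-V).
cost = [
    [20, 15, 18, 20, 25],
    [18, 20, 12, 14, 15],
    [21, 23, 25, 27, 25],
    [17, 18, 21, 23, 20],
    [18, 18, 16, 19, 20],
]


def reduce_rows(m):
    """Subtract each row's minimum from that row."""
    return [[v - min(row) for v in row] for row in m]


def reduce_cols(m):
    """Subtract each column's minimum from that column."""
    col_min = [min(col) for col in zip(*m)]
    return [[v - col_min[j] for j, v in enumerate(row)] for row in m]


def brute_force(m):
    """Try every one-to-one assignment and keep the cheapest."""
    n = len(m)
    best = min(permutations(range(n)),
               key=lambda p: sum(m[i][p[i]] for i in range(n)))
    return best, sum(m[i][best[i]] for i in range(n))


reduced = reduce_cols(reduce_rows(cost))
best, total = brute_force(cost)
print(reduced)
print(best, total)  # (1, 3, 0, 4, 2) 86
```

The permutation `(1, 3, 0, 4, 2)` uses zero-based column indices, so it reads as 1 → II, 2 → IV, 3 → I, 4 → V, 5 → III.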

Practice Question on Hungarian Method

Use the Hungarian method to solve the following assignment problem shown in table. The matrix entries represent the time it takes for each job to be processed by each machine in hours.

\(\begin{array}{l}\begin{bmatrix}J/M & I & II & III & IV & V \\1 & 9 & 22 & 58 & 11 & 19 \\2 & 43 & 78 & 72 & 50 & 63 \\3 & 41 & 28 & 91 & 37 & 45 \\4 & 74 & 42 & 27 & 49 & 39 \\5 & 36 & 11 & 57 & 22 & 25 \\\end{bmatrix}\end{array} \)

Frequently Asked Questions on Hungarian Method

What is the Hungarian method?

The Hungarian method is a combinatorial optimization technique that solves the assignment problem in polynomial time and that anticipated later primal–dual methods.

What are the steps involved in Hungarian method?

The following is a quick overview of the Hungarian method: Step 1: Subtract the row minima. Step 2: Subtract the column minima. Step 3: Cover all zeros with the minimum number of lines. Step 4: Create additional zeros.

What is the purpose of the Hungarian method?

When workers are assigned to certain activities based on cost, the Hungarian method is beneficial for identifying minimum costs.

Nash Balanced Assignment Problem

- Conference paper

- First Online: 21 November 2022

- Minh Hieu Nguyen, Mourad Baiou & Viet Hung Nguyen

Part of the book series: Lecture Notes in Computer Science ((LNCS,volume 13526))

Included in the following conference series:

- International Symposium on Combinatorial Optimization

In this paper, we consider a variant of the classic Assignment Problem (AP), called the Balanced Assignment Problem (BAP) [ 2 ]. The BAP seeks to find an assignment solution which has the smallest value of max-min distance : the difference between the maximum assignment cost and the minimum one. However, by minimizing only the max-min distance, the total cost of the BAP solution is neglected and it may lead to an inefficient solution in terms of total cost. Hence, we propose a fair way based on Nash equilibrium [ 1 , 3 , 4 ] to inject the total cost into the objective function of the BAP for finding assignment solutions having a better trade-off between the two objectives: the first aims at minimizing the total cost and the second aims at minimizing the max-min distance. For this purpose, we introduce the concept of Nash Fairness (NF) solutions based on the definition of proportional-fair scheduling adapted in the context of the AP: a transfer of utilities between the total cost and the max-min distance is considered to be fair if the percentage increase in the total cost is smaller than the percentage decrease in the max-min distance and vice versa.

We first show the existence of a NF solution for the AP which is exactly the optimal solution minimizing the product of the total cost and the max-min distance. However, finding such a solution may be difficult, as it requires minimizing a concave function. The main result of this paper is to show that finding all NF solutions can be done in polynomial time. For that, we propose a Newton-based iterative algorithm converging to NF solutions in polynomial time. It consists of optimizing a sequence of linear combinations of the two objectives based on the Weighted Sum Method [ 5 ]. Computational results on various instances of the AP are presented and discussed.

References

1. Bertsimas, D., Farias, V.F., Trichakis, N.: The price of fairness. Oper. Res. 59(1), 17–31 (2011)

2. Martello, S., Pulleyblank, W.R., Toth, P., De Werra, D.: Balanced optimization problems. Oper. Res. Lett. 3(5), 275–278 (1984)

3. Kelly, F.P., Maullo, A.K., Tan, D.K.H.: Rate control for communication networks: shadow prices, proportional fairness and stability. J. Oper. Res. Soc. 49(3), 237–252 (1997). https://doi.org/10.1057/palgrave.jors.2600523

4. Ogryczak, W., Luss, H., Pioro, M., Nace, D., Tomaszewski, A.: Fair optimization and networks: a survey. J. Appl. Math. 2014, 1–26 (2014)

5. Marler, R.T., Arora, J.S.: The weighted sum method for multi-objective optimization: new insights. Struct. Multi. Optim. 41(6), 853–862 (2010)

6. Heller, I., Tompkins, C.B.: An extension of a theorem of Dantzig’s. Ann. Math. Stud. (38), 247–254 (1956)

7. Kuhn, H.W.: The Hungarian method for the assignment problem. Naval Res. Logist. Q. 2(1–2), 83–97 (1955)

8. Martello, S.: Most and least uniform spanning trees. Discrete Appl. Math. 15(2), 181–197 (1986)

9. Beasley, J.E.: Linear programming on Cray supercomputers. J. Oper. Res. Soc. 41, 133–139 (1990)

10. Nguyen, M.H., Baiou, M., Nguyen, V.H., Vo, T.Q.T.: Nash fairness solutions for balanced TSP. In: International Network Optimization Conference (INOC2022) (2022)

Author information

Authors and affiliations.

INP Clermont Auvergne, Univ Clermont Auvergne, Mines Saint-Etienne, CNRS, UMR 6158 LIMOS, 1 Rue de la Chebarde, Aubiere Cedex, France

Minh Hieu Nguyen, Mourad Baiou & Viet Hung Nguyen

Corresponding author

Correspondence to Viet Hung Nguyen .

Editor information

Editors and affiliations.

ESSEC Business School of Paris, Cergy Pontoise Cedex, France

Ivana Ljubić

IBM TJ Watson Research Center, Yorktown Heights, NY, USA

Francisco Barahona

Georgia Institute of Technology, Atlanta, GA, USA

Santanu S. Dey

Université Paris-Dauphine, Paris, France

A. Ridha Mahjoub

Proposition 1 . There may be more than one NF solution for the AP.

Let us illustrate this by an instance of the AP having the following cost matrix

By checking all feasible assignment solutions in this instance, we easily obtain four assignment solutions \((1-1, 2-2, 3-3)\), \((1-2, 2-3, 3-1)\), \((1-3, 2-2, 3-1)\) and \((1-3, 2-1, 3-2)\) corresponding to 4 NF solutions (280, 36), (320, 32), (340, 30) and (364, 28). Here \(i-j\), where \(1 \le i,j \le 3\), denotes the assignment of worker \(i\) to job \(j\) in the solution of this instance. \(\square \)
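As a sanity check, the four (total cost, max-min distance) pairs can be compared against one another in Python. The sketch assumes that the NF condition takes the proportional-fairness form \(P'/P + Q'/Q \ge 2\) for every alternative \((P',Q')\), which is how the description in the abstract is read here. It only compares the four listed pairs with each other, since the cost matrix is not reproduced; the function name `is_nf_among` is hypothetical.

```python
# (P, Q) = (total cost, max-min distance) for the four solutions listed above.
candidates = [(280, 36), (320, 32), (340, 30), (364, 28)]


def is_nf_among(point, others):
    """Assumed NF test: P'/P + Q'/Q >= 2 for every alternative (P', Q').

    Written as P'*Q + Q'*P >= 2*P*Q to stay in exact integer arithmetic.
    """
    p, q = point
    return all(p2 * q + q2 * p >= 2 * p * q for p2, q2 in others)


nf_flags = {c: is_nf_among(c, candidates) for c in candidates}
print(nf_flags)  # every pair passes the test against the other three
```

Under that assumption none of the four pairs is dominated by another in the proportional-fairness sense, which is consistent with the proposition.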

We recall below the proofs of some recent results that we have published in [ 10 ]. They are needed to prove the new results presented in this paper.

Theorem 2 [ 10 ] . \((P^{*},Q^{*}) = {{\,\mathrm{arg\,min}\,}}_{(P,Q) \in \mathcal {S}} PQ\) is a NF solution.

Obviously, there always exists a solution \((P^{*},Q^{*}) \in \mathcal {S}\) such that

Now \(\forall (P',Q') \in \mathcal {S}\) we have \(P'Q' \ge P^{*}Q^{*}\) . Then

The first inequality holds by the Cauchy-Schwarz inequality.

Hence, \((P^{*},Q^{*})\) is a NF solution. \(\square \)

Theorem 3 [ 10 ] . \((P^{*},Q^{*}) \in \mathcal {S}\) is a NF solution if and only if \((P^{*},Q^{*})\) is an optimal solution of \(\mathcal {P(\alpha ^{*})}\) where \(\alpha ^{*} = \frac{Q^{*}}{P^{*}}\) .

Firstly, let \((P^{*},Q^{*})\) be a NF solution and \(\alpha ^{*} = \frac{Q^{*}}{P^{*}}\) . We will show that \((P^{*},Q^{*})\) is an optimal solution of \(\mathcal {P(\alpha ^{*})}\) .

Since \((P^{*},Q^{*})\) is a NF solution, we have

Since \(\alpha ^{*} = \frac{Q^{*}}{P^{*}}\) , we have \(\alpha ^{*}P^{*}+Q^{*} = 2Q^{*}\) .

Dividing two sides of ( 6 ) by \(P^{*} > 0\) we obtain

So we deduce from ( 7 )

Hence, \((P^{*},Q^{*})\) is an optimal solution of \(\mathcal {P}(\alpha ^{*})\) .

Now suppose \(\alpha ^{*} = \frac{Q^{*}}{P^{*}}\) and \((P^{*},Q^{*})\) is an optimal solution of \(\mathcal {P}(\alpha ^{*})\) , we show that \((P^{*},Q^{*})\) is a NF solution.

If \((P^{*},Q^{*})\) is not a NF solution, there exists a solution \((P',Q') \in \mathcal {S}\) such that

We have then

which contradicts the optimality of \((P^{*},Q^{*})\) . \(\square \)

Lemma 3 [ 10 ] . Let \(\alpha , \alpha ' \in \mathbb {R}_+\) and \((P_{\alpha }, Q_{\alpha })\) , \((P_{\alpha '}, Q_{\alpha '})\) be the optimal solutions of \(\mathcal {P(\alpha )}\) and \(\mathcal {P(\alpha ')}\) respectively, if \(\alpha \le \alpha '\) then \(P_{\alpha } \ge P_{\alpha '}\) and \(Q_{\alpha } \le Q_{\alpha '}\) .

The optimality of \((P_{\alpha }, Q_{\alpha })\) and \((P_{\alpha '}, Q_{\alpha '})\) gives

By adding both sides of ( 8a ) and ( 8b ), we obtain \((\alpha - \alpha ') (P_{\alpha } - P_{\alpha '}) \le 0\) . Since \(\alpha \le \alpha '\) , it follows that \(P_{\alpha } \ge P_{\alpha '}\) .

On the other hand, inequality ( 8a ) implies \(Q_{\alpha '} - Q_{\alpha } \ge \alpha (P_{\alpha } - P_{\alpha '}) \ge 0\) that leads to \(Q_{\alpha } \le Q_{\alpha '}\) . \(\square \)
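The two optimality inequalities used in this proof can be written out explicitly. The block below is a reconstruction under the assumption, suggested by Theorem 3 and the statement of Lemma 3, that \(\mathcal{P}(\alpha)\) minimizes \(\alpha P + Q\) over \(\mathcal{S}\); the labels follow the references ( 8a ) and ( 8b ) in the text.

```latex
\begin{align}
\alpha P_{\alpha} + Q_{\alpha} &\le \alpha P_{\alpha'} + Q_{\alpha'} \tag{8a} \\
\alpha' P_{\alpha'} + Q_{\alpha'} &\le \alpha' P_{\alpha} + Q_{\alpha} \tag{8b}
\end{align}
% Adding (8a) and (8b) and cancelling the Q terms:
%   \alpha P_{\alpha} + \alpha' P_{\alpha'} \le \alpha P_{\alpha'} + \alpha' P_{\alpha}
%   \iff (\alpha - \alpha')(P_{\alpha} - P_{\alpha'}) \le 0 .
% Rearranging (8a) alone:
%   Q_{\alpha'} - Q_{\alpha} \ge \alpha (P_{\alpha} - P_{\alpha'}) .
```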

Lemma 4 [ 10 ] . During the execution of Procedure Find ( \(\alpha _{0})\) in Algorithm 1 , \(\alpha _{i} \in [0,1], \, \forall i \ge 1\) . Moreover, if \(T_{0} \ge 0\) then the sequence \(\{\alpha _i\}\) is non-increasing and \(T_{i} \ge 0, \, \forall i \ge 0\) . Otherwise, if \(T_{0} \le 0\) then the sequence \(\{\alpha _i\}\) is non-decreasing and \(T_{i} \le 0, \, \forall i \ge 0\) .

Since \(P \ge Q \ge 0, \, \forall (P, Q) \in \mathcal {S}\) , it follows that \(\alpha _{i+1} = \frac{Q_i}{P_i} \in [0,1], \, \forall i \ge 0\) .

We first consider \(T_{0} \ge 0\). We prove \(\alpha _i \ge \alpha _{i+1}, \, \forall i \ge 0\) by induction on \(i\). For \(i = 0\), we have \(T_{0} = \alpha _{0} P_{0} - Q_{0} = P_{0}(\alpha _{0}-\alpha _{1}) \ge 0\), so \(\alpha _{0} \ge \alpha _{1}\). Suppose that the hypothesis holds up to \(i = k \ge 0\); we will prove that it also holds for \(i = k+1\).

Indeed, we have

The inductive hypothesis gives \(\alpha _k \ge \alpha _{k+1}\), which implies \(P_{k+1} \ge P_k > 0\) and \(Q_{k} \ge Q_{k+1} \ge 0\) according to Lemma 3. This leads to \(Q_{k}P_{k+1} - P_{k}Q_{k+1} \ge 0\) and then \(\alpha _{k+1} - \alpha _{k+2} \ge 0\).

Hence, we have \(\alpha _{i} \ge \alpha _{i+1}, \, \forall i \ge 0\) .

Consequently, \(T_{i} = \alpha _{i}P_{i} - Q_{i} = P_{i}(\alpha _{i}-\alpha _{i+1}) \ge 0, \, \forall i \ge 0\) .

Similarly, if \(T_{0} \le 0\) we obtain that the sequence \(\{\alpha _i\}\) is non-decreasing and \(T_{i} \le 0, \, \forall i \ge 0\) . That concludes the proof. \(\square \)

Lemma 5 [ 10 ] . From each \(\alpha _{0} \in [0,1]\) , Procedure Find \((\alpha _{0})\) converges to a coefficient \(\alpha _{k} \in \mathcal {C}_{0}\) such that \(\alpha _{k}\) is the unique element of \(\mathcal {C}_{0}\) between \(\alpha _{0}\) and \(\alpha _{k}\) .

As a consequence of Lemma 4 , Procedure \(\textit{Find}(\alpha _{0})\) converges to a coefficient \(\alpha _{k} \in [0,1], \forall \alpha _{0} \in [0,1]\) .

By the stopping criterion of Procedure Find \((\alpha _{0})\) , when \(T_{k} = \alpha _{k} P_{k} - Q_{k} = 0\) we obtain \(\alpha _{k} \in \mathcal {C}_{0}\) and \((P_{k},Q_{k})\) is a NF solution (Theorem 3).

If \(T_{0} = 0\) then obviously \(\alpha _{k} = \alpha _{0}\) . We consider \(T_{0} > 0\), so that the sequence \(\{\alpha _i\}\) is non-negative and non-increasing. We will show that \([\alpha _{k},\alpha _{0}] \cap \mathcal {C}_{0} = \{\alpha _{k}\}\) .

Suppose that we have \(\alpha \in (\alpha _{k},\alpha _{0}]\) and \(\alpha \in \mathcal {C}_{0}\) corresponding to a NF solution ( P , Q ). Then there exists \(1 \le i \le k\) such that \(\alpha \in (\alpha _{i}, \alpha _{i-1}]\) . Since \(\alpha \le \alpha _{i-1}\) , we have \(P \ge P_{i-1}\) and \(Q \le Q_{i-1}\) due to Lemma 3 . Thus, we get

By the definitions of \(\alpha \) and \(\alpha _{i}\) , inequality ( 9 ) is equivalent to \(\alpha \le \alpha _{i}\) which leads to a contradiction.

By repeating the same argument for \(T_{0} < 0\) , we also have a contradiction. \(\square \)
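The iteration analysed in Lemmas 4 and 5 can be sketched as follows. Algorithm 1 itself is not reproduced in this excerpt, so the code is an assumption built from the quantities named in the proofs: \(T_i = \alpha_i P_i - Q_i\), the update \(\alpha_{i+1} = Q_i / P_i\), and the stopping test \(T_k = 0\). The subproblem \(\mathcal{P}(\alpha)\) is replaced by a hypothetical stand-in that minimizes \(\alpha P + Q\) over an explicit finite list, here the four pairs from Proposition 1 rather than the full solution set.

```python
from fractions import Fraction


def solve_weighted(points, alpha):
    """Hypothetical stand-in for P(alpha): minimize alpha*P + Q over a list."""
    return min(points, key=lambda pq: alpha * pq[0] + pq[1])


def find(points, alpha0, max_iter=100):
    """Sketch of Procedure Find: iterate alpha <- Q/P until T = alpha*P - Q = 0."""
    alpha = Fraction(alpha0)
    for _ in range(max_iter):
        p, q = solve_weighted(points, alpha)
        t = alpha * p - q            # T_i = alpha_i * P_i - Q_i
        if t == 0:
            return alpha, (p, q)
        alpha = Fraction(q, p)       # alpha_{i+1} = Q_i / P_i
    raise RuntimeError("no convergence within max_iter iterations")


points = [(280, 36), (320, 32), (340, 30), (364, 28)]
from_one = find(points, 1)    # T_0 > 0, so the alphas decrease
from_zero = find(points, 0)   # T_0 < 0, so the alphas increase
print(from_one)   # (Fraction(9, 70), (280, 36))
print(from_zero)  # (Fraction(1, 13), (364, 28))
```

Exact rational arithmetic (`Fraction`) is used so that the test \(T = 0\) is not affected by floating-point rounding.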

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper.

Nguyen, M.H., Baiou, M., Nguyen, V.H. (2022). Nash Balanced Assignment Problem. In: Ljubić, I., Barahona, F., Dey, S.S., Mahjoub, A.R. (eds) Combinatorial Optimization. ISCO 2022. Lecture Notes in Computer Science, vol 13526. Springer, Cham. https://doi.org/10.1007/978-3-031-18530-4_13

DOI : https://doi.org/10.1007/978-3-031-18530-4_13

Published : 21 November 2022

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-18529-8

Online ISBN : 978-3-031-18530-4

eBook Packages : Computer Science Computer Science (R0)

Linear assignment with non-perfect matching

Dec 8, 2020

The linear assignment problem (or simply assignment problem) is the problem of finding a matching between two sets that minimizes the sum of pair-wise assignment costs. This can be expressed as finding a matching (or independent edge set) in a bipartite graph \(G = (U, V, E)\) that minimizes the sum of edge weights. The edge weights may be positive or negative and the bipartite graph does not need to be complete : if there is no edge between two vertices then they cannot be associated. Note that a maximum-weight assignment can be obtained by negating the weights and finding a minimum-weight assignment.

The simplest form of the assignment problem assumes that the bipartite graph is balanced (the two sets of vertices are the same size) and that there exists a perfect matching (in which every vertex has a match). Let \(n\) be the number of elements in each set and let \(C\) be a square matrix of size \(n \times n\) that contains the edge weights. Missing edges are represented by \(\infty\), such that \(C_{i j} < \infty \Leftrightarrow (i, j) \in E\). The assignment problem can then be clearly expressed as an integer linear program : (note that the problem is not actually solved using a general-purpose ILP solver, it is just a convenient framework in which to express the problem)
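One standard way to write this program is sketched below. It assumes binary variables \(x_{ij}\) that equal 1 when \(i\) is matched to \(j\), with \(x_{ij}\) fixed to 0 wherever \(C_{ij} = \infty\); the variable name is an assumption of this sketch.

```latex
\begin{aligned}
\min_{x} \quad & \sum_{i=1}^{n} \sum_{j=1}^{n} C_{ij}\, x_{ij} \\
\text{s.t.} \quad & \sum_{j=1}^{n} x_{ij} = 1 \quad \forall i, \\
& \sum_{i=1}^{n} x_{ij} = 1 \quad \forall j, \\
& x_{ij} \in \{0, 1\} \quad \forall i, j.
\end{aligned}
```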

The constraint that the sum of each row and column is equal to one ensures that each element has exactly one match. However, what happens when the graph is not balanced or does not contain a perfect matching? We cannot enforce the sums to be equal to one. Which problem should be solved?

I recommend reading the tech report “On Minimum-Cost Assignments in Unbalanced Bipartite Graphs” by Lyle Ramshaw and Robert E. Tarjan. I will summarize some of the main points here.

Let us consider a more general, rectangular problem of size \(r \times n\) and assume (without loss of generality) that \(r \le n\). If \(r = n\) then the problem is balanced, if \(r < n\) it is unbalanced.

Clearly an unbalanced problem cannot have a perfect matching, since there will be at least \(n - r\) unmatched elements in the larger set. However, it may be possible to find a matching in which every vertex in the smaller set has a match. This is referred to as a one-sided perfect matching and the optimization problem can be expressed:

The inequality constraints enforce that each element in the smaller set has exactly one match while each element in the larger set has at most one match. Ramshaw and Tarjan outline a method to reduce from an unbalanced problem to a balanced problem while preserving sparsity. A simple alternative is to add \(n - r\) rows of zeros and then exclude these edges from the eventual solution. Most libraries for the assignment problem solve either the balanced or unbalanced version of this problem (see the later section).
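A sketch of the one-sided program, assuming the rows \(i = 1, \dots, r\) index the smaller set and the columns \(j = 1, \dots, n\) the larger one, with the same binary variables \(x_{ij}\) as an illustrative convention:

```latex
\begin{aligned}
\min_{x} \quad & \sum_{i=1}^{r} \sum_{j=1}^{n} C_{ij}\, x_{ij} \\
\text{s.t.} \quad & \sum_{j=1}^{n} x_{ij} = 1 \quad \forall i \in \{1, \dots, r\}, \\
& \sum_{i=1}^{r} x_{ij} \le 1 \quad \forall j \in \{1, \dots, n\}, \\
& x_{ij} \in \{0, 1\} \quad \forall i, j.
\end{aligned}
```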

However, whether balanced or unbalanced, it may still occur that the constraint set is infeasible, meaning that there does not exist a (one-sided) perfect matching. Let \(\nu(G) \le r\) denote the size of the largest matching in the graph. If \(\nu(G) < r\), then there does not exist a one-sided perfect matching and all possible matchings are imperfect.

Ramshaw and Tarjan actually outline three different versions of the assignment problem:

- perfect matching

- imperfect matching

- minimum-weight matching

The imperfect matching problem is to find the matching of size \(\nu(G)\) with the minimum cost. The minimum-weight matching problem is to find the matching of any size with the minimum cost. If \(\nu(G) = r = n\), then perfect and imperfect matching coincide. Otherwise, when \(\nu(G) < n\), there does not exist a perfect matching.

The imperfect matching problem can be expressed

and the minimum-weight matching problem can be expressed

Ramshaw and Tarjan show that both of these problems can be reduced to finding a perfect matching in a balanced graph. When using linear assignment, we should carefully consider which of the three problems we actually want to solve.
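For reference, the two programs can be sketched as follows, based on the verbal definitions above (a matching of size \(\nu(G)\) versus a matching of any size). The binary variables \(x_{ij}\) are an assumed notation.

```latex
% Imperfect matching: minimum cost among matchings of maximum size \nu(G)
\begin{aligned}
\min_{x} \quad & \sum_{i=1}^{r} \sum_{j=1}^{n} C_{ij}\, x_{ij} \\
\text{s.t.} \quad & \sum_{j=1}^{n} x_{ij} \le 1 \quad \forall i, \qquad
\sum_{i=1}^{r} x_{ij} \le 1 \quad \forall j, \\
& \sum_{i=1}^{r} \sum_{j=1}^{n} x_{ij} = \nu(G), \qquad x_{ij} \in \{0, 1\}.
\end{aligned}

% Minimum-weight matching: same constraints without the cardinality requirement
\begin{aligned}
\min_{x} \quad & \sum_{i=1}^{r} \sum_{j=1}^{n} C_{ij}\, x_{ij} \\
\text{s.t.} \quad & \sum_{j=1}^{n} x_{ij} \le 1 \quad \forall i, \qquad
\sum_{i=1}^{r} x_{ij} \le 1 \quad \forall j, \qquad x_{ij} \in \{0, 1\}.
\end{aligned}
```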

In support of minimum-weight matching

The minimum-weight matching problem is often the most natural choice, since it puts no constraint on the size of the matching. To illustrate the difference between this and the other problems, consider the following balanced problem:

The solution to perfect (or imperfect) matching is to choose -1 and -2 for a total score of -3 and a cardinality of 2. The solution to minimum-weight matching is to choose -4 with a cardinality of 1.

Minimum-weight matching will never select an edge with positive cost: it is better to simply leave it unselected. Edges with zero cost have no impact.

It may be more natural to consider the maximization of positive weights than the minimization of negative costs.
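The difference between the two objectives can be reproduced by brute force on a small instance. The 2 x 2 matrix below is hypothetical: it was chosen only so that the outcome matches the behaviour described above, and it is not claimed to be the instance from the original figure.

```python
from itertools import combinations, permutations
import math

INF = math.inf
# Hypothetical instance; INF marks a missing edge.
C = [[-1, -4],
     [INF, -2]]


def matchings(n_rows, n_cols):
    """Yield every matching as a list of (row, col) pairs, including the empty one."""
    for k in range(min(n_rows, n_cols) + 1):
        for rows in combinations(range(n_rows), k):
            for cols in permutations(range(n_cols), k):
                yield list(zip(rows, cols))


def cost(matching):
    return sum(C[i][j] for i, j in matching)


# Keep only matchings that use existing edges.
feasible = [m for m in matchings(2, 2) if all(C[i][j] < INF for i, j in m)]
perfect = min((m for m in feasible if len(m) == 2), key=cost)
min_weight = min(feasible, key=cost)

print(perfect, cost(perfect))        # [(0, 0), (1, 1)] -3
print(min_weight, cost(min_weight))  # [(0, 1)] -4
```

The perfect matching is forced to take both diagonal edges, while the minimum-weight matching takes the single edge of weight -4 and leaves the second row unmatched.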

Min-cost imperfect matching with positive weights

Be careful when solving imperfect matching problems with positive edge weights! I would avoid this situation altogether due to the tension that exists between maximizing the number of matches and minimizing the sum of (positive) costs. This may result in the unexpected behaviour that adding an edge to the graph increases the minimum cost. For example, compare the following two problems:

Quick and dirty transformations

Ramshaw and Tarjan describe some clever techniques to transform imperfect and minimum-weight matching problems into perfect matching problems while preserving sparsity. Here we describe some quick and dirty alternatives.

We can always transform an unbalanced (and therefore imperfect) problem into a balanced problem by adding \(n - r\) rows of zeros. The resulting balanced graph has a perfect matching if and only if the original unbalanced graph had a matching of size \(r\) (in which every vertex in the smaller set is matched).

If we need to solve imperfect matching but we only have a solver for perfect matching, it suffices to replace the infinite edge weights with a large, finite cost (e.g. larger than the total absolute value of all weights). The resulting graph must contain a perfect matching since it is a complete bipartite graph, and each high-cost edge is worth more than all original edges combined. The high-cost edges can be excluded at the end.

Most existing packages either solve perfect, one-sided perfect or imperfect matching. To use one of these solvers for the minimum-weight matching problem, it suffices to replace all positive edges (including infinite edges) with zero. If using a solver that leverages sparsity, it is better to use the technique described by Ramshaw and Tarjan.
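Two of these transformations, padding with rows of zeros and replacing positive weights with zero, can be combined in a short sketch. The brute-force `solve_perfect` is a stand-in assumed for illustration in place of a real perfect-matching solver, and the 2 x 3 instance is hypothetical.

```python
from itertools import permutations
import math


def solve_perfect(square):
    """Brute-force perfect matching on a square matrix (stand-in for a real solver)."""
    n = len(square)
    return min(permutations(range(n)),
               key=lambda p: sum(square[i][p[i]] for i in range(n)))


def pad_to_square(C):
    """Add n - r rows of zeros to an r x n matrix (assumes r <= n)."""
    r, n = len(C), len(C[0])
    return [row[:] for row in C] + [[0] * n for _ in range(n - r)]


def min_weight_matching(C):
    """Replace positive (including infinite) weights with zero, solve the
    padded perfect-matching problem, then drop every edge that is not
    strictly negative in the original matrix."""
    clipped = [[min(v, 0) for v in row] for row in C]
    perm = solve_perfect(pad_to_square(clipped))
    return [(i, perm[i]) for i in range(len(C)) if C[i][perm[i]] < 0]


C = [[-1, -4, 3],
     [math.inf, -2, 5]]   # hypothetical 2 x 3 instance
result = min_weight_matching(C)
print(result)  # [(0, 1)]
```

Zero-weight edges are dropped from the result because, as noted earlier, they have no impact on the objective; a sparsity-aware solver would be better served by the Ramshaw and Tarjan reduction.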

Python packages

The table below outlines the different behaviour of several popular packages. The code that was used to determine the behaviour is available as a Jupyter notebook .

Assignment Model | Linear Programming Problem (LPP) | Introduction

What is the Assignment Model?

→ The Assignment Model is a special application of the Linear Programming Problem (LPP), in which the main objective is to assign work or tasks to a group of individuals such that:

i) There is only one assignment per individual (one-to-one assignment).

ii) All the assignments are made in such a way that the overall cost is minimized (or the profit is maximized, in the case of maximization).

→ In an assignment problem, the cost of performing each task by each individual is known.

→ The goal is to find the best assignments, such that the overall cost of assigning the work is minimized.

For example:

Suppose there are 'n' tasks, which are required to be performed using 'n' resources.

The cost of performing each task by each resource is also known (shown in cells of matrix)

- In the above assignment problem, we have to make the assignments one-to-one while minimizing the overall cost.

How is the Assignment Problem related to LPP? OR Write the mathematical formulation of the Assignment Model.

→ The Assignment Model is a special application of Linear Programming (LP).

→ The mathematical formulation of the Assignment Model is given below:

→ Let \(C_{ij}\) denote the cost of assigning resource 'i' to task 'j', and let \(x_{ij} = 1\) if resource 'i' is assigned to task 'j' and \(x_{ij} = 0\) otherwise.

→ Assignment problems are of the minimization type, so the objective function is to minimize the overall cost: \(\text{Minimize } Z = \sum_{i=1}^{n} \sum_{j=1}^{n} C_{ij} x_{ij}\)

→ Subject to the constraints:

(i) For each \(j^{th}\) task, only one \(i^{th}\) resource is possible: \(\sum_{i=1}^{n} x_{ij} = 1\) for \(j = 1, \dots, n\)

(ii) For each \(i^{th}\) resource, only one \(j^{th}\) task is possible: \(\sum_{j=1}^{n} x_{ij} = 1\) for \(i = 1, \dots, n\)

(iii) \(x_{ij}\) is '0' or '1'.

Types of Assignment Problem:

(i) Balanced Assignment Problem

- It consists of a square matrix (n x n).

- Number of rows = Number of columns

(ii) Unbalanced Assignment Problem

- It consists of a non-square matrix.

- Number of rows ≠ Number of columns

Methods to solve Assignment Model:

(i) Integer Programming Method:

In an assignment problem, an allocation is either made to a cell or not.

So this can be formulated using 0 or 1 integer variables.

With this method, we will have n x n decision variables and n+n equalities.

So even a 4 x 4 matrix problem will have 16 decision variables and 8 equalities.

So this method becomes very lengthy and difficult to solve.

(ii) Transportation Methods:

As assignment problem is a special case of transportation problem, it can also be solved using transportation methods.

In transportation methods (NWCM, LCM & VAM), a non-degenerate solution has (m+n-1) allocations. (For example, for a 3 x 3 matrix there will be 3+3-1 = 5 allocations.)

But in assignment problems, the matrix is a square matrix (m = n).

So the total number of allocations should be (n+n-1), i.e. for a 3 x 3 matrix it should be (3+3-1) = 5.

But we know that in a 3 x 3 assignment problem, at most 3 assignments are possible.

So, if we use transportation methods, the solution will be degenerate, as it does not satisfy the condition of (m+n-1) allocations.

So, the method becomes lengthy and time-consuming.

(iii) Enumeration Method:

It is a simple trial-and-error method.

Consider a 3 x 3 assignment problem. Here every possible set of assignments is tried and its total cost is computed.

For a 3 x 3 matrix, the total number of possible trials is 3! = 3 x 2 x 1 = 6.

The assignment that gives the minimum cost is selected as the optimal solution.

But such trial and error quickly becomes difficult and lengthy.

If there are more rows and columns (for example, a 6 x 6 matrix has 6! = 6 x 5 x 4 x 3 x 2 x 1 = 720 possible trials), such a method is no longer practical for solving assignment problems.
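The enumeration method is easy to sketch in Python with `itertools.permutations`. The 3 x 3 cost matrix and the function name `enumerate_assignments` are hypothetical and serve only to illustrate the counting argument.

```python
from itertools import permutations
from math import factorial

# Hypothetical 3 x 3 cost matrix (rows: resources, columns: tasks).
cost = [[9, 2, 7],
        [6, 4, 3],
        [5, 8, 1]]


def enumerate_assignments(cost):
    """Evaluate every one-to-one assignment and return the cheapest one
    together with the number of trials performed."""
    n = len(cost)
    trials = [(sum(cost[i][p[i]] for i in range(n)), p)
              for p in permutations(range(n))]
    return min(trials), len(trials)


(best_cost, best_perm), n_trials = enumerate_assignments(cost)
print(best_cost, best_perm, n_trials)  # 9 (1, 0, 2) 6
print(factorial(6))                    # 720 trials for a 6 x 6 matrix
```

The number of trials grows as n!, which is why the approach stops being practical beyond very small matrices.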

(iv) Hungarian Method:

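It was published by Harold Kuhn in 1955 and is largely based on the earlier work of two Hungarian mathematicians, Dénes Kőnig and Jenő Egerváry. So, it is known as the Hungarian Method.

It is also known as the Reduced Matrix Method or Flood's technique.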

There are two main conditions for applying Hungarian Method:

(1) Square Matrix (n x n). (2) Problem should be of minimization type.

Suggested Notes:

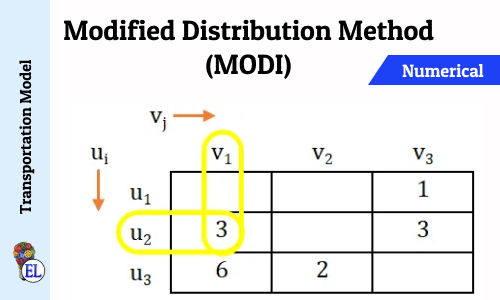

Modified Distribution Method (MODI) | Transportation Problem | Transportation Model

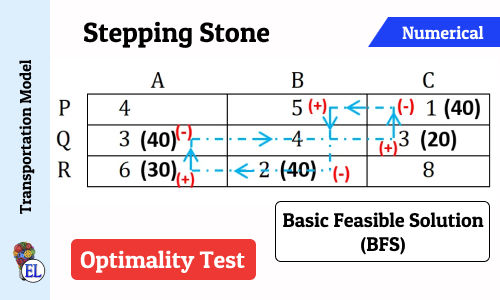

Stepping Stone | Transportation Problem | Transportation Model

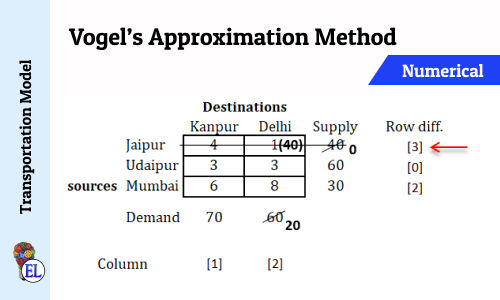

Vogel’s Approximation Method (VAM) | Method to Solve Transportation Problem | Transportation Model

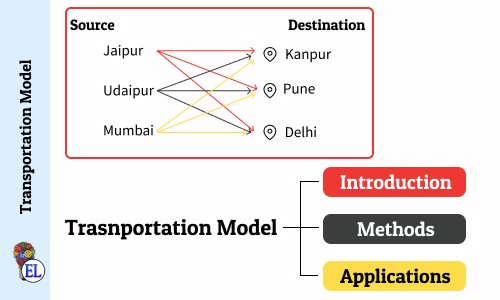

Transportation Model - Introduction

North West Corner Method | Method to Solve Transportation Problem | Transportation Model

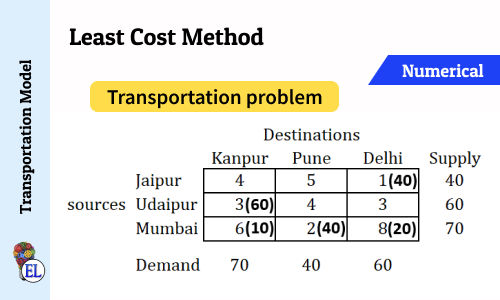

Least Cost Method | Method to Solve Transportation Problem | Transportation Model

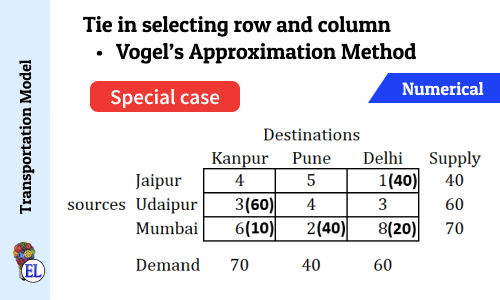

Tie in selecting row and column (Vogel's Approximation Method - VAM) | Numerical | Solving Transportation Problem | Transportation Model

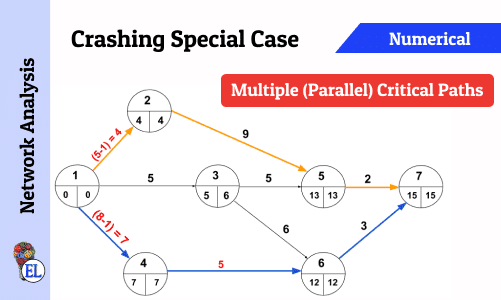

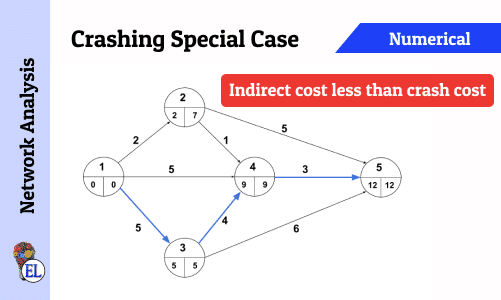

Crashing Special Case - Multiple (Parallel) Critical Paths

Crashing Special Case - Indirect cost less than Crash Cost

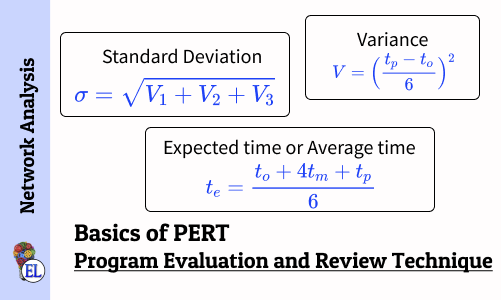

Basics of Program Evaluation and Review Technique (PERT)

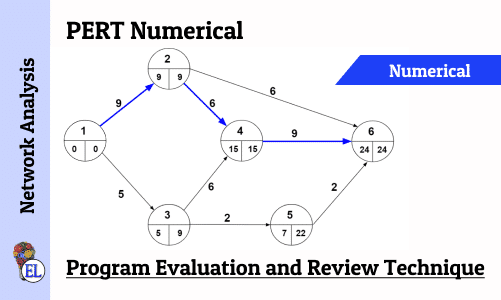

Numerical on PERT (Program Evaluation and Review Technique)

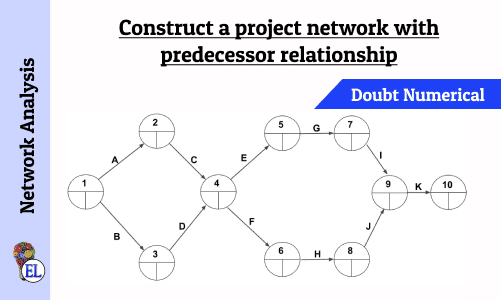

Network Analysis - Dealing with Network Construction Basics

Construct a project network with predecessor relationship | Operation Research | Numerical

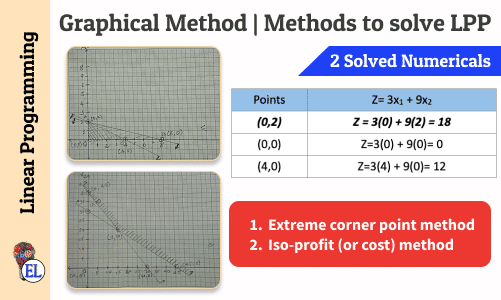

Graphical Method | Methods to solve LPP | Linear Programming

Basics of Linear Programming

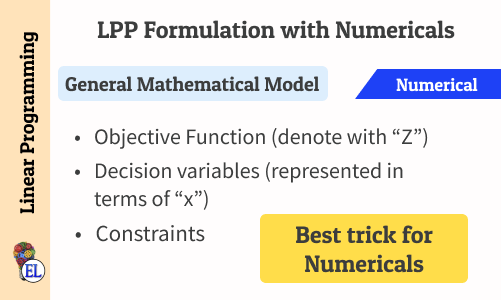

Linear Programming Problem (LPP) Formulation with Numericals

All comments that you add will await moderation. We'll publish all comments that are topic related, and adhere to our Code of Conduct .

Want to tell us something privately? Contact Us

Post comment

Education Lessons

Stay in touch, [notes] operation research, [notes] dynamics of machinery, [notes] maths, [notes] science, [notes] computer aided design.

IMAGES

VIDEO

COMMENTS

Here is the video about unbalanced Assignment problem using Hungarian method,In this video we have seen how to solve unbalanced assignment problem using step...

Step 3. Cover all the zeros of the matrix with the minimum number of horizontal or vertical lines. Step 4. Since the minimal number of lines is 3, an optimal assignment of zeros is possible and we are finished. Since the total cost for this assignment is 0, it must be. Step 3.

Core of the algorithm (assuming square matrix): For each row of the matrix, find the smallest element and subtract it from every element in its row. Do the same (as step 1) for all columns. Cover all zeros in the matrix using minimum number of horizontal and vertical lines.

So we will only discuss the minimum cost assignment problem in this article. Non-Square Cost Matrix. In practice, it is common to have a cost matrix which is not square. But we could make the cost matrix square, fill the empty entries with $0$, and apply the Hungarian algorithm to solve the optimal cost assignment problem. Hungarian Matching ...

The Hungarian method is a combinatorial optimization algorithm that solves the assignment problem in polynomial time and which anticipated later primal-dual methods.It was developed and published in 1955 by Harold Kuhn, who gave it the name "Hungarian method" because the algorithm was largely based on the earlier works of two Hungarian mathematicians, Dénes Kőnig and Jenő Egerváry.

The assignment problem is a fundamental combinatorial optimization ... Usually the weight function is viewed as a square real-valued matrix C, ... assign the pair with the smallest cost; and so on. This algorithm may yield a non-optimal solution. For example, suppose there are two tasks and two agents with costs as follows: Alice: Task 1 = 1 ...

It should also be noted that if you care about performance, the approach of adding dummies will generally increase the time it takes to solve the problem more than using specialized algorithms that cater to the non-square case would. See e.g. Bijsterbosch, Volgenant. Solving the Rectangular assignment problem and applications. 2010. Annals of ...

This is the video about unbalanced Assignment problem using Hungarian method,In this video we will learn how to solve unbalanced assignment problem using ste...

The Hungarian matching algorithm, also called the Kuhn-Munkres algorithm, is a \(O\big(|V|^3\big)\) algorithm that can be used to find maximum-weight matchings in bipartite graphs, which is sometimes called the assignment problem.A bipartite graph can easily be represented by an adjacency matrix, where the weights of edges are the entries.Thinking about the graph in terms of an adjacency ...

Here in this video discuss Non Square Matrix problem of assignment problem - Hungarian steps on Operations research, In this video we discussed how to solv...

Connection Between Transportation and Assignment Problem An assignment problem is a special case of transportation problem in which m = n, all a i and b j are unity and each is limited to either 0 or 1. Hungarian Method for Solving an Assignment Problem 1. Prepare a square n n matrix. If not, make it square by adding suitable

The first two steps are executed once, while Steps 3 and 4 are repeated until an optimal assignment is found. The input of the algorithm is an n by n square matrix with only nonnegative elements. Step 1: Subtract row minima. For each row, find the lowest element and subtract it from each element in that row. Step 2: Subtract column minima.

The Hungarian method is a computational optimization technique that addresses the assignment problem in polynomial time and foreshadows following primal-dual alternatives. In 1955, Harold Kuhn used the term "Hungarian method" to honour two Hungarian mathematicians, Dénes Kőnig and Jenő Egerváry. Let's go through the steps of the Hungarian method with the help of a solved example.

The problem involves assigning jobs to operators efficiently despite having an unequal number of jobs and operators, resulting in a non-square matrix. The solution involves converting the non-square matrix into a square one, applying row and column reduction techniques, and optimizing the assignment through a series of steps including row ...

The Assignment Problem (AP) is a fundamental combinatorial optimization problem. It can be formally defined as follows. Given a set of n workers, a set of n jobs and an \(n \times n\) cost matrix whose elements are positive and represent the cost of assigning any worker to any job, the AP aims at finding a one-to-one worker-job assignment (i.e., a bipartite perfect matching) that minimizes certain ...

3. Purpose of introducing a dummy variable: Your assignment problem is such that the number of jobs is greater than the number of persons available. In every assignment problem, it is assumed that each person will do one job, and a job will be done by only one person. Hence, assuming that you are a manager, and you have three workers working for ...

Let's say we have \(A = BX\), where \(A\) and \(B\) are known matrices and \(X\) is unknown. If \(B\) were square, a solution could be found by \(X = B^{-1}A\). But how do you attempt to solve for \(X\) when \(B\) is not square, i.e., \(n \neq m\)?

The simplest form of the assignment problem assumes that the bipartite graph is balanced (the two sets of vertices are the same size) and that there exists a perfect matching (in which every vertex has a match). Let \(n\) be the number of elements in each set and let \(C\) be a square matrix of size \(n \times n\) that contains the edge weights.
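To make the balanced formulation concrete, here is a brute-force sketch that tries every perfect matching of an \(n \times n\) matrix \(C\). It is not the Hungarian algorithm: it runs in \(O(n!)\) time and is only useful for checking small cases. It assumes the entries of \(C\) are costs to be minimized; the function name `brute_force_assignment` and the sample matrix are hypothetical.

```python
from itertools import permutations


def brute_force_assignment(C):
    """Return (minimum total cost, assignment), where assignment[i] is the
    column matched to row i. Tries all n! perfect matchings."""
    n = len(C)
    best_cost, best_perm = None, None
    for perm in permutations(range(n)):
        total = sum(C[i][perm[i]] for i in range(n))
        if best_cost is None or total < best_cost:
            best_cost, best_perm = total, perm
    return best_cost, best_perm


# Hypothetical 3 x 3 cost matrix (illustrative values only).
C = [
    [9, 2, 7],
    [6, 4, 3],
    [5, 8, 1],
]

best_cost, best_perm = brute_force_assignment(C)
print(best_cost, best_perm)  # 9 (1, 0, 2)
```

Here row 0 takes column 1, row 1 takes column 0, and row 2 takes column 2, for a total cost of 2 + 6 + 1 = 9.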

This purpose can be served by assigning multiple jobs to a single machine. The present paper proposes a Modified Hungarian Method for solving unbalanced assignment problems, which gives the optimal policy for assigning jobs to machines. A stepwise algorithm of the proposed method is presented, and the developed algorithm is also coded in Java SE 11.

There are two main conditions for applying the Hungarian Method: (1) the matrix should be square (n x n); (2) the problem should be of minimization type. The assignment model is a special application of the Linear Programming Problem (LPP), in which the main objective is to assign the work or task to a group of individuals such that: i) There is only one assignment.