What Is Metacognition? How Does It Help Us Think?

Metacognitive strategies like self-reflection empower students for a lifetime.

Posted October 9, 2020 | Reviewed by Abigail Fagan

Metacognition is a higher-order thinking skill that is emerging from the shadows of academia to take its rightful place in classrooms around the world. As online classrooms extend into homes, this is an important time for parents and teachers to understand metacognition and how metacognitive strategies affect learning. These skills enable children to become better thinkers and decision-makers.

Metacognition: The Neglected Skill Set for Empowering Students is a new research-based book by educational consultants Dr. Robin Fogarty and Brian Pete that not only gets to the heart of why metacognition is important but gives teachers and parents insightful strategies for teaching metacognition to children from kindergarten through high school. This article summarizes several concepts from their book and shares three of their thirty strategies to strengthen metacognition.

What Is Metacognition?

Metacognition is the practice of being aware of one’s own thinking. Some scholars refer to it as “thinking about thinking.” Fogarty and Pete give a great everyday example of metacognition:

Think about the last time you reached the bottom of a page and thought to yourself, “I’m not sure what I just read.” Your brain just became aware of something you did not know, so instinctively you might reread the last sentence or rescan the paragraphs of the page. Maybe you will read the page again. In whatever ways you decide to capture the missing information, this momentary awareness of knowing what you know or do not know is called metacognition.

When we notice ourselves having an inner dialogue about our thinking and it prompts us to evaluate our learning or problem-solving processes, we are experiencing metacognition at work. This skill helps us think better, make sound decisions, and solve problems more effectively. In fact, research suggests that as a young person’s metacognitive abilities increase, they achieve at higher levels.

Fogarty and Pete outline three aspects of metacognition that are vital for children to learn: planning, monitoring, and evaluation. They convincingly argue that metacognition is best when it is infused in teaching strategies rather than taught directly. The key is to encourage students to explore and question their own metacognitive strategies in ways that become spontaneous and seemingly unconscious.

Metacognitive skills provide a basis for broader, psychological self-awareness, including how children gain a deeper understanding of themselves and the world around them.

Metacognitive Strategies to Use at Home or School

Fogarty and Pete successfully demystify metacognition and provide simple ways teachers and parents can strengthen children’s abilities to use these higher-order thinking skills. Below is a summary of metacognitive strategies from the three areas of planning, monitoring, and evaluation.

1. Planning Strategies

As students learn to plan, they learn to anticipate the strengths and weaknesses of their ideas. Planning strategies used to strengthen metacognition help students scrutinize plans at a time when they can most easily be changed.

One of ten metacognitive strategies outlined in the book is called “Inking Your Thinking.” It is a simple writing log that requires students to reflect on a lesson they are about to begin. Sample starters may include: “I predict…” “A question I have is…” or “A picture I have of this is…”

Writing logs are also helpful in the middle or end of assignments. For example, “The homework problem that puzzles me is…” “The way I will solve this problem is to…” or “I’m choosing this strategy because…”

2. Monitoring Strategies

Monitoring strategies used to strengthen metacognition help students check their progress and review their thinking at various stages. Different from scrutinizing, this strategy is reflective in nature. It also allows for adjustments while the plan, activity, or assignment is in motion. Monitoring strategies encourage recovery of learning, as in the example cited above when we are reading a book and notice that we forgot what we just read. We can recover our memory by scanning or re-reading.

One of many metacognitive strategies shared by Fogarty and Pete, called the “Alarm Clock,” is used to recover or rethink an idea once the student realizes something is amiss. The idea is to develop internal signals that sound an alarm. This signal prompts the student to recover a thought, rework a math problem, or capture an idea in a chart or picture. Metacognitive reflection involves thinking about “What I did,” then reviewing the pluses and minuses of one’s action. Finally, it means asking, “What other thoughts do I have moving forward?”

Teachers can easily build monitoring strategies into student assignments. Parents can reinforce these strategies too. Remember, the idea is not to tell children what they did correctly or incorrectly. Rather, help children monitor and think about their own learning. These are formative skills that last a lifetime.

3. Evaluation Strategies

According to Fogarty and Pete, the evaluation strategies of metacognition “are much like the mirror in a powder compact. Both serve to magnify the image, allow for careful scrutiny, and provide an up-close and personal view. When one opens the compact and looks in the mirror, only a small portion of the face is reflected back, but that particular part is magnified so that every nuance, every flaw, and every bump is blatantly in view.” Having this enlarged view makes inspection much easier.

When students inspect parts of their work, they learn about the nuances of their thinking processes. They learn to refine their work. They grow in their ability to apply their learning to new situations. “Connecting Elephants” is one of many metacognitive strategies to help students self-evaluate and apply their learning.

In this exercise, the metaphor of three imaginary elephants is used. The elephants are walking together in a circle, connected by the trunk and tail of another elephant. The three elephants represent three vital questions: 1) What is the big idea? 2) How does this connect to other big ideas? 3) How can I use this big idea? Using the image of a “big idea” helps students magnify and synthesize their learning. It encourages them to think about big ways their learning can be applied to new situations.

Metacognition and Self-Reflection

Reflective thinking is at the heart of metacognition. In today’s world of constant chatter, technology and reflective thinking can be at odds. In fact, mobile devices can prevent young people from seeing what is right before their eyes.

John Dewey, a renowned psychologist and education reformer, claimed that experiences alone were not enough. What is critical is an ability to perceive and then weave meaning from the threads of our experiences.

The function of metacognition and self-reflection is to make meaning. The creation of meaning is at the heart of what it means to be human.

Everyone can help foster self-reflection in young people.

Marilyn Price-Mitchell, Ph.D., is an Institute for Social Innovation Fellow at Fielding Graduate University and author of Tomorrow’s Change Makers.


TEAL Center Fact Sheet No. 4: Metacognitive Processes

Metacognition is one’s ability to use prior knowledge to plan a strategy for approaching a learning task, take necessary steps to problem solve, reflect on and evaluate results, and modify one’s approach as needed. It helps learners choose the right cognitive tool for the task and plays a critical role in successful learning.

What Is Metacognition?

Metacognition refers to awareness of one’s own knowledge—what one does and doesn’t know—and one’s ability to understand, control, and manipulate one’s cognitive processes (Meichenbaum, 1985). It includes knowing when and where to use particular strategies for learning and problem solving as well as how and why to use specific strategies. Metacognition is the ability to use prior knowledge to plan a strategy for approaching a learning task, take necessary steps to problem solve, reflect on and evaluate results, and modify one’s approach as needed. Flavell (1976), who first used the term, offers the following example: “I am engaging in metacognition if I notice that I am having more trouble learning A than B; if it strikes me that I should double check C before accepting it as fact” (p. 232).

Cognitive strategies are the basic mental abilities we use to think, study, and learn (e.g., recalling information from memory, analyzing sounds and images, making associations between or comparing/contrasting different pieces of information, and making inferences or interpreting text). They help an individual achieve a particular goal, such as comprehending text or solving a math problem, and they can be individually identified and measured. In contrast, metacognitive strategies are used to ensure that an overarching learning goal is being or has been reached. Examples of metacognitive activities include planning how to approach a learning task, using appropriate skills and strategies to solve a problem, monitoring one’s own comprehension of text, self-assessing and self-correcting in response to the self-assessment, evaluating progress toward the completion of a task, and becoming aware of distracting stimuli.

Elements of Metacognition

Researchers distinguish between metacognitive knowledge and metacognitive regulation (Flavell, 1979, 1987; Schraw & Dennison, 1994). Metacognitive knowledge refers to what individuals know about themselves as cognitive processors, about different approaches that can be used for learning and problem solving, and about the demands of a particular learning task. Metacognitive regulation refers to adjustments individuals make to their processes to help control their learning, such as planning, information management strategies, comprehension monitoring, de-bugging strategies, and evaluation of progress and goals. Flavell (1979) further divides metacognitive knowledge into three categories:

- Person variables: What one recognizes about his or her strengths and weaknesses in learning and processing information.

- Task variables: What one knows or can figure out about the nature of a task and the processing demands required to complete the task—for example, knowledge that it will take more time to read, comprehend, and remember a technical article than it will a similar-length passage from a novel.

- Strategy variables: The strategies a person has “at the ready” to apply in a flexible way to successfully accomplish a task; for example, knowing how to activate prior knowledge before reading a technical article, using a glossary to look up unfamiliar words, or recognizing that sometimes one has to reread a paragraph several times before it makes sense.

Livingston (1997) provides an example of all three variables: “I know that I (person variable) have difficulty with word problems (task variable), so I will answer the computational problems first and save the word problems for last (strategy variable).”

Why Teach Metacognitive Skills?

Research shows that metacognitive skills can be taught to students to improve their learning (Nietfeld & Schraw, 2002; Thiede, Anderson, & Therriault, 2003).

Constructing understanding requires both cognitive and metacognitive elements. Learners “construct knowledge” using cognitive strategies, and they guide, regulate, and evaluate their learning using metacognitive strategies. It is through this “thinking about thinking,” this use of metacognitive strategies, that real learning occurs. As students become more skilled at using metacognitive strategies, they gain confidence and become more independent as learners.

Individuals with well-developed metacognitive skills can think through a problem or approach a learning task, select appropriate strategies, and make decisions about a course of action to resolve the problem or successfully perform the task. They often think about their own thinking processes, taking time to think about and learn from mistakes or inaccuracies (North Central Regional Educational Laboratory, 1995). Some instructional programs encourage students to engage in “metacognitive conversations” with themselves so that they can “talk” with themselves about their learning, the challenges they encounter, and the ways in which they can self-correct and continue learning.

Moreover, individuals who demonstrate a wide variety of metacognitive skills perform better on exams and complete work more efficiently—they use the right tool for the job, and they modify learning strategies as needed, identifying blocks to learning and changing tools or strategies to ensure goal attainment. Because metacognition plays a critical role in successful learning, it is imperative that instructors help learners develop metacognitively.

What’s the Research?

Metacognitive strategies can be taught (Halpern, 1996), and they are associated with successful learning (Borkowski, Carr, & Pressley, 1987). Successful learners have a repertoire of strategies to select from and can transfer them to new settings (Pressley, Borkowski, & Schneider, 1987). Instructors need to set tasks at an appropriate level of difficulty (i.e., challenging enough so that students need to apply metacognitive strategies to monitor success but not so challenging that students become overwhelmed or frustrated), and instructors need to prompt learners to think about what they are doing as they complete these tasks (Biemiller & Meichenbaum, 1992). Instructors should take care not to do the thinking for learners or tell them what to do because this runs the risk of making students experts at seeking help rather than experts at thinking about and directing their own learning. Instead, effective instructors continually prompt learners, asking “What should you do next?”

McKeachie (1988) found that few college instructors explicitly teach strategies for monitoring learning. They assume that students have already learned these strategies in high school. But many have not and are unaware of the metacognitive process and its importance to learning. Rote memorization is the usual—and often the only—learning strategy employed by high school students when they enter college (Nist, 1993). Simpson and Nist (2000), in a review of the literature on strategic learning, emphasize that instructors need to provide explicit instruction on the use of study strategies. The implication for adult basic education (ABE) programs is that ABE learners likely need explicit instruction in both cognitive and metacognitive strategies. They need to know that they have choices about the strategies they can employ in different contexts, and they need to monitor their use of and success with these strategies.

Recommended Instructional Strategies

Instructors can encourage ABE learners to become more strategic thinkers by helping them focus on the ways they process information. Self-questioning, reflective journal writing, and discussing their thought processes with other learners are among the ways that teachers can encourage learners to examine and develop their metacognitive processes.

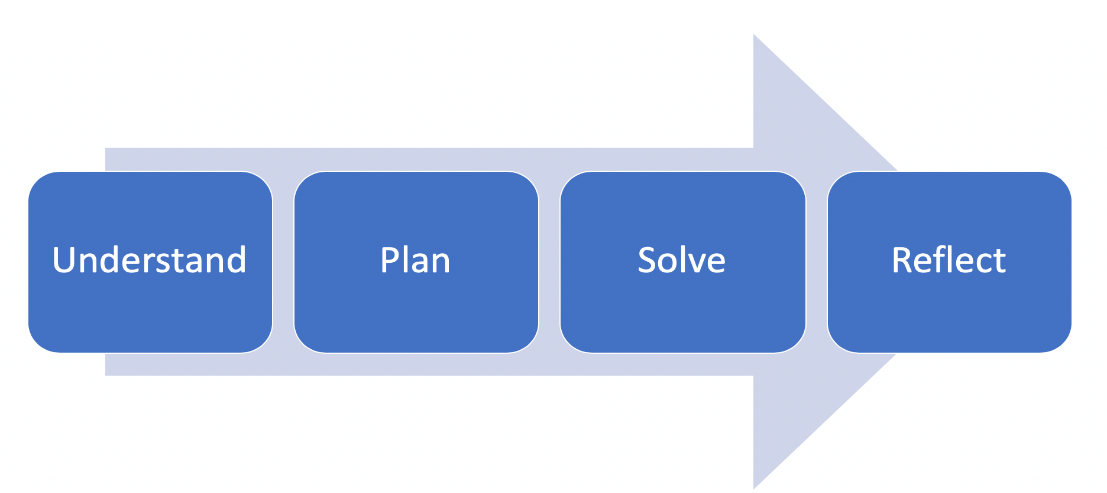

Fogarty (1994) suggests that metacognition is a process that spans three distinct phases, and that, to be successful thinkers, students must do the following:

- Develop a plan before approaching a learning task, such as reading for comprehension or solving a math problem.

- Monitor their understanding; use “fix-up” strategies when meaning breaks down.

- Evaluate their thinking after completing the task.

Instructors can model the application of questions, and they can prompt learners to ask themselves questions during each phase. They can incorporate into lesson plans opportunities for learners to practice using these questions during learning tasks, as illustrated in the following examples:

- During the planning phase, learners can ask, What am I supposed to learn? What prior knowledge will help me with this task? What should I do first? What should I look for in this reading? How much time do I have to complete this? In what direction do I want my thinking to take me?

- During the monitoring phase, learners can ask, How am I doing? Am I on the right track? How should I proceed? What information is important to remember? Should I move in a different direction? Should I adjust the pace because of the difficulty? What can I do if I do not understand?

- During the evaluation phase, learners can ask, How well did I do? What did I learn? Did I get the results I expected? What could I have done differently? Can I apply this way of thinking to other problems or situations? Is there anything I don’t understand—any gaps in my knowledge? Do I need to go back through the task to fill in any gaps in understanding? How might I apply this line of thinking to other problems?

Rather than viewing reading, writing, science, social studies, and math only as subjects or content to be taught, instructors can see them as opportunities for learners to reflect on their learning processes. Examples follow for each content area:

- Reading: Teach learners how to ask questions during reading and model “think-alouds.” Ask learners questions during read-alouds and teach them to monitor their reading by constantly asking themselves if they understand what the text is about. Teach them to take notes or highlight important details, asking themselves, “Why is this a key phrase to highlight?” and “Why am I not highlighting this?”

- Writing: Model prewriting strategies for organizing thoughts, such as brainstorming ideas using a word web, or using a graphic organizer to put ideas into paragraphs, with the main idea at the top and the supporting details below it.

- Social Studies and Science: Teach learners the importance of using organizers such as KWL charts, Venn diagrams, concept maps, and anticipation/reaction charts to sort information and help them learn and understand content. Learners can use organizers prior to a task to focus their attention on what they already know and identify what they want to learn. They can use a Venn diagram to identify similarities and differences between two related concepts.

- Math: Teach learners to use mnemonics to recall steps in a process, such as the order of mathematical operations. Model your thought processes in solving problems—for example, “This is a lot of information; where should I start? Now that I know____, is there something else I know?”

The goal of teaching metacognitive strategies is to help learners become comfortable with these strategies so that they apply them automatically to learning tasks, focusing their attention, deriving meaning, and making adjustments if something goes wrong. They do not think about these skills while performing them but, if asked what they are doing, they can usually accurately describe their metacognitive processes.

Biemiller, A., & Meichenbaum, D. (1992). The nature and nurture of the self-directed learner. Educational Leadership, 50, 75–80.

Borkowski, J., Carr, M., & Pressley, M. (1987). “Spontaneous” strategy use: Perspectives from metacognitive theory. Intelligence, 11, 61–75.

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive-developmental inquiry. American Psychologist, 34, 906–911.

Flavell, J. H. (1976). Metacognitive aspects of problem solving. In L. B. Resnick (Ed.), The nature of intelligence (pp. 231–236). Hillsdale, NJ: Lawrence Erlbaum Associates.

Flavell, J. H. (1987). Speculations about the nature and development of metacognition. In F. E. Weinert & R. H. Kluwe (Eds.), Metacognition, motivation, and understanding (pp. 21–29). Hillsdale, NJ: Lawrence Erlbaum Associates.

Fogarty, R. (1994). How to teach for metacognition. Palatine, IL: IRI/Skylight Publishing.

Halpern, D. F. (1996). Thought and knowledge: An introduction to critical thinking. Mahwah, NJ: Lawrence Erlbaum Associates.

Livingston, J. A. (1997). Metacognition: An overview. Retrieved December 27, 2011 from http://gse.buffalo.edu/fas/shuell/CEP564/Metacog.htm

McKeachie, W. J. (1988). The need for study strategy training. In C. E. Weinstein, E. T. Goetz, & P. A. Alexander (Eds.), Learning and study strategies: Issues in assessment, instruction, and evaluation (pp. 3–9). New York: Academic Press.

Meichenbaum, D. (1985). Teaching thinking: A cognitive-behavioral perspective. In S. F. Chipman, J. W. Segal, & R. Glaser (Eds.), Thinking and learning skills, Vol. 2: Research and open questions. Hillsdale, NJ: Lawrence Erlbaum Associates.

North Central Regional Educational Laboratory. (1995). Strategic teaching and reading project guidebook. Retrieved December 27, 2011

Nietfeld, J. L., & Schraw, G. (2002). The effect of knowledge and strategy explanation on monitoring accuracy. Journal of Educational Research, 95, 131–142.

Nist, S. (1993). What the literature says about academic literacy. Georgia Journal of Reading, Fall-Winter, 11–18.

Pressley, M., Borkowski, J. G., & Schneider, W. (1987). Cognitive strategies: Good strategy users coordinate metacognition and knowledge. In R. Vasta, & G. Whitehurst (Eds.), Annals of child development, 4, 80–129. Greenwich, CT: JAI Press.

Schraw, G., & Dennison, R. S. (1994). Assessing metacognitive awareness. Contemporary Educational Psychology, 19, 460–475.

Simpson, M. L., & Nist, S. L. (2000). An update on strategic learning: It’s more than textbook reading strategies. Journal of Adolescent and Adult Literacy, 43(6), 528–541.

Thiede, K. W., Anderson, M. C., & Therriault, D. (2003). Accuracy of metacognitive monitoring affects learning of texts. Journal of Educational Psychology, 95, 66–73.

Authors: TEAL Center staff

Reviewed by: David Scanlon, Boston College

About the TEAL Center: The Teaching Excellence in Adult Literacy (TEAL) Center is a project of the U.S. Department of Education, Office of Career, Technical, and Adult Education (OCTAE), designed to improve the quality of teaching in adult education in the content areas.


Metacognition

Metacognitive thinking skills are important for instructors and students alike. This resource provides instructors with an overview of the what and why of metacognition and general “getting started” strategies for teaching for and with metacognition.

In this page:

- What is metacognition?

- Why use metacognition?

- Getting started: How to teach both for and with metacognition

- Metacognition at Columbia

Cite this resource: Columbia Center for Teaching and Learning (2018). Metacognition Resource. Columbia University. Retrieved [today’s date] from https://ctl.columbia.edu/resources-and-technology/resources/metacognition/

- assess the task.

- plan for and use appropriate strategies and resources.

- monitor task performance.

- evaluate processes and products of their learning and revise their goals and strategies accordingly.

The Center for Teaching and Learning encourages instructors to teach metacognitively. This means to teach “with and for metacognition.” To teach with metacognition involves instructors “thinking about their own thinking regarding their teaching” (Hartman, 2001: 149). To teach for metacognition involves instructors thinking about how their instruction helps to elucidate learning and problem solving strategies to their students (Hartman, 2001).

Learners with metacognitive skills are:

- More self-aware as critical thinkers and problem solvers, enabling them to actively approach knowledge gaps and problems and to rely on themselves.

- Able to monitor, plan, and control their mental processes.

- Better able to assess the depth of their knowledge.

- Able to transfer/apply their knowledge and skills to new situations.

- Able to choose more effective learning strategies.

- More likely to perform better academically.

Instructors who teach metacognitively / think about their teaching are:

- More self-aware of their instructional capacities, and know what teaching strategies they rely upon, when and why they use these strategies, and how to use them effectively and inclusively.

- Better able to regulate their instruction before, during, and after conducting a class session (i.e., to plan what and how to teach, monitor how lessons are going and make adjustments, and evaluate how a lesson went afterwards).

- Better able to communicate, helping students understand the what, why, and how of their learning, which can lead to better learning outcomes.

- Able to use their knowledge of students’ metacognitive skills to plan instruction designed to improve students’ metacognition and to create inclusive course climates.

Teaching for metacognition — Metacognitive strategies that serve students and their learning:

Design homework assignments that ask students to focus on their learning process. This includes having students monitor progress, identify and correct mistakes, and plan next steps.

Provide structures to guide students in creating implementable action plans for improvement.

Show students how to move stepwise from reflection to action. Use appropriate technology to support student self-regulation. Many platforms such as CourseWorks provide tools that students can use to keep up with their course work and monitor their progress.

Teaching with metacognition — Metacognitive strategies that serve the course and the instructor’s teaching practice:

Create an evaluation plan to periodically evaluate one’s teaching and course design, set-up, and content.

Structure the course to provide time for students to give feedback on the course and teaching. Evaluate course progress and successes of teaching. Use course and instructional objectives to measure progress.

Schedule mid-course feedback surveys with students.

Request a mid-course review (offered as a service for graduate students).

Review end-of-course evaluations and reflect on the changes that will be made to maximize student learning. Build in time for metacognitive work. Set aside time before, during, and after a course to reflect on one’s teaching practice, relationship with students, course climate and dynamics, as well as assumptions about the course material and its accessibility to students.

Metacognition and Memory Lab | Dr. Janet Metcalfe (Professor of Psychology and of Neurobiology and Behavior) runs a lab that focuses on how people use their metacognition to improve self-awareness and to guide their own learning and behavior. Dr. Metcalfe is author of Metacognition: A Textbook for Cognitive, Educational, Life Span & Applied Psychology (2009), co-authored with John Dunlosky.

In Fall 2018, the CTL and the Science of Learning Research (SOLER) initiative co-organized the inaugural Science of Learning Symposium, “Metacognition: From Research to Classroom,” which brought together Columbia faculty, staff, graduate students, and experts in the science of learning to share the research on metacognition in learning and to translate it into strategies that maximize student learning. A video recording of the event is available.

Ambrose, S. A., Lovett, M., Bridges, M. W., DiPietro, M., & Norman, M. K. (2010). How Learning Works: Seven Research-Based Principles for Smart Teaching. San Francisco: John Wiley & Sons.

Dunlosky, J. and Metcalfe, J. (2009). Metacognition. Thousand Oaks, CA: Sage.

Flavell, J.H. (1976). Metacognitive Aspects of Problem Solving. In L.B. Resnick (Ed.), The Nature of Intelligence (pp. 231-236). Hillsdale, NJ: Erlbaum.

Hacker, D.J. (1998). Chapter 1. Definitions and Empirical Foundations. In Hacker, D.J.; Dunlosky, J.; and Graesser, A.C. (1998). Metacognition in Educational Theory and Practice. Mahwah, N.J.: Routledge.

Hartman, H.J. (2001). Chapter 8: Teaching Metacognitively. In Metacognition in Learning and Instruction. Kluwer Academic Publishers, 149 – 172.

Lai, E.R. (2011). Metacognition: A Literature Review. Pearson’s Research Reports. Retrieved from https://images.pearsonassessments.com/images/tmrs/Metacognition_Literature_Review_Final.pdf

McGuire, S.Y. (2015). Teach Students How to Learn: Strategies You Can Incorporate Into Any Course to Improve Student Metacognition, Study Skills, and Motivation. Sterling, VA: Stylus.

National Research Council (2000). How People Learn: Brain, Mind, Experience, and School. Expanded Edition. Washington, DC: The National Academies Press. https://doi.org/10.17226/9853

Nilson, L. (2013). Creating Self-Regulated Learners: Strategies to Strengthen Students’ Self-Awareness and Learning Skills. Sterling, VA: Stylus.

Schraw, G. and Dennison, R.S. (1994). Assessing Metacognitive Awareness. Contemporary Educational Psychology. 19(4): 460-475.

Center for Teaching

Metacognition.

Chick, N. (2013). Metacognition. Vanderbilt University Center for Teaching. Retrieved [todaysdate] from https://cft.vanderbilt.edu/guides-sub-pages/metacognition/.

Thinking about One’s Thinking

Metacognition was initially studied for its development in young children (Baker & Brown, 1984; Flavell, 1985), but researchers soon began to look at how experts display metacognitive thinking and how these thought processes can then be taught to novices to improve their learning (Hatano & Inagaki, 1986). In How People Learn, the National Academy of Sciences’ synthesis of decades of research on the science of learning, one of the three key findings is the effectiveness of a “‘metacognitive’ approach to instruction” (Bransford, Brown, & Cocking, 2000, p. 18).

Metacognitive practices increase students’ abilities to transfer or adapt their learning to new contexts and tasks (Bransford, Brown, & Cocking, p. 12; Palincsar & Brown, 1984; Scardamalia et al., 1984; Schoenfeld, 1983, 1985, 1991). Students do this by gaining a level of awareness above the subject matter: they also think about the tasks and contexts of different learning situations and about themselves as learners in these different contexts. When Pintrich (2002) asserts that “Students who know about the different kinds of strategies for learning, thinking, and problem solving will be more likely to use them” (p. 222), notice that the students must “know about” these strategies, not just practice them. As Zohar and David (2009) explain, there must be a “conscious meta-strategic level of H[igher] O[rder] T[hinking]” (p. 179).

Metacognitive practices help students become aware of their strengths and weaknesses as learners, writers, readers, test-takers, group members, etc. A key element is recognizing the limit of one’s knowledge or ability and then figuring out how to expand that knowledge or extend the ability. Those who know their strengths and weaknesses in these areas will be more likely to “actively monitor their learning strategies and resources and assess their readiness for particular tasks and performances” (Bransford, Brown, & Cocking, p. 67).

The absence of metacognition connects to the research by Dunning, Johnson, Ehrlinger, and Kruger on “Why People Fail to Recognize Their Own Incompetence” (2003). They found that “people tend to be blissfully unaware of their incompetence,” lacking “insight about deficiencies in their intellectual and social skills.” They identified this pattern across domains, from test-taking, writing grammatically, and thinking logically to recognizing humor, hunters’ knowledge about firearms, and medical lab technicians’ knowledge of medical terminology and problem-solving skills (pp. 83-84). In short, “if people lack the skills to produce correct answers, they are also cursed with an inability to know when their answers, or anyone else’s, are right or wrong” (p. 85). This research suggests that increased metacognitive abilities, namely learning specific (and correct) skills, recognizing them, and practicing them, are needed in many contexts.

Putting Metacognition into Practice

In “Promoting Student Metacognition,” Tanner (2012) offers a handful of specific activities for biology classes, but they can be adapted to any discipline. She first describes four assignments for explicit instruction (p. 116):

- Preassessments—Encouraging Students to Examine Their Current Thinking: “What do I already know about this topic that could guide my learning?”

- Retrospective Postassessments—Pushing Students to Recognize Conceptual Change: “Before this course, I thought evolution was… Now I think that evolution is ….” or “How is my thinking changing (or not changing) over time?”

- Reflective Journals—Providing a Forum in Which Students Monitor Their Own Thinking: “What about my exam preparation worked well that I should remember to do next time? What did not work so well that I should not do next time or that I should change?”

Next are recommendations for developing a “classroom culture grounded in metacognition” (pp. 116-118):

- Giving Students License to Identify Confusions within the Classroom Culture: ask students what they find confusing, acknowledge the difficulties

- Integrating Reflection into Credited Course Work: integrate short reflections (oral or written) that ask students what they found challenging or what questions arose during an assignment/exam/project

- Metacognitive Modeling by the Instructor for Students: model the thinking processes involved in your field and sought in your course by being explicit about “how you start, how you decide what to do first and then next, how you check your work, how you know when you are done” (p. 118)

To facilitate these activities, she also offers three useful tables:

- Questions for students to ask themselves as they plan, monitor, and evaluate their thinking within four learning contexts—in class, assignments, quizzes/exams, and the course as a whole (p. 115)

- Prompts for integrating metacognition into discussions of pairs during clicker activities, assignments, and quiz or exam preparation (p. 117)

- Questions to help faculty metacognitively assess their own teaching (p. 119)

Weimer’s “Deep Learning vs. Surface Learning: Getting Students to Understand the Difference” (2012) offers additional recommendations for developing students’ metacognitive awareness and improving their study skills:

“[I]t is terribly important that in explicit and concerted ways we make students aware of themselves as learners. We must regularly ask, not only ‘What are you learning?’ but ‘How are you learning?’ We must confront them with the effectiveness (more often ineffectiveness) of their approaches. We must offer alternatives and then challenge students to test the efficacy of those approaches.” (emphasis added)

She points to a tool developed by Stanger-Hall (2012, p. 297) for her students to identify their study strategies, which she divided into “cognitively passive” (“I previewed the reading before class,” “I came to class,” “I read the assigned text,” “I highlighted the text,” etc.) and “cognitively active study behaviors” (“I asked myself: ‘How does it work?’ and ‘Why does it work this way?’” “I wrote my own study questions,” “I fit all the facts into a bigger picture,” “I closed my notes and tested how much I remembered,” etc.). The specific focus of Stanger-Hall’s study is tangential to this discussion (see note 1), but imagine giving students lists like hers adapted to your course and then, after a major assignment, having students discuss which ones worked and which types of behaviors led to higher grades. Even further, follow Lovett’s advice (2013) by assigning “exam wrappers,” in which students reflect on their previous exam-preparation strategies, assess those strategies, look ahead to the next exam, and write an action plan for a revised approach to studying. A common assignment in English composition courses is the self-assessment essay, in which students apply course criteria to articulate their strengths and weaknesses within single papers or over the course of the semester. These activities can be adapted to assignments other than exams or essays, such as projects, speeches, discussions, and the like.

As these examples illustrate, for students to become more metacognitive, they must be taught the concept and its language explicitly (Pintrich, 2002; Tanner, 2012), though not in a content-delivery model (simply a reading or a lecture) and not in one lesson. Instead, the explicit instruction should be “designed according to a knowledge construction approach” (that is, students need to recognize, assess, and connect new skills to old ones), “and it needs to take place over an extended period of time” (Zohar & David, p. 187). This kind of explicit instruction will help students expand or replace existing learning strategies with new and more effective ones, give students a way to talk about learning and thinking, let them compare strategies with their classmates’ and make more informed choices, and render learning “less opaque to students, rather than being something that happens mysteriously or that some students ‘get’ and learn and others struggle and don’t learn” (Pintrich, 2002, p. 223).

In the philosophy classroom, Concepción (2004) gives students a handout on how to read philosophy, with these components:

- What to Expect (when reading philosophy)

- The Ultimate Goal (of reading philosophy)

- Basic Good Reading Behaviors

- Important Background Information, or discipline- and course-specific reading practices, such as “reading for enlightenment” rather than information, and “problem-based classes” rather than historical or figure-based classes

- A Three-Part Reading Process (pre-reading, understanding, and evaluating)

- Flagging, or annotating the reading

- Linear vs. Dialogical Writing (Philosophical writing is rarely straightforward but instead “a monologue that contains a dialogue” [p. 365].)

What would such a handout look like for your discipline?

Students can even be metacognitively prepared (and then prepare themselves) for the overarching learning experiences expected in specific contexts. Salvatori and Donahue’s The Elements (and Pleasures) of Difficulty (2004) encourages students to embrace difficult texts (and tasks) as part of deep learning, rather than as an obstacle. Their “difficulty paper” assignment helps students reflect on and articulate the nature of the difficulty and work through their responses to it (p. 9). Similarly, in courses with sensitive subject matter, a different kind of learning occurs, one that involves complex emotional responses. In “Learning from Their Own Learning: How Metacognitive and Meta-affective Reflections Enhance Learning in Race-Related Courses” (Chick, Karis, & Kernahan, 2009), students were informed about the common reactions to learning about racial inequality (Helms, 1995; Adams, Bell, & Griffin, 1997; see student handout, Chick, Karis, & Kernahan, pp. 23-24) and then regularly wrote about their cognitive and affective responses to specific racialized situations. The students with the most developed metacognitive and meta-affective practices at the end of the semester were able to “clear the obstacles and move away from” oversimplified thinking about race and racism “to places of greater questioning, acknowledging the complexities of identity, and redefining the world in racial terms” (p. 14).

Ultimately, metacognition requires students to “externalize mental events” (Bransford, Brown, & Cocking, p. 67), such as understanding what it means to learn, being aware of one’s strengths and weaknesses with specific skills or in a given learning context, planning what’s required to accomplish a specific learning goal or activity, identifying and correcting errors, and preparing ahead for learning processes.

————————

1 Students who were tested with short answer in addition to multiple-choice questions on their exams reported more cognitively active behaviors than those tested with just multiple-choice questions, and these active behaviors led to improved performance on the final exam.

- Adams, Maurianne, Bell, Lee Ann, and Griffin, Pat. (1997). Teaching for diversity and social justice: A sourcebook. New York: Routledge.

- Bransford, John D., Brown, Ann L., and Cocking, Rodney R. (2000). How people learn: Brain, mind, experience, and school. Washington, D.C.: National Academy Press.

- Baker, Linda, and Brown, Ann L. (1984). Metacognitive skills and reading. In Paul David Pearson, Michael L. Kamil, Rebecca Barr, & Peter Mosenthal (Eds.), Handbook of research in reading: Volume III (pp. 353–395). New York: Longman.

- Brown, Ann L. (1980). Metacognitive development and reading. In Rand J. Spiro, Bertram C. Bruce, and William F. Brewer, (Eds.), Theoretical issues in reading comprehension: Perspectives from cognitive psychology, linguistics, artificial intelligence, and education (pp. 453-482). Hillsdale, NJ: Erlbaum.

- Chick, Nancy, Karis, Terri, and Kernahan, Cyndi. (2009). Learning from their own learning: How metacognitive and meta-affective reflections enhance learning in race-related courses. International Journal for the Scholarship of Teaching and Learning, 3(1), 1-28.

- Commander, Nannette Evans, and Valeri-Gold, Marie. (2001). The learning portfolio: A valuable tool for increasing metacognitive awareness. The Learning Assistance Review, 6(2), 5-18.

- Concepción, David. (2004). Reading philosophy with background knowledge and metacognition. Teaching Philosophy, 27(4), 351-368.

- Dunning, David, Johnson, Kerri, Ehrlinger, Joyce, and Kruger, Justin. (2003). Why people fail to recognize their own incompetence. Current Directions in Psychological Science, 12(3), 83-87.

- Flavell, John H. (1985). Cognitive development. Englewood Cliffs, NJ: Prentice Hall.

- Hatano, Giyoo, and Inagaki, Kayoko. (1986). Two courses of expertise. In Harold Stevenson, Hiroshi Azuma, and Kenji Hakuta (Eds.), Child development and education in Japan. New York: W.H. Freeman.

- Helms, Janet E. (1995). An update of Helms’ white and people of color racial identity models. In Joseph G. Ponterotto, Manuel Casas, Lisa A. Suzuki, and Charlene M. Alexander (Eds.), Handbook of multicultural counseling (pp. 181-198). Thousand Oaks, CA: Sage.

- Lovett, Marsha C. (2013). Make exams worth more than the grade. In Matthew Kaplan, Naomi Silver, Danielle LaVague-Manty, and Deborah Meizlish (Eds.), Using reflection and metacognition to improve student learning: Across the disciplines, across the academy. Sterling, VA: Stylus.

- Palincsar, Annemarie Sullivan, and Brown, Ann L. (1984). Reciprocal teaching of comprehension-fostering and comprehension-monitoring activities. Cognition and Instruction, 1(2), 117-175.

- Pintrich, Paul R. (2002). The role of metacognitive knowledge in learning, teaching, and assessing. Theory into Practice, 41(4), 219-225.

- Salvatori, Mariolina Rizzi, and Donahue, Patricia. (2004). The elements (and pleasures) of difficulty. New York: Pearson-Longman.

- Scardamalia, Marlene, Bereiter, Carl, and Steinbach, Rosanne. (1984). Teachability of reflective processes in written composition. Cognitive Science, 8, 173-190.

- Schoenfeld, Alan H. (1991). On mathematics as sense making: An informal attack on the unfortunate divorce of formal and informal mathematics. In James F. Voss, David N. Perkins, and Judith W. Segal (Eds.), Informal reasoning and education (pp. 311-344). Hillsdale, NJ: Erlbaum.

- Stanger-Hall, Kathrin F. (2012). Multiple-choice exams: An obstacle for higher-level thinking in introductory science classes. Cell Biology Education—Life Sciences Education, 11(3), 294-306.

- Tanner, Kimberly D. (2012). Promoting student metacognition. CBE—Life Sciences Education, 11, 113-120.

- Weimer, Maryellen. (2012, November 19). Deep learning vs. surface learning: Getting students to understand the difference. Retrieved from the Teaching Professor Blog: http://www.facultyfocus.com/articles/teaching-professor-blog/deep-learning-vs-surface-learning-getting-students-to-understand-the-difference/

- Zohar, Anat, and David, Adi Ben. (2009). Paving a clear path in a thick forest: A conceptual analysis of a metacognitive component. Metacognition and Learning, 4, 177-195.

What are metacognitive skills? Examples in everyday life

When facing a career change or deciding to switch jobs, you might update the hard and soft skills on your resume. You could even take courses to upskill and expand your portfolio.

But some growth happens off the page. Your metacognitive skills contribute to your learning process and help you look inward to self-reflect and monitor your growth. They’re like a golden ticket toward excellence in both academia and your career path, always pushing you further.

A deeper understanding of metacognition, along with effective strategies for developing related skills, opens the door to heightened personal and professional development. Metacognitive thinking might just be the tool you need to reach your academic and career goals.

What are metacognitive skills?

Metacognitive skills are the soft skills you use to monitor and control your learning and problem-solving processes, or your thinking about thinking. This self-understanding is known as metacognition, a term that the American developmental psychologist John H. Flavell coined in the 1970s.

It might sound abstract, but these skills are mostly about self-awareness, learning, and organizing your thoughts. Metacognitive strategies include thinking out loud and answering reflective questions. They’re often relevant for students who need to memorize concepts fast or absorb lots of information at once.

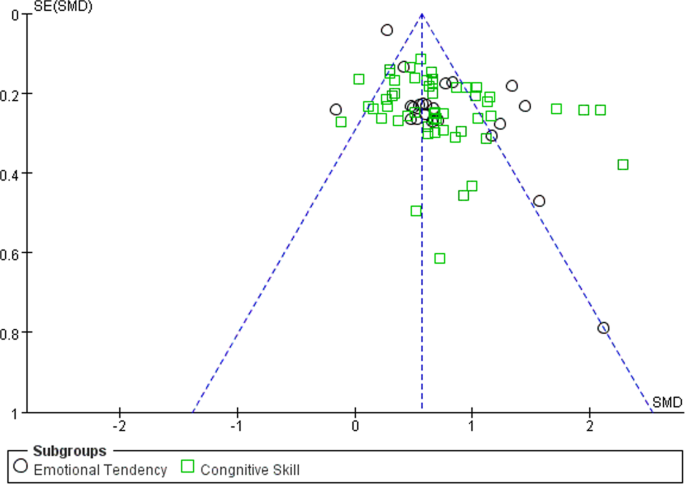

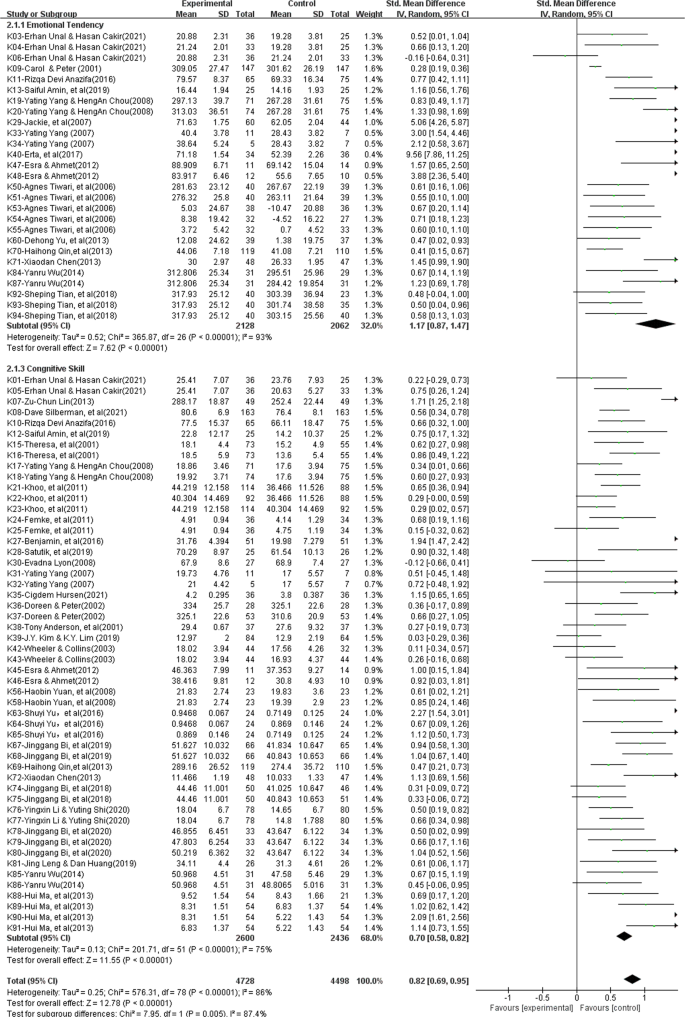

But metacognition is important for everyone because it helps you retain information more efficiently and feel more confident about what you know. One meta-analysis of many studies showed that being aware of metacognitive strategies has a strong positive impact on teaching and learning, and that knowing how to plan ahead was a key indicator of future success.

Examples of metacognitive skills

Understanding your cognition and how you learn is a fundamental step in optimizing your educational process. To make the concept more tangible, here are a few examples of metacognitive skills:

Goal setting

One of the foremost metacognitive skills is knowing how to set goals — recognizing what your ambitions are and fine-tuning them into manageable and attainable objectives. The SMART goal framework is a good place to start because it dives deeper into what you know you can realistically achieve.

Whether it's a personal goal of grasping a complex concept, a professional goal of developing a new skill set, or a financial goal of achieving a budgeting milestone, setting a concrete goal helps you know what you’re working toward. It’s the first step to self-directed learning and achievement, giving you a destination for your path.

Planning and organization

Planning is an essential metacognition example because it sketches out the route you'll take to reach your goal, as well as identifying and collecting the specific strategies, resources, and support mechanisms you'll need along the way. It’s an in-demand skill for many jobs, but it also helps you learn new things.

Creating and organizing a plan is where you contemplate the best methods for learning, evaluate the materials and resources at your disposal, and determine the most efficient time management strategies. Even though it’s a concrete skill, it falls under the umbrella of metacognition because it involves self-awareness about your learning style and abilities.

Problem-solving

Central to metacognition is problem-solving, a higher-order cognitive process requiring both creative and critical thinking skills. Solving problems both at work and during learning begins with recognizing the issue at hand, analyzing the details, and considering potential solutions. The next step is selecting the most promising solution from the pool of possibilities and evaluating the results after implementation.

The problem-solving process gives you the opportunity to grow from your mistakes and practice trial and error. It also helps you reflect and refine your approach for future endeavors. These qualities make it central to metacognition’s inward-facing yet action-oriented processes.

Concentration

Concentration allows you to fully engage with the information you’re processing and retain new knowledge. It involves a high degree of mental fitness, which you can develop with metacognition. Most tasks require the ability to ignore distractions, resist procrastination, and maintain a steady focus on the task at hand.

This skill is paramount when it comes to work-from-home settings or jobs with lots of moving parts where countless distractions are constantly vying for your attention. And training your mind to focus better in general can also increase your learning efficacy and overall productivity.

Self-reflection

The practice of self-reflection involves continually assessing your performance, cognitive strategies, and experiences to foster self-improvement. It's a type of mental debriefing where you look back on your actions and outcomes, examining them critically to gain insight and learn valuable lessons.

Reflective practice can help you identify what worked well, what didn't, and why, giving you the opportunity to make necessary adjustments for future actions. This continuous process enhances your learning and helps you adapt to new changes and strategies.

How to improve metacognitive skills

Metacognition turns you into a self-aware problem solver, empowering you to take control of your education and become a more efficient thinker. Although it’s helpful for students, you can also apply it in the workplace while brainstorming and discovering new ways to fulfill your roles and responsibilities.

Here are some examples of metacognitive strategies and how to cultivate your abilities:

1. Determine your learning style

Are you a visual learner who thrives on images, diagrams, and color-coded notes? Are you an auditory learner who benefits more from verbal instructions, podcasts, or group discussions? Or are you a kinesthetic learner who enjoys hands-on experiences, experiments, or physical activities?

Metacognition in education is critical because it teaches you to recognize the way you take in information, which is the first step toward effective strategies that help you truly retain it. By identifying your learning style, you can tailor your goals and study strategies to suit your strengths, maximizing your cognitive potential and improving your understanding of new material.

2. Find deeper meaning in what you read

Merely skimming the surface of the text you read won't lead to profound understanding or long-term retention. Instead, dive deep into the material. Employ reading strategies like note-taking, highlighting, and summarizing to help information enter your brain.

If that process doesn’t work for you, try using brainstorming techniques like mind mapping to tease out the underlying themes and messages. This depth of processing enhances comprehension and allows you to connect new information to prior knowledge, promoting meaningful learning.

3. Write organized plans

Deconstruct your tasks into manageable units and create a comprehensive, step-by-step plan. Having a detailed guide breaks down large, intimidating tasks into bite-sized, achievable parts, reduces the risk of procrastination, and helps manage cognitive load. This process frees up your mental energy for higher-order thinking.

4. Ask yourself open-ended questions

Metacognitive questioning is a powerful tool for fostering self-awareness. Asking good questions like “What am I trying to achieve?” and “Why did this approach work or not work?” facilitates a deeper understanding of your education style, promotes critical thinking, and enables self-directed learning. Your answers will pave the way for improved processes.

5. Ask for feedback

External perspectives offer valuable insights into your thinking patterns and strategies. Seek feedback from teachers, peers, or mentors and earn the metacognitive knowledge you need to identify strengths to harness and weaknesses to address. Remember, the objective isn’t to nitpick or micromanage. It’s constructive criticism to help refine your learning process.

6. Self-evaluate

Cultivate a habit of self-assessment and self-monitoring, whether you’re experiencing something new or working on an innovative project. Check in on progress regularly, and compare current performance with your goals. This continuous self-evaluation helps you maintain focus on your objectives and identify when you're going off track, allowing for timely adjustments when necessary.

Introspection is a powerful tool, and you can’t overstate the importance of knowing yourself. After all, building your metacognitive skills begins with a strong foundation of self-awareness and accountability.

7. Focus on solutions

It's easy to let problems and obstacles discourage you during the learning process. But metacognitive skills encourage a solutions-oriented mindset. Instead of fixating on the challenges, shift your focus to identifying, analyzing, and implementing creative solutions.

This proactive approach fosters resilience and adaptability skills in the face of adversity, helping you overcome whatever comes your way. Cultivating this mindset — sometimes known as a growth mindset — also boosts your problem-solving prowess and transforms challenges into opportunities for growth.

8. Keep a journal

The simple act of writing about your learning experiences can heighten your metacognitive awareness. Journaling provides a space to reflect on your thought processes, emotions, and struggles, which can reveal patterns and trends in your behavior. It’s a springboard for improvement that helps you recognize and solve problems as they come.

Take charge of your mind

In the journey of learning and career advancement, metacognitive skills are your compass toward improvement. They empower you to understand your cognitive processes, enhance your strategies, and become a more effective thinker. They’re useful whether you’re just starting a master’s degree or upskilling to earn a promotion.

Remember, the journey to gain metacognitive skills isn’t a race. It’s a personal voyage of self-discovery and growth. Each stride you take toward honing your metacognitive skills is a step toward a more successful, fulfilling, and self-aware life.

Elizabeth Perry, ACC

Elizabeth Perry is a Coach Community Manager at BetterUp. She uses strategic engagement strategies to cultivate a learning community across a global network of Coaches through in-person and virtual experiences, technology-enabled platforms, and strategic coaching industry partnerships. With over 3 years of coaching experience and a certification in transformative leadership and life coaching from Sofia University, Elizabeth leverages transpersonal psychology expertise to help coaches and clients gain awareness of their behavioral and thought patterns, discover their purpose and passions, and elevate their potential. She is a lifelong student of psychology, personal growth, and human potential as well as an ICF-certified ACC transpersonal life and leadership Coach.

Center for Educational Innovation

Metacognitive strategies improve learning

Metacognition refers to thinking about one's thinking and is a skill students can use as part of a broader collection of skills known as self-regulated learning. Metacognitive strategies for learning include planning and goal setting, monitoring, and reflecting on learning. Students can be instructed in the use of metacognitive strategies. Classroom interventions designed to improve students’ metacognitive approaches are associated with improved learning (Cogliano, 2021; Theobald, 2021).

Strategies to encourage students to use metacognitive techniques

- Prompt students to develop study plans and to evaluate their approaches to planning for, monitoring, and evaluating their learning. Early in the term, advise and support students in making a study plan. After receiving feedback on the first and subsequent assessments, ask students to reflect on their performance and determine which study strategies worked and which did not. Encourage them to revise their study plans if needed. One way to support this is to ask students to identify their personal learning environment. This is an activity in which students identify the various resources and support available to them.

- Offer practice tests. Explain to students the benefits of practice testing for improving retention and performance on exams. Create practice tests with an answer key to help students prepare for exams. Use practice questions for in-class formative feedback throughout the term. Consider creating a bank of practice questions from previous exams to share with students (Stanton, 2021).

- Call attention to strategies students can adopt to space their practice. This can include explaining the benefits of spaced practice and encouraging students to map out weekly study sessions for your course on their calendar. These study sessions should include the most recent material and revisit older material, perhaps in the form of practice tests (Stanton, 2021).

- Model your metacognitive processes with students. Show students the thinking process behind your approach to solving problems (Ambrose, 2010). This can take the form of a think-aloud where you talk through the steps you would take to plan, monitor, and reflect on your problem-solving approach.

- Caroline Hilk

The Meta Model

The Meta Model Problem Solving Strategies

The Meta Model is a model for changing our maps of the world. It provides a number of problem-solving strategies. We cause many of our problems through our unconscious, rule-governed behavior.

We have problems not because the world isn’t rich enough, but because our maps aren’t. Alfred Korzybski’s work demonstrated that we don’t operate on the world directly but through our maps or models.

This model is the foundation of NLP. It evolved from watching extraordinary therapists and the kinds of interactions they had with clients that got results.

Our nervous system deletes and distorts whole portions of reality in order to make the world manageable. Our maps determine our behavioral options by creating rules and programs for how we do things.

We delete information to avoid being overwhelmed. We don’t see all the choices we have available. We attend to our priorities and overlook other things that might be valuable.

We generalize information in order to summarize and synthesize. Dealing with categories is much less demanding than dealing with individual cases. For example, we talk about dogs as a category rather than all the individual dogs that we have met.

Lastly we distort information, for instance when we plan or visualize the future.

How we build the maps that control our behavior

We use three universal modeling processes to build our maps or models: deletion, generalization, and distortion. The Meta Model is organized around these three processes. Its terminology comes from the field of linguistics and may seem quite strange.

Meta Model Deletions

We pay attention to some parts of our experiences and not others. The millions of sights, sounds, smells and feelings in the external environment and our internal world would overwhelm us if we didn’t delete most of them.

Deleting enables us, for instance, to talk on the phone in the middle of a crowded room. We tune in to what is important, like hearing our name mentioned at a party. We are also deleting information when we think of ourselves as having limited choices. We often overlook problem-solving strategies that recover deleted choices.

Deletion Patterns

- Unspecified Nouns – Who or What

- Unspecified Verbs – Understanding the Process

- Simple deletions

- Comparative deletions

- Ly Adverbs – Obviously this is Useful

Meta Model Generalizations

We categorize and summarize in order to manage our experience. We do this by choosing a representative experience, so one particular dog (real or a combination) will represent our category of dogs.

Generalizing enables us to transfer learning from one area to another. We learn the doorknob principle and use it to open doors we’ve never seen before.

Generalization Patterns

- Universal quantifiers – a Meta Model Generalization

- Modal operators – a Meta Model Generalization

- Complex equivalences – a Meta Model Generalization

The Meta Model Distortions

Our ability to distort experiences enables us to imagine new things and plan for the future. Distortion is useful in planning a trip, choosing new clothes and decorating a room.

On the other hand, distortions probably cause us the most problems. Distortion can be limiting when we imagine negative events and become unresourceful. For instance, jealousy can be a response to imagining a partner being unfaithful and then reacting as though it were real.

Distortion Patterns

- Nominalizations – Recipe for Misunderstanding

- Mind reading – Jumping to Conclusions

- Cause effects – How our world works

- Lost Performatives – Not my Beliefs

- Linguistic Presuppositions – Accepting What I Say

Using the Model

This model provides a way to recover deleted information, uncover our rules, and untangle misunderstandings in our own and others’ communication. It is particularly useful in business communication, where clear, unambiguous directions can be critical.

Meta model questions

The model is the questions. By listening for how someone has created his or her maps, we can ask an appropriate question to recover what has been deleted, generalized or distorted. This then expands and enriches the person’s choices for solving the problem.

Further Reading: The Secrets of Magic by L. Michael Hall, reprinted as Communication Magic
