Subscribe to the PwC Newsletter

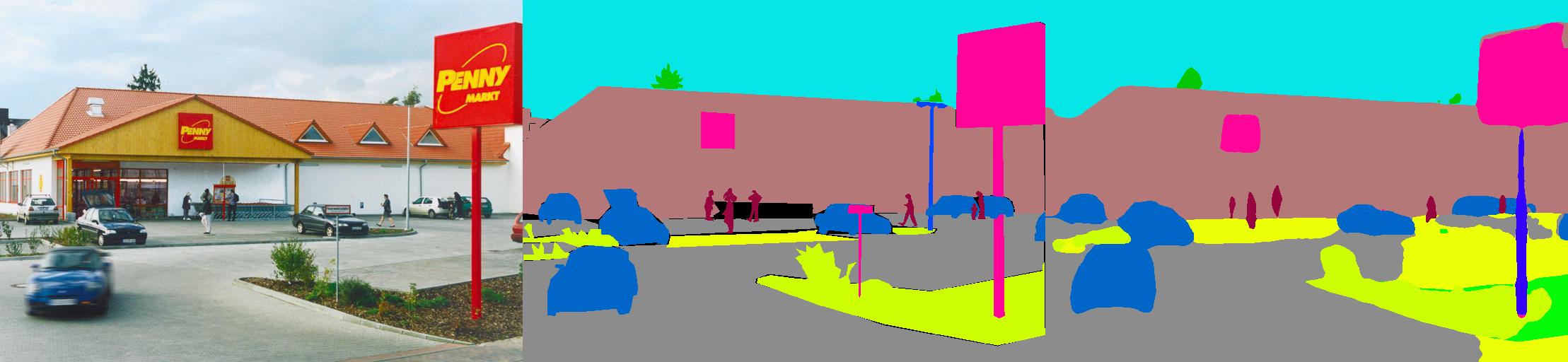

Computer Vision

Semantic Segmentation

Tumor Segmentation

Panoptic Segmentation

3D Semantic Segmentation

Weakly-Supervised Semantic Segmentation

Representation Learning

Disentanglement

Graph Representation Learning

Sentence Embeddings

Network Embedding

Classification

Text Classification

Graph Classification

Audio Classification

Medical Image Classification

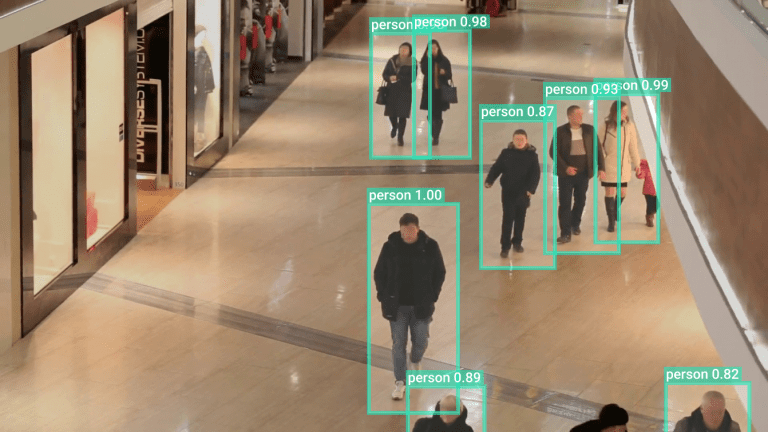

Object Detection

3D Object Detection

Real-Time Object Detection

RGB Salient Object Detection

Few-Shot Object Detection

Image Classification

Out of Distribution (OOD) Detection

Few-Shot Image Classification

Fine-Grained Image Classification

Semi-Supervised Image Classification

2D Object Detection

Edge Detection

Thermal Image Segmentation

Open Vocabulary Object Detection

Reinforcement Learning (RL)

Off-Policy Evaluation

Multi-Objective Reinforcement Learning

3D Point Cloud Reinforcement Learning

Deep Hashing

Table Retrieval

Domain Adaptation

Unsupervised Domain Adaptation

Domain Generalization

Test-time Adaptation

Source-Free Domain Adaptation

Image Generation

Image-to-Image Translation

Text-to-Image Generation

Image Inpainting

Conditional Image Generation

Data Augmentation

Image Augmentation

Text Augmentation

Autonomous Vehicles

Autonomous Driving

Self-Driving Cars

Simultaneous Localization and Mapping

Autonomous Navigation

Image Denoising

Color Image Denoising

SAR Image Despeckling

Grayscale Image Denoising

Meta-Learning

Few-Shot Learning

Sample Probing

Universal Meta-Learning

Contrastive Learning

Super-Resolution

Image Super-Resolution

Video Super-Resolution

Multi-Frame Super-Resolution

Reference-based Super-Resolution

Pose Estimation

3D Human Pose Estimation

Keypoint Detection

3D Pose Estimation

6D Pose Estimation

Self-Supervised Learning

Point Cloud Pre-training

Unsupervised Video Clustering

2D Semantic Segmentation

Image Segmentation

Text Style Transfer

Scene Parsing

Reflection Removal

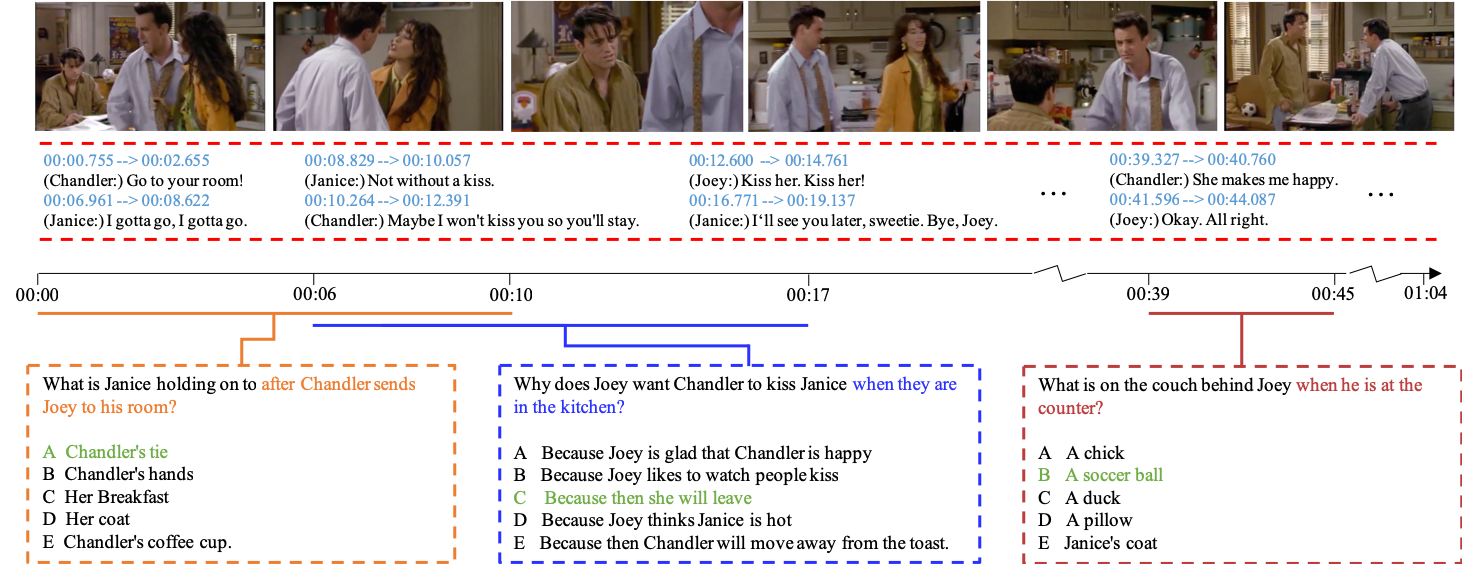

Visual Question Answering (VQA)

Visual Question Answering

Machine Reading Comprehension

Chart Question Answering

Embodied Question Answering

Depth Estimation

3D Reconstruction

Neural Rendering

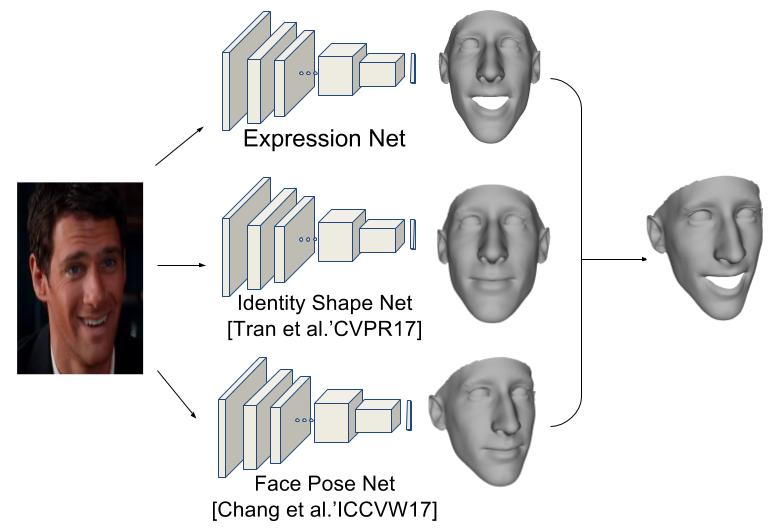

3D Face Reconstruction

Sentiment Analysis

Aspect-Based Sentiment Analysis (ABSA)

Multimodal Sentiment Analysis

Aspect Sentiment Triplet Extraction

Twitter Sentiment Analysis

Anomaly Detection

Unsupervised Anomaly Detection

One-Class Classification

Supervised Anomaly Detection

Anomaly Detection in Surveillance Videos

Temporal Action Localization

Video Understanding

Video Generation

Video Object Segmentation

Action Classification

Activity Recognition

Action Recognition

Human Activity Recognition

Egocentric Activity Recognition

Group Activity Recognition

3D Object Super-Resolution

One-Shot Learning

Few-Shot Semantic Segmentation

Cross-Domain Few-Shot

Unsupervised Few-Shot Learning

Medical Image Segmentation

Lesion Segmentation

Brain Tumor Segmentation

Cell Segmentation

Skin Lesion Segmentation

Monocular Depth Estimation

Stereo Depth Estimation

Depth and Camera Motion

3D Depth Estimation

Exposure Fairness

Optical Character Recognition (OCR)

Active Learning

Handwriting Recognition

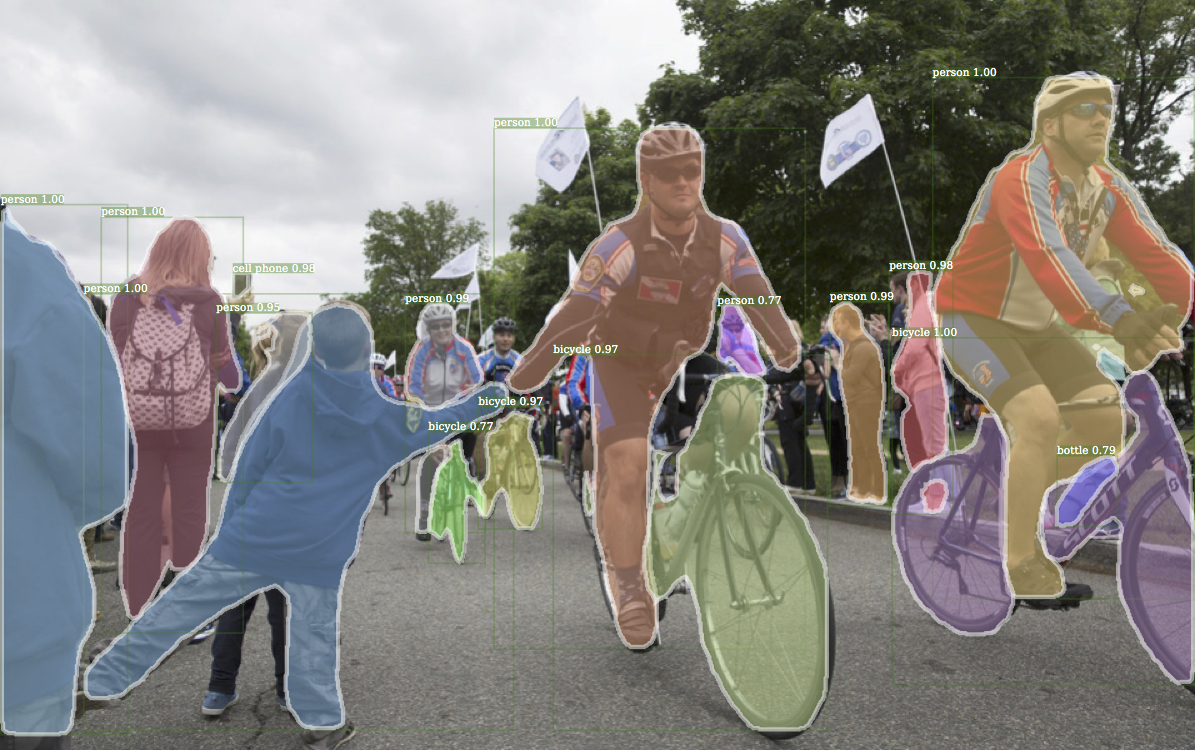

Handwritten Digit Recognition

Irregular Text Recognition

Instance Segmentation

Referring Expression Segmentation

3D Instance Segmentation

Real-time Instance Segmentation

Unsupervised Object Segmentation

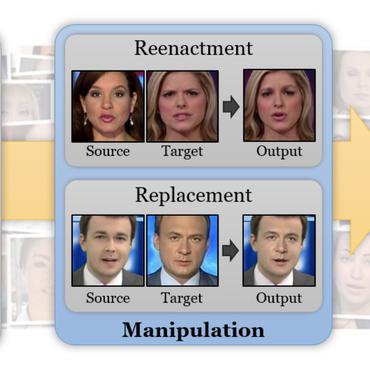

Facial Recognition and Modelling

Face Recognition

Face Swapping

Face Detection

Facial Expression Recognition (FER)

Face Verification

Object Tracking

Multi-Object Tracking

Visual Object Tracking

Multiple Object Tracking

Cell Tracking

Zero-Shot Learning

Generalized Zero-Shot Learning

Compositional Zero-Shot Learning

Multi-Label Zero-Shot Learning

Quantization

Data Free Quantization

UNet Quantization

Continual Learning

Class Incremental Learning

Continual Named Entity Recognition

Unsupervised Class-Incremental Learning

Action Recognition In Videos

3D Action Recognition

Self-Supervised Action Recognition

Few Shot Action Recognition

Scene Understanding

Scene Text Recognition

Scene Graph Generation

Scene Recognition

Adversarial Attack

Backdoor Attack

Adversarial Text

Adversarial Attack Detection

Real-World Adversarial Attack

Active Object Detection

Image Retrieval

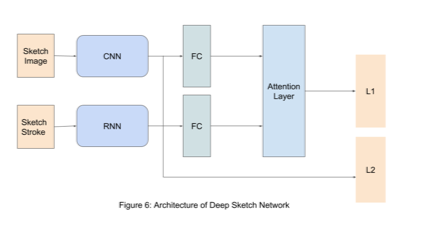

Sketch-Based Image Retrieval

Content-Based Image Retrieval

Composed Image Retrieval (CoIR)

Medical Image Retrieval

Dimensionality Reduction

Supervised dimensionality reduction

Online Nonnegative CP Decomposition

Emotion Recognition

Speech Emotion Recognition

Emotion Recognition in Conversation

Multimodal Emotion Recognition

Emotion-Cause Pair Extraction

Monocular 3D Object Detection

3D Object Detection From Stereo Images

Multiview Detection

Robust 3D Object Detection

Image Reconstruction

MRI Reconstruction

Film Removal

Style Transfer

Image Stylization

Font Style Transfer

Style Generalization

Face Transfer

Optical Flow Estimation

Video Stabilization

Image Captioning

3D dense captioning

Controllable Image Captioning

Aesthetic Image Captioning

Relational Captioning

Action Localization

Action Segmentation

Spatio-Temporal Action Localization

Person Re-Identification

Unsupervised Person Re-Identification

Video-Based Person Re-Identification

Generalizable Person Re-Identification

Cloth-Changing Person Re-Identification

Image Restoration

Demosaicking

Spectral Reconstruction

Underwater Image Restoration

JPEG Artifact Correction

Visual Relationship Detection

Lighting Estimation

3D Room Layouts From A Single RGB Panorama

Road Scene Understanding

Action Detection

Skeleton Based Action Recognition

Online Action Detection

Audio-Visual Active Speaker Detection

Metric Learning

Object Recognition

3D Object Recognition

Continuous Object Recognition

Depiction Invariant Object Recognition

Monocular 3D Human Pose Estimation

Pose Prediction

3D Multi-Person Pose Estimation

3D Human Pose and Shape Estimation

Image Enhancement

Low-Light Image Enhancement

Image Relighting

De-aliasing

Multi-Label Classification

Missing Labels

Extreme Multi-Label Classification

Hierarchical Multi-Label Classification

Medical Code Prediction

Continuous Control

Steering Control

Drone Controller

Semi-Supervised Video Object Segmentation

Unsupervised Video Object Segmentation

Referring Video Object Segmentation

Video Salient Object Detection

3D Face Modelling

Trajectory Prediction

Trajectory Forecasting

Human Motion Prediction

Out-of-Sight Trajectory Prediction

Multivariate Time Series Imputation

Image Quality Assessment

No-Reference Image Quality Assessment

Blind Image Quality Assessment

Aesthetics Quality Assessment

Stereoscopic Image Quality Assessment

Object Localization

Weakly-Supervised Object Localization

Image-Based Localization

Unsupervised Object Localization

Monocular 3D Object Localization

Novel View Synthesis

Novel LiDAR View Synthesis

Ground Video Synthesis from Satellite Image

Blind Image Deblurring

Single-Image Blind Deblurring

Out-of-Distribution Detection

Video Semantic Segmentation

Camera shot segmentation

Cloud Removal

Facial Inpainting

Fine-Grained Image Inpainting

Instruction Following

Visual Instruction Following

Change Detection

Semi-supervised Change Detection

Saliency Detection

Saliency Prediction

Co-Salient Object Detection

Video Saliency Detection

Unsupervised Saliency Detection

Image Compression

Feature Compression

JPEG Compression Artifact Reduction

Lossy-Compression Artifact Reduction

Color Image Compression Artifact Reduction

Explainable Artificial Intelligence

Explainable Models

Explanation Fidelity Evaluation

FAD Curve Analysis

Prompt Engineering

Visual Prompting

Image Registration

Unsupervised Image Registration

Ensemble Learning

Visual Reasoning

Visual Commonsense Reasoning

Salient Object Detection

Saliency Ranking

Visual Tracking

Point Tracking

RGB-T Tracking

Real-Time Visual Tracking

RF-based Visual Tracking

3D Point Cloud Classification

3D Object Classification

Few-Shot 3D Point Cloud Classification

Supervised Only 3D Point Cloud Classification

Zero-Shot Transfer 3D Point Cloud Classification

Motion Estimation

2D Classification

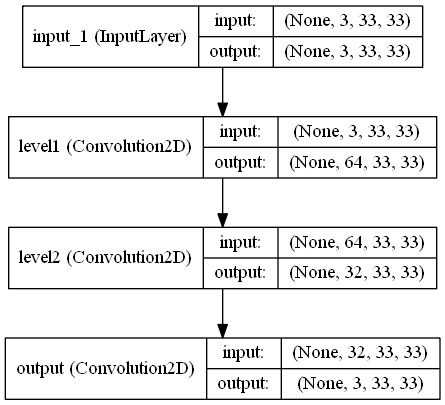

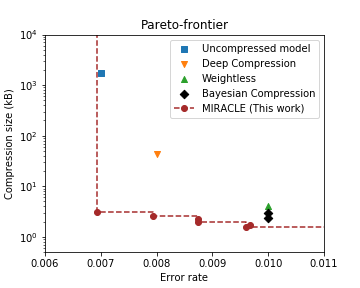

Neural Network Compression

Music Source Separation

Cell Detection

Plant Phenotyping

Open-Set Classification

Image Manipulation Detection

Zero Shot Skeletal Action Recognition

Generalized Zero Shot Skeletal Action Recognition

Whole Slide Images

Activity Prediction

Motion Prediction

Cyber Attack Detection

Sequential Skip Prediction

Gesture Recognition

Hand Gesture Recognition

Hand-Gesture Recognition

RF-based Gesture Recognition

Video Captioning

Dense Video Captioning

Boundary Captioning

Visual Text Correction

Audio-Visual Video Captioning

Video Question Answering

Zero-Shot Video Question Answer

Few-Shot Video Question Answering

Robust 3D Semantic Segmentation

Real-Time 3D Semantic Segmentation

Unsupervised 3D Semantic Segmentation

Furniture Segmentation

Point Cloud Registration

Image to Point Cloud Registration

Text Detection

Medical Diagnosis

Alzheimer's Disease Detection

Retinal OCT Disease Classification

Blood Cell Count

Thoracic Disease Classification

3D Point Cloud Interpolation

Visual Grounding

Person-centric Visual Grounding

Phrase Extraction and Grounding (PEG)

Visual Odometry

Face Anti-Spoofing

Monocular Visual Odometry

Hand Pose Estimation

Hand Segmentation

Gesture-to-Gesture Translation

Rain Removal

Single Image Deraining

Image Clustering

Online Clustering

Face Clustering

Multi-View Subspace Clustering

Multi-Modal Subspace Clustering

Image Dehazing

Single Image Dehazing

Colorization

Line Art Colorization

Point-interactive Image Colorization

Color Mismatch Correction

Robot Navigation

PointGoal Navigation

Social Navigation

Sequential Place Learning

Image Manipulation

Conformal Prediction

Unsupervised Image-To-Image Translation

Synthetic-to-Real Translation

Multimodal Unsupervised Image-To-Image Translation

Cross-View Image-to-Image Translation

Fundus to Angiography Generation

Visual Place Recognition

Indoor Localization

3D Place Recognition

Image Editing

Rolling Shutter Correction

Shadow Removal

Multimodel-Guided Image Editing

Joint Deblur and Frame Interpolation

Multimodal Fashion Image Editing

Visual Localization

DeepFake Detection

Synthetic Speech Detection

Human Detection of Deepfakes

Multimodal Forgery Detection

Stereo Matching

Object Reconstruction

3D Object Reconstruction

Crowd Counting

Visual Crowd Analysis

Group Detection in Crowds

Human-Object Interaction Detection

Affordance Recognition

Image Deblurring

Low-Light Image Deblurring and Enhancement

Earth Observation

Video Quality Assessment

Video Alignment

Temporal Sentence Grounding

Long-Video Activity Recognition

Point Cloud Classification

Jet Tagging

Few-Shot Point Cloud Classification

Image Matching

Semantic correspondence

Patch Matching

Set Matching

Matching Disparate Images

Hyperspectral

Hyperspectral Image Classification

Hyperspectral Unmixing

Hyperspectral Image Segmentation

Classification of Hyperspectral Images

Document Text Classification

Learning with noisy labels

Multi-Label Classification of Biomedical Texts

Political Salient Issue Orientation Detection

3D Point Cloud Reconstruction

Weakly Supervised Action Localization

Weakly-Supervised Temporal Action Localization

Temporal Action Proposal Generation

Activity Recognition in Videos

Scene Classification

2D Human Pose Estimation

Action Anticipation

3D Face Animation

Semi-Supervised Human Pose Estimation

Point Cloud Generation

Point Cloud Completion

Referring Expression

Reconstruction

3D Human Reconstruction

Single-View 3D Reconstruction

4D Reconstruction

Single-Image-Based HDR Reconstruction

Compressive Sensing

Keyword Spotting

Small-Footprint Keyword Spotting

Visual Keyword Spotting

Scene Text Detection

Curved Text Detection

Multi-Oriented Scene Text Detection

Boundary Detection

Junction Detection

Camera Calibration

Image Matting

Semantic Image Matting

Video Retrieval

Video-Text Retrieval

Video Grounding

Video-Adverb Retrieval

Replay Grounding

Composed Video Retrieval (CoVR)

Motion Synthesis

Motion Style Transfer

Temporal Human Motion Composition

Emotion Classification

Video Summarization

Unsupervised Video Summarization

Supervised Video Summarization

Document AI

Document Understanding

Sensor Fusion

Superpixels

Point Cloud Segmentation

Remote Sensing

Remote Sensing Image Classification

Change Detection for Remote Sensing Images

Building Change Detection for Remote Sensing Images

Segmentation Of Remote Sensing Imagery

The Semantic Segmentation Of Remote Sensing Imagery

Few-Shot Transfer Learning for Saliency Prediction

Aerial Video Saliency Prediction

Document Layout Analysis

3D Anomaly Detection

Video Anomaly Detection

Artifact Detection

Point cloud reconstruction

3D Semantic Scene Completion

3D Semantic Scene Completion from a single RGB image

Garment Reconstruction

Face Generation

Talking Head Generation

Talking Face Generation

Face Age Editing

Facial Expression Generation

Kinship Face Generation

Cross-Modal Retrieval

Image-Text Matching

Multilingual Cross-Modal Retrieval

Zero-shot Composed Person Retrieval

Cross-Modal Retrieval on RSITMD

Video Instance Segmentation

Privacy Preserving Deep Learning

Membership Inference Attack

Human Detection

Generalized Few-Shot Semantic Segmentation

Virtual Try-on

Scene Flow Estimation

Self-supervised Scene Flow Estimation

3D Classification

Depth Completion

Motion Forecasting

Multi-Person Pose forecasting

Multiple Object Forecasting

Video Editing

Video Temporal Consistency

Face Reconstruction

Object Discovery

CARLA Map Leaderboard

Dead-Reckoning Prediction

Generalized Referring Expression Segmentation

Gaze Estimation

Texture Synthesis

Text-based Image Editing

Text-Guided-Image-Editing

Zero-Shot Text-to-Image Generation

Concept Alignment

Conditional Text-to-Image Synthesis

Machine Unlearning

Continual Forgetting

Sign Language Recognition

Image Recognition

Fine-Grained Image Recognition

License Plate Recognition

Material Recognition

Multi-View Learning

Incomplete Multi-View Clustering

Breast Cancer Detection

Skin Cancer Classification

Breast Cancer Histology Image Classification

Lung Cancer Diagnosis

Classification of Breast Cancer Histology Images

Gait Recognition

Multiview Gait Recognition

Gait Recognition in the Wild

Human Parsing

Multi-Human Parsing

Pose Tracking

3D Human Pose Tracking

Interactive Segmentation

Scene Generation

3D Multi-Person Pose Estimation (absolute)

3D Multi-Person Pose Estimation (root-relative)

3D Multi-Person Mesh Recovery

Event-Based Vision

Event-based Optical Flow

Event-Based Video Reconstruction

Event-Based Motion Estimation

Disease Prediction

Disease Trajectory Forecasting

Object Counting

Training-Free Object Counting

Open-Vocabulary Object Counting

Interest Point Detection

Homography Estimation

3D Hand Pose Estimation

Weakly Supervised Segmentation

Facial Landmark Detection

Unsupervised Facial Landmark Detection

3D Facial Landmark Localization

3D Character Animation from a Single Photo

Scene Segmentation

Dichotomous Image Segmentation

Activity Detection

Inverse Rendering

Temporal Localization

Language-Based Temporal Localization

Temporal Defect Localization

Multi-Label Image Classification

Multi-label Image Recognition with Partial Labels

3D Object Tracking

3D Single Object Tracking

Template Matching

Text-to-Video Generation

Text-to-Video Editing

Subject-Driven Video Generation

Camera Localization

Camera Relocalization

LiDAR Semantic Segmentation

Visual Dialog

Motion Segmentation

Relation Network

Intelligent Surveillance

Vehicle Re-Identification

Text Spotting

Disparity Estimation

Few-Shot Class-Incremental Learning

Class-Incremental Semantic Segmentation

Non-Exemplar-Based Class Incremental Learning

Handwritten Text Recognition

Handwritten Document Recognition

Unsupervised Text Recognition

Knowledge Distillation

Data-free Knowledge Distillation

Self-Knowledge Distillation

Moment Retrieval

Zero-shot Moment Retrieval

Text to Video Retrieval

Partially Relevant Video Retrieval

Person Search

Decision Making Under Uncertainty

Uncertainty Visualization

Semi-Supervised Object Detection

Shadow Detection

Shadow Detection And Removal

Unconstrained Lip-synchronization

Mixed Reality

Video Inpainting

Cross-corpus

Micro-Expression Recognition

Micro-Expression Spotting

3D Facial Expression Recognition

Smile Recognition

Future Prediction

Human Mesh Recovery

Video Enhancement

Face Image Quality Assessment

Lightweight Face Recognition

Age-Invariant Face Recognition

Synthetic Face Recognition

Face Quality Assessment

3D Multi-Object Tracking

Real-Time Multi-Object Tracking

Multi-Animal Tracking with Identification

Trajectory Long-Tail Distribution for Multi-Object Tracking

Grounded Multiple Object Tracking

Image Categorization

Fine-Grained Visual Categorization

Overlapped 10-1

Overlapped 15-1

Overlapped 15-5

Disjoint 10-1

Disjoint 15-1

Burst Image Super-Resolution

Stereo Image Super-Resolution

Satellite Image Super-Resolution

Multispectral Image Super-Resolution

Color Constancy

Few-Shot Camera-Adaptive Color Constancy

HDR Reconstruction

Multi-Exposure Image Fusion

Open Vocabulary Semantic Segmentation

Zero-Guidance Segmentation

Physics-Informed Machine Learning

Soil Moisture Estimation

Deep Attention

Line Detection

Video Reconstruction

Zero Shot Segmentation

Visual Recognition

Fine-Grained Visual Recognition

Image Cropping

Sign Language Translation

Stereo Matching Hand

3D Absolute Human Pose Estimation

Text-to-Face Generation

Image Forensics

Tone Mapping

Zero-Shot Action Recognition

Natural Language Transduction

Video Restoration

Analog Video Restoration

Novel Class Discovery

Transparent Object Detection

Transparent Objects

Surface Normals Estimation

hand-object pose

Grasp Generation

3D Canonical Hand Pose Estimation

Breast Cancer Histology Image Classification (20% Labels)

Cross-Domain Few-Shot Learning

Texture Classification

Vision-Language Navigation

Abnormal Event Detection In Video

Semi-supervised Anomaly Detection

Infrared and Visible Image Fusion

Image Animation

Image to 3D

Probabilistic Deep Learning

Unsupervised Few-Shot Image Classification

Generalized Few-Shot Classification

Pedestrian Attribute Recognition

Steganalysis

Sketch Recognition

Face Sketch Synthesis

Drawing Pictures

Photo-To-Caricature Translation

Spoof Detection

Face Presentation Attack Detection

Detecting Image Manipulation

Cross-Domain Iris Presentation Attack Detection

Finger Dorsal Image Spoof Detection

Computer Vision Techniques Adopted in 3D Cryogenic Electron Microscopy

Single Particle Analysis

Cryogenic Electron Tomography

Highlight Detection

Iris Recognition

Pupil Dilation

Action Quality Assessment

One-shot visual object segmentation

Unbiased Scene Graph Generation

Panoptic Scene Graph Generation

Image to Video Generation

Unconditional Video Generation

Automatic Post-Editing

Dense Captioning

Image Stitching

Multi-View 3D Reconstruction

Universal Domain Adaptation

Action Understanding

Blind Face Restoration

Document Image Classification

Face Reenactment

Geometric Matching

Human Action Generation

Action Generation

Object Categorization

Person Retrieval

Text Based Person Retrieval

Surgical Phase Recognition

Online Surgical Phase Recognition

Offline Surgical Phase Recognition

Human Dynamics

3D Human Dynamics

Meme Classification

Hateful Meme Classification

Severity Prediction

Intubation Support Prediction

Cloud Detection

Text-To-Image

Story Visualization

Complex Scene Breaking and Synthesis

Diffusion Personalization

Diffusion Personalization Tuning Free

Efficient Diffusion Personalization

Image Fusion

Pansharpening

Image Deconvolution

Image Outpainting

Object Segmentation

Camouflaged Object Segmentation

Landslide Segmentation

Text-Line Extraction

Point Clouds

Point Cloud Video Understanding

Point Cloud Representation Learning

Semantic SLAM

Object SLAM

Intrinsic Image Decomposition

Line Segment Detection

Table Recognition

Situation Recognition

Grounded Situation Recognition

Motion Detection

Multi-Target Domain Adaptation

Sports Analytics

Robot Pose Estimation

Camouflaged Object Segmentation with a Single Task-generic Prompt

Image Morphing

Image Shadow Removal

Person Identification

Visual Prompt Tuning

Weakly-Supervised Instance Segmentation

Image Smoothing

Fake Image Detection

GAN image forensics

Fake Image Attribution

Image Steganography

Rotated MNIST

Contour Detection

Face Image Quality

Lane Detection

3D Lane Detection

Layout Design

License Plate Detection

Video Panoptic Segmentation

Viewpoint Estimation

Drone navigation

Drone-View Target Localization

Value Prediction

Body Mass Index (BMI) Prediction

Multi-Object Tracking and Segmentation

Occlusion Handling

Zero-Shot Transfer Image Classification

3D Object Reconstruction From A Single Image

CAD Reconstruction

3D Point Cloud Linear Classification

Crop Classification

Crop Yield Prediction

Photo Retouching

Motion Retargeting

Shape Representation of 3D Point Clouds

Bird's-Eye View Semantic Segmentation

Dense Pixel Correspondence Estimation

Human Part Segmentation

Multiview Learning

Person Recognition

Document Shadow Removal

Symmetry Detection

Traffic Sign Detection

Video Style Transfer

Referring Image Matting

Referring Image Matting (Expression-based)

Referring Image Matting (Keyword-based)

Referring Image Matting (RefMatte-RW100)

Referring Image Matting (Prompt-based)

Human Interaction Recognition

One-Shot 3D Action Recognition

Mutual Gaze

Affordance Detection

Gaze Prediction

Image Forgery Detection

Image Instance Retrieval

Amodal Instance Segmentation

Image Quality Estimation

Image Similarity Search

Precipitation Forecasting

Referring Expression Generation

Road Damage Detection

Space-time Video Super-resolution

Video Matting

Open-World Semi-Supervised Learning

Semi-Supervised Image Classification (Cold Start)

Hand Detection

Material Classification

Open Vocabulary Attribute Detection

Inverse Tone Mapping

Image/Document Clustering

Self-Organized Clustering

Instance Search

Audio Fingerprint

3D Shape Modeling

Action Analysis

Facial Editing

Food Recognition

Holdout Set

Motion Magnification

Semi-Supervised Instance Segmentation

Binary Classification

LLM-Generated Text Detection

Cancer-No Cancer per Breast Classification

Cancer-No Cancer per Image Classification

Suspicious (BI-RADS 4,5)-No Suspicious (BI-RADS 1,2,3) per Image Classification

Cancer-No Cancer per View Classification

Video Segmentation

Camera Shot Boundary Detection

Open-Vocabulary Video Segmentation

Open-World Video Segmentation

Lung Nodule Classification

Lung Nodule 3D Classification

Lung Nodule Detection

Lung Nodule 3D Detection

3D Scene Reconstruction

Art Analysis

Zero-Shot Composed Image Retrieval (ZS-CIR)

Event Segmentation

Generic Event Boundary Detection

Image Retouching

Image-Variation

JPEG Artifact Removal

Multispectral Object Detection

Point Cloud Super Resolution

Skills Assessment

Sensor Modeling

10-Shot Image Generation

Video Prediction

Earth Surface Forecasting

Predict Future Video Frames

Ad-hoc Video Search

Audio-Visual Synchronization

Handwriting Generation

Pose Retrieval

Scanpath Prediction

Scene Change Detection

Sketch-to-Image Translation

Skills Evaluation

Synthetic Image Detection

Highlight Removal

3D Shape Reconstruction from a Single 2D Image

Shape from Texture

Deception Detection

Deception Detection in Videos

Handwriting Verification

Bangla Spelling Error Correction

3D Open-Vocabulary Instance Segmentation

3D Shape Representation

3D Dense Shape Correspondence

Birds Eye View Object Detection

Multiple People Tracking

Network Interpretation

RGB-D Reconstruction

Seeing Beyond the Visible

Semi-Supervised Domain Generalization

Unsupervised Semantic Segmentation

Unsupervised Semantic Segmentation with Language-image Pre-training

Multiple Object Tracking with Transformer

Multiple Object Track and Segmentation

Constrained Lip-Synchronization

Face Dubbing

Vietnamese Visual Question Answering

Explanatory Visual Question Answering

Video Visual Relation Detection

Human-Object Relationship Detection

3D Shape Reconstruction

Defocus Blur Detection

Event Data Classification

Image Comprehension

Image Manipulation Localization

Instance Shadow Detection

Kinship Verification

Medical Image Enhancement

Open Vocabulary Panoptic Segmentation

Single-Object Discovery

Training-Free 3D Point Cloud Classification

Video Forensics

Sequential Place Recognition

Autonomous Flight (Dense Forest)

Autonomous Web Navigation

Generative 3D Object Classification

Cube Engraving Classification

Multimodal Machine Translation

Face to Face Translation

Multimodal Lexical Translation

2D Semantic Segmentation Task 3 (25 Classes)

Document Enhancement

4D Panoptic Segmentation

Action Assessment

Bokeh Effect Rendering

Drivable Area Detection

Face Anonymization

Font Recognition

Horizon Line Estimation

Image Imputation

Long Video Retrieval (Background Removed)

Medical Image Denoising

Occlusion Estimation

Physiological Computing

Lake Ice Monitoring

Short-Term Object Interaction Anticipation

Spatio-Temporal Video Grounding

Unsupervised 3D Point Cloud Linear Evaluation

Wireframe Parsing

Single-Image-Generation

Unsupervised Anomaly Detection with Specified Settings -- 30% anomaly

Root Cause Ranking

Anomaly Detection at 30% anomaly

Anomaly Detection at Various Anomaly Percentages

Unsupervised Contextual Anomaly Detection

2D Pose Estimation

Category-Agnostic Pose Estimation

Overlapping Pose Estimation

Facial Expression Recognition

Cross-Domain Facial Expression Recognition

Zero-Shot Facial Expression Recognition

Landmark Tracking

Muscle Tendon Junction Identification

3D Object Captioning

Animated GIF Generation

Generalized Referring Expression Comprehension

Image Deblocking

Infrared Image Super-Resolution

Motion Disentanglement

Persuasion Strategies

Scene Text Editing

Traffic Accident Detection

Accident Anticipation

Unsupervised Landmark Detection

Visual Speech Recognition

Lip to Speech Synthesis

Continual Anomaly Detection

Gaze Redirection

Weakly Supervised Action Segmentation (Transcript)

Weakly Supervised Action Segmentation (Action Set)

Calving Front Delineation in Synthetic Aperture Radar Imagery

Calving Front Delineation in Synthetic Aperture Radar Imagery with Fixed Training Amount

Handwritten Line Segmentation

Handwritten Word Segmentation

General Action Video Anomaly Detection

Physical Video Anomaly Detection

Monocular Cross-View Road Scene Parsing (Road)

Monocular Cross-View Road Scene Parsing (Vehicle)

Transparent Object Depth Estimation

3D Semantic Occupancy Prediction

3D Scene Editing

Age and Gender Estimation

Data Ablation

Occluded Face Detection

Gait Identification

Historical Color Image Dating

Stochastic Human Motion Prediction

Image Retargeting

Image and Video Forgery Detection

Motion Captioning

Personality Trait Recognition

Personalized Segmentation

Scene-Aware Dialogue

Spatial Relation Recognition

Spatial Token Mixer

Steganographics

Story Continuation

Unsupervised Anomaly Detection with Specified Settings -- 0.1% anomaly

Unsupervised Anomaly Detection with Specified Settings -- 1% anomaly

Unsupervised Anomaly Detection with Specified Settings -- 10% anomaly

Unsupervised Anomaly Detection with Specified Settings -- 20% anomaly

Vehicle Speed Estimation

Visual Analogies

Visual Social Relationship Recognition

Zero-Shot Text-to-Video Generation

Text-Guided-Generation

Video Frame Interpolation

3D Video Frame Interpolation

Unsupervised Video Frame Interpolation

eXtreme-Video-Frame-Interpolation

Continual Semantic Segmentation

Overlapped 5-3

Overlapped 25-25

Evolving Domain Generalization

Source-Free Domain Generalization

Micro-Expression Generation

Micro-Expression Generation (MEGC2021)

Mistake Detection

Online Mistake Detection

Period Estimation

Art Period Estimation (544 Artists)

Unsupervised Panoptic Segmentation

Unsupervised Zero-Shot Panoptic Segmentation

3D Rotation Estimation

Camera Auto-Calibration

Defocus Estimation

Derendering

Fingertip Detection

Hierarchical Text Segmentation

Human-Object Interaction Concept Discovery

One-Shot Face Stylization

Speaker-Specific Lip to Speech Synthesis

Multi-Person Pose Estimation

Neural Stylization

Part-aware Panoptic Segmentation

Population Mapping

Pornography Detection

Prediction of Occupancy Grid Maps

RAW Reconstruction

Repetitive Action Counting

SVBRDF Estimation

Semi-Supervised Video Classification

Spectrum Cartography

Supervised Image Retrieval

Synthetic Image Attribution

Training-Free 3D Part Segmentation

Unsupervised Image Decomposition

Video Propagation

Vietnamese Multimodal Learning

Weakly Supervised 3D Point Cloud Segmentation

Weakly-Supervised Panoptic Segmentation

Drone-Based Object Tracking

Brain Visual Reconstruction

Brain Visual Reconstruction from fMRI

Human-Object Interaction Generation

Image-Guided Composition

Fashion Understanding

Semi-Supervised Fashion Compatibility

intensity image denoising

Lifetime Image Denoising

Observation Completion

Active Observation Completion

Boundary Grounding

Video Narrative Grounding

3D Inpainting

3D Scene Graph Alignment

4D Spatio Temporal Semantic Segmentation

Age Estimation

Few-shot Age Estimation

BRDF Estimation

Camouflage Segmentation

Clothing Attribute Recognition

Damaged Building Detection

Depth Image Estimation

Detecting Shadows

Dynamic Texture Recognition

Disguised Face Verification

Few Shot Open Set Object Detection

Gaze Target Estimation

Generalized Zero-Shot Learning - Unseen

HD Semantic Map Learning

Human-Object Interaction Anticipation

Image Deep Networks

Keypoint Detection and Image Matching

Manufacturing Quality Control

Materials Imaging

Micro-Gesture Recognition

Multi-Person Pose Estimation and Tracking

Multi-modal image segmentation

Multi-Object Discovery

Neural Radiance Caching

Parking Space Occupancy

Partial Video Copy Detection

Multimodal Patch Matching

Perpetual View Generation

Procedure Learning

Prompt-Driven Zero-Shot Domain Adaptation

Single-Shot HDR Reconstruction

On-the-Fly Sketch Based Image Retrieval

Thermal Image Denoising

Trademark Retrieval

Unsupervised Instance Segmentation

Unsupervised Zero-Shot Instance Segmentation

Vehicle Key-Point and Orientation Estimation

Video Individual Counting

Video-Adverb Retrieval (Unseen Compositions)

Video-to-Image Affordance Grounding

Vietnamese Scene Text

Visual Sentiment Prediction

Human-Scene Contact Detection

Localization in Video Forgery

3D Canonicalization

3D Surface Generation

Visibility Estimation from Point Cloud

Amodal Layout Estimation

Blink Estimation

Camera Absolute Pose Regression

Change Data Generation

Constrained Diffeomorphic Image Registration

Continuous Affect Estimation

Deep Feature Inversion

Document Image Skew Estimation

Earthquake Prediction

Fashion Compatibility Learning

Displaced People Recognition

Finger vein recognition, flooded building segmentation.

Future Hand Prediction

Generative temporal nursing, grounded multimodal named entity recognition, house generation, human fmri response prediction, hurricane forecasting, ifc entity classification, image declipping, image similarity detection.

Image Text Removal

Image-to-gps verification.

Image-based Automatic Meter Reading

Dial meter reading, indoor scene reconstruction, jpeg decompression.

Kiss Detection

Laminar-turbulent flow localisation.

Landmark Recognition

Brain landmark detection, corpus video moment retrieval, mllm evaluation: aesthetics, medical image deblurring, mental workload estimation, meter reading, motion expressions guided video segmentation, natural image orientation angle detection, multi-object colocalization, multilingual text-to-image generation, video emotion detection, nwp post-processing, occluded 3d object symmetry detection, open set video captioning, pso-convnets dynamics 1, pso-convnets dynamics 2, partial point cloud matching.

Partially View-aligned Multi-view Learning

Pedestrian Detection

Thermal Infrared Pedestrian Detection

Personality trait recognition by face, physical attribute prediction, point cloud semantic completion, point cloud classification dataset, point-of-no-return (pnr) temporal localization, pose contrastive learning, portrait generation, prostate zones segmentation, pulmonary vessel segmentation, pulmonary artery–vein classification, reference expression generation, safety perception recognition, jersey number recognition, interspecies facial keypoint transfer, image to sketch recognition, specular reflection mitigation, specular segmentation, state change object detection, surface normals estimation from point clouds, train ego-path detection.

Transform A Video Into A Comics

Transparency separation, typeface completion.

Unbalanced Segmentation

Unsupervised Long Term Person Re-Identification

Video correspondence flow.

Key-Frame-based Video Super-Resolution (K = 15)

Zero-shot single object tracking, yield mapping in apple orchards, lidar absolute pose regression, opd: single-view 3d openable part detection, self-supervised scene text recognition, spatial-aware image editing, video narration captioning, spectral estimation, spectral estimation from a single rgb image, 3d prostate segmentation, aggregate xview3 metric, atomic action recognition, composite action recognition, calving front delineation from synthetic aperture radar imagery, computer vision transduction, crosslingual text-to-image generation, zero-shot dense video captioning, document to image conversion, frame duplication detection, geometrical view, hyperview challenge.

Image Operation Chain Detection

Kinematic based workflow recognition, logo recognition.

MLLM Aesthetic Evaluation

Motion detection in non-stationary scenes, open-set video tagging, satellite orbit determination.

Segmentation Based Workflow Recognition

2d particle picking, small object detection.

Rice Grain Disease Detection

Sperm morphology classification, video & kinematic base workflow recognition, video based workflow recognition, video, kinematic & segmentation base workflow recognition, animal pose estimation.

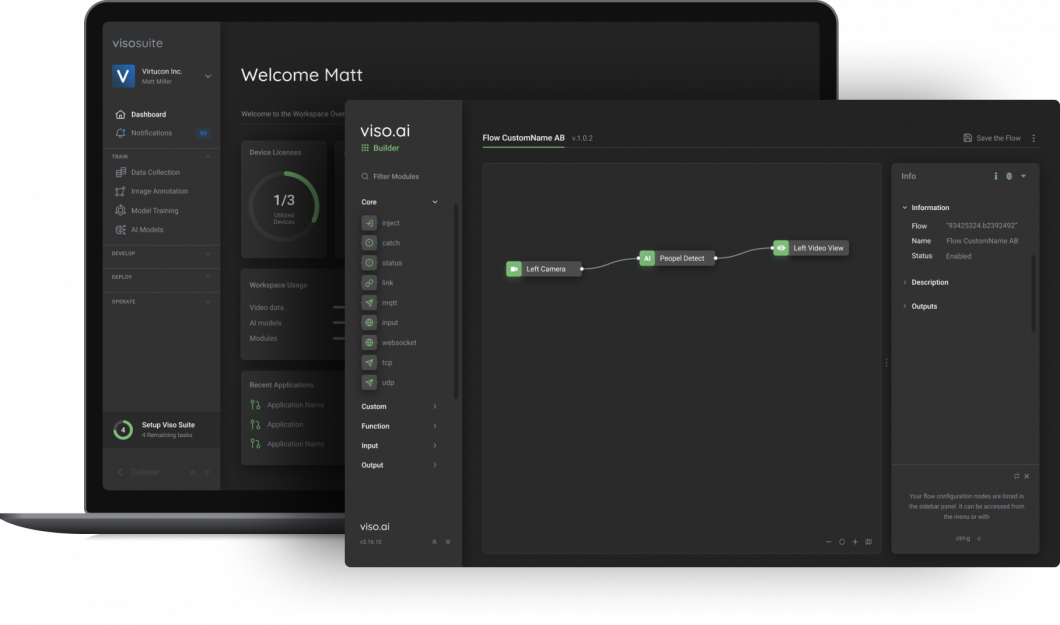

- Explore Blog

Data Collection

Building Blocks

Device Enrollment

Monitoring Dashboards

Video Annotation

Application Editor

Device Management

Remote Maintenance

Model Training

Application Library

Deployment Manager

Unified Security Center

AI Model Library

Configuration Manager

IoT Edge Gateway

Privacy-preserving AI

Ready to get started?

- Why Viso Suite

Top Computer Vision Papers of All Time (Updated 2024)

Viso Suite is the all-in-one solution for teams to build, deliver, scale computer vision applications.

Viso Suite is the world’s only end-to-end computer vision platform. Request a demo.

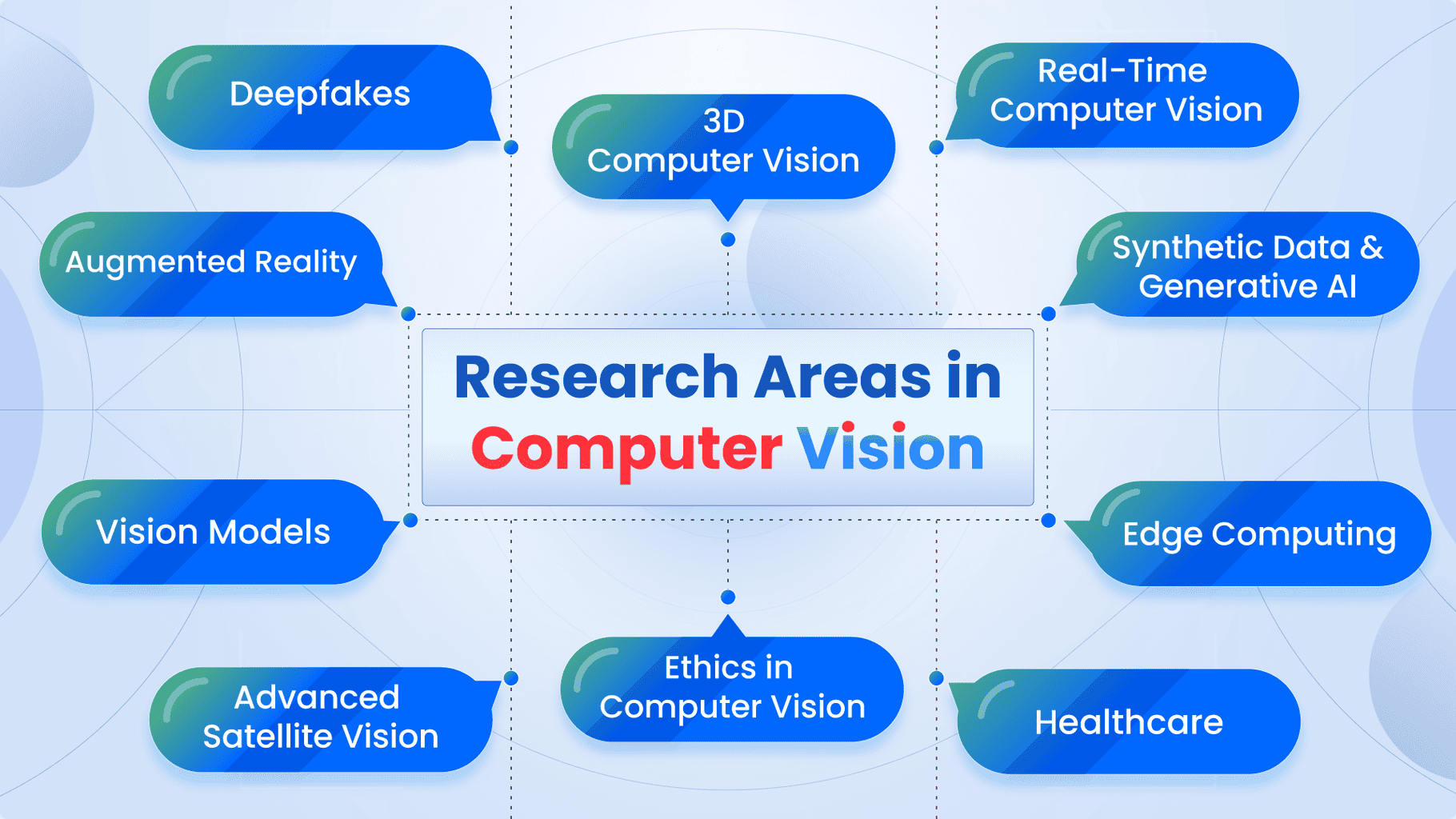

Today’s boom in computer vision (CV) started at the beginning of the 21st century with the breakthrough of deep learning models and convolutional neural networks (CNNs). The main CV tasks include image classification, image localization, object detection, and segmentation.

In this article, we dive into some of the most significant research papers that triggered the rapid development of computer vision. We split them into two categories: classical CV approaches and papers based on deep learning. We chose the following papers based on their influence, quality, and applicability.

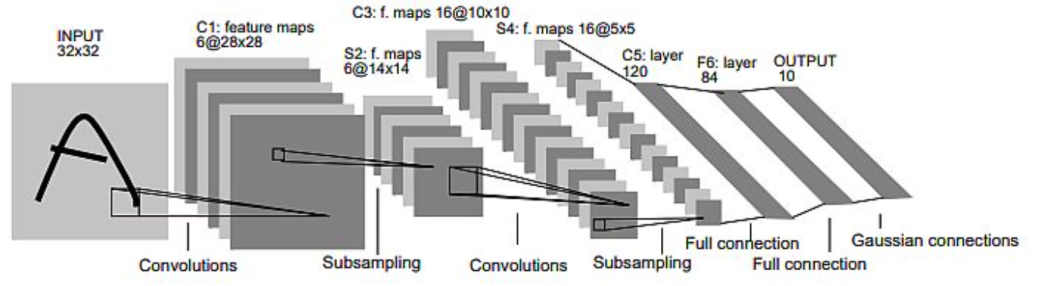

Gradient-based Learning Applied to Document Recognition (1998)

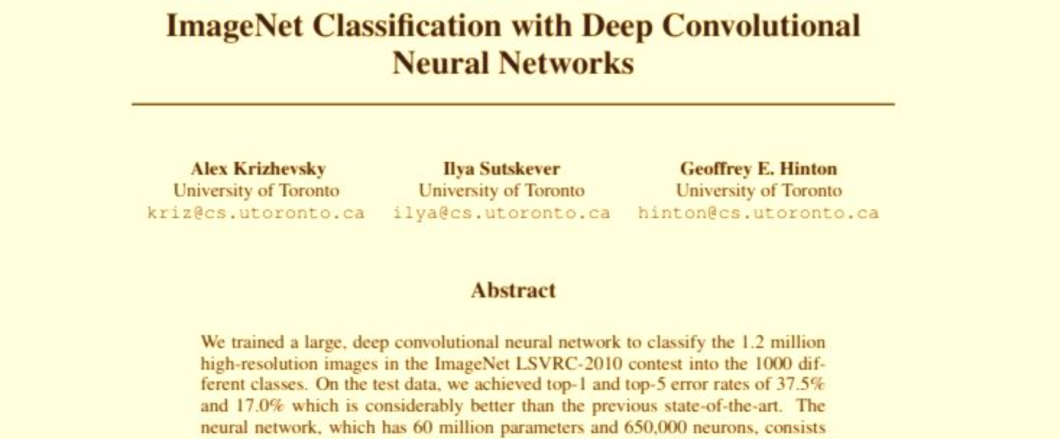

Distinctive image features from scale-invariant keypoints (2004), histograms of oriented gradients for human detection (2005), surf: speeded up robust features (2006), imagenet classification with deep convolutional neural networks (2012), very deep convolutional networks for large-scale image recognition (2014), googlenet – going deeper with convolutions (2014), resnet – deep residual learning for image recognition (2015), faster r-cnn: towards real-time object detection with region proposal networks (2015), yolo: you only look once: unified, real-time object detection (2016), mask r-cnn (2017), efficientnet – rethinking model scaling for convolutional neural networks (2019).


Classic Computer Vision Papers

The authors Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner published the LeNet paper in 1998. They introduced the concept of a trainable Graph Transformer Network (GTN) for handwritten character and word recognition. They also studied discriminative and non-discriminative gradient-based techniques for training the recognizer at the word level, without manual segmentation and labeling.

Characteristics of the model:

- LeNet-5 has seven layers (not counting the input), three of which are convolutional layers with multiple feature maps; the first convolutional layer (C1) alone has 156 trainable parameters.

- The input is a 32×32 pixel image, and the output layer is composed of Euclidean Radial Basis Function (RBF) units, one for each class (digit).

- The network was trained on the 60,000-example MNIST training set, and the error rate on the training set reached 0.35% after 19 passes.
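The layer sizes follow from simple arithmetic on the 32×32 input: each 5×5 "valid" convolution shrinks a map by 4 pixels and each 2×2 subsampling halves it. The sketch below traces the C1 to C5 sizes and reproduces the 156 parameters of C1 (6 feature maps × (5·5 weights + 1 bias)). The helper names are hypothetical, and the parameter formula assumes full connectivity between input and output maps, which holds for C1 but not for C3, whose connections the paper restricts with a sparse table.

```python
def out_size(n: int, k: int, stride: int = 1) -> int:
    """Output width of a 'valid' convolution or pooling window (hypothetical helper)."""
    return (n - k) // stride + 1


def conv_params(in_maps: int, out_maps: int, k: int) -> int:
    """Weights plus one bias per output map, assuming every input map feeds every output map."""
    return out_maps * (in_maps * k * k + 1)


size = 32                      # 32x32 input image
c1 = out_size(size, 5)         # C1: 5x5 convolution -> 28x28
s2 = out_size(c1, 2, 2)        # S2: 2x2 subsampling -> 14x14
c3 = out_size(s2, 5)           # C3: 5x5 convolution -> 10x10
s4 = out_size(c3, 2, 2)        # S4: 2x2 subsampling -> 5x5
c5 = out_size(s4, 5)           # C5: 5x5 convolution -> 1x1

c1_params = conv_params(1, 6, 5)   # single grayscale input map, 6 feature maps

print(c1, s2, c3, s4, c5, c1_params)  # 28 14 10 5 1 156
```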

Find the LeNet paper here .

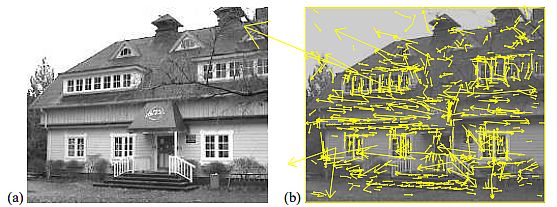

David Lowe (2004) proposed a method for extracting distinctive invariant features from images and used them to perform reliable matching between different views of an object or scene. The paper introduced the Scale-Invariant Feature Transform (SIFT), which transforms image data into scale-invariant coordinates relative to local features.

Model characteristics:

- The method generates large numbers of features that densely cover the image over the full range of scales and locations.

- At least 3 features must be correctly matched from each object for reliable identification, which matters most when detecting small objects in cluttered backgrounds.

- For image matching and recognition, the model extracts SIFT features from a set of reference images stored in a database.

- SIFT matches a new image by individually comparing each of its features to this database and finding candidate matches based on the Euclidean distance between feature vectors.
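The matching step can be sketched with brute-force nearest-neighbour search in NumPy. The sketch adds the distance-ratio test from Lowe's paper (a match is kept only if the nearest neighbour is closer than 0.8 times the distance to the second-nearest), a detail not covered in the summary above. The paper itself uses an approximate best-bin-first search rather than brute force, and the descriptors here are random synthetic vectors, so treat this as an illustration only.

```python
import numpy as np


def match_descriptors(query: np.ndarray, database: np.ndarray, ratio: float = 0.8):
    """Nearest-neighbour matching with a distance-ratio test.

    query: (m, d) descriptors from the new image.
    database: (n, d) descriptors from reference images, with n >= 2.
    Returns a list of (query_index, database_index) pairs.
    """
    matches = []
    for i, q in enumerate(query):
        # Euclidean distance from this query descriptor to every database descriptor.
        dists = np.linalg.norm(database - q, axis=1)
        nearest, second = np.argsort(dists)[:2]
        # Keep the match only if the closest neighbour is clearly closer than the runner-up.
        if dists[nearest] < ratio * dists[second]:
            matches.append((i, int(nearest)))
    return matches


rng = np.random.default_rng(0)
db = rng.random((50, 128))                               # synthetic 128-D descriptors
queries = db[[3, 17]] + rng.normal(0, 0.01, (2, 128))    # noisy copies of entries 3 and 17
print(match_descriptors(queries, db))
```

Ambiguous features, whose two nearest neighbours are almost equally close, are simply dropped rather than matched to the wrong object.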

Find the SIFT paper here .

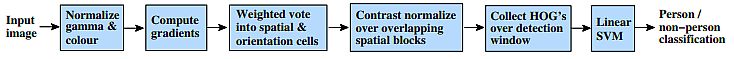

Navneet Dalal and Bill Triggs studied feature sets for robust visual object recognition, using linear SVM-based human detection as a test case. They showed experimentally that grids of Histograms of Oriented Gradient (HOG) descriptors significantly outperform existing feature sets for human detection.

Key results:

- The HOG descriptor gave near-perfect separation on the original MIT pedestrian database.

- Good results require fine-scale gradients, fine orientation binning, relatively coarse spatial binning, and high-quality local contrast normalization in overlapping descriptor blocks.

- The researchers therefore introduced a more challenging dataset containing over 1,800 annotated human images with a large range of pose variations and backgrounds.

- In the standard detector, each HOG cell appears four times with different normalizations; including this redundant information improves performance to 89%.
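The core of a HOG descriptor can be sketched in a few lines: compute image gradients, let each pixel vote for an orientation bin weighted by its gradient magnitude, and normalize the histograms over blocks of cells. This sketch assumes settings similar to the paper's default detector (9 unsigned orientation bins over 0–180°, 8×8-pixel cells, 2×2-cell blocks) but simplifies it: it uses hard binning instead of interpolated voting and plain L2 normalization instead of L2-Hys. The function names are hypothetical.

```python
import numpy as np


def cell_histogram(cell: np.ndarray, n_bins: int = 9) -> np.ndarray:
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 degrees)."""
    # np.gradient uses central differences (f[i+1] - f[i-1]) / 2 inside the cell
    # and one-sided differences at its borders; it returns d/dy first, then d/dx.
    gy, gx = np.gradient(cell.astype(float))
    magnitude = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0   # fold signed angles into [0, 180)
    bin_width = 180.0 / n_bins
    bins = np.minimum((angle // bin_width).astype(int), n_bins - 1)
    hist = np.zeros(n_bins)
    np.add.at(hist, bins.ravel(), magnitude.ravel())  # hard voting, no interpolation
    return hist


def normalize_block(cell_hists: list, eps: float = 1e-6) -> np.ndarray:
    """Concatenate the histograms of a block of cells and apply L2 normalization."""
    v = np.concatenate(cell_hists)
    return v / np.sqrt(np.sum(v ** 2) + eps ** 2)


# Usage: a 16x16 patch whose intensity increases left to right (purely horizontal gradient).
patch = np.tile(np.arange(16, dtype=float), (16, 1))
cells = [patch[r:r + 8, c:c + 8] for r in (0, 8) for c in (0, 8)]  # 2x2 block of 8x8 cells
hists = [cell_histogram(cell) for cell in cells]
descriptor = normalize_block(hists)
print(descriptor.shape)  # (36,)
```

Because every gradient in this toy patch points along the x-axis, all votes land in the first orientation bin of each cell.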

Find the HOG paper here .

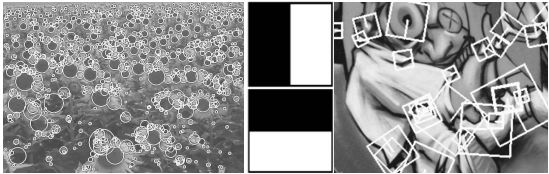

Herbert Bay, Tinne Tuytelaars, and Luc Van Gool presented a scale- and rotation-invariant interest point detector and descriptor called SURF (Speeded Up Robust Features). It outperforms previously proposed schemes with respect to repeatability, distinctiveness, and robustness, yet can be computed much faster. The authors relied on integral images for image convolutions and built on the strengths of the leading existing detectors and descriptors.

- Applied a Hessian matrix-based measure for the detector, and a distribution-based descriptor, simplifying these methods to the essential.

- Presented experimental results on a standard evaluation set, as well as on imagery obtained in the context of a real-life object recognition application.

- SURF showed strong performance – SURF-128 with an 85.7% recognition rate, followed by U-SURF (83.8%) and SURF (82.6%).
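SURF approximates the Gaussian second-order derivatives in its Hessian-based detector with box filters, and integral images make the sum over any rectangular box cost just four lookups, regardless of the box size. A minimal NumPy sketch of that building block follows; the function names are hypothetical.

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """ii[y, x] = sum of img[:y, :x], padded with a leading row and column of zeros."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii


def box_sum(ii: np.ndarray, y0: int, x0: int, y1: int, x1: int) -> int:
    """Sum of img[y0:y1, x0:x1] from four lookups, independent of the box size."""
    return int(ii[y1, x1] - ii[y0, x1] - ii[y1, x0] + ii[y0, x0])


img = np.arange(36).reshape(6, 6)
ii = integral_image(img)
print(box_sum(ii, 1, 2, 4, 5), img[1:4, 2:5].sum())  # both values are identical
```

Since the cost does not depend on filter size, SURF can enlarge its box filters to move up the scale space instead of repeatedly downsampling the image.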


Papers Based on Deep-Learning Models

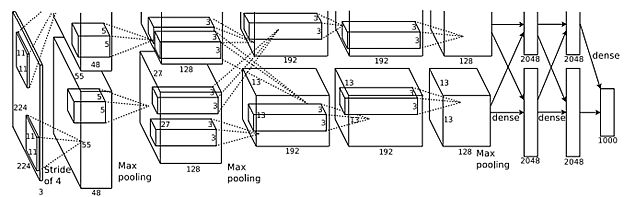

Alex Krizhevsky and his team won the ImageNet Challenge (ILSVRC) in 2012 with a deep convolutional neural network. They trained one of the largest CNNs to date on the subsets of ImageNet used in the ILSVRC-2010 and ILSVRC-2012 challenges and achieved the best results ever reported on these datasets. They wrote a highly optimized GPU implementation of 2D convolution and all the other operations required to train CNNs, and made it publicly available.

- The final CNN contained five convolutional and three fully connected layers, and this depth appeared to be important.

- Removing any convolutional layer (each containing no more than 1% of the model’s parameters) resulted in inferior performance.

- A variant of the same CNN with an extra sixth convolutional layer was first trained to classify the entire ImageNet Fall 2011 release (15M images, 22K categories).

- After fine-tuning on ILSVRC-2012, this variant gave a top-5 error rate of 16.6%.
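The 16.6% figure above is a top-5 error rate, the main ILSVRC classification metric: a prediction counts as correct if the true class is among the model's five highest-scoring classes. A minimal NumPy sketch of the metric (the function name is hypothetical):

```python
import numpy as np


def top_k_error(scores: np.ndarray, labels: np.ndarray, k: int = 5) -> float:
    """Fraction of samples whose true label is not among the k highest-scoring classes.

    scores: (n_samples, n_classes) model outputs; labels: (n_samples,) integer classes.
    """
    top_k = np.argsort(scores, axis=1)[:, -k:]          # indices of the k largest scores
    hit = (top_k == labels[:, None]).any(axis=1)
    return float(1.0 - hit.mean())


scores = np.tile(np.arange(10, dtype=float), (3, 1))    # every sample ranks class 9 > 8 > ... > 0
labels = np.array([9, 5, 0])
print(top_k_error(scores, labels, k=5))  # only the third label falls outside classes 5-9
print(top_k_error(scores, labels, k=1))  # only the first label is the single top class
```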

Find the ImageNet paper here .

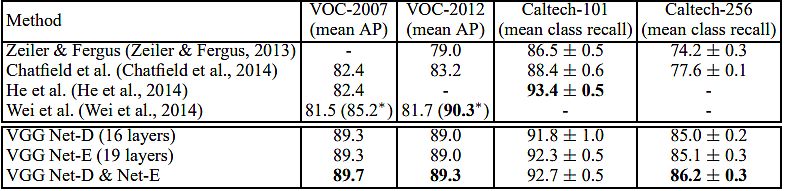

Karen Simonyan and Andrew Zisserman (University of Oxford) investigated the effect of convolutional network depth on accuracy in the large-scale image recognition setting. Their main contribution is a thorough evaluation of networks of increasing depth using an architecture with very small (3×3) convolution filters, now known as the VGG networks. They showed that a significant improvement over prior-art configurations can be achieved by pushing the depth to 16–19 weight layers.

- Their ImageNet Challenge 2014 submission secured the first and second places in the localization and classification tracks respectively.

- They showed that their representations generalize well to other datasets, where they achieved state-of-the-art results.

- They made their two best-performing ConvNet models publicly available to facilitate further research on deep visual representations in computer vision.
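The VGG paper motivates small filters with a simple calculation: a stack of three 3×3 convolution layers (stride 1) has the same 7×7 effective receptive field as a single 7×7 layer, but with C channels in and out it uses 3 · (3² C²) = 27C² weights instead of 49C², while adding more non-linearities. The sketch below reproduces that arithmetic for C = 256, an illustrative value; the helper names are hypothetical.

```python
def stacked_receptive_field(n_layers: int, k: int = 3) -> int:
    """Effective receptive field of n stacked stride-1 k x k convolutions."""
    # Each layer widens the receptive field by k - 1 pixels.
    return 1 + n_layers * (k - 1)


def conv_weights(n_layers: int, k: int, channels: int) -> int:
    """Weights of n k x k layers with C input and C output channels (biases ignored)."""
    return n_layers * k * k * channels * channels


C = 256
print(stacked_receptive_field(3))                     # 7
print(conv_weights(3, 3, C), conv_weights(1, 7, C))   # 1769472 3211264
```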

Find the VGG paper here .

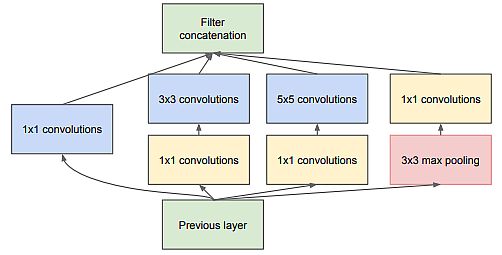

The Google team (Christian Szegedy, Wei Liu, et al.) proposed a deep convolutional neural network architecture codenamed Inception, which set the new state of the art for classification and detection in the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC14). The main hallmark of the architecture is the improved utilization of computing resources inside the network.

- A carefully crafted design that allows for increasing the depth and width of the network while keeping the computational budget constant.

- Their submission for ILSVRC14, called GoogLeNet, was a 22-layer deep network whose quality was assessed in the context of both classification and detection.

- For detection, adding 200 region proposals from MultiBox increased proposal coverage from 92% to 93%.

- Lastly, using an ensemble of 6 ConvNets to classify each region improved detection results from 40% to 43.9% mAP.
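Inception modules keep the computational budget in check by placing 1×1 convolutions in front of the expensive 3×3 and 5×5 convolutions to reduce the number of channels. The arithmetic below compares the weight count of a 5×5 convolution applied directly with one preceded by a 1×1 reduction; the channel counts are illustrative assumptions rather than a specific layer quoted from the paper.

```python
def conv_weights(in_ch: int, out_ch: int, k: int) -> int:
    """Weights of a k x k convolution (biases ignored)."""
    return k * k * in_ch * out_ch


in_ch, reduce_ch, out_ch = 192, 16, 32   # illustrative channel counts
direct = conv_weights(in_ch, out_ch, 5)
reduced = conv_weights(in_ch, reduce_ch, 1) + conv_weights(reduce_ch, out_ch, 5)
print(direct, reduced)  # 153600 15872
```

The multiply-add count per output position shrinks by the same factor (roughly 10× here), which is what lets the network grow deeper and wider at a fixed budget.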

Find the GoogLeNet paper here .

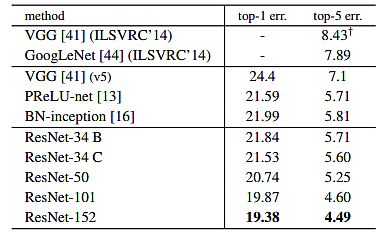

Microsoft researchers Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun presented a residual learning framework (ResNet) to ease the training of networks that are substantially deeper than those used previously. They explicitly reformulated the layers as learning residual functions with reference to the layer inputs, instead of learning unreferenced functions.

- They evaluated residual nets with a depth of up to 152 layers on ImageNet: 8× deeper than VGG nets, but still of lower complexity.

- An ensemble of these residual nets achieved 3.57% error on the ImageNet test set and won 1st place in the ILSVRC 2015 classification task.

- The team also presented analyses on CIFAR-10 with 100- and 1000-layer networks. Separately, their very deep representations yielded a 28% relative improvement on the COCO object detection dataset.

- Moreover, in the ILSVRC & COCO 2015 competitions, they won 1st place in ImageNet detection, ImageNet localization, COCO detection, and COCO segmentation.
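The residual reformulation can be illustrated with a single block: instead of learning a mapping H(x) directly, the stacked layers learn F(x) = H(x) − x, and the block outputs F(x) + x through an identity shortcut. The NumPy sketch below uses two fully connected layers in place of the paper's convolutions and omits batch normalization, so it is a simplified illustration rather than the exact ResNet block; the function names are hypothetical.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """y = relu(F(x) + x) with F(x) = w2 @ relu(w1 @ x).

    The identity shortcut requires F(x) to have the same shape as x.
    """
    residual = w2 @ relu(w1 @ x)
    return relu(residual + x)


rng = np.random.default_rng(0)
x = rng.normal(size=4)
# With zero weights, F(x) = 0 and the block passes its input through (up to the final ReLU):
# the layers only need to learn a correction to the input, not the whole mapping.
y = residual_block(x, np.zeros((4, 4)), np.zeros((4, 4)))
print(np.allclose(y, relu(x)))  # True
```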


Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun introduced the Region Proposal Network (RPN), which shares full-image convolutional features with the detection network and thus enables nearly cost-free region proposals. The RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position. They trained the RPN end-to-end to generate high-quality region proposals, which Fast R-CNN then uses for detection.

- They merged the RPN and Fast R-CNN into a single network by sharing their convolutional features. In the terminology of neural networks with “attention” mechanisms, the RPN component tells the unified network where to look.

- With the very deep VGG-16 model, the detection system ran at 5 fps on a GPU, including all steps.

- Achieved state-of-the-art object detection accuracy on PASCAL VOC 2007, 2012, and MS COCO datasets with only 300 proposals per image.

- In ILSVRC and COCO 2015 competitions, faster R-CNN and RPN were the foundations of the 1st-place winning entries in several tracks.
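At each sliding-window position, the RPN predicts proposals relative to k reference boxes called anchors; the paper's default uses 3 scales (box areas of 128², 256², and 512² pixels) and 3 aspect ratios (1:1, 1:2, 2:1), so k = 9. The NumPy sketch below generates those anchors and tiles them over a feature map with a stride of 16 pixels (the total stride of VGG-16's last convolutional layer). The half-stride centre offset and the function names are our own assumptions; implementations differ in such details.

```python
import numpy as np


def make_anchors(scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)) -> np.ndarray:
    """Return (len(scales) * len(ratios), 4) boxes (x1, y1, x2, y2) centred at the origin.

    Each anchor keeps an area of scale**2; ratio is height / width.
    """
    boxes = []
    for s in scales:
        for r in ratios:
            w = s / np.sqrt(r)
            h = s * np.sqrt(r)
            boxes.append([-w / 2, -h / 2, w / 2, h / 2])
    return np.array(boxes)


def shift_anchors(anchors: np.ndarray, feat_h: int, feat_w: int, stride: int = 16) -> np.ndarray:
    """Place a copy of every anchor at each feature-map position (assumed half-stride offset)."""
    xs = (np.arange(feat_w) + 0.5) * stride
    ys = (np.arange(feat_h) + 0.5) * stride
    cx, cy = np.meshgrid(xs, ys)
    shifts = np.stack([cx.ravel(), cy.ravel(), cx.ravel(), cy.ravel()], axis=1)
    return (shifts[:, None, :] + anchors[None, :, :]).reshape(-1, 4)


anchors = make_anchors()
boxes = shift_anchors(anchors, feat_h=2, feat_w=3)
print(anchors.shape, boxes.shape)  # (9, 4) (54, 4)
```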

Find the Faster R-CNN paper here .

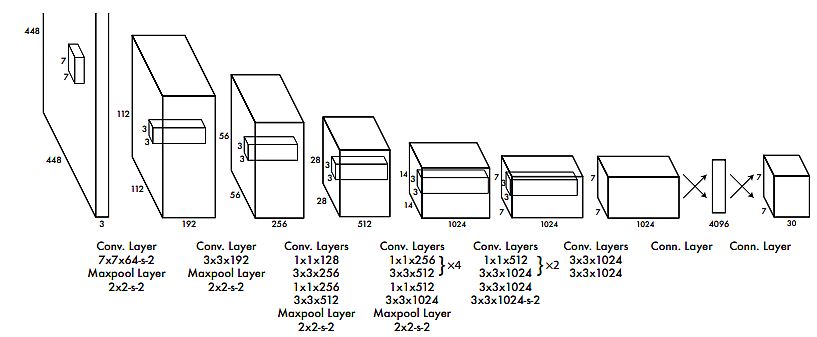

Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi developed YOLO, a new approach to object detection. Instead of repurposing classifiers to perform detection, the authors framed object detection as a regression problem to spatially separated bounding boxes and associated class probabilities. A single neural network predicts bounding boxes and class probabilities directly from full images in one evaluation. Since the whole detection pipeline is a single network, it can be optimized end-to-end directly on detection performance.

- The base YOLO model processed images in real-time at 45 frames per second.

- A smaller version of the network, Fast YOLO, processed 155 frames per second, while still achieving double the mAP of other real-time detectors.

- Compared to state-of-the-art detection systems, YOLO was making more localization errors, but was less likely to predict false positives in the background.

- YOLO learned very general representations of objects and outperformed other detection methods, including DPM and R-CNN, when generalizing from natural images to other domains such as artwork.
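YOLO divides the input image into an S × S grid; the cell containing an object's centre is responsible for detecting it, and each cell predicts B boxes (x, y, w, h, confidence) plus C class probabilities. For PASCAL VOC the paper uses S = 7, B = 2, C = 20 with a 448 × 448 input, giving a 7 × 7 × 30 output tensor. The helper functions below are a hypothetical illustration of that bookkeeping, not code from the paper.

```python
def yolo_output_shape(S: int = 7, B: int = 2, C: int = 20) -> tuple:
    """Each of the S x S cells predicts B boxes of 5 numbers plus C class probabilities."""
    return (S, S, B * 5 + C)


def responsible_cell(cx: float, cy: float, img_w: float, img_h: float, S: int = 7) -> tuple:
    """(row, col) of the grid cell containing an object's centre (cx, cy) in pixels."""
    col = min(int(cx / img_w * S), S - 1)   # clamp centres on the right or bottom edge
    row = min(int(cy / img_h * S), S - 1)
    return row, col


print(yolo_output_shape())                    # (7, 7, 30)
print(responsible_cell(300, 100, 448, 448))   # (1, 4)
```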

Find the YOLO paper here .

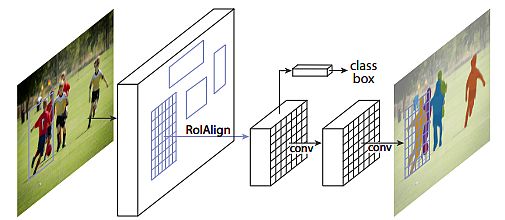

Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick (Facebook) presented a conceptually simple, flexible, and general framework for object instance segmentation. Their approach detects objects in an image while simultaneously generating a high-quality segmentation mask for each instance. The method, called Mask R-CNN, extends Faster R-CNN by adding a branch for predicting an object mask in parallel with the existing branch for bounding box recognition.

- Mask R-CNN is simple to train and adds only a small overhead to Faster R-CNN, running at 5 fps.

- It showed top results in all three tracks of the COCO suite of challenges: instance segmentation, bounding-box object detection, and person keypoint detection.

- Mask R-CNN outperformed all existing, single-model entries on every task, including the COCO 2016 challenge winners.

- The model served as a solid baseline and eased future research in instance-level recognition.
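One detail from the Mask R-CNN paper, not covered in the summary above, shows how the mask branch stays decoupled from classification: for each RoI it outputs K binary masks of size m × m (m = 28 in the paper), one per class, and the mask loss is the average per-pixel binary cross-entropy computed only on the mask of the ground-truth class, using per-pixel sigmoids rather than a softmax across classes. The NumPy sketch below implements that loss for a single RoI; the function name, shapes, and toy data are our own choices.

```python
import numpy as np


def mask_loss(mask_logits: np.ndarray, gt_mask: np.ndarray, gt_class: int) -> float:
    """Average per-pixel binary cross-entropy on the mask predicted for the ground-truth class.

    mask_logits: (K, m, m) raw scores, one m x m mask per class.
    gt_mask: (m, m) binary target mask for this RoI.
    """
    logits = mask_logits[gt_class]             # masks of the other classes do not contribute
    p = 1.0 / (1.0 + np.exp(-logits))          # per-pixel sigmoid, no competition across classes
    eps = 1e-7
    p = np.clip(p, eps, 1 - eps)               # avoid log(0)
    bce = -(gt_mask * np.log(p) + (1 - gt_mask) * np.log(1 - p))
    return float(bce.mean())


rng = np.random.default_rng(0)
gt = (rng.random((28, 28)) > 0.5).astype(float)
uninformed = np.zeros((3, 28, 28))                # p = 0.5 everywhere
confident = np.zeros((3, 28, 28))
confident[1] = np.where(gt == 1, 10.0, -10.0)     # class-1 mask agrees with the target
print(mask_loss(uninformed, gt, gt_class=1))      # ln 2, about 0.693
print(mask_loss(confident, gt, gt_class=1))       # close to 0
```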

Find the Mask R-CNN paper here .

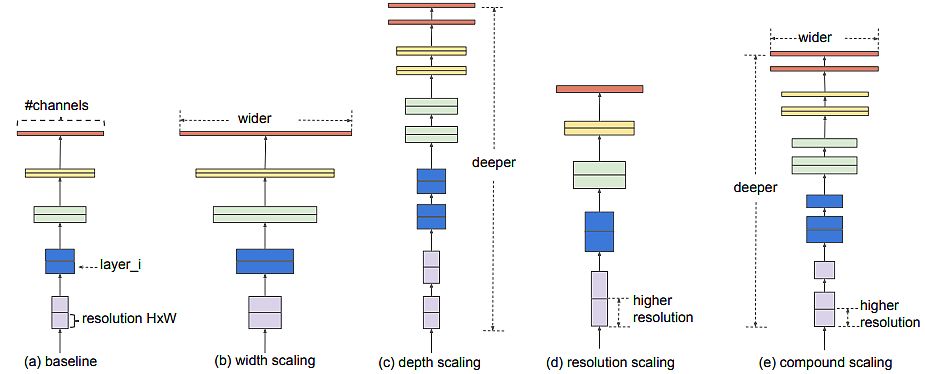

The authors of EfficientNet (Mingxing Tan and Quoc V. Le) studied model scaling and found that carefully balancing network depth, width, and resolution can lead to better performance. They proposed a new scaling method that uniformly scales all three dimensions (depth, width, and resolution) using a simple but effective compound coefficient, and demonstrated its effectiveness in scaling up MobileNets and ResNet.

- They designed a new baseline network and scaled it up to obtain a family of models, called EfficientNets, which achieve much better accuracy and efficiency than previous ConvNets.

- EfficientNet-B7 achieved state-of-the-art 84.3% top-1 accuracy on ImageNet, while being 8.4x smaller and 6.1x faster on inference than the best existing ConvNet.

- It also transferred well and achieved state-of-the-art accuracy on CIFAR-100 (91.7%), Flowers (98.8%), and 3 other transfer learning datasets, with much fewer parameters.
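In the EfficientNet paper, compound scaling sets depth d = α^φ, width w = β^φ, and resolution r = γ^φ, subject to α · β² · γ² ≈ 2 (with α, β, γ ≥ 1), so total FLOPS grow by roughly 2^φ. A small grid search on the baseline gave α = 1.2, β = 1.1, γ = 1.15. The sketch below simply evaluates those multipliers; the function name and dictionary layout are hypothetical.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15   # coefficients reported for the EfficientNet-B0 baseline


def compound_scale(phi: float) -> dict:
    """Depth, width and resolution multipliers for compound coefficient phi."""
    return {
        "depth": ALPHA ** phi,
        "width": BETA ** phi,
        "resolution": GAMMA ** phi,
        # FLOPS of a ConvNet grow roughly with depth * width**2 * resolution**2.
        "flops": (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi,
    }


print(round(ALPHA * BETA ** 2 * GAMMA ** 2, 2))  # 1.92, close to the target of 2
print(compound_scale(2))
```

Width and resolution are squared in the FLOPS term because doubling either one roughly quadruples the work of a convolution layer, while doubling depth only doubles it.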


The application of deep learning in computer vision


- Review Article

- Open access

- Published: 08 January 2021

Deep learning-enabled medical computer vision

Andre Esteva (ORCID: 0000-0003-1937-9682), Katherine Chou, Serena Yeung, Nikhil Naik (ORCID: 0000-0002-5191-2726), Ali Madani, Ali Mottaghi, Yun Liu (ORCID: 0000-0003-4079-8275), Eric Topol, Jeff Dean & Richard Socher

npj Digital Medicine volume 4 , Article number: 5 ( 2021 ) Cite this article


- Computational science

- Health care

- Medical research

A decade of unprecedented progress in artificial intelligence (AI) has demonstrated the potential for many fields, including medicine, to benefit from the insights that AI techniques can extract from data. Here we survey recent progress in the development of modern computer vision techniques, powered by deep learning, for medical applications, focusing on medical imaging, medical video, and clinical deployment. We start by briefly summarizing a decade of progress in convolutional neural networks, including the vision tasks they enable, in the context of healthcare. Next, we discuss several example medical imaging applications that stand to benefit (including cardiology, pathology, dermatology, and ophthalmology) and propose new avenues for continued work. We then expand into general medical video, highlighting ways in which clinical workflows can integrate computer vision to enhance care. Finally, we discuss the challenges and hurdles that must be overcome for real-world clinical deployment of these technologies.


Introduction.

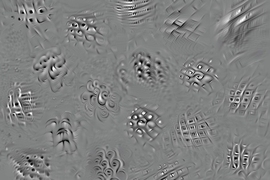

Computer vision (CV) has a rich history spanning decades 1 of efforts to enable computers to perceive visual stimuli meaningfully. Machine perception spans a range of levels, from low-level tasks such as identifying edges to high-level tasks such as understanding complete scenes. Advances in the last decade have largely been due to three factors: (1) the maturation of deep learning (DL), a type of machine learning that enables end-to-end learning of very complex functions from raw data 2 ; (2) strides in localized compute power via GPUs 3 ; and (3) the open-sourcing of large labeled datasets with which to train these algorithms 4 . The combination of these three elements has given individual researchers access to the resources needed to advance the field. As the research community grew exponentially, so did progress.

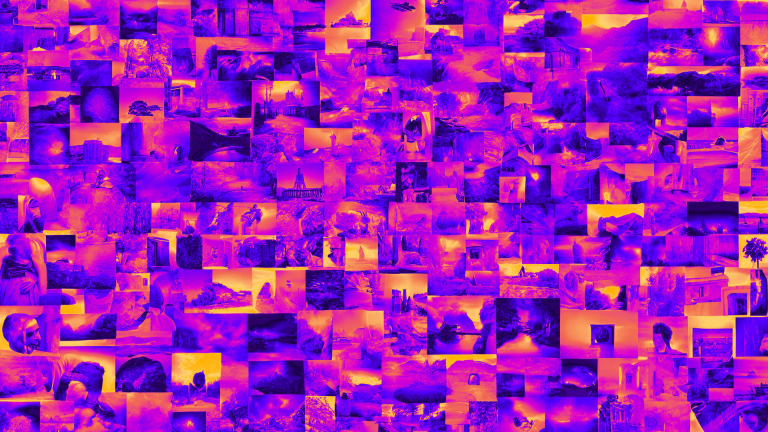

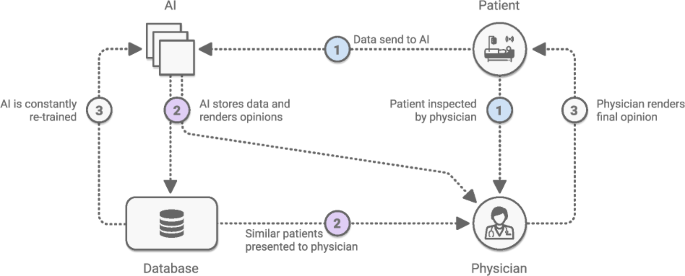

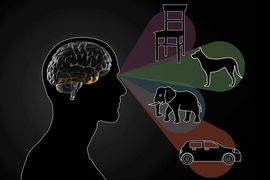

The growth of modern CV has overlapped with the generation of large amounts of digital data in a number of scientific fields. Recent medical advances have been prolific 5 , 6 , owing largely to DL’s remarkable ability to learn many tasks from most data sources. Using large datasets, CV models can acquire many pattern-recognition abilities—from physician-level diagnostics 7 to medical scene perception 8 . See Fig. 1 .

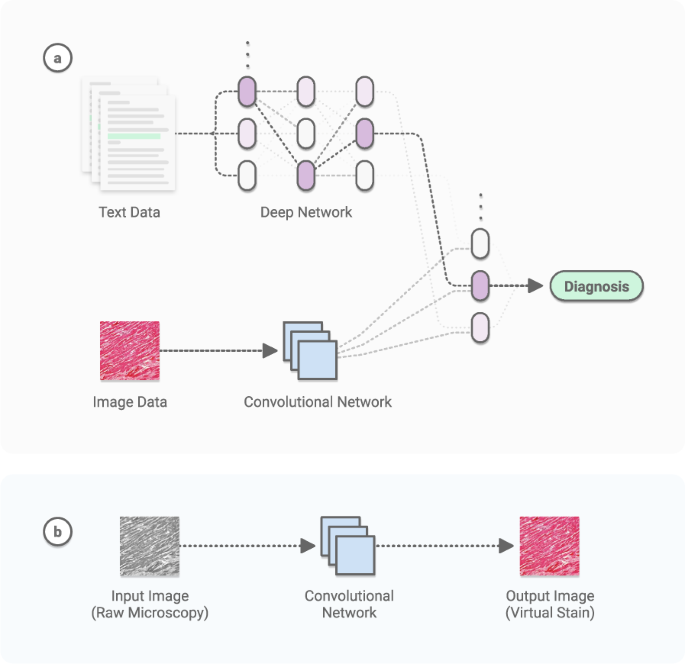

a Multimodal discriminative model. Deep learning architectures can be constructed to jointly learn from both image data, typically with convolutional networks, and non-image data, typically with general deep networks. Learned annotations can include disease diagnostics, prognostics, clinical predictions, and combinations thereof. b Generative model. Convolutional neural networks can be trained to generate images. Tasks include image-to-image regression (shown), super-resolution image enhancement, novel image generation, and others.

Here we survey the intersection of CV and medicine, focusing on research in medical imaging, medical video, and real clinical deployment. We discuss key algorithmic capabilities which unlocked these opportunities, and dive into the myriad of accomplishments from recent years. The clinical tasks suitable for CV span many categories, such as screening, diagnosis, detecting conditions, predicting future outcomes, segmenting pathologies from organs to cells, monitoring disease, and clinical research. Throughout, we consider the future growth of this technology and its implications for medicine and healthcare.

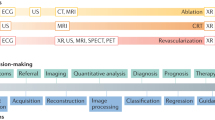

Computer vision

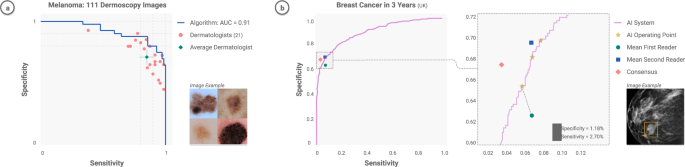

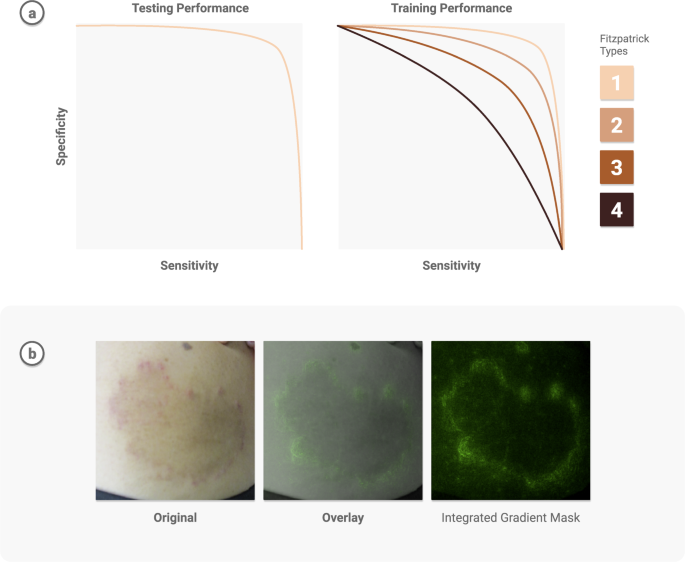

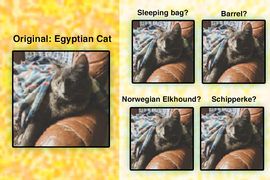

Object classification, localization, and detection refer, respectively, to identifying the type of an object in an image, the location of the objects present, and both type and location simultaneously. The ImageNet Large-Scale Visual Recognition Challenge 9 (ILSVRC) spearheaded progress in these tasks over the last decade. It created a large community of DL researchers competing and collaborating to improve techniques on various CV tasks. The first contemporary, GPU-powered DL approach, in 2012 10 , marked an inflection point in the growth of this community, heralding an era of significant year-over-year improvements 11 , 12 , 13 , 14 through the competition’s final year in 2017. Notably, classification accuracy reached human-level performance during this period. Within medicine, fine-grained versions of these methods 15 have been successfully applied to the classification and detection of many diseases (Fig. 2 ). Given sufficient data, their accuracy often matches or surpasses that of expert physicians 7 , 16 . Similarly, object segmentation has substantially improved 17 , 18 , particularly in challenging scenarios such as the biomedical segmentation of multiple types of overlapping cells in microscopy. The key DL technique leveraged in these tasks is the convolutional neural network 19 (CNN), a type of DL algorithm that hardcodes translational invariance, a key feature of image data. Many other CV tasks have benefited from this progress, including image registration (identifying corresponding points across similar images), image retrieval (finding similar images), and image reconstruction and enhancement. The specific challenges of working with medical data require the use of many types of AI models.
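Strictly speaking, a convolution layer is translation-equivariant (shifting the input shifts the feature map by the same amount), and pooling over positions is what adds invariance. The small NumPy check below illustrates both properties on a toy image; the image, kernel, and shift are arbitrary choices for illustration, and the shift is small enough that nothing wraps around the border.

```python
import numpy as np


def conv2d_valid(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Plain 'valid' 2D cross-correlation, the operation CNN layers compute."""
    kh, kw = kernel.shape
    out = np.zeros((img.shape[0] - kh + 1, img.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(img[i:i + kh, j:j + kw] * kernel)
    return out


img = np.zeros((10, 10))
img[2:4, 2:4] = 1.0                                   # small bright square
shifted = np.roll(img, shift=(3, 2), axis=(0, 1))     # same square, 3 down and 2 right
kernel = np.array([[1.0, -1.0], [1.0, -1.0]])         # simple vertical-edge detector

a, b = conv2d_valid(img, kernel), conv2d_valid(shifted, kernel)
# Equivariance: the feature map moves by the same offset as the input.
print(np.allclose(np.roll(a, shift=(3, 2), axis=(0, 1)), b))  # True
# Invariance: a global max over the feature map ignores the shift entirely.
print(a.max() == b.max())                                     # True
```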

CNNs—trained to classify disease states—have been extensively tested across diseases, and benchmarked against physicians. Their performance is typically on par with experts when both are tested on the same image classification task. a Dermatology 7 and b Radiology 156 . Examples reprinted with permission and adapted for style.

These techniques largely rely on supervised learning, which leverages datasets that contain both data points (e.g. images) and data labels (e.g. object classes). Given the sparsity of medical data and the difficulty of accessing it, transfer learning, in which an algorithm is first trained on a large, unrelated corpus (e.g. ImageNet 4 ) and then fine-tuned on a dataset of interest (e.g. medical images), has been critical for progress. To reduce the costs of collecting and labeling data, techniques for generating synthetic data, such as data augmentation 20 and generative adversarial networks (GANs) 21 , are being developed. Researchers have even shown that crowd-sourced image annotations can yield effective medical algorithms 22 , 23 . Recently, self-supervised learning 24 , in which implicit labels are extracted from the data points themselves and used to train algorithms (e.g. predicting the spatial arrangement of tiles generated by splitting an image into pieces), has pushed the field towards fully unsupervised learning, which requires no labels. Applying these techniques in medicine will lower the barrier to development and deployment.
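The tile-arrangement pretext task mentioned above can be sketched as follows: cut an image into a 3 × 3 grid, shuffle the tiles, and keep the permutation as a free label that a network could be trained to predict. This is a simplified, hypothetical illustration (the function name and grid size are assumptions); no network is trained here, and published jigsaw methods differ in details such as which permutations they use.

```python
import numpy as np


def jigsaw_example(img: np.ndarray, grid: int = 3, rng=None):
    """Cut img into grid x grid tiles, shuffle them, and return (shuffled_tiles, permutation).

    The permutation is the 'label' obtained for free from the unlabeled image.
    """
    if rng is None:
        rng = np.random.default_rng()
    th, tw = img.shape[0] // grid, img.shape[1] // grid
    tiles = [img[r * th:(r + 1) * th, c * tw:(c + 1) * tw]
             for r in range(grid) for c in range(grid)]
    perm = rng.permutation(grid * grid)
    shuffled = np.stack([tiles[i] for i in perm])   # shuffled[k] is original tile perm[k]
    return shuffled, perm


img = np.arange(81).reshape(9, 9)
tiles, perm = jigsaw_example(img, rng=np.random.default_rng(0))
restored = tiles[np.argsort(perm)]                  # undo the shuffle using the label
print(tiles.shape, np.array_equal(restored[0], img[0:3, 0:3]))  # (9, 3, 3) True
```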

Medical data access is central to this field, and key ethical and legal questions must be addressed. Do patients own their de-identified data? What if methods to re-identify data improve over time? Should the community open-source large quantities of data? To date, academia and industry have largely relied on small, open-source datasets, and data collected through commercial products. Dynamics around data sharing and country-specific availability will impact deployment opportunities. The field of federated learning 25 —in which centralized algorithms can be trained on distributed data that never leaves protected enclosures—may enable a workaround in stricter jurisdictions.
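The article does not specify an aggregation algorithm; one widely used choice is federated averaging (FedAvg), where each site trains locally and a server averages the resulting parameters, weighted by local dataset size. The sketch below shows only that aggregation step in NumPy; the site names, dataset sizes, and function name are hypothetical, and real deployments add secure communication and many training rounds.

```python
import numpy as np


def federated_average(client_weights: list, client_sizes: list) -> list:
    """Average each parameter array across clients, weighted by local dataset size.

    client_weights: one list of numpy arrays (the model parameters) per client.
    client_sizes: number of local training examples at each client.
    Only parameters reach the server; the raw data stays at each site.
    """
    total = float(sum(client_sizes))
    n_params = len(client_weights[0])
    return [
        sum(w[p] * (n / total) for w, n in zip(client_weights, client_sizes))
        for p in range(n_params)
    ]


# Two hypothetical hospitals sharing a one-layer model (weight matrix + bias).
hospital_a = [np.ones((2, 2)), np.zeros(2)]
hospital_b = [np.full((2, 2), 3.0), np.ones(2)]
global_model = federated_average([hospital_a, hospital_b], client_sizes=[100, 300])
print(global_model[0])  # every entry is 0.25 * 1 + 0.75 * 3 = 2.5
```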

These advances have spurred growth in other domains of CV, such as multimodal learning, which combines vision with other modalities such as language (Fig. 1a ) 26 , time-series data, and genomic data 5 . These methods can combine with 3D vision 27 , 28 to turn depth-cameras into privacy-preserving sensors 29 , making deployment easier for patient settings such as the intensive care unit 8 . The range of tasks is even broader in video. Applications like activity recognition 30 and live scene understanding 31 are useful in detecting and responding to important or adverse clinical events 32 .

Medical imaging

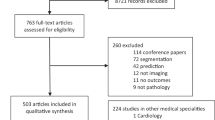

In recent years the number of publications applying computer vision techniques to static medical imagery has grown from hundreds to thousands 33 . A few areas have received substantial attention—radiology, pathology, ophthalmology, and dermatology—owing to the visual pattern-recognition nature of diagnostic tasks in these specialities, and the growing availability of highly structured images.

The unique characteristics of medical imagery pose a number of challenges to DL-based computer vision. For one, images can be massive. Digitizing histopathology slides produces gigapixel images of around 100,000 × 100,000 pixels, whereas typical CNN image inputs are around 200 × 200 pixels. Further, different chemical preparations will render different slides for the same piece of tissue, and different digitization devices or settings may produce different images for the same slide. Radiology modalities such as CT and MRI render equally massive 3D images, forcing standard CNNs either to work with a set of 2D slices or to adjust their internal structure to process in 3D. Similarly, ultrasound renders a time series of noisy 2D slices of a 3D context: slices that are spatially correlated but not aligned. DL has started to account for the unique challenges of medical data. For instance, multiple-instance learning (MIL) 34 enables learning from datasets containing massive images and few labels (e.g. histopathology). 3D convolutions in CNNs are enabling better learning from 3D volumes (e.g. MRI and CT) 35 . Spatio-temporal models 36 and image registration enable working with time-series images (e.g. ultrasound).
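To make the scale mismatch concrete: tiling a 100,000 × 100,000 slide into non-overlapping 200 × 200 inputs yields 500 × 500 = 250,000 tiles. Multiple-instance learning treats the slide as a bag of such tiles that carries only a slide-level label. A common (but not the only) aggregation is max pooling over tile scores, on the assumption that a slide is positive if at least one tile is. The sketch below uses a hypothetical score_tile callable in place of a trained CNN.

```python
import numpy as np


def tile_count(height: int, width: int, tile: int = 200) -> int:
    """Number of non-overlapping tiles, dropping partial tiles at the border."""
    return (height // tile) * (width // tile)


def iter_tiles(slide: np.ndarray, tile: int):
    """Yield non-overlapping tile x tile crops, row by row."""
    for y in range(0, slide.shape[0] - tile + 1, tile):
        for x in range(0, slide.shape[1] - tile + 1, tile):
            yield slide[y:y + tile, x:x + tile]


def mil_max_pool(slide: np.ndarray, tile: int, score_tile) -> float:
    """Slide-level score = highest tile score (standard multiple-instance assumption)."""
    return max(score_tile(t) for t in iter_tiles(slide, tile))


print(tile_count(100_000, 100_000))  # 250000 tiles of 200 x 200

# Toy example: a 600 x 600 "slide" with one bright region and a mean-intensity scorer.
slide = np.zeros((600, 600))
slide[200:400, 400:600] = 1.0
print(mil_max_pool(slide, 200, score_tile=lambda t: float(t.mean())))  # 1.0
```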

Dozens of companies have obtained US FDA and European CE approval for medical imaging AI 37 , and commercial markets have begun to form as sustainable business models are created. For instance, regions of high-throughput healthcare, such as India and Thailand, have welcomed the deployment of technologies such as diabetic retinopathy screening systems 38 . This rapid growth has now reached the point of directly impacting patient outcomes—the US CMS recently approved reimbursement for a radiology stroke triage use-case which reduces the time it takes for patients to receive treatment 39 .

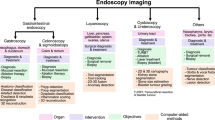

CV in medical modalities with non-standardized data collection requires the integration of CV into existing physical systems. For instance, in otolaryngology, CNNs can be used to help primary care physicians manage patients’ ears, nose, and throat 40 , through mountable devices attached to smartphones 41 . Hematology and serology can benefit from microscope-integrated AIs 42 that diagnose common conditions 43 or count blood cells of various types 44 , repetitive tasks that are easy to augment with CNNs. AI in gastroenterology has demonstrated stunning capabilities. Video-based CNNs can be integrated into endoscopic procedures 45 for scope guidance, lesion detection, and lesion diagnosis. Applications include esophageal cancer screening 46 , detecting gastric cancer 47 , 48 , detecting stomach infections such as H. pylori 49 , and even finding hookworms 50 . Scientists have taken this field one step further by building entire medical AI devices designed for monitoring, such as at-home smart toilets outfitted with diagnostic CNNs on cameras 51 . Beyond the analysis of disease states, CV can serve the future of human health and welfare through applications such as screening human embryos for implantation 52 .

Computer vision in radiology is so prevalent that it has quickly burgeoned into its own field of research, growing a corpus of work 53 , 54 , 55 that extends into all modalities, with a focus on X-rays, CT, and MRI. Chest X-ray analysis, a key clinical focus area 33 , has been an exemplar. The field has collected nearly 1 million annotated, open-source images 56 , 57 , 58 , the closest ImageNet 9 equivalent to date in medical CV. Analysis of brain imagery 59 (particularly for time-critical use cases like stroke) and of abdominal imagery 60 has similarly received substantial attention. Disease classification, nodule detection 61 , and region segmentation (e.g. ventricular 62 ) models have been developed for most conditions for which data can be collected. This has enabled the field to respond rapidly in times of crisis, for instance by developing and deploying COVID-19 detection models 63 . The field continues to expand with work in image translation (e.g. converting noisy ultrasound images into MRI), image reconstruction and enhancement (e.g. converting low-dosage, low-resolution CT images into high-resolution images 64 ), automated report generation, and temporal tracking (e.g. image registration to track tumor growth over time). In the sections below, we explore vision-based applications in other specialties.

Cardiac imaging is increasingly used in a wide array of clinical diagnoses and workflows. Key clinical applications for deep learning include diagnosis and screening. The most common imaging modality in cardiovascular medicine is the cardiac ultrasound, or echocardiogram. As a cost-effective, radiation-free technique, echocardiography is uniquely suited for DL due to straightforward data acquisition and interpretation; it is routinely used in most acute inpatient facilities, outpatient centers, and emergency rooms 65 . Further, 3D imaging techniques such as CT and MRI are used to understand cardiac anatomy and to better characterize supply-demand mismatch. CT segmentation algorithms have even been FDA-cleared for coronary artery visualization 66 .

There are many example applications. DL models trained on a large database of echocardiographic studies can surpass the performance of board-certified echocardiographers in view classification 67 . Computational DL pipelines can assess hypertrophic cardiomyopathy, cardiac amyloidosis, and pulmonary arterial hypertension 68 . EchoNet 69 , a deep learning model that can recognize cardiac structures, estimate function, and predict systemic phenotypes that are not readily identifiable to human interpretation, has recently furthered the field.

To address challenges around data access, data-efficient echocardiogram algorithms 70 have been developed, such as semi-supervised GANs that are effective at downstream tasks (e.g. predicting left ventricular hypertrophy). Because most studies use privately held medical imaging datasets, 10,000 annotated echocardiogram videos were recently open-sourced 36 . Alongside this release, a video-based model, EchoNet-Dynamic 36 , was developed. It can estimate ejection fraction and assess cardiomyopathy, and it was accompanied by a comprehensive evaluation on an external dataset and against human experts.
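Ejection fraction, the quantity EchoNet-Dynamic estimates, has a standard clinical definition: the share of the end-diastolic left ventricular volume (EDV) that is ejected by end-systole (ESV), EF = (EDV − ESV) / EDV × 100%. The sketch below computes it from volumes. The helper `ef_from_volume_trace` is hypothetical and assumes a per-frame volume trace spanning at least one full cardiac cycle, so that EDV and ESV are its maximum and minimum; this is a simplification for illustration, not how EchoNet-Dynamic itself is implemented.

```python
from typing import Sequence


def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """EF (%) = (EDV - ESV) / EDV * 100."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("end-systolic volume must lie in [0, EDV]")
    return 100.0 * (edv_ml - esv_ml) / edv_ml


def ef_from_volume_trace(volumes_ml: Sequence[float]) -> float:
    """Hypothetical helper: assumes the trace covers >= 1 full beat, so the largest
    volume is end-diastole and the smallest is end-systole."""
    if not volumes_ml:
        raise ValueError("empty volume trace")
    return ejection_fraction(max(volumes_ml), min(volumes_ml))


print(round(ejection_fraction(120.0, 50.0), 1))  # 58.3
```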

Pathologists play a key role in cancer detection and treatment. Pathological analysis, based on visual inspection of tissue samples under a microscope, is inherently subjective. Differences in visual perception and clinical training can lead to inconsistencies in diagnostic and prognostic opinions 71 , 72 , 73 . Here, DL can support critical medical tasks, including diagnostics, prognostication of outcomes and treatment response, pathology segmentation, disease monitoring, and so forth.

Recent years have seen the adoption of sub-micron-level resolution tissue scanners that capture gigapixel whole-slide images (WSI) 74 . This development, coupled with advances in CV, has led to research and commercialization activity in AI-driven digital histopathology 75 . This field has the potential to (i) overcome limitations of human visual perception and cognition by improving the efficiency and accuracy of routine tasks, (ii) develop new signatures of disease and therapy from morphological structures invisible to the human eye, and (iii) combine pathology with radiological, genomic, and proteomic measurements to improve diagnosis and prognosis 76 .

One thread of research has focused on automating the routine, time-consuming task of localization and quantification of morphological features. Examples include the detection and classification of cells, nuclei, and mitoses 77 , 78 , 79 , and the localization and segmentation of histological primitives such as nuclei, glands, ducts, and tumors 80 , 81 , 82 , 83 . These methods typically require expensive manual annotation of tissue components by pathologists as training data.

Another research avenue focuses on direct diagnostics 84 , 85 , 86 and prognostics 87 , 88 from WSI or tissue microarrays (TMA) for a variety of cancers, such as breast, prostate, and lung cancer. Studies have even shown that morphological features captured by a hematoxylin and eosin (H&E) stain are predictive of molecular biomarkers utilized in theragnosis 85 , 89 . While histopathology slides digitize into massive, data-rich gigapixel images, region-level annotations are sparse and expensive. To help overcome this challenge, the field has developed DL algorithms based on multiple-instance learning 90 that use slide-level “weak” annotations and exploit the sheer size of these images for improved performance.
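The weak-supervision idea can be sketched under the standard MIL assumption: a slide (the "bag") is positive if at least one of its tiles (the "instances") is positive, so the slide score is the maximum tile score and the loss uses only the slide-level label. The tile probabilities below are plain numbers standing in for a tile-level CNN's outputs, the function names are hypothetical, and max-pooling is only one of several MIL pooling choices (attention-based pooling is a common alternative).

```python
import math
from typing import List, Sequence


def bag_probability(tile_probs: Sequence[float]) -> float:
    """Standard MIL assumption: a slide is positive if any tile is, so max-pool."""
    if not tile_probs:
        raise ValueError("a slide must contain at least one tile")
    return max(tile_probs)


def slide_bce(tile_probs: Sequence[float], slide_label: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy computed against the slide-level ("weak") label only."""
    p = min(max(bag_probability(tile_probs), eps), 1.0 - eps)  # clip to avoid log(0)
    return -(slide_label * math.log(p) + (1 - slide_label) * math.log(1.0 - p))


def top_k_tiles(tile_probs: Sequence[float], k: int = 1) -> List[int]:
    """Indices of the k highest-scoring tiles in the slide."""
    return sorted(range(len(tile_probs)), key=lambda i: tile_probs[i], reverse=True)[:k]


tile_probs = [0.05, 0.92, 0.30, 0.10]  # stand-ins for a tile-level CNN's outputs
print(bag_probability(tile_probs), top_k_tiles(tile_probs, 2))
```

With max-pooling, only the top-scoring tile of each slide influences the loss, which is why some pipelines retrain the tile classifier on the highest-scoring tiles that `top_k_tiles` selects.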

The data abundance of this domain has further enabled tasks such as virtual staining 91 , in which models are trained to predict one type of image (e.g. a stained image) from another (e.g. a raw microscopy image). See Fig. 1b . Moving forward, AI algorithms that learn to perform diagnosis, prognosis, and theragnosis using digital pathology image archives and annotations readily available from electronic health records have the potential to transform the fields of pathology and oncology.

Dermatology

The key clinical tasks for DL in dermatology include lesion-specific differential diagnostics, finding concerning lesions amongst many benign lesions, and helping track lesion growth over time 92 . A series of works has demonstrated that CNNs can match the performance of board-certified dermatologists at distinguishing malignant skin lesions from benign ones 7 , 93 , 94 . These studies have tested increasing numbers of dermatologists (25 in ref. 7 , 57 in ref. 93 , and 157 in ref. 94 ), consistently demonstrating classification sensitivity and specificity that match or even exceed physician levels. These studies were largely restricted to the binary task of discerning benign from malignant cutaneous lesions, distinguishing either melanomas from nevi or carcinomas from seborrheic keratoses.
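Sensitivity and specificity come from the confusion matrix at a chosen decision threshold. One common way to compare a classifier with physicians, sketched below under that assumption, is to sweep the threshold to obtain the classifier's operating points and check whether a dermatologist's single (sensitivity, specificity) point is matched or exceeded by one of them. The labels and scores are toy values, and the helper names are hypothetical.

```python
from typing import List, Sequence, Tuple


def sensitivity_specificity(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP). Label 1 = malignant."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one malignant and one benign case")
    return tp / (tp + fn), tn / (tn + fp)


def roc_points(y_true: Sequence[int], scores: Sequence[float]) -> List[Tuple[float, float]]:
    """Operating points from thresholding the classifier score at each distinct value."""
    points = []
    for thr in sorted(set(scores), reverse=True):
        y_pred = [1 if s >= thr else 0 for s in scores]
        points.append(sensitivity_specificity(y_true, y_pred))
    return points


def matches_or_exceeds(points: List[Tuple[float, float]], reader: Tuple[float, float]) -> bool:
    """True if some operating point is at least as sensitive AND as specific as the reader."""
    r_sens, r_spec = reader
    return any(s >= r_sens and sp >= r_spec for s, sp in points)


y_true = [1, 1, 0, 0]          # toy labels (1 = malignant)
scores = [0.9, 0.4, 0.6, 0.1]  # toy classifier malignancy scores
points = roc_points(y_true, scores)
print(points)
```

This point-by-point check is stricter than asking whether a reader falls below the interpolated ROC curve, which reader studies often plot instead.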

Recently, this line of work has expanded to encompass differential diagnostics across dozens of skin conditions 95 , including non-neoplastic lesions such as rashes and genetic conditions, and incorporating non-visual metadata (e.g. patient demographics) as classifier inputs 96 . These works have been catalyzed by open-access image repositories and AI challenges that encourage teams to compete on predetermined benchmarks 97 .
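A simple way to feed non-visual metadata to a classifier, shown here as a hedged sketch rather than the method of the cited work, is late fusion: encode demographics as numbers, concatenate them with the CNN's image embedding, and apply a linear layer plus softmax over the candidate skin conditions. The body-site vocabulary, age scaling, embedding values, and weights below are all hypothetical placeholders.

```python
import math
from typing import List, Sequence

# Hypothetical body-site vocabulary; real datasets define their own.
SITES = ("head_neck", "trunk", "upper_limb", "lower_limb")


def encode_metadata(age_years: float, sex: str, site: str) -> List[float]:
    """Scale age to roughly [0, 1] and one-hot encode sex and body site."""
    age = age_years / 100.0  # crude scaling, an assumption for illustration
    sex_vec = [float(sex == "female"), float(sex == "male")]
    site_vec = [float(site == s) for s in SITES]
    return [age] + sex_vec + site_vec


def fuse(image_embedding: Sequence[float], metadata: Sequence[float]) -> List[float]:
    """Late fusion by concatenating image features and metadata features."""
    return list(image_embedding) + list(metadata)


def softmax_linear(features: Sequence[float], weights: Sequence[Sequence[float]],
                   biases: Sequence[float]) -> List[float]:
    """One weight row per class; returns class probabilities."""
    if any(len(row) != len(features) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    logits = [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


image_embedding = [0.2, -0.1, 0.7, 0.05]  # stand-in for a CNN feature vector
x = fuse(image_embedding, encode_metadata(54, "female", "trunk"))
weights = [[0.1] * len(x), [-0.1] * len(x), [0.0] * len(x)]  # 3 hypothetical classes
probs = softmax_linear(x, weights, [0.0, 0.0, 0.0])
print(probs)
```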

Incorporating these algorithms into clinical workflows would allow them to support other key tasks, including large-scale detection of malignancies in patients with many lesions, and tracking lesions across images in order to capture temporal features, such as growth and color changes. This area remains relatively unexplored; initial work has jointly trained CNNs to detect and track lesions 98 .
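Tracking requires associating detections from two visits before growth can be measured. The sketch below assumes the two images are already registered to a common coordinate frame (a strong assumption for handheld skin photographs), represents each lesion as an axis-aligned box, matches boxes greedily by intersection-over-union (IoU), and reports the area ratio of a matched pair. Greedy IoU matching is a simple stand-in, not the joint detection-and-tracking model of the cited work, and the 0.3 threshold is arbitrary.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in registered image coordinates


def box_area(b: Box) -> float:
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter = box_area((max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3])))
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def match_lesions(prev: Sequence[Box], curr: Sequence[Box],
                  min_iou: float = 0.3) -> List[Tuple[int, int]]:
    """Greedy one-to-one matching: accept the highest-IoU pairs first."""
    candidates = sorted(
        ((iou(p, c), i, j) for i, p in enumerate(prev) for j, c in enumerate(curr)),
        reverse=True,
    )
    used_prev, used_curr, matches = set(), set(), []
    for score, i, j in candidates:
        if score < min_iou:
            break  # all remaining pairs overlap too little
        if i in used_prev or j in used_curr:
            continue
        used_prev.add(i)
        used_curr.add(j)
        matches.append((i, j))
    return matches


def area_growth(prev_box: Box, curr_box: Box) -> float:
    """Ratio of current to previous lesion area (> 1 means the lesion grew)."""
    return box_area(curr_box) / box_area(prev_box)


prev = [(0, 0, 10, 10), (50, 50, 60, 60)]   # visit 1 detections
curr = [(51, 51, 63, 63), (1, 1, 11, 11)]   # visit 2 detections, different order
for i, j in match_lesions(prev, curr):
    print(i, j, area_growth(prev[i], curr[j]))
```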

Ophthalmology

Ophthalmology has seen a significant uptick in AI efforts in recent years, with dozens of papers demonstrating clinical diagnostic and analytical capabilities that extend beyond current human capability 99 , 100 , 101 . The potential clinical impact is significant 102 , 103 : the portability of the machinery used to inspect the eye means that pop-up clinics and telemedicine could be used to distribute testing sites to underserved areas. The field depends largely on fundus imaging and optical coherence tomography (OCT) to diagnose and manage patients.

CNNs can accurately diagnose a number of conditions. Diabetic retinopathy—a condition in which blood vessels in the eyes of diabetic patients “leak” and can lead to blindness—has been extensively studied. CNNs consistently demonstrate physician-level grading from fundus photographs 104 , 105 , 106 , 107 , which has led to a recent US FDA-cleared system 108 . Similarly, they can diagnose or predict the progression of center-involved diabetic macular edema 109 , age-related macular degeneration 107 , 110 , glaucoma 107 , 111 , manifest visual field loss 112 , childhood blindness 113 , and others.

The eyes contain a number of features that are not interpretable by humans yet carry meaningful medical information, and CNNs can pick up on them. Remarkably, it was shown that CNNs can predict a number of cardiovascular and diabetic risk factors from fundus photographs 114 , including age, gender, smoking, hemoglobin A1c, body-mass index, systolic blood pressure, and diastolic blood pressure. CNNs can also pick up signs of anemia 115 and chronic kidney disease 116 from fundus photographs. This presents an exciting opportunity for future AI studies predicting nonocular information from eye images. It could lead to a paradigm shift in care in which eye exams screen patients for both ocular and nonocular disease, a capability that is currently limited for human physicians.

Medical video

Surgical applications.

CV may provide significant utility in procedural fields such as surgery and endoscopy. Key clinical applications for deep learning include enhancing surgeon performance through real-time contextual awareness 117 , skills assessments, and training. Early studies have begun pursuing these objectives, primarily in video-based robotic and laparoscopic surgery, where a number of works propose methods for detecting surgical tools and actions 118 , 119 , 120 , 121 , 122 , 123 , 124 . Some studies analyze tool movement or other cues to assess surgeon skill 119 , 121 , 123 , 124 , using established ratings such as the Global Operative Assessment of Laparoscopic Skills (GOALS) criteria for laparoscopic surgery 125 . Another line of work uses CV to recognize distinct phases of surgery during operations, towards developing context-aware computer assistance systems 126 , 127 . CV is also starting to emerge in open surgery settings 128 , which account for a significant volume of procedures. The challenge here lies in the diversity of video capture viewpoints (e.g., head-mounted, side-view, and overhead cameras) and types of surgeries. For all types of surgical video, translating CV analysis into tools and applications that can improve patient outcomes is a natural next direction of research.
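Per-frame phase classifiers tend to flicker between phases, whereas real surgical phases are long contiguous segments. A minimal sketch, assuming per-frame labels already produced by some CNN, smooths them with a sliding-window majority vote and then collapses them into (phase, start, end) segments. The phase labels and window size are hypothetical; published systems often rely on learned temporal models (e.g. recurrent or temporal-convolutional networks) rather than this heuristic.

```python
from collections import Counter
from typing import Hashable, List, Sequence, Tuple


def smooth_phases(frame_labels: Sequence[Hashable], window: int = 5) -> List[Hashable]:
    """Sliding-window majority vote; on a tie the frame keeps its own label."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    out = []
    for i, label in enumerate(frame_labels):
        lo, hi = max(0, i - half), min(len(frame_labels), i + half + 1)
        counts = Counter(frame_labels[lo:hi])
        best = max(counts.values())
        out.append(label if counts[label] == best else counts.most_common(1)[0][0])
    return out


def segments(labels: Sequence[Hashable]) -> List[Tuple[Hashable, int, int]]:
    """Run-length encode labels into (phase, start_frame, end_frame_exclusive)."""
    segs, start = [], 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            segs.append((labels[start], start, i))
            start = i
    return segs


raw = ["A", "A", "A", "B", "A", "A", "B", "B", "B", "B"]  # "A", "B": hypothetical phases
print(segments(smooth_phases(raw, window=5)))  # [('A', 0, 5), ('B', 5, 10)]
```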

Human activity

CV can recognize human activity in physical spaces, such as hospitals and clinics, for a range of “ambient intelligence” applications. Ambient intelligence refers to a continuous, non-invasive awareness of activity in a physical space that can provide clinicians, nurses, and other healthcare workers with assistance such as patient monitoring, automated documentation, and monitoring for protocol compliance (Fig. 3 ). In hospitals, for example, early works have demonstrated CV-based ambient intelligence in intensive care units to monitor for safety-critical behaviors such as hand hygiene activity 32 and patient mobilization 8 , 129 , 130 . CV has also been developed for the emergency department, to transcribe procedures performed during the resuscitation of a patient 131 , and for the operating room (OR), to recognize activities for workflow optimization 132 . At the hospital operations level, CV can be a scalable and detailed form of labor and resource measurement that improves resource allocation for optimal care 133 .
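Once a vision model emits discrete events, protocol-compliance monitoring reduces to bookkeeping. The sketch below assumes a hypothetical event stream of (timestamp in seconds, person ID, event type) tuples, where the event types `"dispenser"` and `"room_entry"` come from an upstream CV detector, and counts a room entry as compliant if the same person used a dispenser within the preceding 60 seconds. The window, the event names, and the rule that one dispenser use covers only one entry are illustrative assumptions, not a clinical protocol.

```python
from typing import Dict, Iterable, Optional, Tuple

Event = Tuple[float, str, str]  # (timestamp_s, person_id, kind), hypothetical schema


def hygiene_compliance(events: Iterable[Event], window_s: float = 60.0) -> Optional[float]:
    """Fraction of room entries preceded by the same person's dispenser use
    within window_s seconds; None if no entries were observed."""
    last_dispense: Dict[str, float] = {}
    entries = compliant = 0
    for t, person, kind in sorted(events):  # process events in time order
        if kind == "dispenser":
            last_dispense[person] = t
        elif kind == "room_entry":
            entries += 1
            t_d = last_dispense.pop(person, None)  # one dispenser use covers one entry
            if t_d is not None and t - t_d <= window_s:
                compliant += 1
    return compliant / entries if entries else None


events = [
    (0.0, "n1", "dispenser"),
    (10.0, "n1", "room_entry"),   # compliant: dispenser used 10 s earlier
    (100.0, "n2", "room_entry"),  # non-compliant: no dispenser use
    (200.0, "n1", "room_entry"),  # non-compliant: earlier use already consumed
]
print(hygiene_compliance(events))
```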